What Is Lidar, and How Does It Work?

The same technology used to find ancient Maya cities also powers your robot vacuum and self-driving cars. Here's where you can find it and how it works.

Did you know that the technology that helps the Neato Botvac Connected navigate around furniture and stray shoes also helps self-driving cars from Alphabet's Waymo steer through busy city streets? It's called lidar, and it's used in everything from guiding autonomous vehicles to helping archaeologists find ancient cities.

Lidar has been around for more than half a century, so it's nothing particularly new to the world of technology. However, it's been adapted from its original use to work in a number of different industries.

What is lidar, and how does it work?

Lidar, an almost-acronym for "light detection and ranging," has been in use since the 1960s, when planes employed it to survey landmasses.

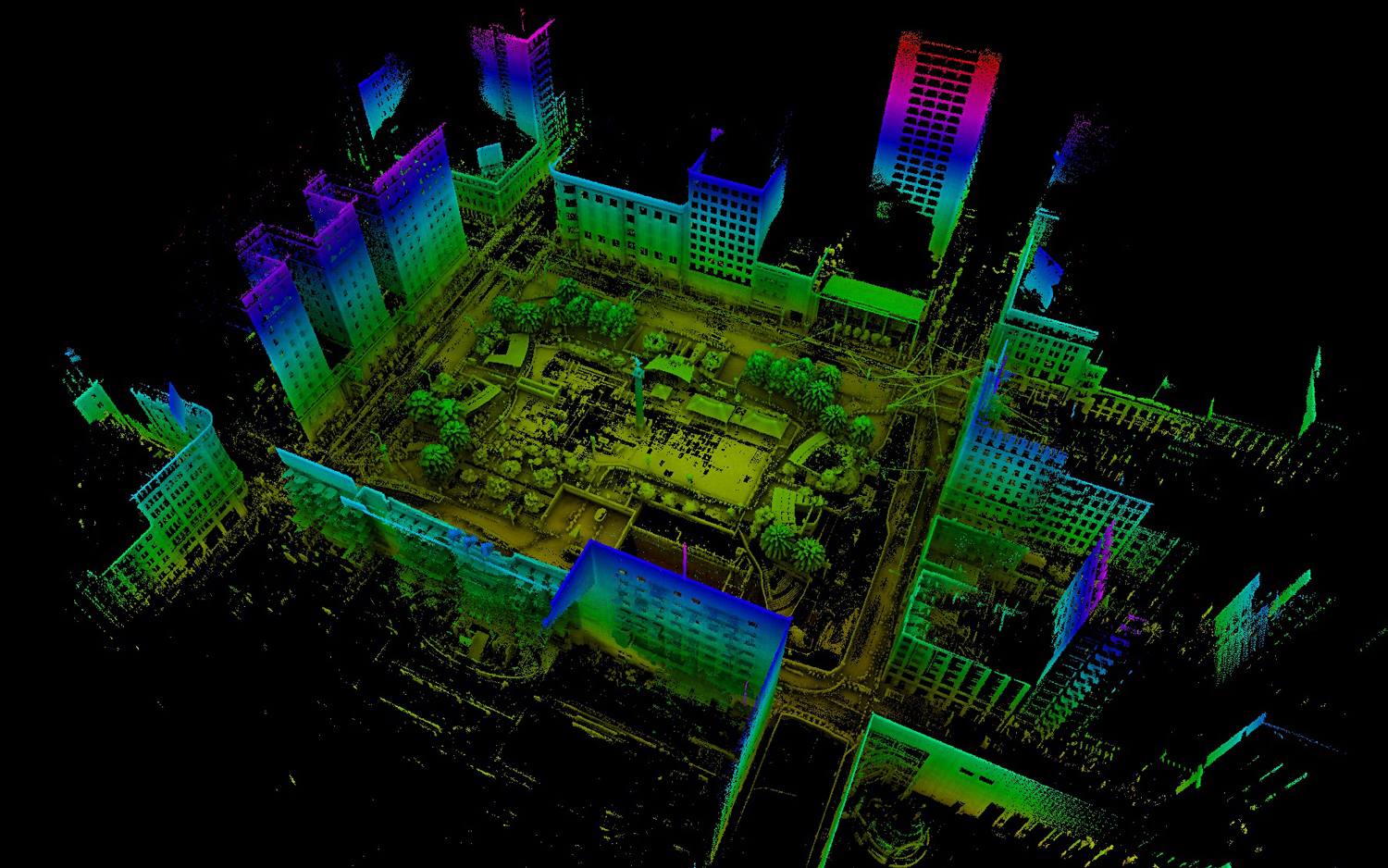

The technology uses light to determine how far away something is, but it's easier to understand if you think of it as a kind of echolocation, like the way dolphins and bats discern their surroundings. A lidar unit fires pulses of laser light and measures how long each pulse takes to hit an object or surface and reflect back to the sensor. Because the speed of light is known, the scanner can use that round-trip time to calculate the distance to whatever the light just hit. Those data points, of which there can be millions, are then processed into a 3D visualization, such as a map or a point cloud. And because a laser beam spreads out far less than radio or sound waves do, lidar can capture much finer detail than radar or sonar.
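
The distance calculation boils down to one formula: distance equals the speed of light multiplied by the round-trip time, divided by two, because each pulse travels out to the target and back. Below is a minimal Python sketch of that arithmetic. The function name `distance_from_round_trip` is hypothetical, not any lidar maker's API, and the sketch assumes the speed of light in a vacuum (light in air is only about 0.03% slower, which is ignored here).

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
# Assumption: the small slowdown of light in air is ignored.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time.

    Hypothetical helper: the pulse travels to the target and back, so the
    distance to the target is half of (speed of light x elapsed time).
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Illustrative value: a pulse that returns after 100 nanoseconds
    # hit something roughly 15 meters away.
    d = distance_from_round_trip(100e-9)
    print(f"{d:.2f} m")
```

A real sensor repeats this measurement for every pulse it fires, which is how it collects millions of points. The formula also shows why lidar needs very precise timing: each nanosecond of error shifts the computed distance by about 15 centimeters.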

Lidar is quick and efficient; it can grab a bunch of data in very little time, which is why self-driving cars (and robot vacuums) use it to find their way around. The technology has been helpful in a remarkably wide variety of industries; archaeologists, geologists, farmers, law enforcement and the military have all relied on lidar in one way or another.

This technology is not without its caveats. Lidar sensors are expensive to manufacture at scale, and the technology doesn't work too well in dense fog or inclement weather. These two limitations are part of the reason why the self-driving car industry is having trouble actually getting any product onto the road.

Lidar in self-driving cars

If you've witnessed a self-driving car in action or peeped any of the pictures of Waymo's vehicles, you've probably noticed the bulky sensor perched on top of them. That's part of the lidar system, and what's inside helps autonomous cars navigate city streets. Not all self-driving vehicles use lidar, however; Tesla CEO Elon Musk has called lidar a "crutch" for autonomous driving systems and prefers to rely on cameras.

Self-driving cars from the likes of Toyota and Audi don't rely on lidar alone to "see." Many autonomous vehicles also use radar and cameras, and where those two fall short, lidar can fill in the missing pieces with clean, computer-ready data.

The use of lidar systems in self-driving cars has been trending upward since 2005, after a demonstration at a race called the DARPA Grand Challenge. The race pitted 15 autonomous vehicles against one another in the Mojave Desert, and it was there that Velodyne CEO Dave Hall showed off his vision of how lidar could be used to pilot a car. That encouraged other participants to try implementing the technology in their own vehicles, and a few years later, the same contestants returned for a rematch, this time with Velodyne lidar systems leading the way.

Velodyne is an important name that you'll hear more of as self-driving cars become increasingly commercialized in the coming years. The company's evolved a bit since the big race, and is now known for its smaller, more affordable lidar systems. But at $4,000 apiece, they're still too expensive to toss on top of the other costs associated with manufacturing a car. And while startups have promised sensors for as little as $250 apiece, there's still a long journey ahead before lidar is small, inexpensive and competent enough to steer everyone's cars.

How lidar works in robot vacuums

The lidar system in a robot vacuum doesn't look like the kind that pilots autonomous vehicles, but it works on the same principle as the sensors on Alphabet's self-driving cars.

In the case of the Neato Botvac Connected, a spinning lidar sensor on top of the vacuum maps out the house while the device is cleaning. As it spins, the sensor sweeps its laser in every direction, and the light that reflects off walls and furniture back into the sensor provides the data the robot uses to map your house. Most Neato Botvacs offer a readout in the companion app after cleaning, so you can see what the lidar system saw.
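
To turn those reflections into a map, the robot needs to know both how far away each reflection was and which direction the sensor was pointing at the time. The Python sketch below is a simplified, hypothetical illustration (not Neato's actual firmware) that converts one rotation of evenly spaced distance readings into x/y points around the robot. It assumes a reading of 0 means no echo came back, and it treats the robot as standing still; a real vacuum would also combine many scans as it moves, using techniques such as SLAM (simultaneous localization and mapping).

```python
import math
from typing import List, Tuple


def scan_to_points(ranges_m: List[float],
                   start_angle_rad: float = 0.0) -> List[Tuple[float, float]]:
    """Convert one rotation of range readings into (x, y) points in meters.

    Hypothetical sketch: assumes the readings are evenly spaced over a full
    360-degree sweep and that a reading of 0 or less means no echo returned.
    The robot is treated as sitting still at the origin (0, 0).
    """
    if not ranges_m:
        return []
    step = 2 * math.pi / len(ranges_m)  # angle between consecutive readings
    points = []
    for i, r in enumerate(ranges_m):
        if r <= 0:
            continue  # no reflection detected at this angle; skip it
        theta = start_angle_rad + i * step  # direction the laser was pointing
        # Polar (distance, angle) -> Cartesian (x, y) relative to the robot.
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Four made-up readings taken at 0, 90, 180 and 270 degrees.
    scan = [1.0, 2.0, 0.0, 0.5]
    for x, y in scan_to_points(scan):
        print(f"x={x:+.2f} m, y={y:+.2f} m")
```

With hundreds of readings per rotation instead of four, the resulting points trace the outline of walls and furniture, which is the raw material for the map shown in the app.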

Even with lidar at the helm, robot vacuums aren't perfect navigators. They still need help while they're cleaning to make sure they don't get tangled in cords or stuck under low furniture. Lidar's imperfections are one reason robot vacuums aren't yet fully autonomous, which is a nice parallel to why self-driving cars haven't been widely adopted. There's still much work to do before a lidar-guided system can operate entirely on its own.

What's the future for lidar?

Lidar itself is a continually evolving technology. But in the case of self-driving cars, there's still much to do before it's accurate enough to drive the vehicles that human beings ride in, not to mention that it's prohibitively expensive for the average person. With other technologies becoming cheaper and better at surveying the world around them, manufacturers may have less reason to consider a lidar system in the first place.

There are startups hoping to help move the industry forward with new ideas for lidar-hybrid systems for self-driving cars. Companies like AEye have built hybrid sensors that combine a solid-state lidar, a low-light camera and chips that run artificial-intelligence algorithms to reprogram how the hardware is used in real time. However, there's doubt about whether machine learning can reliably help robot cars see.

Quanergy, another Silicon Valley startup, is building its own "cheap" version of a solid-state lidar system, but there's skepticism about whether the system would be worth using at that price. And then there's Velodyne, which is working on shrinking its sensors and adapting them to solid-state setups, though those smaller sensors lack the range and resolution required for high-speed driving.

Tesla's decision not to use lidar could affect the technology's status as a popular choice for autonomous systems down the road, especially if that company shows that it can achieve what other self-driving car companies have not. As the cost of cameras goes down and pixel resolution improves, there may be less reason to rely on a lidar system behind the wheel instead of cameras.

Florence Ion has worked for Ars Technica, PC World, and Android Central, before freelancing for several tech publications, including Tom's Guide. She's currently a staff writer at Gizmodo, and you can watch her as the host of All About Android on the This Week in Tech network.