Google Duplex FAQ: Amazing (But Creepy) AI Explained

Google Duplex promises to take tasks like booking appointments and put them in the hands of human-sounding robots. Here’s a rundown of what we know so far.

There’s no argument as to what the star attraction was at this year’s Google I/O developers conference.

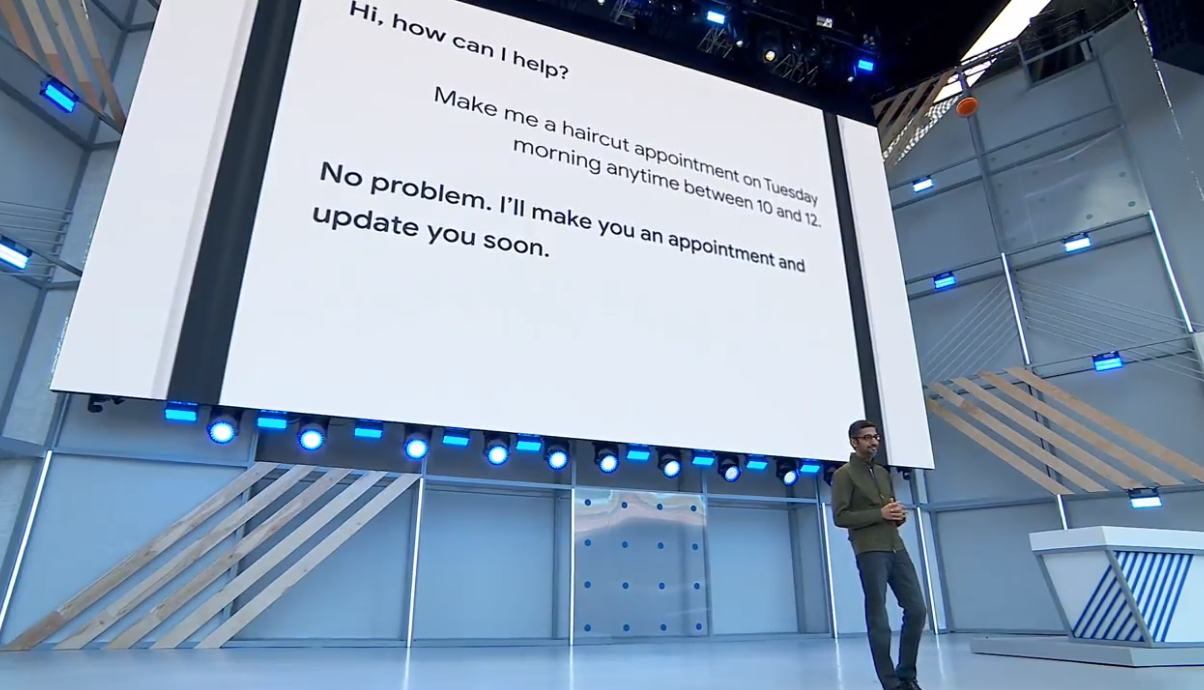

Google Duplex, a Google Assistant feature that uses a human-sounding voice to book appointments and reservations over the phone, wowed attendees during the opening keynote. It also left others in the tech world wondering about the implications of a robot assistant that sounds so lifelike.

A lot of details about Duplex remain unclear. For now, Google is limiting its public statements about Duplex to its keynote presentation and a deep dive into the technology on its blog while it fine-tunes the feature for launch. Here’s a rundown of what we know so far about Google Duplex and what it means for your future interactions.

What Is Google Duplex?

Google defines Duplex as “a new technology for conducting natural conversations to carry out ‘real world’ tasks over the phone.” It’s AI that can make calls for you.

But rather than simply leaving a message, Duplex can dynamically converse with employees on the other end of the line, responding to unexpected questions in real time with the cadence and mannerisms of an actual person.

What will I use Google Duplex for?

Google Duplex won’t say whatever you tell it to; it only works for specific kinds of over-the-phone requests. The two examples Google presented at I/O involved setting up a hair salon appointment and making a restaurant reservation. Another example is asking about business hours. According to an extensive post about the technology on Google’s AI Blog, Duplex has been purposefully constrained to “closed domains.” In other words, it works only in the scenarios it has been trained for and cannot hold a free-form conversation on general topics.
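Google hasn’t published Duplex’s code, but the idea of a “closed domain” is easy to illustrate. The Python sketch below is a toy, not Google’s implementation: a hypothetical `route_request` function accepts only three task types (modeled on the examples above) and declines everything else. The keyword matching stands in for what would really be a trained model, and every name in it is an assumption.

```python
import re
from enum import Enum
from typing import Optional


class Task(Enum):
    """Hypothetical task types, modeled on the examples Google showed at I/O."""
    RESTAURANT_RESERVATION = "restaurant_reservation"
    SALON_APPOINTMENT = "salon_appointment"
    BUSINESS_HOURS = "business_hours"


# Toy keyword rules standing in for a trained intent model (an assumption).
KEYWORDS = {
    Task.RESTAURANT_RESERVATION: {"table", "reservation", "dinner"},
    Task.SALON_APPOINTMENT: {"haircut", "salon", "trim"},
    Task.BUSINESS_HOURS: {"hours", "open", "close"},
}


def route_request(request: str) -> Optional[Task]:
    """Map a request to a supported task, or return None if it is out of domain."""
    words = set(re.findall(r"[a-z]+", request.lower()))
    for task, keywords in KEYWORDS.items():
        if words & keywords:  # at least one keyword for this task is present
            return task
    return None  # outside the closed domain, so the assistant declines


if __name__ == "__main__":
    for req in ["Book a table for four at 7", "Negotiate my cable bill"]:
        task = route_request(req)
        print(f"{req!r} -> {task.name if task else 'not supported'}")
```

A real system would use a learned model rather than keywords, but the design choice is the same: anything outside the trained scenarios gets refused instead of improvised.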

How does Google Duplex work?

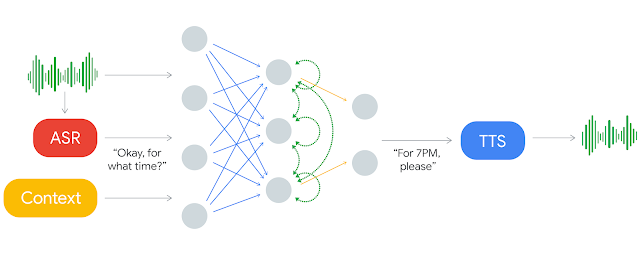

Like many of Google’s AI products, Duplex relies on a machine learning model, in this case one trained on anonymized real-world phone conversations. It combines the company’s latest advances in speech recognition and text-to-speech synthesis with contextual details, including the purpose and history of the conversation.

To make Duplex sound natural, Google has even tried to replicate the imperfections of human speech. Duplex pauses briefly before certain responses and says “uh” and “hmm” as if it were recalling information. The result is extraordinarily lifelike, and when no pause is called for, Duplex replies with astonishingly short latency.
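As a rough illustration of how deliberate pauses and filler words might be layered onto a reply, here is a toy Python sketch. It is not how Google does it; the `humanize` function, the `needs_lookup` flag and both pause lengths are hypothetical values chosen only for the example.

```python
from dataclasses import dataclass

# Hypothetical pause lengths; Google has not published its real values.
QUICK_REPLY_PAUSE_MS = 150
THINKING_PAUSE_MS = 600


@dataclass
class Utterance:
    text: str       # what the synthesized voice will say
    pause_ms: int   # silence to insert before speaking


def humanize(reply: str, needs_lookup: bool) -> Utterance:
    """Add a filler word and a longer pause where a person would stop to think."""
    if needs_lookup:
        # Someone checking a calendar might say "hmm" before answering.
        return Utterance(text=f"Hmm... {reply}", pause_ms=THINKING_PAUSE_MS)
    # Simple acknowledgments come back almost immediately.
    return Utterance(text=reply, pause_ms=QUICK_REPLY_PAUSE_MS)


if __name__ == "__main__":
    print(humanize("Hi, I'm calling to book a table.", needs_lookup=False))
    print(humanize("Do you have anything around noon?", needs_lookup=True))
```

The point of the split is that pauses only sound natural when they are selective: a filler word before every reply would sound as robotic as none at all.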

It’s so convincing, at least in demos, that you would probably never guess you weren’t talking to a person. Duplex doesn’t just sound like us; it displays humanlike attentiveness, too.

Of the two calls Google played on stage during this week’s I/O keynote, the second was significantly more complex, with a non-native English speaker on the other end of the line and a conversation that quickly went off the rails. The employee thought a request for a reservation at 7:00 was actually one for seven people, but Duplex rolled with the unexpected redirect: it answered the employee’s question and still completed the task.

When will Google Duplex be ready to use?

Google says it plans to test Duplex over the summer, focusing on making restaurant reservations, booking hair salon appointments and asking about holiday hours. It’s unclear whether Duplex will be limited to those tasks at launch, but since those are the tasks Google plans to test, it’s a safe bet they will be the feature’s focus.

In addition to fine-tuning the service, Google says it's looking for feedback about Duplex as part of its testing. It certainly got an earful about whether the service would identify itself to the people it calls, as we'll get to in a minute.

What are the limitations of Google Duplex?

As natural as Duplex sounds, at least in these initial demos, there seem to be limits to what the AI can do at this point. Google says Duplex “is capable of carrying out sophisticated conversations and it completes the majority of its tasks fully autonomously,” but the key word there is “majority.” Based on what we’ve seen, Duplex works best when it’s performing a very specific task (e.g., scheduling an appointment or reservation at a specific time) and is less suited to open-ended tasks, such as follow-up questions about specific services. That’s subject to change as the service matures, of course.

Google says Duplex is self-monitoring, so it can detect when there’s a task it can’t complete on its own, such as booking an appointment that involves too many complex options. In that instance, Google says, Duplex “signals to a human operator” to handle the task. Google also trains the system under real-time human supervision, so it can learn to handle similar situations on its own over time. In other words, don’t expect to hand everything over to robots just yet.
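Google hasn’t said how Duplex decides when to hand a call off. One simple way to picture it is a threshold check, sketched below in Python. The `CallState` fields, the `next_action` function and both thresholds are hypothetical; a real system would likely rely on signals from its trained model rather than fixed cutoffs.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical thresholds chosen only for illustration.
MIN_CONFIDENCE = 0.8
MAX_OPTIONS = 3


@dataclass
class CallState:
    """Hypothetical snapshot of an in-progress call."""
    confidence: float  # the model's confidence in its next reply, from 0.0 to 1.0
    options_offered: List[str] = field(default_factory=list)


def next_action(state: CallState) -> str:
    """Return "continue" if the assistant can proceed, or "handoff" for a human."""
    if state.confidence < MIN_CONFIDENCE:
        return "handoff"  # unsure what to say next
    if len(state.options_offered) > MAX_OPTIONS:
        return "handoff"  # too many complex options to choose from safely
    return "continue"


if __name__ == "__main__":
    print(next_action(CallState(confidence=0.95, options_offered=["2 p.m.", "3 p.m."])))
    print(next_action(CallState(confidence=0.9, options_offered=["cut", "color", "perm", "blowout"])))
```

Erring toward a handoff is the cautious design choice here: a human stepping in costs a little time, while a bot confidently booking the wrong appointment costs the user's trust.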

Could Google Duplex eventually handle other tasks?

Ultimately, the natural-sounding AI technology behind Google Duplex could be used widely for tasks that humans can't do or don't want to do.

A natural-voice AI assistant could be a huge boon to elderly or disabled people living alone. For them, the AI could be both someone to talk to and someone to help get things done, even if it's just a disembodied voice.

Or a natural-voice AI could become an online doctor that performs diagnoses. Ask it for medical advice about a problem, and it could either suggest quick treatments if it's nothing serious or tell you to see a real doctor if it is.

Those robots sound very realistic. Should I be concerned?

Humans adapt to and accept humanlike interactions with machines pretty easily. We're used to natural-sounding robocalls, and we find them annoying rather than alarming. But Duplex does represent a new level of sophistication, which raises all sorts of questions.

Granted, most robocalls are pre-recorded only for the opening of the call. Once you agree to hear more, they hand you off to a human operator who tries to close the sale. Technology like Duplex could carry the whole conversation without that human, however, which means it could be abused by tech-support scammers or stock pumpers calling from a boiler room.

It was initially unclear whether Google Duplex would identify itself as a robot to the people answering its calls; it didn't in the demo calls Google showed off during its I/O keynote. That’s a fairly obvious obligation, according to AI ethicists interviewed by The Verge. After Google product managers told CNET earlier this week that they would look into some way for Duplex to identify itself, the company issued a more definitive statement as Google I/O wrapped up on May 10: Duplex would be designed "with disclosure built-in."

This article was originally published May 9. We've updated it with new information about Google's plans for Duplex.

Credit: Google

Tom's Guide upgrades your life by helping you decide what products to buy, finding the best deals and showing you how to get the most out of them and solving problems as they arise. Tom's Guide is here to help you accomplish your goals, find great products without the hassle, get the best deals, discover things others don’t want you to know and save time when problems arise. Visit the About Tom's Guide page for more information and to find out how we test products.