Fingerprint Scanners: What They Are and How They Work

Fingerprint scanners continue to grow in popularity as a security measure that cannot be stolen, lost or forgotten.

For many years, computerized fingerprint scanners have been common plot devices in spy thrillers and heist flicks. The technology has long existed in the business world, but until recent years it was out of reach of, and of little use to, regular consumers. Now fingerprint scanners can be found everywhere, from high-security buildings to everyday electronics such as USB-enabled personal fingerprint scanners.

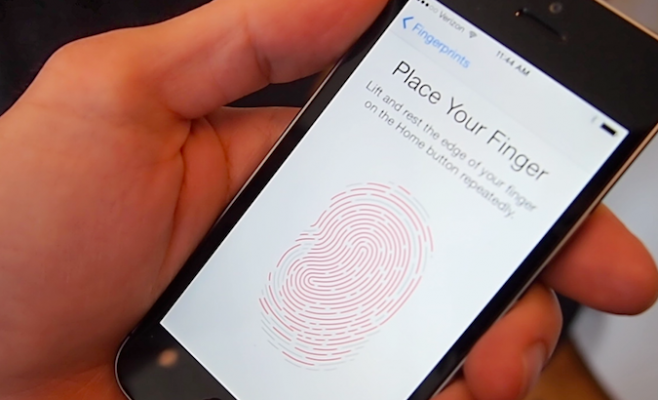

With the release of devices like the iPhone 5s, fingerprint scanning is growing in popularity and will likely become more common as a security measure in personal electronic devices.

The typical safeguard in many security systems is a PIN code or password. However, these can be guessed quite easily if they are too simple, and once a password is known it can be used by anyone. Fingerprint scanners instead rely on the presence of the individual cleared for access. Fingerprints are unique to the person they belong to and cannot be duplicated without extensive preparation.

Your fingers have tiny ridges of skin on their tips that form through a combination of genetics and environmental factors. Your DNA dictates how your skin forms at birth, and from then on your body’s growth is influenced by the conditions you live in, from your environment to your experiences. In the end, fingerprints become a unique marker for each person. While some prints may appear the same, the software in fingerprint scanners can pick out the differences between them.

A fingerprint scanner performs a very basic function: it takes an image of your fingertip and compares it against the data of a previously scanned fingerprint. If the two match, access is granted. The scanner compares the patterns of “ridges” and “valleys,” as the raised lines on your fingertip and the grooves between them are called, and determines whether they match.

Most fingerprint scanners are optical scanners built around a charge-coupled device (CCD), a light sensor commonly used in digital cameras and camcorders. When you place your finger on the glass plate, the scanner illuminates the ridges of your fingertip and the CCD takes a picture of the print, producing an inverted image that draws attention to the valleys. If the image is of acceptable quality and accuracy, a decision the scanner makes based on the image definition and pixel darkness, it begins comparing the captured fingerprint with the images stored on file.
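The inversion and quality check described above can be sketched in a few lines of Python. This is only an illustration: the function names, the thresholds and the use of average brightness and standard deviation as stand-ins for "pixel darkness" and "image definition" are all assumptions, not the logic of any particular scanner.

```python
from statistics import mean, pstdev

def invert(image, max_value=255):
    """Return the negative of a grayscale image given as a list of rows."""
    return [[max_value - px for px in row] for row in image]

def is_acceptable(image, min_contrast=40.0, brightness_range=(60.0, 200.0)):
    """Hypothetical quality gate: reject images that are too dark, too
    bright, or too flat to show distinct ridges and valleys."""
    pixels = [px for row in image for px in row]
    average = mean(pixels)      # overall darkness of the capture
    contrast = pstdev(pixels)   # crude proxy for image definition
    low, high = brightness_range
    return low <= average <= high and contrast >= min_contrast

# Toy captures: one with clear dark/light alternation, one uniformly gray.
sharp_capture = [[30, 220, 30, 220], [220, 30, 220, 30]]
flat_capture = [[128, 128, 128, 128], [128, 128, 128, 128]]

if __name__ == "__main__":
    print(is_acceptable(sharp_capture))
    print(is_acceptable(flat_capture))
    print(invert(sharp_capture)[0])
```

Only a capture that passes a gate like this would move on to the comparison step.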

Another common form of fingerprint capture is the capacitance scanner, which senses the print using electrical current instead of light. The sensor is a grid of tiny capacitor plates. The ridges of your fingertip rest against the sensor surface, while the valleys leave small air gaps above it, so the capacitance measured at each plate depends on whether a ridge or a valley sits over it. When you touch the sensor, it measures these minute differences.

Using these measurements, capacitance scanners create a picture of the fingerprint to compare against the original fingerprint. While this type of sensor is still uncommon, Apple’s use of it in the iPhone 5s may set a precedent for new uses, because the sensor is compact and reads in remarkable detail, at a resolution of more than 500 pixels per inch (ppi).
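As a rough illustration of how a grid of electrical readings becomes a picture, the Python sketch below classifies each sensor cell as ridge or valley. It assumes that cells under a ridge read higher than cells under a valley and that a midpoint threshold is good enough; the function names and sample readings are hypothetical. A small helper also works out what 500 ppi means in physical terms.

```python
def ridge_map(readings, threshold=None):
    """Turn a grid of per-cell readings into a binary image:
    1 for ridge, 0 for valley. Assumes ridges read higher than valleys."""
    cells = [value for row in readings for value in row]
    if threshold is None:
        # Assumed default: halfway between the weakest and strongest cell.
        threshold = (min(cells) + max(cells)) / 2
    return [[1 if value > threshold else 0 for value in row] for row in readings]

def pixel_pitch_mm(ppi):
    """Spacing between sensor cells in millimetres (1 inch = 25.4 mm)."""
    return 25.4 / ppi

# Made-up readings in arbitrary units for a 2 x 3 patch of the sensor.
sample_readings = [[0.90, 0.20, 0.80],
                   [0.85, 0.15, 0.90]]

if __name__ == "__main__":
    print(ridge_map(sample_readings))
    print(round(pixel_pitch_mm(500), 4))
```

At 500 ppi the cells sit roughly 0.05 mm apart, which is fine enough to place several cells across a single ridge.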

Once your fingerprint is scanned, the sensor compares this new picture against the pre-stored print to determine if they match. Comparing a full fingerprint against the newly scanned image is impractical because smudging could make an identical print look different from the scanned image. That method would also take a lot of processing power.

Instead, fingerprint scanners compare specific features of the fingerprint, referred to as “minutiae.” Scanners and professional investigators concentrate on points where ridge lines end, known as ridge endings, or split into multiple ridges, known as bifurcations. A single finger can have numerous minutiae, and rather than trying to identify all of them, a scanner only needs to find a preprogrammed number of matching minutiae to determine that the two prints are identical.
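The "preprogrammed number" idea can be sketched as a simple count-and-threshold check. The Python below is a deliberately simplified illustration: it assumes both prints are already aligned, represents each minutia as a hypothetical `(x, y, kind)` tuple, and uses made-up values for the distance tolerance and the required count. Real matchers also have to cope with rotation, shifting and skin distortion.

```python
from math import hypot

def count_matches(stored, scanned, tolerance=3.0):
    """Count stored minutiae that have a same-kind partner in the new
    scan within `tolerance` pixels. Each scanned point is used once."""
    unused = list(scanned)
    matches = 0
    for sx, sy, skind in stored:
        for candidate in unused:
            cx, cy, ckind = candidate
            if skind == ckind and hypot(sx - cx, sy - cy) <= tolerance:
                unused.remove(candidate)  # safe: we stop iterating right away
                matches += 1
                break
    return matches

def prints_match(stored, scanned, required=3, tolerance=3.0):
    """Declare a match once the required number of minutiae line up."""
    return count_matches(stored, scanned, tolerance) >= required

# Hypothetical enrolled template and two fresh scans.
enrolled = [(10, 12, "ending"), (25, 40, "bifurcation"),
            (33, 8, "ending"), (50, 50, "bifurcation")]
same_finger = [(11, 13, "ending"), (24, 41, "bifurcation"),
               (34, 8, "ending"), (80, 80, "ending")]
other_finger = [(70, 70, "ending")]

if __name__ == "__main__":
    print(prints_match(enrolled, same_finger))
    print(prints_match(enrolled, other_finger))
```

Note that the scan of the same finger still matches even though one enrolled minutia is missing, which is how this approach tolerates smudges.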

When you first enroll your fingerprint, the scanner identifies a series of minutiae and stores that data rather than a picture of the entire fingerprint. This improves security and makes replicating a fingerprint from these details alone virtually impossible.
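One widely used textbook way to find minutiae in a thinned, one-pixel-wide ridge image is the crossing-number method, sketched below in Python. Whether any given commercial scanner works this way is not something the article states, so treat this as an assumed technique; the function name and the tiny Y-shaped test image are hypothetical. The stored result is a short list of points, not the image itself.

```python
# The eight neighbours of a pixel, listed clockwise from the top-left.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]

def extract_minutiae(skeleton):
    """Build a template of (row, col, kind) from a binary ridge skeleton
    (1 = ridge pixel). Border pixels are skipped for simplicity."""
    template = []
    for r in range(1, len(skeleton) - 1):
        for c in range(1, len(skeleton[0]) - 1):
            if skeleton[r][c] != 1:
                continue
            ring = [skeleton[r + dr][c + dc] for dr, dc in NEIGHBOURS]
            # Crossing number: half the count of 0/1 changes around the ring.
            crossings = sum(abs(ring[i] - ring[(i + 1) % 8]) for i in range(8)) // 2
            if crossings == 1:
                template.append((r, c, "ending"))
            elif crossings == 3:
                template.append((r, c, "bifurcation"))
    return template

# A ridge that runs left to right along row 3 and forks at column 3.
y_shaped_ridge = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]

if __name__ == "__main__":
    for minutia in extract_minutiae(y_shaped_ridge):
        print(minutia)
```

For this toy image the template holds one bifurcation at the fork and three ridge endings at the tips, four small tuples in place of 49 pixels.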

Computers can identify authorized users in many different ways. Fingerprint scanners are considered among the more secure methods of entry because they don’t rely on items that can be lost, like keycards, or forgotten, like text-based passwords. Physical attributes are quite difficult to fake compared with identity cards, and fingerprints cannot be guessed, misplaced or forgotten.

However, like almost any method of security, fingerprint scanners have weaknesses. Optical scanners sometimes struggle to tell the difference between a picture of a finger and the finger itself. Capacitive scanners can be fooled with a mold of a person’s fingertip. Even damaging or cutting your fingertip can result in denial of access.

Despite these significant downsides, fingerprint scanners are an excellent way of proving your identity. Many security professionals suggest using multiple tiers of security, for example pairing a fingerprint scanner with a more conventional security method like a passcode. But even when used by themselves, fingerprint scanners still provide a new and unique method of securing your electronics or data.
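Pairing a fingerprint with a passcode amounts to requiring both checks to pass. The Python sketch below shows one way that could look, using the standard library's `hashlib.pbkdf2_hmac` and `hmac.compare_digest`. The function names, the iteration count and the idea that the scanner simply hands over a yes/no result (`fingerprint_ok`) are assumptions made for illustration.

```python
import hashlib
import hmac
import os

def hash_passcode(passcode, salt, iterations=100_000):
    """Derive a hash from the passcode so the passcode itself is never stored."""
    return hashlib.pbkdf2_hmac("sha256", passcode.encode("utf-8"), salt, iterations)

def grant_access(fingerprint_ok, passcode, salt, stored_hash):
    """Two tiers: the fingerprint must match AND the passcode must be right."""
    candidate = hash_passcode(passcode, salt)
    # compare_digest avoids leaking information through comparison timing.
    passcode_ok = hmac.compare_digest(candidate, stored_hash)
    return bool(fingerprint_ok) and passcode_ok

# Hypothetical enrollment: a random salt and the hash of the chosen passcode.
demo_salt = os.urandom(16)
demo_hash = hash_passcode("4821", demo_salt)

if __name__ == "__main__":
    print(grant_access(True, "4821", demo_salt, demo_hash))
    print(grant_access(True, "0000", demo_salt, demo_hash))
    print(grant_access(False, "4821", demo_salt, demo_hash))
```

With this arrangement a lifted fingerprint alone, or a guessed passcode alone, is not enough to get in.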

jjarnts: Fingerprints alone are a horrible security measure; you can't change them and you leave them everywhere. That is why they are used in multi-factor authentication. Telling the world that just a fingerprint is good enough, especially with 3D printers becoming affordable, is a horrible idea.

Ella Kramer: I use a fingerprint scanner to get into my office every day. It kind of freaks me out that I can use the same thing on my phone.