This AI-Powered Brain Implant Can Turn Your Thoughts Into Speech

A new invention could help patients who have lost the ability to speak, paving the way to direct brain-to-computer interfaces for everyone.

For the first time, scientists have captured information directly from the brain's cortex and used artificial intelligence to reconstruct intelligible speech, opening a path toward direct brain-to-computer interfaces.

Until now, scientists had only been able to identify simple things by analyzing people's brain activity while they lay inside an fMRI scanner.

When these people were shown photos of famous people or different objects, a computer analyzing the fMRI data could recognize what they were looking at. But these scanners are giant machines, which makes the method impractical for everyday use.

Last year, neurosurgeon Dr. Ashesh Mehta at the Feinstein Institute for Medical Research on Long Island placed a flat array of electrodes on a patient's brain, directly over the regions dedicated to speech and hearing. He was also working on a system to restore speech for people with a variety of paralyzing conditions, from spinal cord injuries to ALS.

The team at Columbia University's Neural Acoustic Processing Lab got around the scanner problem the same way, using temporary implants that connect directly to a patient's cortex.

According to the researchers, their goal is to create a permanent implant so that people who currently rely on keyboards to communicate (think of the late Stephen Hawking) could synthesize speech on the fly, just by thinking. As researcher Nima Mesgarani told New Scientist, "speech is much faster than we type. We want to let people talk to their families again."

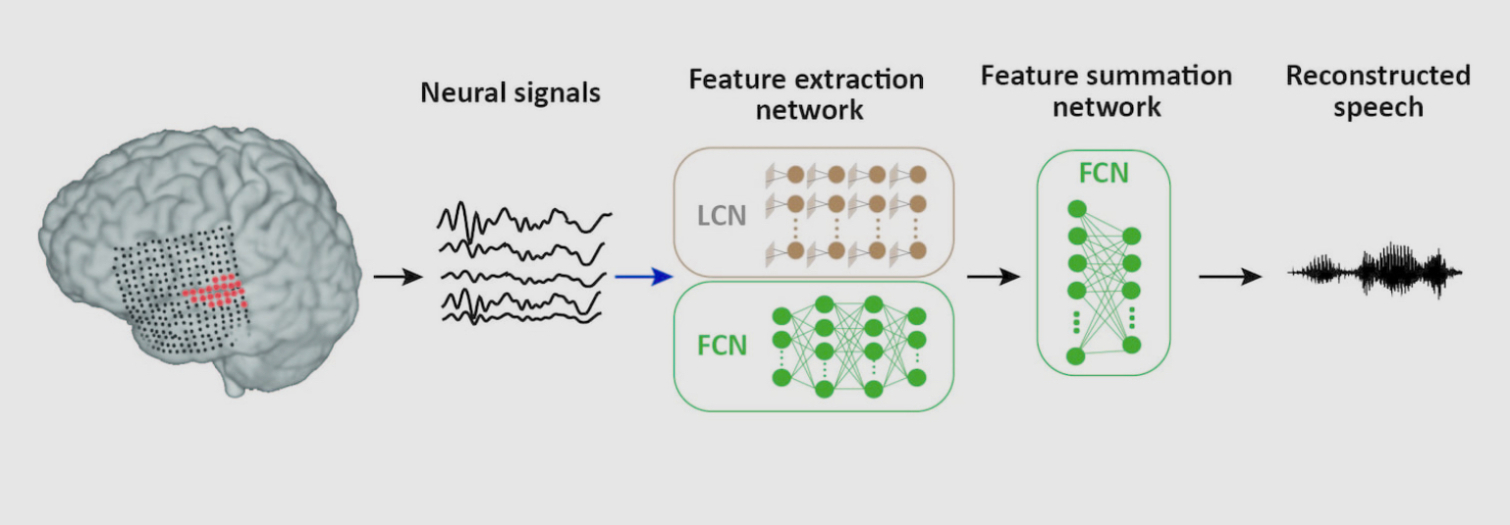

Their implant captures neural signals from the patient's auditory cortex, which are then fed into an artificial intelligence network.

After training the AI on the brain activity of five patients as they listened to 30 minutes of continuous speech, the researchers had it decode new words (the digits zero to nine) and synthesize them. Independent listeners correctly recognized three out of every four digits, or 75 percent.

It's not perfect, but it's an amazing feat for a first version. As implants and dedicated AI processors keep growing more powerful, it's not hard to imagine a near future in which people could regain full speech simply by thinking.

The applications don't stop there. Once this happens, people without any medical need could choose to get brain implants and use the same technology to interact with computers.

Beyond that, it's easy to envision brain-to-computer interfaces that need no implants at all, relying instead on external units sensitive enough to capture the same signals through the skull.

Black Mirror, here we come.

Jesus Diaz founded the new Sploid for Gawker Media after seven years working at Gizmodo, where he helmed the lost-in-a-bar iPhone 4 story and wrote old angry man rants, among other things. He's a creative director, screenwriter, and producer at The Magic Sauce, and currently writes for Fast Company and Tom's Guide.