This is the feature Android should steal from iPhone 16

Camera Control's link to Visual Intelligence looks super convenient

Earlier this month I spoke about how the iPhone 16 probably doesn't need a Capture button, and could instead have opted for the camera shortcut that's ubiquitous on Android phones. Now that the iPhone 16 has been announced and the Camera Control button officially revealed, I have a slightly different opinion on the whole thing.

Admittedly, we always knew that the Camera Control button would be capable of a lot more than just opening the Camera app and snapping photos. The rumor mill had made it very clear that we would see things like pressure sensitivity and the ability for the button to understand swiping gestures. But the one thing we didn’t hear about was how the Camera Control button links to Apple’s AI-powered Visual Intelligence mode.

Ever since that particular tidbit was announced at Apple’s Glowtime event, I’ve realized that it’s the one iPhone 16 feature that Google and Android should definitely copy. But maybe not as a standalone button.

Camera Control & Visual Intelligence are perfect together

Apple hasn't fully explained how the relationship between the Camera Control button and Visual Intelligence will work, or how the iPhone 16 will differentiate between opening the AI vision mode and controlling the camera. All we know is that the new button "will make it even easier to access Visual Intelligence" and that the AI vision mode will be "just a click away" with the Camera Control button.

From that, it sounds like the Camera Control button will act as a way to quickly launch the Visual Intelligence interface. Apple's presentation also mentions that clicking the button will tell Visual Intelligence that you want to analyze whatever is in view. Sadly, we didn't get a chance to try this out for ourselves in our iPhone 16 hands-on review, because Visual Intelligence isn't available yet, and won't be until later this year.

But that limited amount of information is enough to make me realize that this is a very basic feature that could prove invaluable, which is why Google should be taking notes.

Circle to Search is great, but it could be better

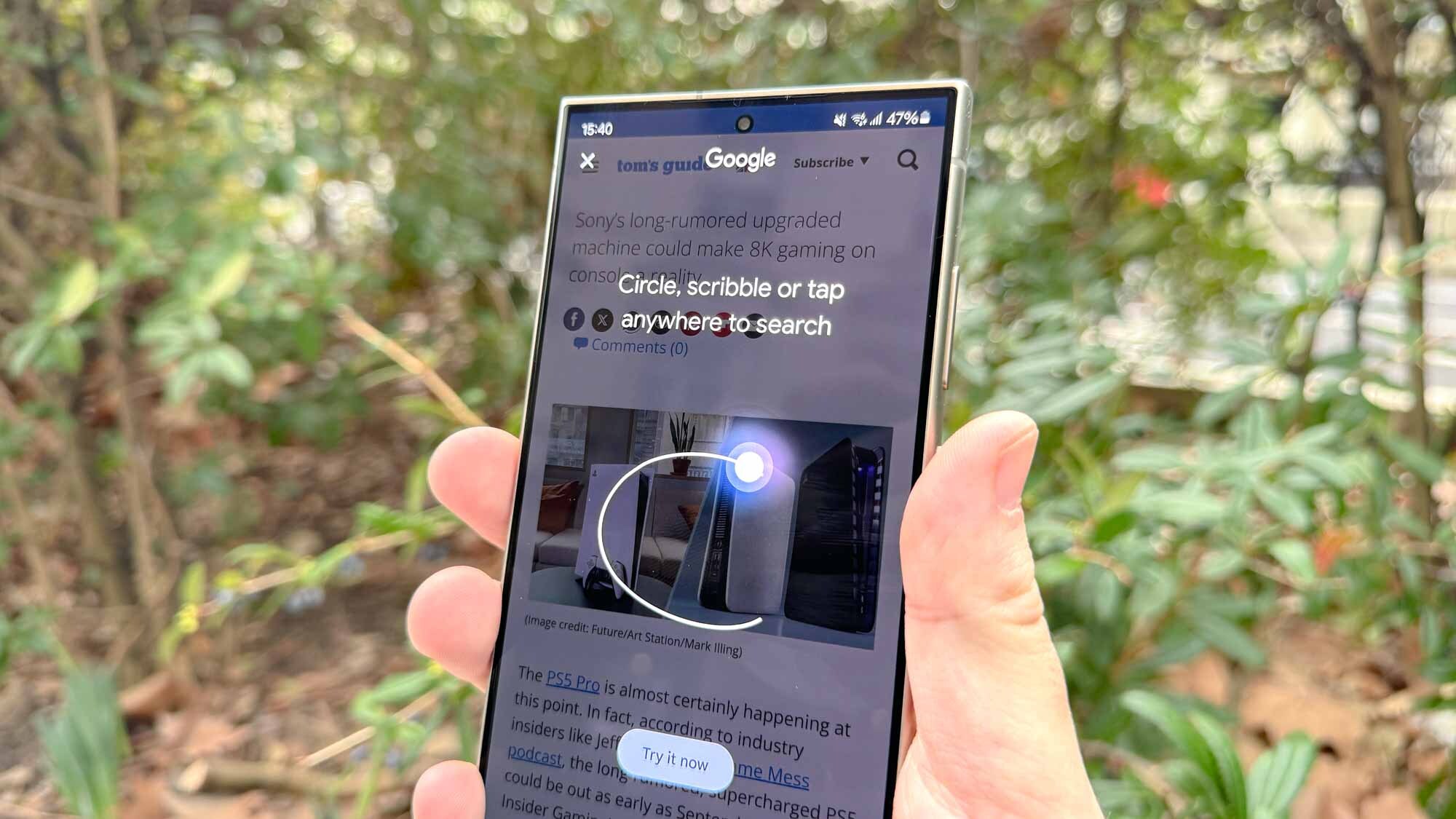

Google has put a lot of effort into making access to AI tools a little easier — and Circle to Search is a great example of that. This tool makes the whole process of using Google Lens significantly easier, because you don’t need to navigate to the standalone Lens app, or take a screenshot of content on screen.


Press and hold the navigation bar or home button, then either circle whatever it is you want Google's AI to check out or use one of the buttons on the menu bar at the bottom of the screen. From there you can check Google Search results, copy or translate text, identify music and a bunch of other things. If that sounds familiar, it's because Visual Intelligence does something very similar, while also taking one awkward step out of the equation.

I'm not saying Android should universally adopt the dedicated Camera Control button. Certain phone makers may be looking to add their own dedicated camera buttons in future, and some brands have already done this in the past. That's their prerogative. But in the same way Android has a quick-launch button gesture for the camera, it should also consider a similar system for opening Google Lens.

Older iPhones shouldn't be left out

While older iPhones can't be retrofitted when new hardware is released, the Camera Control button is something that could still be adapted for older phones, at least in part. Things like the swiping gestures, which rely on a very specific kind of button to function, might be restricted to the iPhone 16 and whatever else Apple announces in the future. But that doesn't mean some of the Camera Control button's features can't be adapted as a gesture of some sort.

Yes, just like the double-press power button camera gesture that's been available on Android for so many years. At the very least, it would make accessing the camera a little easier on older iPhones, even if there isn't a proper software solution for everything else the Camera Control button can do.

Older iPhones won't get Visual Intelligence. Most won't get Apple Intelligence at all, and the two that will (iPhone 15 Pro and iPhone 15 Pro Max) will still miss out on Visual Intelligence, as far as we know anyway. But that shouldn't matter that much.

The camera is one of the iPhone's crowning features, and Apple is offering a bunch of better ways to access it quickly thanks to iOS 18. But in the same way access to Circle to Search could be improved, so too could access to the camera on older iPhones, because those phones aren't going anywhere just because the iPhone 16 exists.

Bottom line

Apple has genuinely surprised me with the Camera Control button. Rather than just being a new button for the sake of adding a new button, it seems the company has thought long and hard about why this button should exist — and how useful it should actually be. And Apple has found a way to integrate that extra piece of hardware into a feature that the iPhone has needed for a while — Visual Intelligence.

Admittedly, we still don't know exactly when Visual Intelligence is going to be available. Apple Intelligence looks like it'll be stuck in beta for a little longer, so it may be some time before we see how Visual Intelligence actually performs. But at least we know users will be able to access it quickly, with a very simple press of a button. And that's something Google (and others) should be considering for their own platforms.

Of course, having not used an iPhone 16 in person, I can't give a definitive opinion on Camera Control and how it works, but color me impressed so far.


Tom is Tom's Guide's UK Phones Editor, tackling the latest smartphone news and vocally expressing his opinions about upcoming features or changes. It's a long way from his days as editor of Gizmodo UK, when pretty much everything was on the table. He's usually found trying to squeeze another giant Lego set onto the shelf, draining very large cups of coffee, or complaining about how terrible his Smart TV is.