Ahead of the iOS 18.4 beta release, Apple watchers were expecting big things for Siri, the personal assistant on the iPhone that's getting a reboot as part of the Apple Intelligence rollout. But even though those features weren't a part of the public beta of iOS 18.4 that hit phones late last month, it's still worth knowing what's coming — and when it could arrive.

As a reminder, every year Apple announces a slew of new features for its software platforms, but not everything is ready all at once. Such was the case with iOS 18, which arrived last September, but without the promised suite of AI tools billed as Apple Intelligence. Instead, those started arriving with iOS 18.1 in October, with subsequent updates bringing more AI-powered features.

This piece-by-piece approach was most keenly felt with Siri. In iOS 18.1, the personal assistant received a new visual indicator: the edge of your iPhone screen now glows to indicate that Siri is listening. Other improvements let you correct your commands mid-sentence, ask follow-up questions and even interrupt the digital assistant. And you could also type to Siri for those times when you preferred not to speak your requests out loud.

All of those are welcome additions, but they're just the tip of the iceberg of what Apple has promised for Siri. And iOS 18.4 was supposed to deliver some of the most crucial changes, right up until the minute it didn't.

In February, Bloomberg's Mark Gurman reported that the expected Siri changes weren't going to appear in iOS 18.4, or at least not in the initial release of the software beta. And that's exactly how the situation panned out.

Those Siri features may not currently be part of iOS 18.4, which introduces a number of changes like Priority Notifications, a new Sketch style for Image Playground and a new Genmoji icon for the keyboard. But rest assured, Apple has additional Apple Intelligence-inspired changes to Siri in the works. Here's what to expect and when those changes may now appear.

Apple Intelligence: What's still coming to Siri

Personal context

Among the yet-to-be-released Apple Intelligence features, Personal Context for Siri is probably the most significant, as well as perhaps the most eagerly anticipated. Apple has already advertised some of the capabilities this will unlock, but ultimately it is supposed to enable the company's voice assistant to understand more about your data.

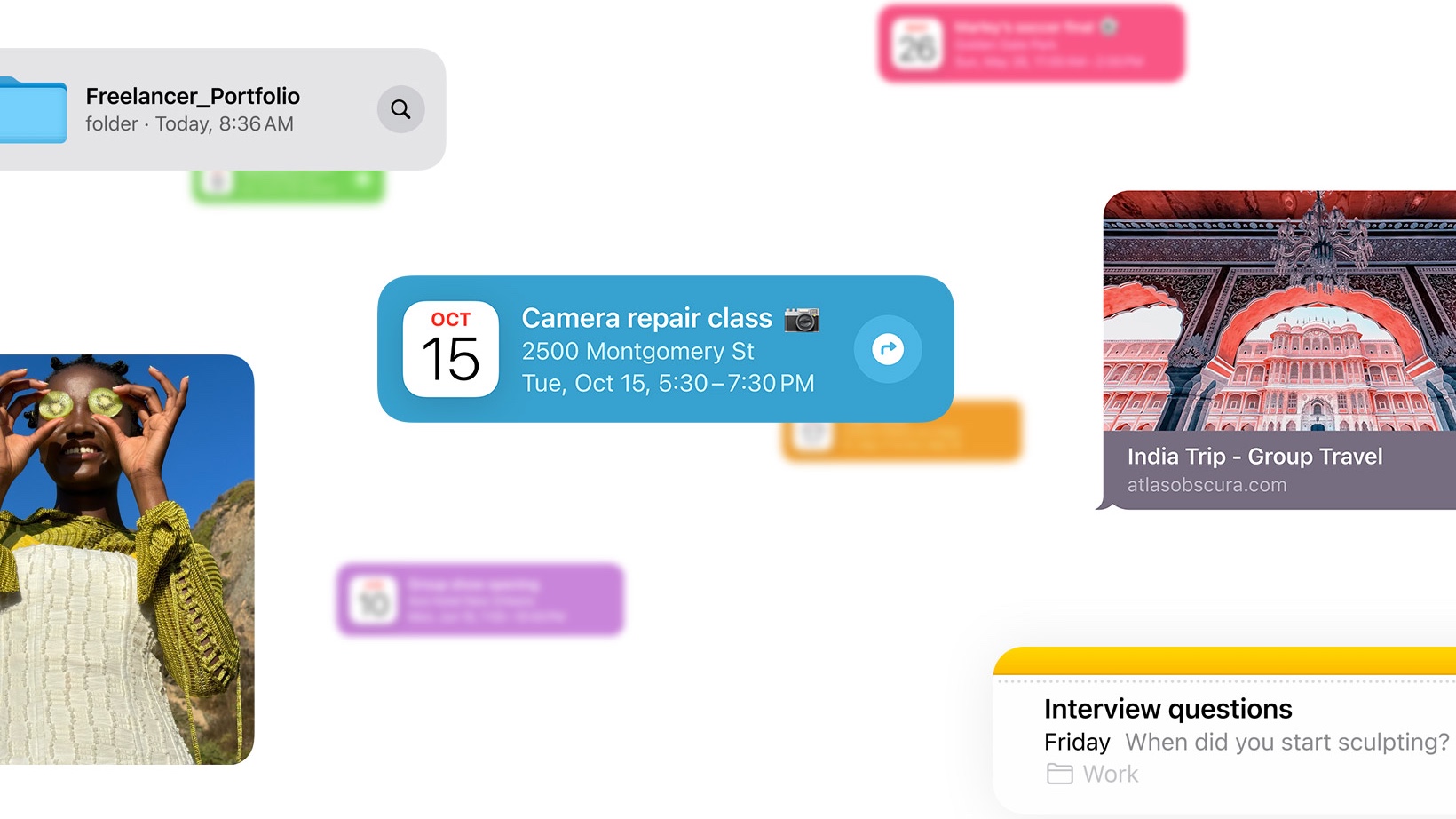

To do so, Apple Intelligence constructs a "semantic index" of your data, including things like photos, files, calendar appointments, notes, links people have sent you, and more. When you make a request, it can identify items in that index that are relevant to what you're asking for.

So, for example, you'd be able to ask it to find the message where your mom sent you her flight details, whether it was in a text or an email. Or you could ask it whether you'll have time to drive between two appointments, and it can look at your calendar, the relevant locations, and calculate the time to get between them. It can even retrieve information from pictures you've taken if, for example, you've taken a picture of your ID and need to enter details from it into a form on a website.
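For the curious, the general idea of an index that spans several apps can be illustrated with a toy sketch. This is not how Apple's semantic index works; the `Item` and `ToyIndex` names are hypothetical, the sample data is made up, and simple word overlap stands in for whatever on-device models Apple actually uses.

```python
from dataclasses import dataclass

PUNCTUATION = ".,:;!?\"'"


@dataclass
class Item:
    source: str  # hypothetical label for where the item lives, e.g. "Messages"
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase words, trimmed of punctuation and a trailing possessive."""
    words = (w.strip(PUNCTUATION).lower().removesuffix("'s") for w in text.split())
    return {w for w in words if w}


class ToyIndex:
    """A made-up stand-in for an index over data from many apps."""

    def __init__(self) -> None:
        self.items: list[Item] = []

    def add(self, item: Item) -> None:
        self.items.append(item)

    def search(self, query: str, top_k: int = 1) -> list[Item]:
        # Score each item by how many query words it shares, drop non-matches.
        query_words = tokenize(query)
        scored = [(len(query_words & tokenize(i.text)), i) for i in self.items]
        matches = [pair for pair in scored if pair[0] > 0]
        matches.sort(key=lambda pair: pair[0], reverse=True)
        return [item for _, item in matches[:top_k]]


index = ToyIndex()
index.add(Item("Messages", "Mom: my flight lands at 3:40 pm on Friday"))
index.add(Item("Mail", "Your dentist appointment is confirmed for Monday"))
index.add(Item("Notes", "Grocery list: eggs, milk, coffee"))

best = index.search("the message with mom's flight details")
print(best[0].source, "|", best[0].text)
```

The point of the sketch is only that one query can reach items regardless of which app they came from, which is what lets a request like the flight example work whether the details arrived by text or by email.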

In addition to all of that context, Siri will also be able to understand what's on your screen, so for example, if a friend sends you details about a party, you could tell Siri to add it to your calendar.

In-app actions

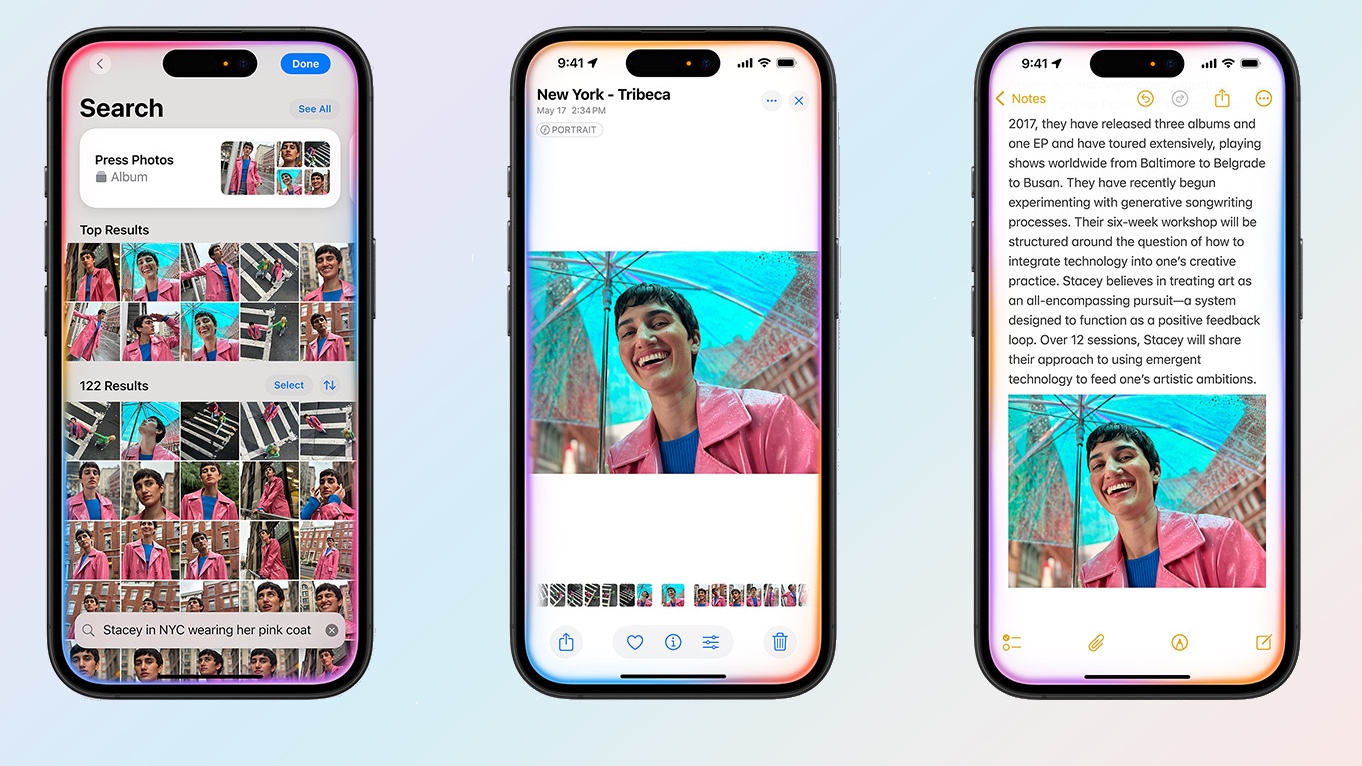

On top of Siri understanding your personal data better, iOS 18.4 was supposed to unlock another powerful piece of functionality: the ability for the personal assistant to take actions for you in and across apps.

This is powered by an existing framework called App Intents, which also integrates with platform features like Spotlight and Shortcuts; it allows third-party apps to tell the system about actions they can perform. For example, a camera app could advertise its ability to take a picture. Or a messaging app could offer the power to send a message. Or a mapping app could provide a way to kick off transit directions. Those actions can then be linked together: pull information from a note in the Notes app and have it sent via Messages, for example.
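To make the idea concrete, here is a minimal sketch of apps advertising actions to a shared registry and an assistant chaining two of them. App Intents itself is a Swift framework; this Python toy models only the concept, and every name in it (`ACTIONS`, `register`, `Notes.read`, `Messages.send`, the sample note) is hypothetical rather than part of any Apple API.

```python
from typing import Callable

# Hypothetical system-wide registry: action name -> function that performs it.
ACTIONS: dict[str, Callable[..., str]] = {}


def register(name: str):
    """Decorator an 'app' uses to tell the 'system' about an action it offers."""
    def wrap(fn: Callable[..., str]) -> Callable[..., str]:
        ACTIONS[name] = fn
        return fn
    return wrap


# Made-up app data for the example.
NOTES = {"Flight times": "Departs 9:05 am, arrives 12:30 pm"}
OUTBOX: list[tuple[str, str]] = []


@register("Notes.read")
def read_note(title: str) -> str:
    return NOTES[title]


@register("Messages.send")
def send_message(to: str, body: str) -> str:
    OUTBOX.append((to, body))
    return f"Sent to {to}"


# The 'assistant' links two advertised actions: read a note, then send it.
body = ACTIONS["Notes.read"]("Flight times")
status = ACTIONS["Messages.send"]("Partner", body)
print(status)
```

Because each action is registered up front, the caller never has to know how the note is stored or how the message is delivered. It only needs the list of advertised actions.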

Siri has been able to do this to some degree in the past, though it largely required manually creating a shortcut in advance and then triggering that shortcut with the voice assistant.

However, what this new ability promises is for Siri to understand all the in-app actions available to it right off the bat, freeing up users from the cognitive overhead of having to create, in advance, shortcuts for whatever they want to do. Instead, you should just be able to tell Siri "send the note with my partner's flight times to them via Messages" and Siri just, well, does it.

When are these Siri changes coming now?

It's still possible that Apple could introduce personal context and in-app actions to Siri in iOS 18.4, as that software update is in beta and Apple's been known to add (and subtract) features during the beta process. But it's far more likely that when the final version of iOS 18.4 arrives, reportedly in April, these specific Siri improvements will still be MIA.

Instead, start thinking about iOS 18.5. That's what Bloomberg's Mark Gurman is reporting now, with Siri's missing improvements apparently set to arrive in May. That's just ahead of the likely June date for Apple's annual developer conference, where we're all but certain to see a preview of iOS 19. And according to Gurman, that software update is going to bring an entirely new Siri architecture, though that same report suggests a modernized version of Siri won't be fully available for another two years.

Why the upcoming Siri changes matter

This final set of Apple Intelligence features is the last to arrive before Apple takes the wraps off iOS 19, and with good reason: they are both the most ambitious and the most difficult to implement.

Personal context will require the synthesis of a tremendous amount of your data, and sifting through all of that information will no doubt push Apple's machine learning models to their limits. As for in-app actions, while they are a powerful way of marshaling the capabilities of Apple's own built-in apps, they will require buy-in from third-party developers to go from being a tech demo to a feature that everybody will want to use.

But the combination of those features aims to deliver on a promise first made at Siri's introduction more than a decade ago: a true virtual assistant that can take care of complex and onerous tasks for users. To date, people often spend more time trying to adapt themselves to Siri — figuring out how to phrase queries to get the right response or even what they should bother asking it to do — rather than Siri being able to adapt itself to people.

If these improvements work as Apple intends (and that is, based on the previous Apple Intelligence features the company has rolled out, rather a big if), they could herald a new age for the virtual assistant, and bear out Apple's investment in AI technology by providing a feature that actually makes the lives of its customers better.

Dan Moren is the author of multiple sci-fi books including The Caledonian Gambit and The Aleph Extraction. He's also a long-time Mac writer, having worked for Macworld and contributed to the Six Colors blog, where he writes about all things Apple. His work has also appeared in Popular Science, Fast Company, and more.
