This Android camera feature is awesome — but the iPhone still does it better

Autofocus tracking performs better on Android, but the iPhone implements it cleverly

I spend a large amount of my time, both in and out of work, using cameras — from some of the best camera phones to the best mirrorless cameras. As such, I'll admit that it isn't very often that I find smartphone cameras impressive, or even pleasantly surprising.

Don't get me wrong, the cameras in today's smartphones are great for "general use" photography — dog photos, food snaps, family memories, and so on. However, they still cannot replicate the quality or control that a full camera body gives you. What's more, camera phones naturally don't come with many of the features a dedicated DSLR or mirrorless camera will give you.

One of those features is autofocus tracking — where the camera tracks a subject that it's focusing on through the frame, ensuring it remains in focus even when it moves. This is a boon for any photographers who take photos of moving subjects.

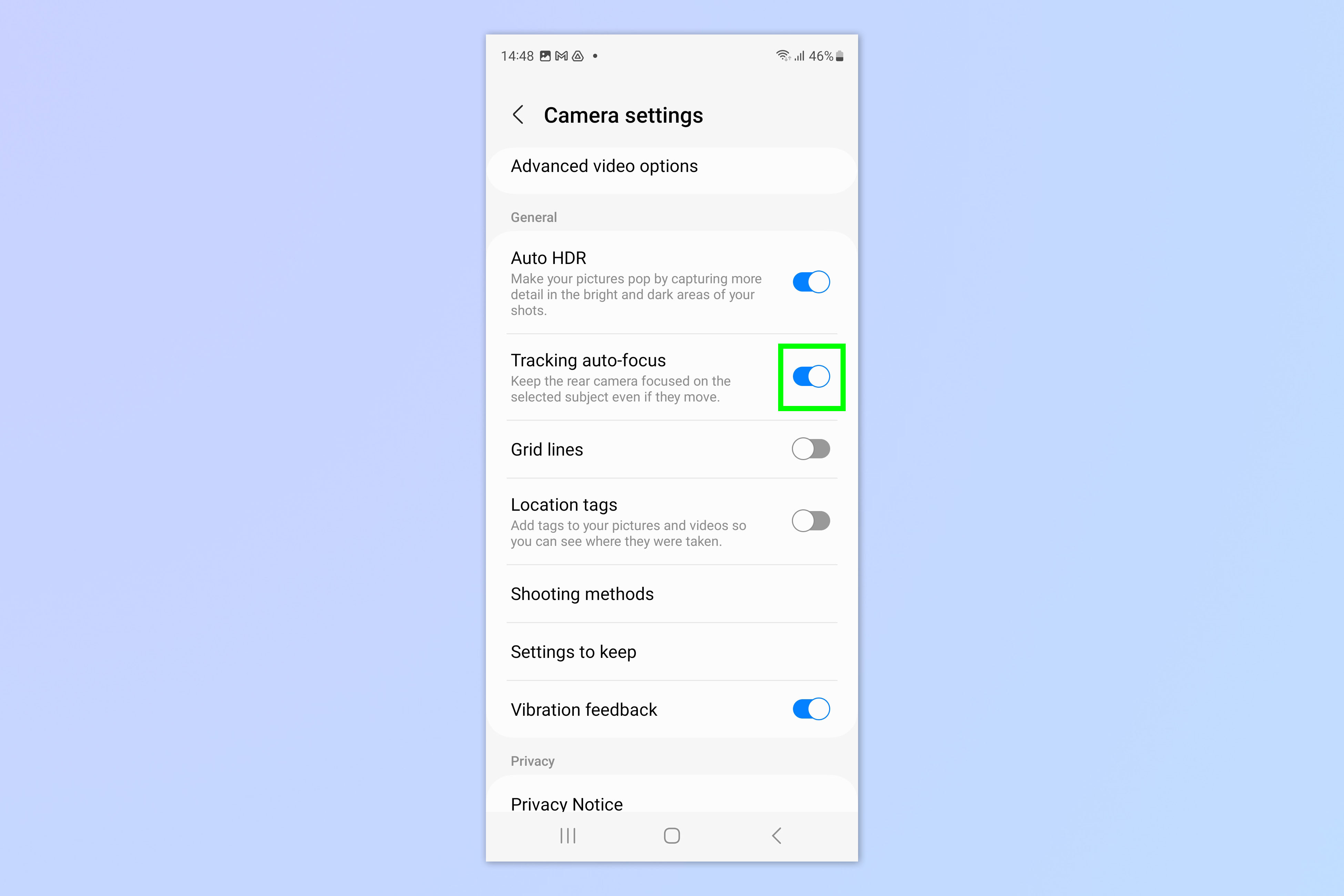

In my opinion, this is an underrated feature in the smartphone arena; I don't tend to see it mentioned much in promotional material. So I was pretty happy to see that many Android phones, such as my Galaxy S20 and my Pixel 6 Pro, feature subject tracking autofocus. (You'll need to know how to enable autofocus tracking on Samsung; on the Pixel, the feature is turned on by default.)

For me, decent tracking would add weight to a decision to purchase either of these phones over an iPhone. After all, autofocus features are dealbreakers in the dedicated camera world (but weirdly not as much in the smartphone sphere) — I even switched my main camera once to buy one that could detect and track my dogs running at full pelt. The iPhone 14 Pro, though, doesn't feature focus tracking in stills, which kinda sucks.

However, there's one key feature that Android phones are missing when it comes to tracking, which iPhones have. And for most people, the iPhone will make more sense.

Autofocus tracking: Android vs iPhone

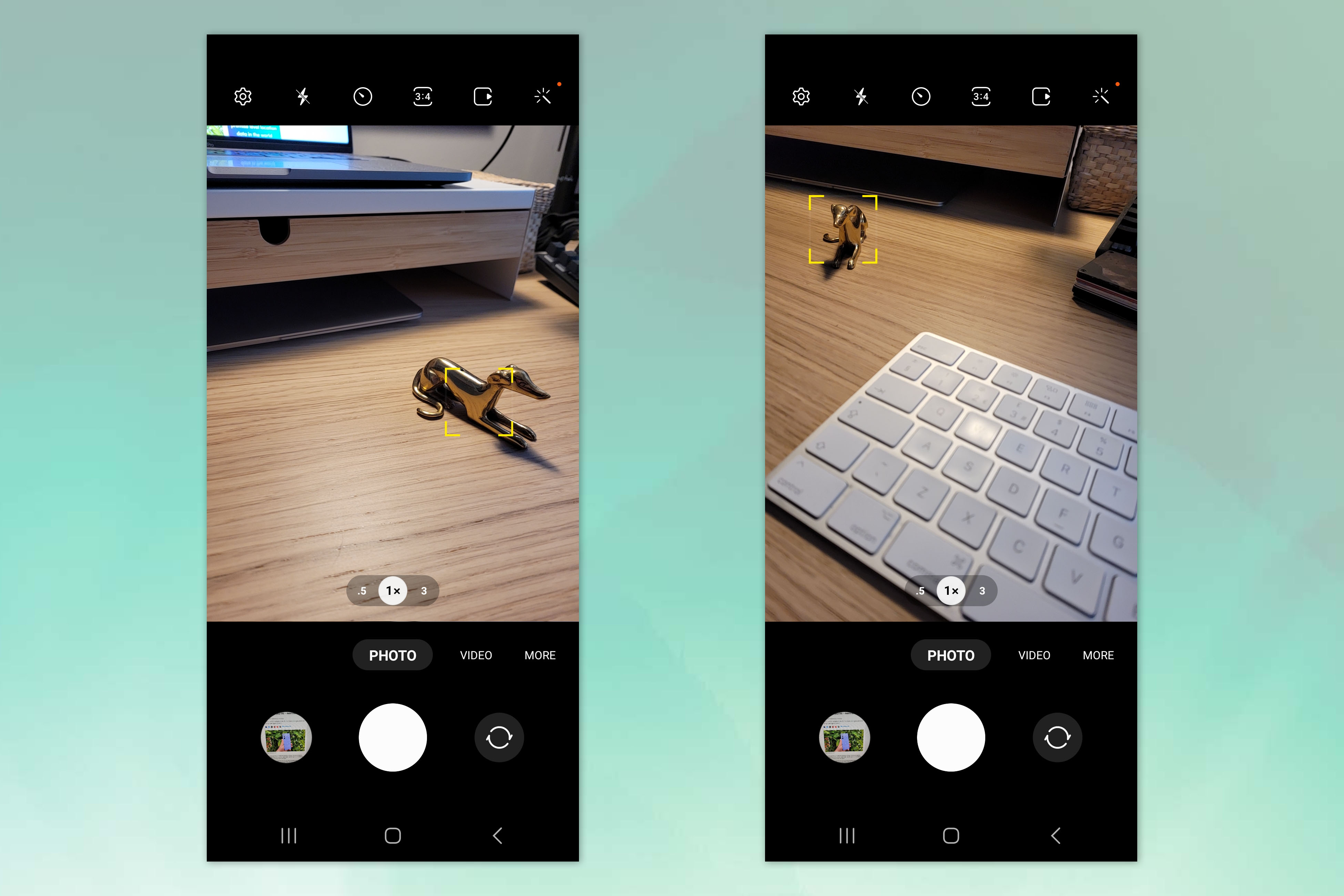

In testing out this feature using my Galaxy S20 and Pixel 6 Pro, I was genuinely surprised at how well autofocus tracking performed on both phones. Smartphone tracking is obviously no match for a high-end mirrorless camera's AF system, but the autofocus and tracking seem to work to the very edge of the frame on both devices, which is more than can be said even for some cameras.

The tracking held onto subjects that moved moderately fast through the frame, although it lost anything moving faster, such as my dogs chasing a ball. In that respect, it wouldn't hold up well in sports or wildlife photography.

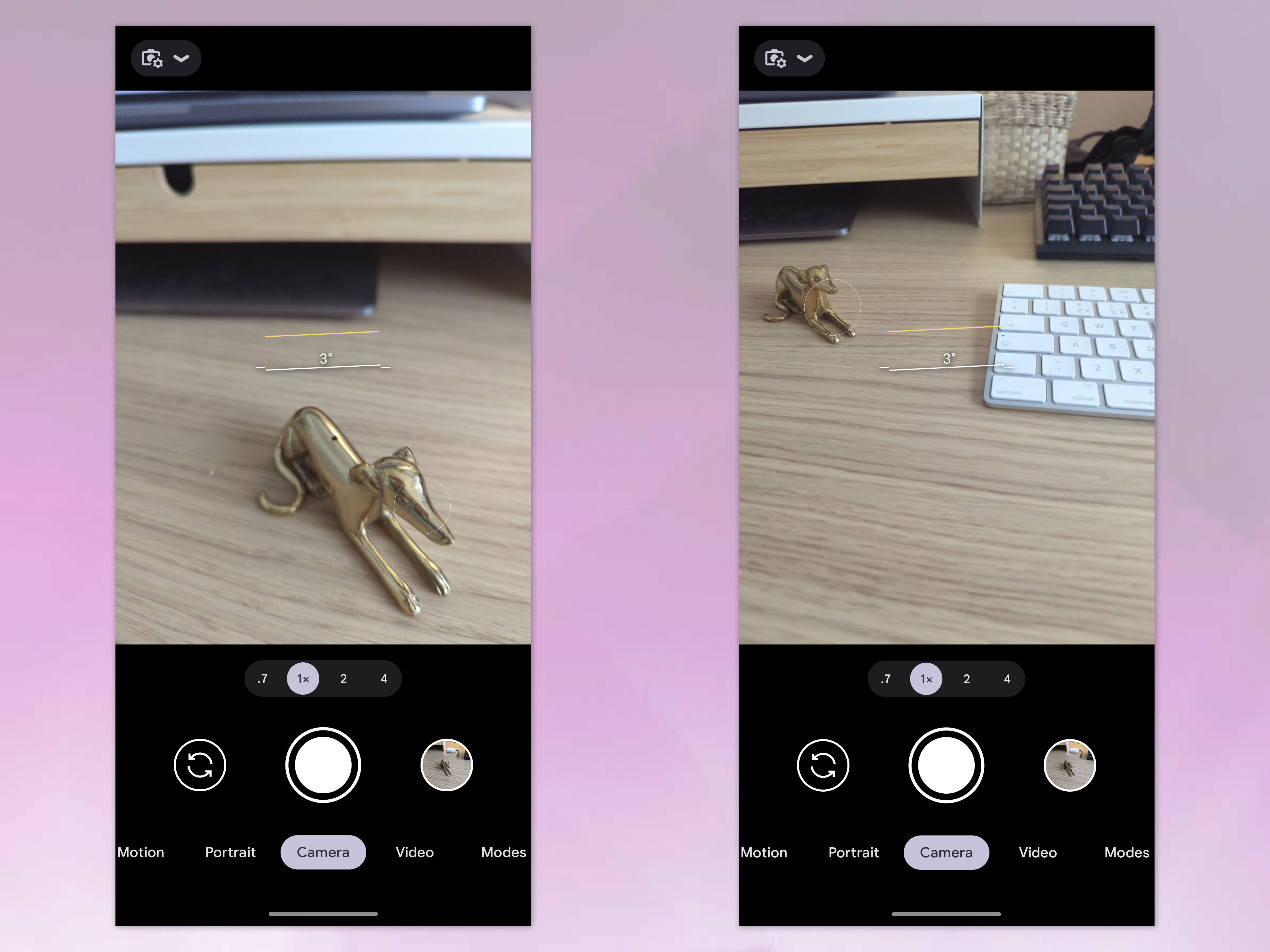

I also tested this feature on my iPhone 13 Pro Max and iPhone 14 Pro to see if they could match the Galaxy S20 and Pixel 6 Pro. On all phones, I moved the camera to force the model greyhound to pan from the bottom right to the top left of my phone screen, while also pulling back to ensure the greyhound moved out of the original focal plane, meaning the camera would need to completely refocus.

First, the iPhone 13 Pro Max didn't have a subject tracking mode in Photo, only in Cinematic video mode — which kinda sucks. Second, I found the tracking on the Android devices much more tenacious: it held the subject for much longer before losing focus, and tended to incorrectly move the focus point much less. What was especially cool on the Samsung, though, was that the detection picked up subjects that moved back into frame after going out of shot. The Pixel lacked this feature, which I found rather disappointing.

The iPhone beats Android for TikTok

Where the iPhone currently beats the S20, though, is in frontal view tracking. Although the iPhone's subject tracking only works in Cinematic Mode, it also functions fully on the frontal camera. For most people, this makes more sense than the rear camera: we live in an increasingly video-first age, where more and more people use their smartphones to create video content.

As such, having the ability to track your face while shooting video in selfie mode for TikTok or Shorts is arguably more useful than having rear camera AF stills tracking. And besides, anyone who wants decent tracking for sports, wildlife or other hobbyist genres of photography will probably own a dedicated camera anyway.

Even on the latest Samsung S23, subject tracking is restricted to the rear camera, totally limiting its use in selfie content. It's disappointing that three generations after my S20, this still isn't implemented in the frontal camera. For today's content-creating masses, that's a win for the iPhone.


Peter is Reviews Editor at Tom's Guide. As a writer, he covers topics including tech, photography, gaming, hardware, motoring and food & drink. Outside of work, he's an avid photographer, specialising in architectural and portrait photography. When he's not snapping away on his beloved Fujifilm camera, he can usually be found telling everyone about his greyhounds, riding his motorcycle, squeezing as many FPS as possible out of PC games, and perfecting his espresso shots.


S Timpson: No, the iPhone doesn't do it better, at least not compared with the Samsung Fold 4.

Auto tracking is a cool feature, and the Samsung Folds and Flips do this in selfie mode, as you can use the main camera as the selfie camera if you open them up. Score one for Samsung over the iPhone, at least for the Flips and Folds. And if you are a content creator, the main cameras are very high quality as well.