Neon: Is the world ready for artificial humans?

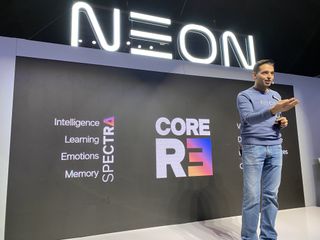

Neon's highly advanced avatars express life-like emotions, but it's clear they are still very early in development.

Everyone following CES 2020 seems captivated by Neon’s artificial human technology. Yep, the intangible Neon is more viral than Charmin’s toilet-paper robot.

Samsung-supported startup Star Labs has been extremely cryptic when presenting Neon, parading the life-like avatars around the world’s largest tech show while sharing little about how they work or why they’re on display.

These avatars of varying ages and ethnicities look real enough that one might mistake them for video streams of actual humans. But "Neons" are actually conceptual chatbots created to hold conversations and convey emotions. Their forthcoming AI foundations will let them learn to become more human-like over time.

Confused? Don’t worry. I was, too, until I had the chance to learn about Neon’s foundations during its second day in the wild at CES 2020.

What is Neon?

The question is actually “What is a Neon?” and the answer is a computer-generated avatar that looks like a human. But unlike AI assistants such as Alexa, each Neon is its own entity. There’s no catch-all “Hey Neon” command that would keep Neons from developing distinct personalities.

In fact, Star Labs CEO Pranav Mistry assigned individual human names (and professions, even) to each of the avatars on display. (Star Labs is a Samsung subsidiary.)

During the Neon demonstration I attended at CES, Mistry explained that the project began with extracting face maps from existing videos. Using landmarks and key points, he developed code for manipulating facial features with graphic imaging. Eventually the program, called Core R3, was trained well enough to create faces and bodies. And that’s what he brought to show at CES.


Neon demo: What can it do?

Neons can replicate human activity and expressions. A Neon can be controlled to raise a single eyebrow or fully replicate emotions like joy and confusion. When I saw the technology in action, a Neon employee used a tablet to direct four different activated avatars.


But the person could only control one Neon at a time. Those not in use, or set in “Auto” mode, continue existing like a human might. A yoga instructor Neon stretched out, while another in jeans and white sneakers flipped through a book. I would have sworn I was watching pre-recorded looped video had I not seen the manual operation moments prior.

Neons can talk, too, although not very well. The code grabs highly robotic, third-party audio to make a Neon speak basic phrases like “Hello.” While I was impressed that it could do so in different languages, the awkward animations while speaking spoiled the avatar's attempt to pass as an actual person.

Clearly, Neon’s communication capabilities are still in their early stages.

What's next for Neon?

Today’s Neons are far from perfect. Though they reflect emotions with eerie accuracy, their communication skills at this early stage feel robotic and disrupt the illusion of humanity. That’s why Neon is looking for partners to help build out its AI component, which is already in development under the name Spectra.

Once Spectra is out in the wild, Neons will begin learning emotions and personalities using memory. But this is going to take some time, as powering a non-AI Neon already demands a ton of computing power. We're talking 128 cores.

It seems we’re being introduced to Neon this early so we can start familiarizing ourselves with how the avatars are made before they’re equipped to become our best friends. Or our hotel concierges or healthcare providers.

When this technology hits the mainstream, it’s destined to have ethical implications. The only way I can see Neon thriving is if its users fully comprehend how it works through demos like those taking place at CES.


Kate Kozuch is the managing editor of social and video at Tom’s Guide. She writes about smartwatches, TVs, audio devices, and some cooking appliances, too. Kate appears on Fox News to talk tech trends and runs the Tom's Guide TikTok account, which you should be following if you don't already. When she’s not filming tech videos, you can find her taking up a new sport, mastering the NYT Crossword or channeling her inner celebrity chef.