Amazon Web Services, the cloud platform that accounts for about 32% of the cloud infrastructure market according to Statista, has been hit with a major outage today (Dec. 7). The outage is affecting everything from Alexa, Prime Video and Ring security cameras to Disney Plus and League of Legends. According to Bloomberg, Amazon delivery drivers are also affected, which is delaying drop-offs. Users on the r/sysadmin subreddit report that the impact is widespread, reaching everything from school systems to warehouses.

As of 6:30 p.m. ET, many of the issues that caused the service shutdown have been mitigated. Amazon says that all services are now independently working through service-by-service recovery, and that AWS is still pushing toward full recovery. However, certain services, such as SSO, Connect, API Gateway, ECS/Fargate and EventBridge, are still running into issues.

Down Detector, a website that tracks real-time outage information, shows significant problems with AWS. At the time of writing, these are the services reported as down or experiencing problems:

- Disney Plus

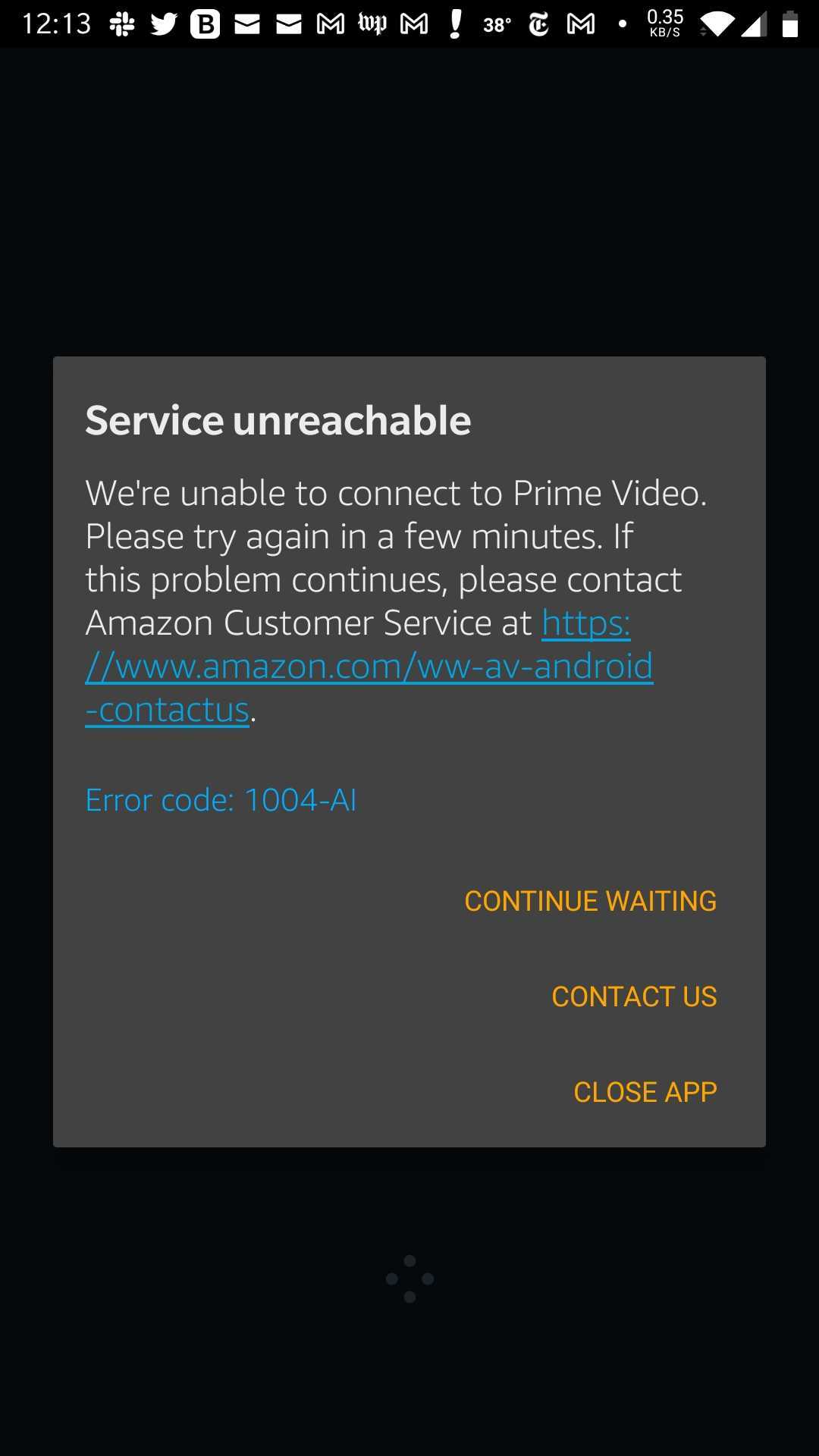

- Prime Video

- Alexa

- Ring

- Tinder

- Canva

- League of Legends

- League of Legends: Wild Rift

- Valorant

- PUBG

- Dead by Daylight

- Clash of Clans

- Duolingo

- Pluto TV

The Amazon Web Services Twitter account has not yet posted an update on the situation, but the official AWS Service Health Dashboard has up-to-date information on what's happening.

At 6:03 p.m. ET, AWS said that many services had recovered, with only certain services still affected.

In an earlier message, AWS stated that there was a problem in the US-EAST-1 Region, located in Northern Virginia, and that a fix was being worked on:

"We are seeing impact to multiple AWS APIs in the US-EAST-1 Region. This issue is also affecting some of our monitoring and incident response tooling, which is delaying our ability to provide updates. We have identified the root cause and are actively working towards recovery."

That message was updated at around 1:15 p.m. ET to add: "We have identified root cause of the issue causing service API and console issues in the US-EAST-1 Region, and are starting to see some signs of recovery. We do not have an ETA for full recovery at this time."

Below, we've listed all of AWS's previous status updates in chronological order. All times are in PST.

[8:22 AM PST] We are investigating increased error rates for the AWS Management Console.

[8:26 AM PST] We are experiencing API and console issues in the US-EAST-1 Region. We have identified root cause and we are actively working towards recovery. This issue is affecting the global console landing page, which is also hosted in US-EAST-1. Customers may be able to access region-specific consoles going to https://console.aws.amazon.com/. So, to access the US-WEST-2 console, try https://us-west-2.console.aws.amazon.com/

[8:49 AM PST] We are experiencing elevated error rates for EC2 APIs in the US-EAST-1 region. We have identified root cause and we are actively working towards recovery.

[8:53 AM PST] We are experiencing degraded Contact handling by agents in the US-EAST-1 Region.

[8:57 AM PST] We are currently investigating increased error rates with DynamoDB Control Plane APIs, including the Backup and Restore APIs in US-EAST-1 Region.

[9:08 AM PST] We are experiencing degraded Contact handling by agents in the US-EAST-1 Region. Agents may experience issues logging in or being connected with end-customers.

[9:18 AM PST] We can confirm degraded Contact handling by agents in the US-EAST-1 Region. Agents may experience issues logging in or being connected with end-customers.

[9:37 AM PST] We are seeing impact to multiple AWS APIs in the US-EAST-1 Region. This issue is also affecting some of our monitoring and incident response tooling, which is delaying our ability to provide updates. We have identified the root cause and are actively working towards recovery.

[10:12 AM PST] We are seeing impact to multiple AWS APIs in the US-EAST-1 Region. This issue is also affecting some of our monitoring and incident response tooling, which is delaying our ability to provide updates. We have identified root cause of the issue causing service API and console issues in the US-EAST-1 Region, and are starting to see some signs of recovery. We do not have an ETA for full recovery at this time.

[11:26 AM PST] We are seeing impact to multiple AWS APIs in the US-EAST-1 Region. This issue is also affecting some of our monitoring and incident response tooling, which is delaying our ability to provide updates. Services impacted include: EC2, Connect, DynamoDB, Glue, Athena, Timestream, and Chime and other AWS Services in US-EAST-1. The root cause of this issue is an impairment of several network devices in the US-EAST-1 Region. We are pursuing multiple mitigation paths in parallel, and have seen some signs of recovery, but we do not have an ETA for full recovery at this time. Root logins for consoles in all AWS regions are affected by this issue, however customers can login to consoles other than US-EAST-1 by using an IAM role for authentication.

[12:34 PM PST] We continue to experience increased API error rates for multiple AWS Services in the US-EAST-1 Region. The root cause of this issue is an impairment of several network devices. We continue to work toward mitigation, and are actively working on a number of different mitigation and resolution actions. While we have observed some early signs of recovery, we do not have an ETA for full recovery. For customers experiencing issues signing-in to the AWS Management Console in US-EAST-1, we recommend retrying using a separate Management Console endpoint (such as https://us-west-2.console.aws.amazon.com/). Additionally, if you are attempting to login using root login credentials you may be unable to do so, even via console endpoints not in US-EAST-1. If you are impacted by this, we recommend using IAM Users or Roles for authentication. We will continue to provide updates here as we have more information to share.

[2:04 PM PST] We have executed a mitigation which is showing significant recovery in the US-EAST-1 Region. We are continuing to closely monitor the health of the network devices and we expect to continue to make progress towards full recovery. We still do not have an ETA for full recovery at this time.

[2:43 PM PST] We have mitigated the underlying issue that caused some network devices in the US-EAST-1 Region to be impaired. We are seeing improvement in availability across most AWS services. All services are now independently working through service-by-service recovery. We continue to work toward full recovery for all impacted AWS Services and API operations. In order to expedite overall recovery, we have temporarily disabled Event Deliveries for Amazon EventBridge in the US-EAST-1 Region. These events will still be received & accepted, and queued for later delivery.

[3:03 PM PST] Many services have already recovered, however we are working towards full recovery across services. Services like SSO, Connect, API Gateway, ECS/Fargate, and EventBridge are still experiencing impact. Engineers are actively working on resolving impact to these services.

This is not the first time this has happened. In July, AWS services were disrupted for around two hours, causing issues on Amazon stores worldwide.

In June, another internet outage, this time at the Fastly content delivery network (CDN), brought down Amazon, Reddit, Twitch and a huge number of other big sites.

We will continue updating this story as it develops.

Imad is currently Senior Google and Internet Culture reporter for CNET, but until recently was News Editor at Tom's Guide. Hailing from Texas, Imad started his journalism career in 2013 and has amassed bylines with the New York Times, the Washington Post, ESPN, Wired and Men's Health Magazine, among others. Outside of work, you can find him sitting blankly in front of a Word document trying desperately to write the first pages of a new book.