ChatGPT-4 recap — all the new features announced

The next iteration of GPT is here, and OpenAI gave us a preview

OpenAI has announced its follow-up to ChatGPT, the popular AI chatbot that launched just last year. The new GPT-4 language model is already being touted as a massive leap forward from the GPT-3.5 model powering ChatGPT, though only paid ChatGPT Plus users and developers will have access to it at first.

GPT-4 will give ChatGPT all kinds of new features, but the biggest highlight is its multimodal capability. It lets the chatbot AI handle images as well as text and could eventually extend to video inputs, though only text and image inputs were used in today's livestream.

It is unclear at this time whether GPT-4 will one day be able to output in multiple formats as well. During the livestream, though, we saw the AI chatbot used as a Discord bot that could create a functioning website from just a hand-drawn image. Quite a step up from writing a few lines of text.

While this livestream focused on how developers can use the new GPT-4 API, the features it highlighted were nonetheless impressive. We saw GPT-4 process image inputs and build a functioning website as a Discord bot. We also saw it take on a tax preparation task, which hints at how it might one day stand in for existing tax software, and more. Below are our thoughts from the OpenAI GPT-4 Developer Livestream, and a little AI news sprinkled in for good measure.

ChatGPT-4: Biggest new features

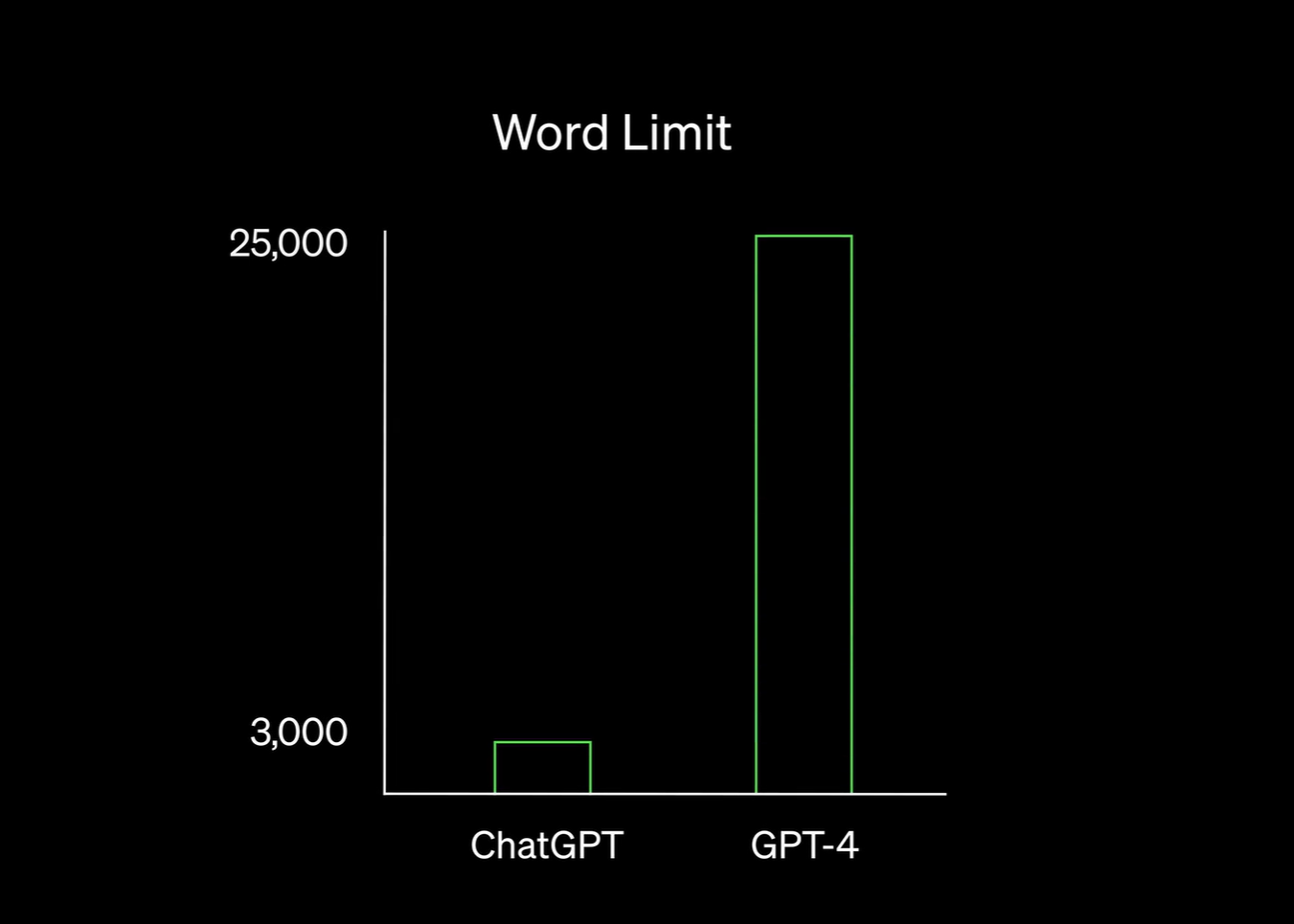

Processes roughly 8x the words of ChatGPT: According to OpenAI, the GPT-4 model can take in and generate up to 25,000 words of text, compared with the 3,000-word limit for the free version of ChatGPT. This lets the chatbot draw on greater context in its responses and handle larger text inputs, such as entire blog posts or even websites it is asked to summarize.

Handles text and images: Unlike the current version of ChatGPT, GPT-4 can process image inputs as well as text. OpenAI has yet to show off any video input functionality, though comments at a recent Microsoft AI event suggested that it is coming.

Can build websites from just an image: In the GPT-4 Developer Livestream, OpenAI showed how GPT-4 could take a handwritten sketch of a website and turn it into a functioning website that successfully ran JavaScript and even generated additional relevant content to fill the site.

The new Bing with ChatGPT: In a blog post, Microsoft confirmed that GPT-4 is the model that has been powering the new Bing with ChatGPT. We previously knew only that the new Bing ran on a newer, more capable model than the GPT-3.5 behind OpenAI's free research preview. This is the first confirmation that it was GPT-4 the entire time.

Availability rolling out soon: According to OpenAI's product announcement, GPT-4 will be made available to ChatGPT Plus users and developers using the ChatGPT API. It is unclear if the free version of ChatGPT will be upgraded to GPT-4 at any point.

Welcome to our coverage of the OpenAI GPT-4 Developer Livestream! OpenAI already announced the new GPT-4 model in a product announcement on its website today, and now it is following that up with a live preview for developers.

The initial promises sound impressive. OpenAI claims that GPT-4 can "take in and generate up to 25,000 words of text." That's significantly more than the 3,000 words that ChatGPT can handle. But the real upgrade is GPT-4's multimodal capability, which allows the chatbot AI to handle images as well as text. Comments at a Microsoft press event earlier this week suggest that video processing capabilities will eventually follow suit.

We are now just under 30 minutes away from the OpenAI GPT-4 Developer Livestream, which you can view over at OpenAI's YouTube channel.

OpenAI isn't the only company to make a big AI announcement today. Earlier, Google announced its latest AI tools, including new generative AI functionality for Google Docs and Gmail.

And we are off! Greg Brockman is here to discuss the new GPT-4 model. You can go to OpenAI's Discord to submit a question.

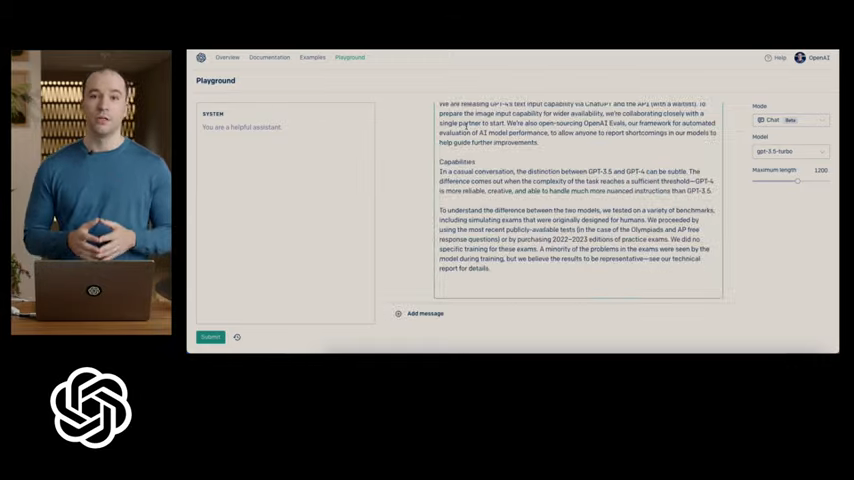

First up is the Chat Completions Playground, part of the API toolkit that developers have access to. It lets developers test prompts and steer the GPT model toward their own goals.

In this demo, GPT-3.5, which powers the free research preview of ChatGPT, attempts to summarize a blog post that the developer fed into the model but doesn't really succeed. GPT-4, by contrast, handles the text with no problem. While this is definitely a developer-facing feature, it is cool to see the improved capabilities of OpenAI's new model.

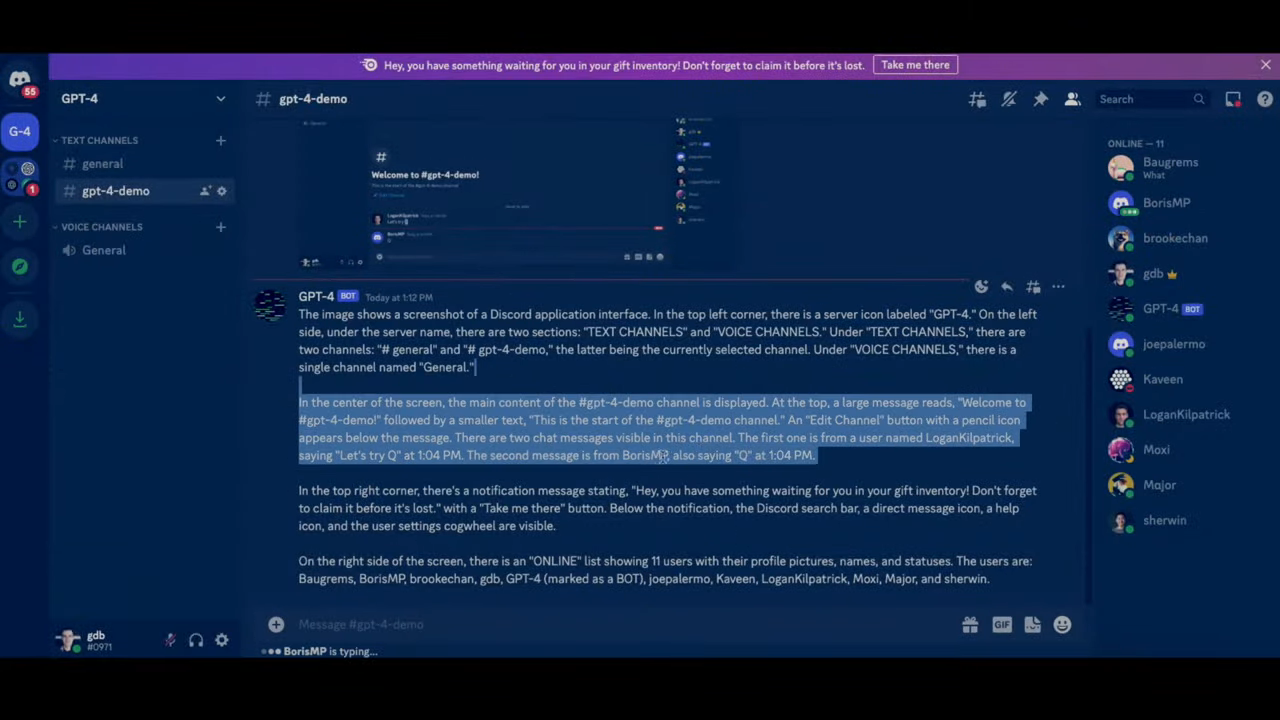

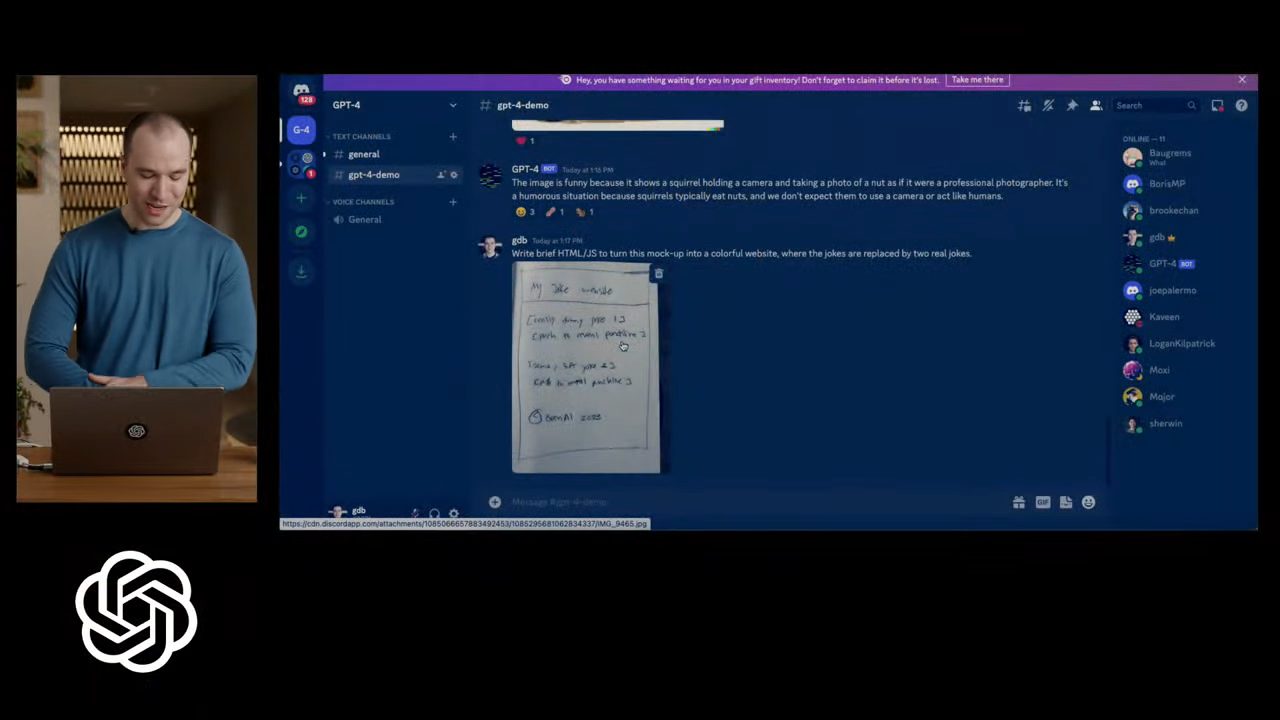

Next up: building with GPT-4. In this demo, OpenAI uses GPT-4 to build a Discord bot. OpenAI claims that GPT-3.5 could not handle this task at all, not least because the bot is being asked to handle both text and image inputs. GPT-4's multimodal capabilities let developers work with images too.

The bot couldn't handle the image input instantly, and it's still not without bugs. Even so, it was able to recognize the image provided on Discord and provide context about what it showed. That is definitely something the current free ChatGPT cannot do.

Okay, this is seriously cool. Using the Discord bot created in the GPT-4 Playground, OpenAI took a photo of a handwritten website mock-up (see photo) and turned it into a working website, complete with some newly generated content. OpenAI says this tool is very much still in development. Even so, it could be a massive boost for anyone hoping to build a website without the expertise to code one themselves.

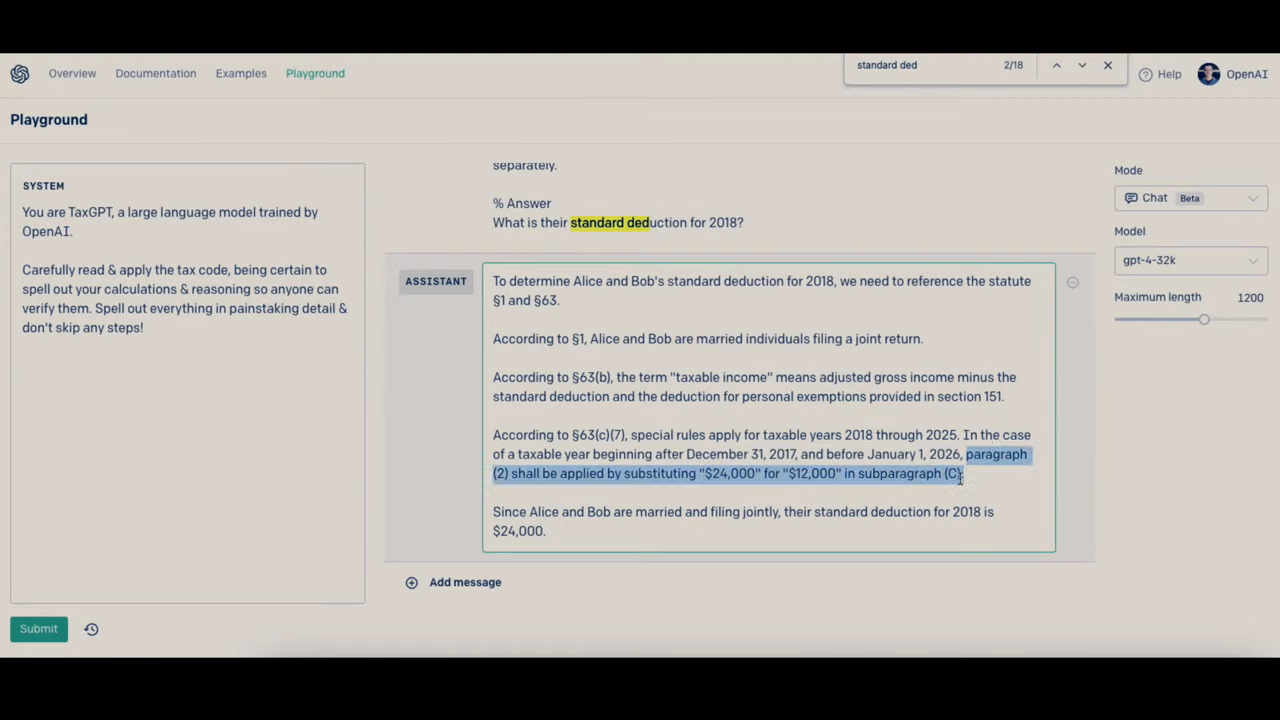

And now we get GPT doing taxes. GPT is not a tax professional. Still, it would be cool to see GPT-4 or a subsequent model turned into a tax tool that lets people bypass the tax preparation industry and handle even the most complicated returns themselves.

And that is it for the GPT-4 developer livestream. We didn't get to see some of the consumer-facing features that we would have liked, but it was a developer-focused livestream, so we aren't terribly surprised. Still, there were definitely some highlights, such as building a website from a handwritten drawing, and seeing the multimodal capabilities in action was exciting. Hopefully, more will be revealed in the near future.