Google and Samsung look set to take on Apple Vision Pro with 4-year-old radar tech and a VR sensor that is inspired by bats

Google and Samsung have entered the VR headset chat

Apple Vision Pro is close to launch, and the competition around it is starting to heat up. From the Meta Quest 3 offering a similar experience for less to the Xreal Air 2 using software to bring spatial computing to its standard AR glasses, companies are lining up to take on Cupertino.

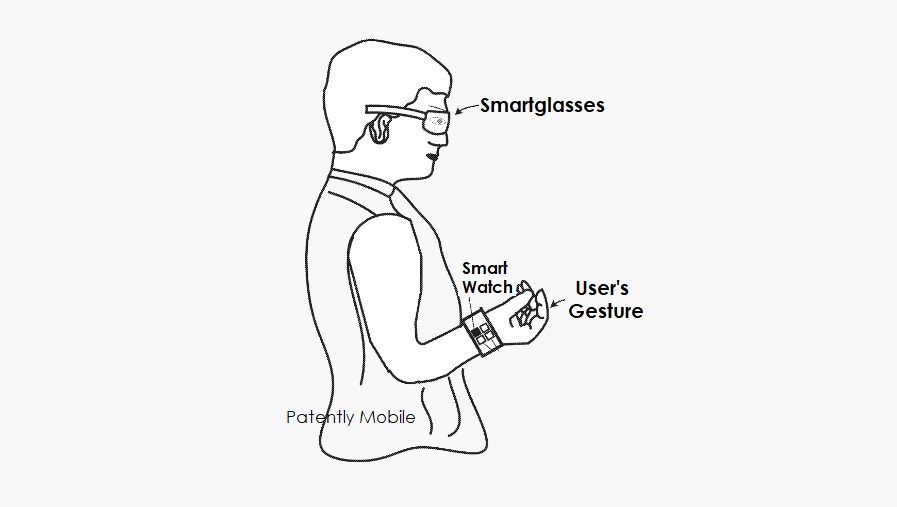

And now, two more companies are lining up with some big ideas. As reported by Patently Apple, Google is reusing its radar tech from the Pixel 4 as a breakthrough method of hand gesture recognition, and Samsung has made two new image sensors that drastically improve inputs and depth sensing.

Back to radars

Some of the most successful gadgets come from companies that take old tech from bad devices and reuse it in a far better way. By bringing back the ‘Soli’ radar solution from Pixel 4 to use in an XR headset, Google is onto something in my opinion.

The Apple Vision Pro’s method of tracking hand gestures is rather basic in principle — stick a load of cameras in the bottom to see what you’re doing and call it a day. Yes, I know there is a lot of software work and computation happening in the background to make this work, but this is what’s happening on a hardware level.

Google’s recent patent reinvents this approach by taking the same radar tech that gave the Pixel 4 all of its weird touch-free gesture controls and putting it in a smartwatch-style band. With this band, the headset can read gesture inputs using the radar, as well as the tiniest movements in muscles or ligaments of up to 3mm.

What is the main benefit of sticking all the tech around the wrist? It will dramatically reduce the size of the headset itself. Apple’s hefty option has to sport those larger dimensions to fit all the cameras and sensors inside it. This could vastly improve the wearability of Google’s headset, which could arrive sometime in 2024 or later.

Seeing things differently

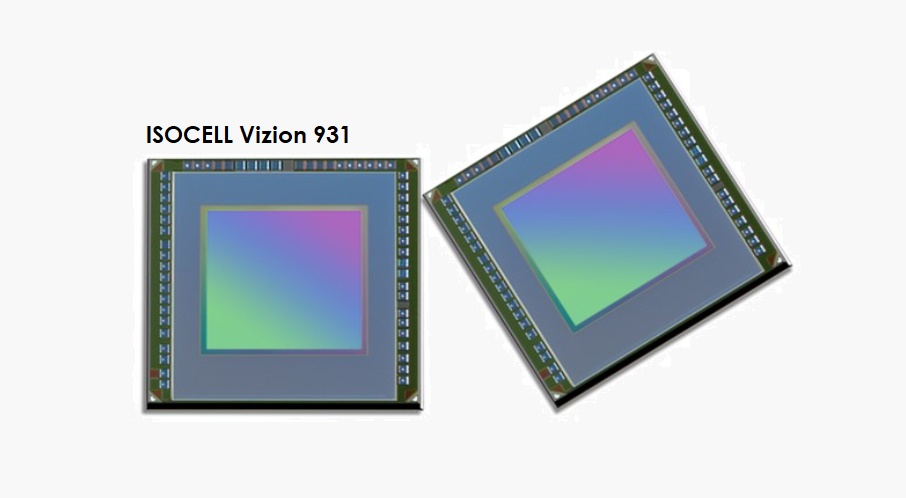

Meanwhile, Samsung is taking a more traditional approach with image sensors and has invented a new type of sensor to help in its next-gen XR headset efforts. Specifically, the ISOCELL Vizion 931 is a new sensor that works like the human eye, packing a global shutter that exposes all pixels to light at the same time.

This allows the sensor to accurately track eyes, facial expressions, hand gestures and even provide iris recognition for device security. That’s not all though, as Samsung has also introduced the ISOCELL Vizion 63D, which works similarly to how bats navigate in the dark — using an indirect time of flight sensor that can measure distance and depth.

With these sensors combined, you can see Samsung coming close to cracking the code on competing with Apple Vision Pro, with an iTOF sensor mapping 3D environments for augmented reality, and the sensors reducing system power drain by up to 40%.

Jason brings a decade of tech and gaming journalism experience to his role as a Managing Editor of Computing at Tom's Guide. He has previously written for Laptop Mag, Tom's Hardware, Kotaku, Stuff and BBC Science Focus. In his spare time, you'll find Jason looking for good dogs to pet or thinking about eating pizza if he isn't already.

Hyacin: It has a global shutter, not a rolling shutter.

3rd last paragraph -

Specifically, the ISOCELL Version 931, which is a new sensor that works like the human eye — packing a rolling shutter that exposes all pixels to light at the same time,