What is ChatGPT-4 — all the new features explained

Here's the latest information on OpenAI's new ChatGPT model

ChatGPT is already an impressive tool if you know how to use it, but it will soon receive a significant upgrade with the launch of GPT-4.

Currently, the free preview of ChatGPT that most people use runs on OpenAI's GPT-3.5 model. This model saw the chatbot become uber popular, and even though there were some notable flaws, any successor was going to have a lot to live up to.

And that successor is now here, though OpenAI isn't going to just open the floodgates. OpenAI announced GPT-4 on its website and says the model will first be available to ChatGPT Plus subscribers and to developers using the ChatGPT API.

Here's everything we know so far about GPT-4 and all the new features that have been announced.

What is GPT-4?

GPT stands for Generative Pre-trained Transformer. It is a neural network that uses machine learning to interpret data and generate responses, and it is best known as the language model behind the popular chatbot ChatGPT. GPT-4 is the most recent version of this model and is an upgrade on the GPT-3.5 model that powers the free version of ChatGPT.

What's different about GPT-4?

GPT-3 featured 175 billion parameters for the AI to consider when responding to a prompt, and it still answers in seconds. It is commonly expected that GPT-4 will add to this number, resulting in more accurate and focused responses. What OpenAI has confirmed is that GPT-4 can handle input and output of up to 25,000 words of text, over 8x the 3,000 words that ChatGPT could handle with GPT-3.5.
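To sanity-check that "over 8x" figure, here is a minimal Python sketch of the arithmetic. The two word limits are the ones quoted above; the constant and function names are made up for illustration and are not part of any OpenAI tooling.

```python
# Word limits as quoted in this article (words, not tokens).
GPT4_WORD_LIMIT = 25_000
GPT35_WORD_LIMIT = 3_000


def capacity_ratio(new_limit: int, old_limit: int) -> float:
    """Return how many times larger new_limit is than old_limit."""
    return new_limit / old_limit


# Hypothetical helper name; this only divides the two quoted figures.
ratio = capacity_ratio(GPT4_WORD_LIMIT, GPT35_WORD_LIMIT)
print(f"GPT-4 handles roughly {ratio:.1f}x as many words as GPT-3.5")
```

The result is a little over 8.3, which matches the "over 8x" claim.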

The other major difference is that GPT-4 brings multimodal functionality to the GPT model. This allows GPT-4 to handle not only text inputs but images as well, though at the moment it can still only respond in text. Microsoft said at a recent AI event that this functionality could eventually allow GPT-4 to process video input as well.

These upgrades are particularly relevant for the new Bing with ChatGPT, which Microsoft confirmed has been secretly using GPT-4. Given that search engines need to be as accurate as possible, and provide results in multiple formats, including text, images, video and more, these upgrades make a massive difference.

Microsoft has made clear its ambitions to create a multimodal AI. In addition to GPT-4, which was trained on Microsoft Azure supercomputers, Microsoft has also been working on the Visual ChatGPT tool which allows users to upload, edit and generate images in ChatGPT.

Microsoft also needs this multimodal functionality to keep pace with the competition. Both Meta and Google’s AI systems have this feature already (although not available to the general public).

The latest iteration of the model has also been rumored to have improved conversational abilities and sound more human. Some have even mooted that it will be the first AI to pass the Turing test after a cryptic tweet by OpenAI CEO and Co-Founder Sam Altman.

While OpenAI hasn't explicitly confirmed this, it did state that GPT-4 finished in the 90th percentile of the Uniform Bar Exam and the 99th percentile in the Biology Olympiad using its multimodal capabilities. Both of these are significant improvements on ChatGPT, which finished in the 10th percentile for the Bar Exam and the 31st percentile in the Biology Olympiad.

What can GPT-4 do?

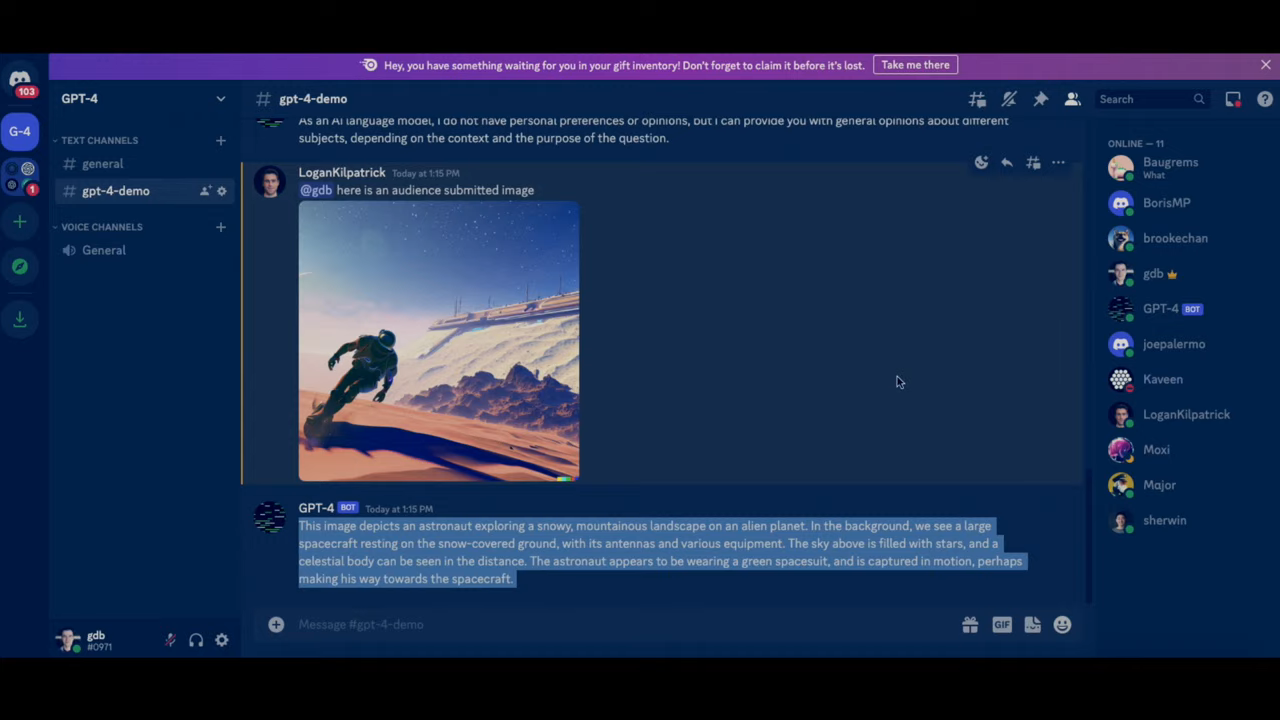

During a recent developer livestream for GPT-4, OpenAI president and co-founder Greg Brockman showed off some of the new features of the updated language model including multimodal capabilities where it provides context for images and even website creation.

In this portion of the demo, Brockman uploaded an image to Discord and the GPT-4 bot was able to provide an accurate description of it. He also asked the chatbot to explain why an image of a squirrel holding a camera was funny, to which it replied: "It's a humorous situation because squirrels typically eat nuts, and we don't expect them to use a camera or act like humans".

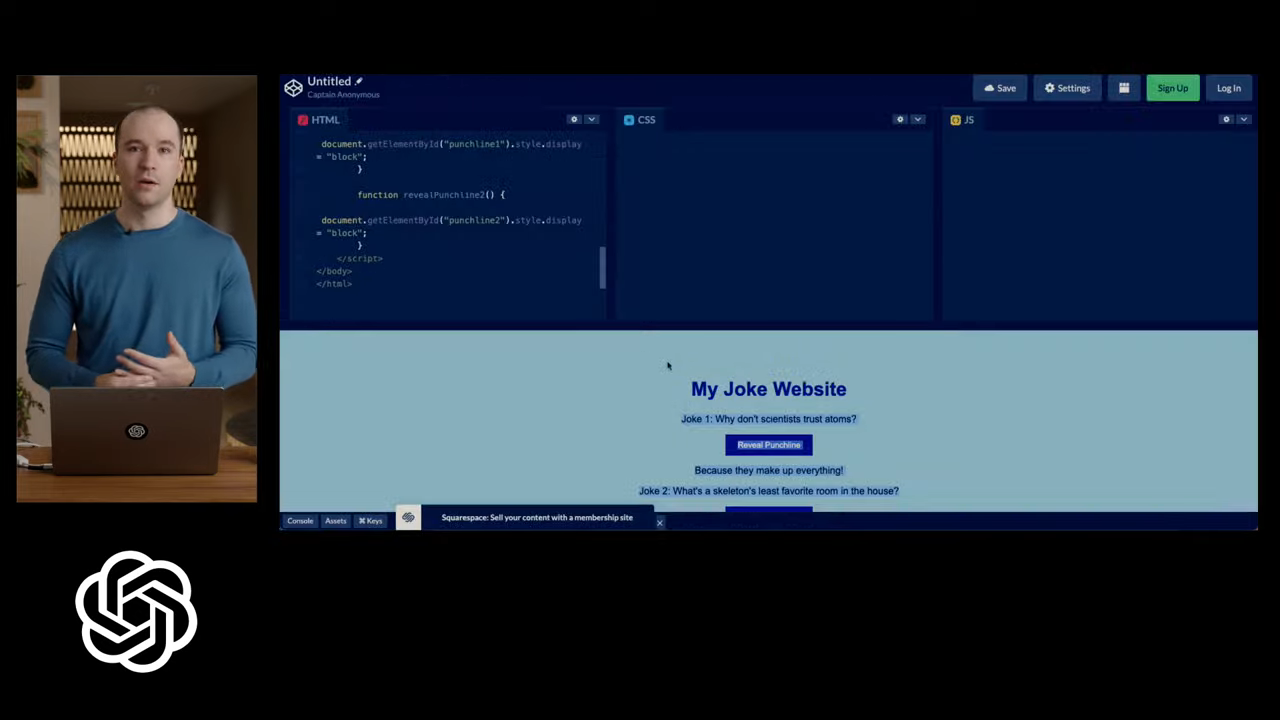

If this wasn't enough, Brockman's next demo was even more impressive. In it, he took a picture of handwritten code in a notebook and uploaded it to GPT-4, which was then able to create a simple website from the contents of the image.

Once GPT-4 begins being tested by developers in the real world, we'll likely see the latest version of the language model pushed to the limit and used for even more creative tasks.

How to access GPT-4

At this time, there are a few ways to access the GPT-4 model, though they're not for everyone.

If you're using the new Bing with ChatGPT, congrats! You've been secretly using GPT-4 this entire time. If you haven't been using the new Bing with its AI features, make sure to check out our guide to get on the waitlist so you can get early access. It also appears that a variety of entities, from Duolingo to the Government of Iceland, have been using the GPT-4 API to augment their existing products. It may also be what is powering Microsoft 365 Copilot, though Microsoft has yet to confirm this.

Aside from the new Bing, OpenAI has said that it will make GPT-4 available to ChatGPT Plus users and to developers using the API. So if you want ChatGPT-4, you're going to have to pay for it, at least for now.

Andy is a freelance writer with a passion for streaming and VPNs. Based in the U.K., he originally cut his teeth at Tom's Guide as a Trainee Writer before moving to cover all things tech and streaming at T3. Outside of work, his passions are movies, football (soccer) and Formula 1. He is also something of an amateur screenwriter having studied creative writing at university.

- Malcolm McMillan, Streaming Editor