Apple Glass could help you ‘see’ in the dark — here's how

Apple Glass patent suggests the company has clever ideas to augment low-light vision

We’ve seen some unusual patents for Apple Glass ideas in recent months, from navigating via subtle changes in your music to bone-conducting audio tech, but this latest one may just be the strangest yet. It seems Apple is contemplating innovative ways that Apple Glass could help wearers see more clearly in low-light conditions.

No, this isn’t some kind of night vision plug-in. Rather, a new patent explores how Apple Glass could use various sensors to measure the world around the owner, giving them a better comprehension of what’s nearby.

The patent, titled “Head-Mounted Display With Low Light Operation”, first outlines the problem. “Photopic vision,” it explains, is when the eyes work best: with “high levels of ambient light… such as daylight.” Other kinds — mesopic and scotopic — “may result in a loss of color vision, changing sensitivity to different wavelengths of light, reduced acuity and more motion blur.”

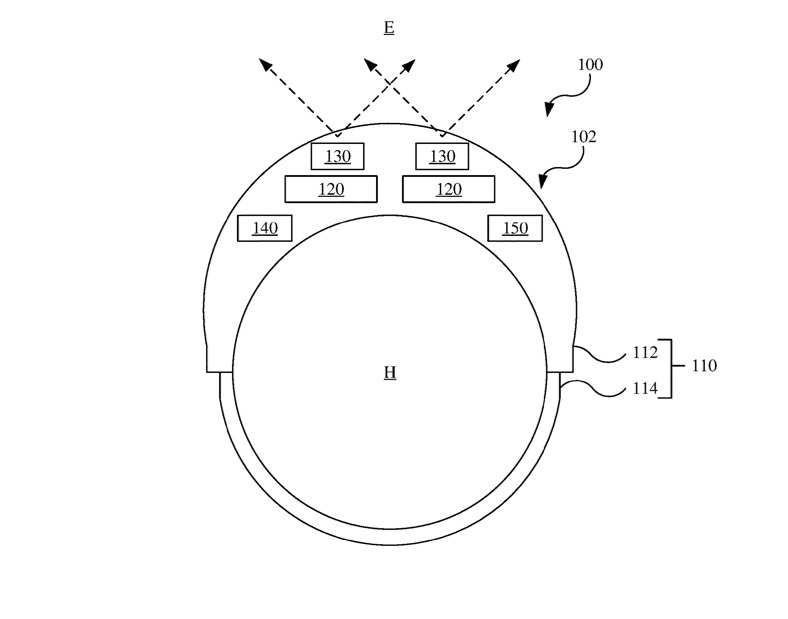

That’s a roundabout way of explaining what most people already know, that humans struggle to see in the dark, but what’s the solution? This is where it gets interesting. Sensors in a head-mounted display (HMD) could detect the environment around the wearer, with the results relayed back to them in the form of graphical content.

“The depth sensor detects the environment and, in particular, detects the depth (e.g., distance) therefrom to objects of the environment,” the patent explains. “The depth sensor generally includes an illuminator and a detector. The illuminator emits electromagnetic radiation (e.g., infrared light)... into the environment. The detector observes the electromagnetic radiation reflected off objects in the environment.”

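The type of depth sensor isn’t set in stone, and Apple gives a number of examples. One uses time of flight (ToF), where light is emitted into the environment, and the amount of time it takes for the reflection to return to the device gives an approximation of depth. Another is structured light, where a known pattern is projected onto the environment and depth is inferred from how that pattern appears to the detector.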
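
For a rough sense of the time-of-flight principle, here is a minimal Python sketch. It assumes the simplest case, a single light pulse whose round-trip time is measured directly; the function name and the example timing are illustrative and do not come from the patent, which doesn't spell out a calculation.

```python
# Illustrative only: the patent does not specify this calculation or these names.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def tof_depth_m(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


if __name__ == "__main__":
    # A reflection arriving 20 nanoseconds after emission is about 3 m away.
    print(f"{tof_depth_m(20e-9):.2f} m")
```

The tiny timings involved (a few nanoseconds per metre) are part of why real ToF sensors tend to rely on dedicated hardware rather than a simple stopwatch-style measurement.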

Apple also says RADAR or LiDAR — fresh off its iPhone 12 Pro debut — could be used. “It should be noted that one or multiple types of depth sensors may be utilized, for example, incorporating one or more of a structured light sensor, a time-of-flight camera, a RADAR sensor, and/or a LIDAR sensor,” the company writes.

However it’s done, the ultimate aim is to give the wearer details of their environment that their eyes aren’t capable of spotting. "The [HMD's] controller determines graphical content according to the sensing of the environment with the one or more of the infrared sensor or the depth sensor and with the ultrasonic sensor and operates the display to provide the graphical content concurrent with the sensing of the environment."

It’s certainly intriguing, albeit a bit sci-fi sounding. While we’re not expecting everything Apple patents to ever see the light of day, such filings still offer interesting insights into the thinking in Cupertino, and what problems the company thinks need to be overcome.

Even if this patent is actualized in a commercial product, it won’t be for some time. Apple Glass isn’t expected until next spring at the earliest and, more likely, not until 2023. Plenty of time for this technology to mature a bit more, then...

Freelance contributor Alan has been writing about tech for over a decade, covering phones, drones and everything in between. Previously Deputy Editor of tech site Alphr, his words are found all over the web and in the occasional magazine too. When not weighing up the pros and cons of the latest smartwatch, you'll probably find him tackling his ever-growing games backlog. Or, more likely, playing Spelunky for the millionth time.