Apple creates new HUGS AI tool that can convert a video into a dancing avatar in minutes

A virtual you!

Apple has unveiled a new research project called HUGS, a generative artificial intelligence tool that can turn a short video clip of a person into a digital avatar in minutes.

This is the latest in a series of Apple research and product announcements designed to blend the physical and virtual worlds. While HUGS has no immediate application, it could eventually become part of the Apple Vision mixed-reality ecosystem.

With its Vision Pro headset announcement earlier this year, Apple revealed techniques for creating virtual versions of the wearer to represent them in FaceTime calls and meetings. HUGS looks like an extension of that work, producing full-body avatars without 3D scanning equipment.

What is HUGS and how does it work?

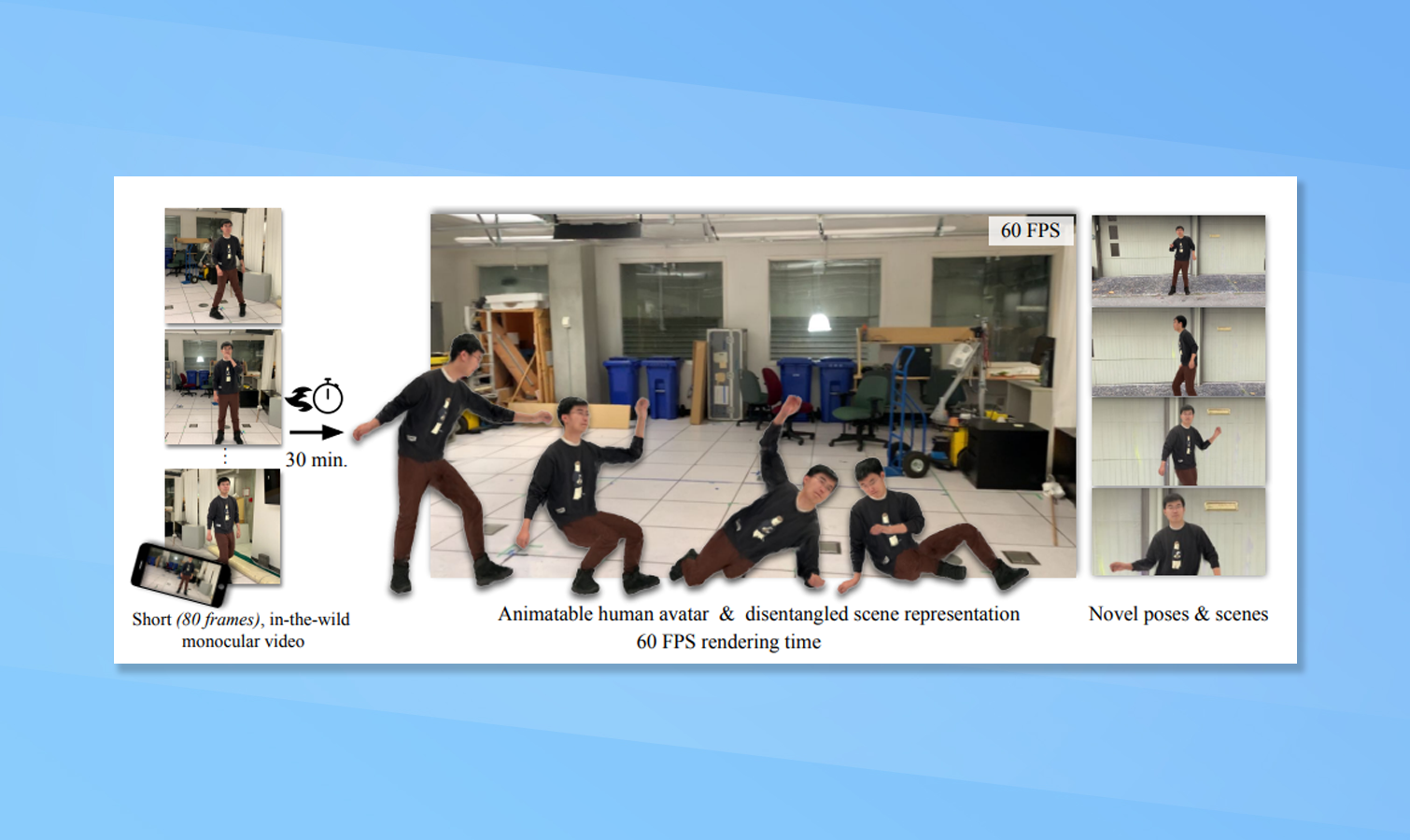

HUGS, or Human Gaussian Splats, is a machine learning method that takes real-world footage of a person, builds an avatar from that footage, and places the avatar into a virtual environment.

Apple's big breakthrough is creating the character from as few as 50 frames of video in about 30 minutes, significantly faster than other methods.

The company claims HUGS produces "state-of-the-art" quality animated renderings of both the person and the scene shown in the video, in high definition and at 60 frames per second.

Once the avatar has been created, it could be placed in other scenes or environments, animated however the user wants, and even used to create new dance videos.


What are the use cases for HUGS?

Imagine you have your Vision Pro headset on (or a cheaper version rumored to be coming next year) and you're playing a third-person game like GTA 6 or Assassin's Creed.

Now imagine that instead of the built-in character, or an avatar that has your face but not your body, you have a digital clone of your whole self.

That is already possible, but it requires long processing times or expensive cameras. If HUGS becomes more than a research project, it could turn into a native visionOS feature: you upload a video of yourself and it turns you into a character that can be used in a game.

When will HUGS be available?

HUGS is unlikely to be available anytime soon. This is another Apple research paper, and while the company might be incorporating aspects of it behind the scenes, it is early-stage work.

What we might see at WWDC in 2024 is a form of this technology made available to developers building apps and interfaces for the Vision Pro.

The reality is that, for now, Apple’s Digital Personas are likely to be head-and-shoulders avatars that sit in the frame of a FaceTime call. But HUGS is a good indication of where the company is heading.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?