I got an exclusive look at Nvidia’s RTX 50-Series GPUs — 5 big reveals

PC gaming’s next generation is here, and I got to check it out!

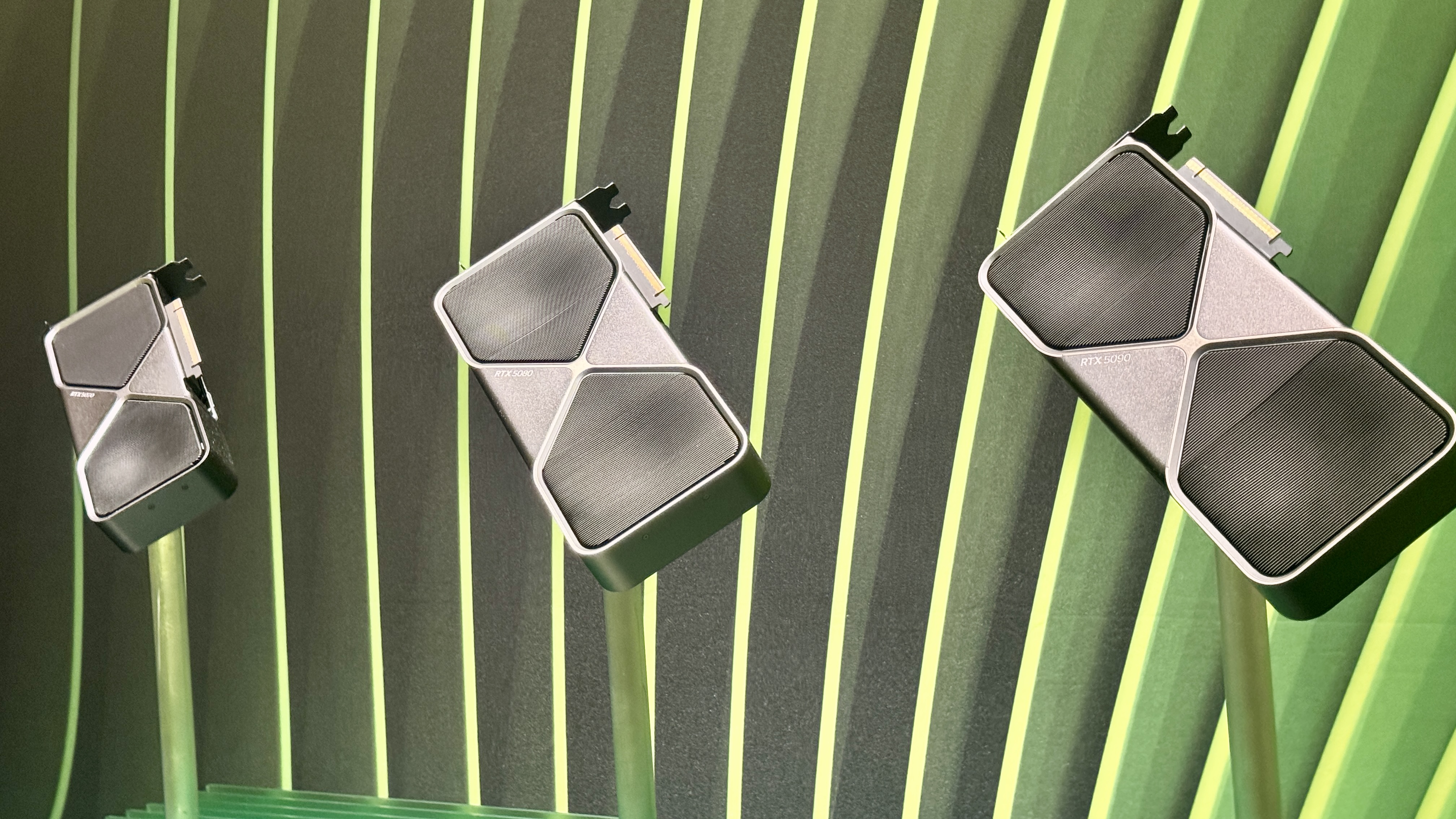

Nvidia’s RTX 50-series GPUs were easily the biggest news of CES 2025 — ushering in the next generation of PC gaming with hardware upgrades and impressive AI-driven improvements.

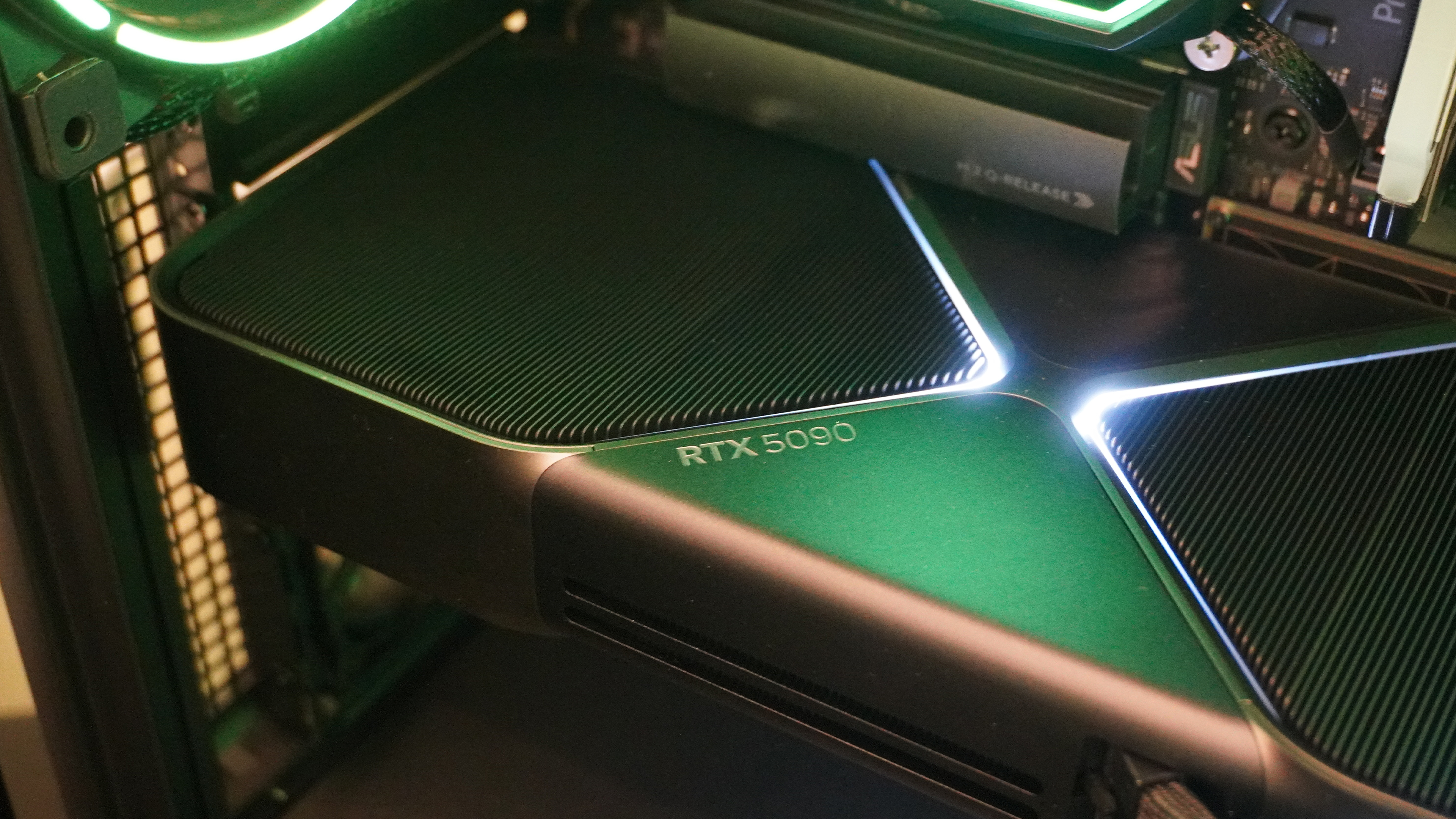

But in terms of going hands-on, we’ve only been able to go skin deep. Sure, I got to try out the RTX 5090 with Black Myth: Wukong and Black State, but one of those demos only showed the frame rate, and the other had no on-screen data at all, so we just had to guess.

So to get more time and experience with these new cards, I got to go behind the scenes at a private Nvidia event to find out a whole lot more about the new RTX Blackwell Architecture, and even see some particularly strenuous tests.

Cyberpunk 2077 at over 200 frames per second (FPS)

With 4K 240Hz monitors very much being a thing (I got to try Asus' impressive option out too), Nvidia’s new GPUs are targeting this standard. In the slides shown to us, internal benchmarking suggests the company hit it, and in my own time with it, I can confirm that is absolutely the case.

So what is the first demo I rushed to for verification of this? Cyberpunk 2077 has become the 2020s equivalent of “can it run Crysis?” Of course I made a beeline over there to see what the new GPUs can do, and to say I was blown away would be an understatement.

This is at maxed-out settings, and sprinting around Night City, I saw a maximum of around 265 FPS and a low of 245 FPS. Every detail is crisp, the fidelity is incredible and the smoothness is fantastic without much in the way of noticeable latency, all thanks to DLSS 4 (more on that later).

On top of that, I also saw Black Myth: Wukong and Black State either come damn close to or even exceed this 240Hz target at 4K max settings.

One thing to note is that changing between the various quality and performance implementations of DLSS (quality = better picture with fewer frames, and performance = prioritizing frame rate) only resulted in a 10-15 FPS difference in my hands-on experience.

DLSS 4 is the key

So with the RTX 50-series GPUs comes Deep Learning Super Sampling (DLSS) 4 — AI game enhancement tech that uses a machine learning model trained on the game to boost resolution and improve frame rates, while easing pressure on the GPU.

Yes, these features are also coming to older GPUs (I’ve popped the graph below to show you support all the way back to RTX 20 series), but you’re getting everything with the 50s.

The secret sauce to this is changing from a convolutional neural network (CNN) to a transformer model. Let me explain: in older versions of DLSS, the CNN works to find patterns in the in-game image to do things like sharpen up the graphics and increase the frame rate.

A CNN relies on the layers it sees before it, and while it's a solid system, there are some issues that come from it like ghosting (seeing the outline of a fast-moving item on screen follow behind it).

Meanwhile, a transformer model is similar to what you see in the likes of ChatGPT and Google Gemini, and Nvidia is using this for DLSS 4. According to Nvidia, this “enables self-attention operations to evaluate the relative importance of each pixel across the entire frame and over multiple frames.”
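If “self-attention” sounds abstract, here is a minimal sketch of the general idea in Python. To be clear, this is not Nvidia’s model: it is textbook scaled dot-product attention with no learned weights, and the `pixels` values and function names are hypothetical, made up purely for illustration. Each “pixel” is scored against every other one, and those scores decide how much each contributes to the result.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features):
    """Scaled dot-product self-attention over a list of feature vectors.

    Simplification: each vector acts as its own query, key and value.
    A real transformer learns separate projections for each role.
    """
    dim = len(features[0])
    scale = math.sqrt(dim)
    all_weights, outputs = [], []
    for query in features:
        # Score this "pixel" against every pixel, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in features]
        weights = softmax(scores)
        # Blend all feature vectors according to those weights.
        mixed = [sum(w * vec[i] for w, vec in zip(weights, features))
                 for i in range(dim)]
        all_weights.append(weights)
        outputs.append(mixed)
    return outputs, all_weights


# Hypothetical features: two similar "pixels" and one very different one.
pixels = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(pixels)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one pixel “attends” to the others. Similar pixels end up with larger weights for each other, which is the “relative importance” part of Nvidia’s description.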

Put simply, this new version of DLSS is able to think a few more steps ahead. No, it’s not predicting the future like CEO Jensen Huang claimed in an interview recently, but the end result of moving to a more intelligent transformer is drastically better frame rates and image quality, while also tackling some of the key gripes I had with older versions.

Heading back to that Cyberpunk demo, for example, the neon holograms are the enemy of older DLSS versions and AMD’s FSR tech. It’s been challenging for any CNN to render them fully without flickering and fuzzy textures, as they are a tricky mix of frame generation, upscaling and ray tracing to predict.

With DLSS 4, however, 99% of that is gone. You’ll have to be looking hard to find anything wrong. The leaves on this holographic tree are completely clear and there is zero sign of any flickering around it. The only spots I saw were some slight jitters on in-game HUD elements and the tiniest bits of ghosting around bright screens in especially dark areas.

But make no mistake about it: there is a marked upgrade here. The Super Resolution and Ray Reconstruction transformers really help give even the tiniest of details a real sense of existence in these in-game worlds, while massively upping the FPS. And on top of that, the new transformer model is 40% faster and uses 30% less video memory. That means Nvidia can redeploy that memory to other things.

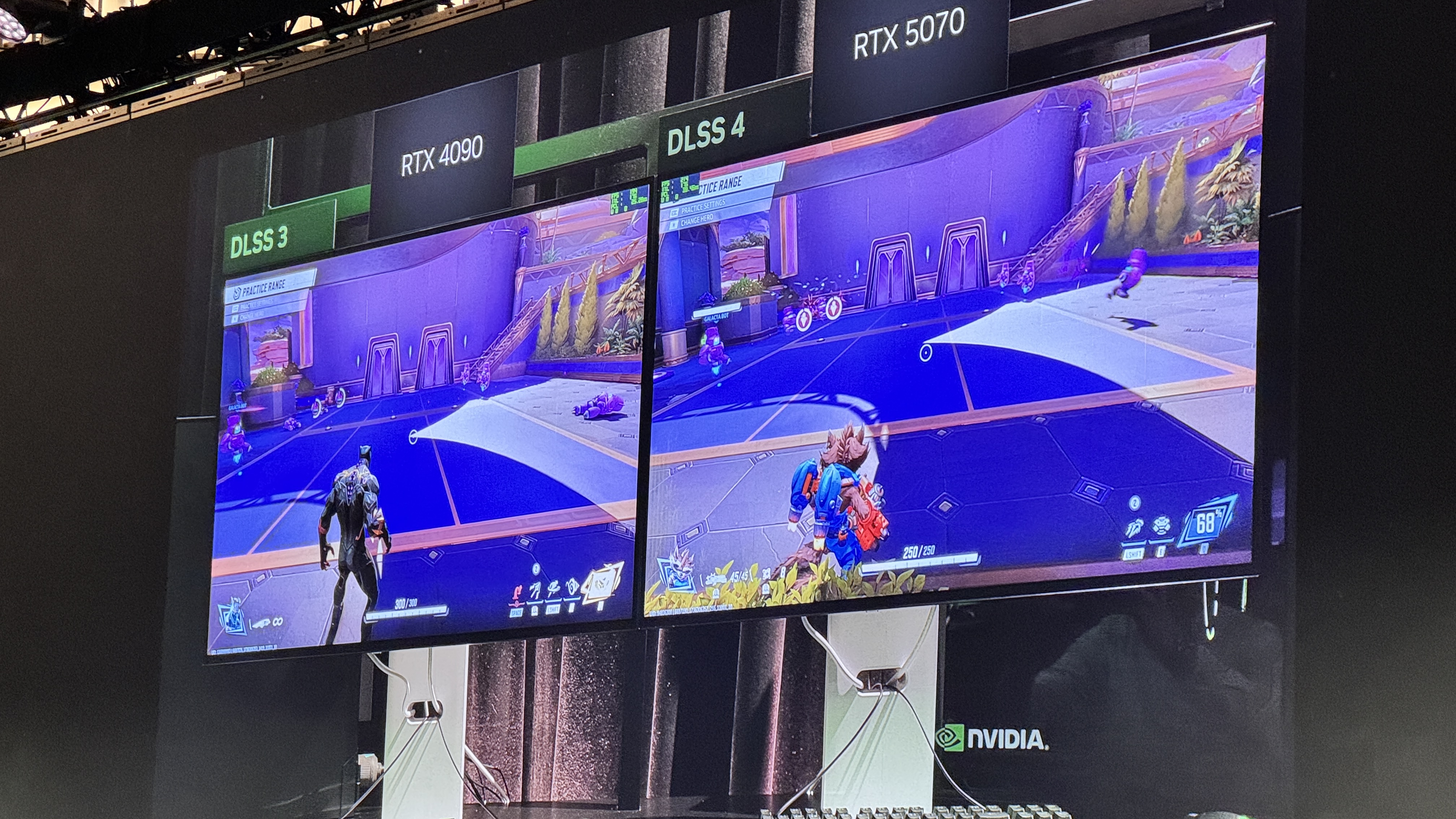

4090 performance in RTX 5070? There are strings attached

Trust me when I say my eyes lit up when I saw Jensen exclaim you’re going to get the performance of the $1,599 RTX 4090 in the $549 RTX 5070. And after talking to our Global Editor-in-Chief Mark Spoonauer who was in the crowd at the conference, the reaction was loud.

This would be huge for the price-to-performance ratio, but of course I needed to see this for myself. And in short, Huang was telling the truth…sort of. It comes down to the Multi Frame Generation aspect of DLSS that is available exclusively on RTX 50-series cards. With DLSS 4 on the 50-series, you’re not limited to generating just one additional frame; that transformer model can now generate three.

For the comparison, Nvidia booted up Marvel Rivals and the frame rate differences are stark. With the RTX 4090 generating one additional frame, you’re seeing around 180 FPS, whereas on the RTX 5070, that goes up to nearly 250 FPS, all on the same graphics settings across both cards (the only difference being Multi Frame Gen).
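To see why this comparison comes with strings attached, it helps to separate the frames the GPU actually renders from the ones DLSS generates. The sketch below is rough back-of-the-envelope arithmetic, not anything Nvidia showed: it assumes every rendered frame is followed by a fixed number of generated ones (one on the RTX 4090, three on the RTX 5070) and ignores any overhead. The function name is my own, and the FPS figures are the approximate ones from the demo.

```python
def rendered_fps(displayed_fps: float, generated_per_rendered: int) -> float:
    """Estimate traditionally rendered frames per second.

    Assumes each rendered frame is followed by a fixed number of
    AI-generated frames, which is a simplification.
    """
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be non-negative")
    return displayed_fps / (1 + generated_per_rendered)


# Approximate figures from the Marvel Rivals demo.
rtx_4090 = rendered_fps(180, generated_per_rendered=1)  # one generated frame
rtx_5070 = rendered_fps(250, generated_per_rendered=3)  # three generated frames

print(f"RTX 4090: ~{rtx_4090:.1f} rendered FPS")
print(f"RTX 5070: ~{rtx_5070:.1f} rendered FPS")
```

Under those assumptions, the RTX 4090 would still be rendering more frames the traditional way, and the RTX 5070’s higher on-screen number would come from generating more frames per rendered one.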

In other games, Nvidia was quick to emphasize that the two GPUs are more equal in terms of frames, and that Marvel Rivals was picked for the demo due to it being exceedingly strong in frame generation.

And there’s the twist. Jensen’s claim is entirely dependent on developers supporting Multi Frame Generation. If the game doesn’t support it, then of course the RTX 4090 with its vast amounts of additional power and VRAM is going to be better.

But I feel conflicted in calling it a twist, because what Nvidia has pulled off here is seriously impressive. Provided that enough developers end up putting Multi Frame Gen in their games, then this could easily be the best GPU you can buy.

RTX is going next-level on AI

If you thought Nvidia was going hard on AI game enhancements before, the company’s taking things up a notch.

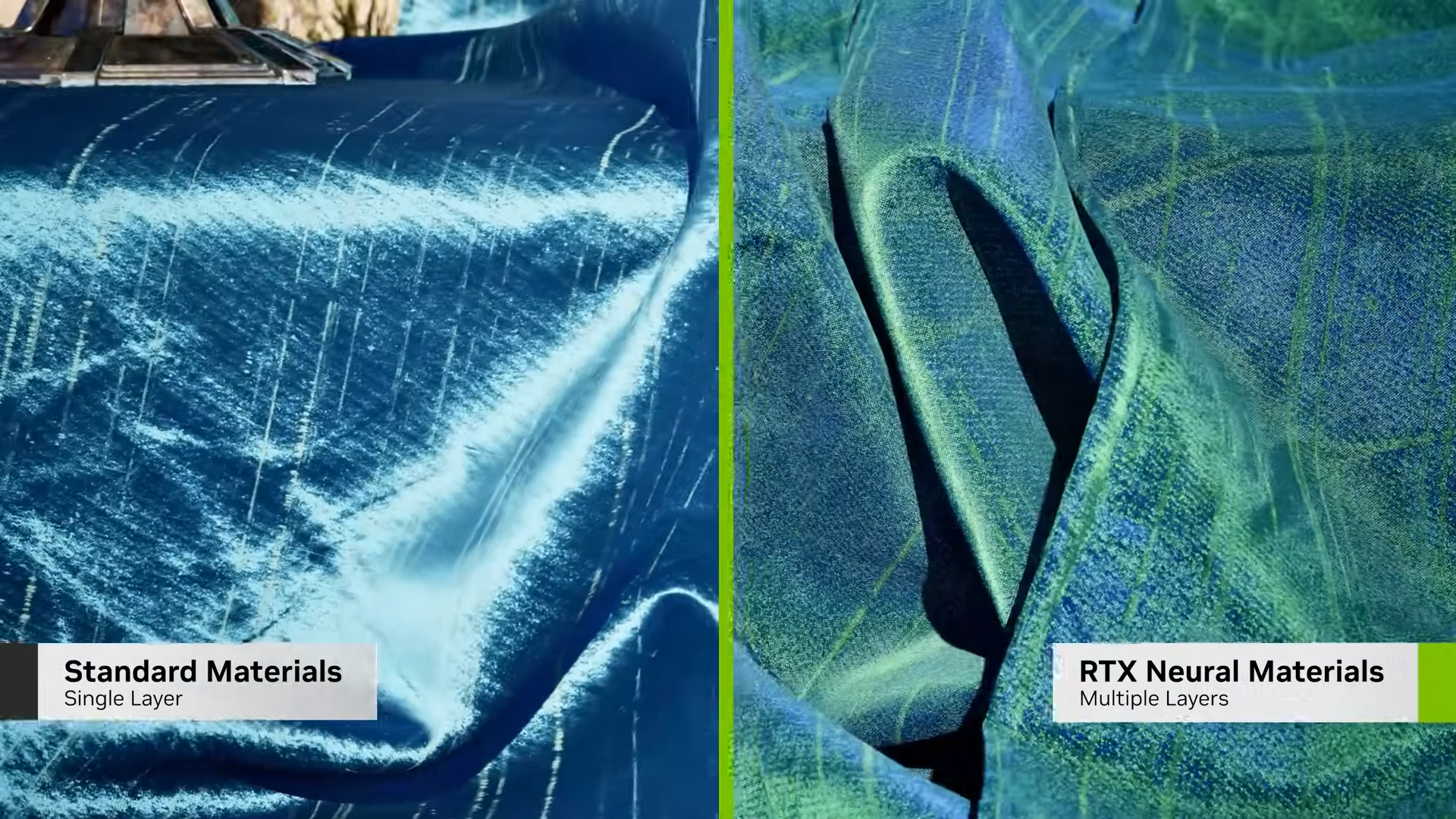

You’re seeing a lot of new RTX features coming from Nvidia with the word “neural” in the name, from Neural Rendering for full ray and path tracing and Neural Shaders using AI to enhance in-game textures, to Neural Faces using generative AI trained on real faces to cross that uncanny valley of facial expressions.

RTX Neural Materials was the first standout feature for me looking at the demos in person. Typically, a game developer will have to bake in the texture of an object or surface and the rules of how it interacts with the rest of the world’s lighting and ambiance. Now, with RTX Neural Materials, AI compresses that code and makes processing of it up to 5x faster.

The end result from what I saw is that multi-layered items like the silk pictured above look dramatically closer to the real thing: rather than just a shiny foil-like piece of cloth, there’s a thread count and the color changes with light diffraction.

Coming along to the party too is RTX Mega Geometry, which massively increases the number of triangles that build everything you see in a game for insane levels of granular detail.

Typically, if a game is adding more detail to the scene, it has to go through a rebuild of all the on-screen elements, which can be very costly to the GPU. It’s something you see happen in cutscenes, as developers up the detail on characters in close-up shots talking, and then reduce that load when you don’t need it.

With Mega Geometry, Nvidia’s offering a way to stream in these details in real-time without needing to rebuild everything else. This means two big things for you, the gamer:

- First, it means your games can hit otherworldly levels of impressiveness. I got to check out the dragon demo in person, and to see this monster rendered at 1 triangle per pixel is truly bonkers.

- Second, it actually eases pressure on the graphics card in some areas while ramping up the demands in other areas — AI is helping the RTX 50-series do more with less.

The typical stat people look for on a spec list of a GPU is GDDR video memory (VRAM) — the memory that stores essential graphical instructions in the background that the game will need to constantly refer back to. These could be anything from textures to lighting, facial expressions to animations. To run most of the AAA games at their highest settings, you need a lot of it, and Nvidia does deliver that on the RTX 5090 with 32GB of the fastest GDDR7.

But what the onboard AI is actually capable of doing here is really quite clever: textures can be compressed to use up to 7x less of that precious video memory, while the Neural Materials feature works in tandem to process materials up to 5x faster.

As for the size of some key textures in the demo Nvidia showed me, they have gone down from a demanding 47MB to 16MB. That’s a huge reduction that frees up the memory needed for Mega Geometry.
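For a sense of scale, here is the quick math on those demo figures. This is just arithmetic on the two numbers Nvidia showed me (47MB and 16MB); the helper name is my own and nothing here reflects how the compression itself works.

```python
def compression_summary(before_mb: float, after_mb: float) -> tuple[float, float]:
    """Return (compression ratio, percent of memory saved)."""
    if before_mb <= 0 or after_mb <= 0:
        raise ValueError("sizes must be positive")
    ratio = before_mb / after_mb
    saved_pct = (1 - after_mb / before_mb) * 100
    return ratio, saved_pct


# Texture sizes from the demo, in megabytes.
ratio, saved_pct = compression_summary(47, 16)
print(f"{ratio:.1f}x smaller, {saved_pct:.0f}% of the memory freed up")
```

That works out to a little under 3x for these particular textures, which sits comfortably within the “up to 7x” figure rather than at the top of it.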

It became abundantly clear that Nvidia’s taking the “work smarter, not harder” approach, and while I know there are going to be some PC gaming purists who would prefer to turn off all these AI features and just have pure rendering on the GPU itself (I saw you in the comments), this is the direction the company has decided to take. And based on what I’ve seen, I think they’re on the right track.

Latency kept nice and low

Latency is something you hear a lot about when it comes to Nvidia’s DLSS tech. While the frame generation technique in the background does make the game look smoother, it does not alter latency, since it’s a piece of AI trickery rather than brute forcing the issue with raw horsepower on the GPU.

So watching DLSS 4 generate an extra two frames beyond the one of DLSS 3 did ring some alarm bells amongst us at the event: sticking more frames in there could cause additional latency.

However, those fears were quickly put to bed by running around Night City. From what I saw, those two extra frames in Multi Frame Gen don't add any latency on top of what you saw in DLSS 3.

And this potential issue is a relative one. If you’re playing at a lower framerate with DLSS, you’re probably going to notice it more as the latency time between frames increases. But if you’re going for the 100+ FPS target (and let’s be honest, that’s probably what you’re going to get pretty much all the time), then most people will not notice it.
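To put some numbers on why frame rate matters here, the gap between frames shrinks quickly as FPS climbs. The snippet below only converts a frame rate into time per frame; it is not a measurement of total system latency (which also includes input, rendering and display delays), and the frame rates in the loop are just examples I picked.

```python
def frame_time_ms(fps: float) -> float:
    """Time between displayed frames, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Example frame rates, from a struggling 30 FPS up to the 240Hz target.
for fps in (30, 60, 100, 240):
    print(f"{fps:>3} FPS -> {frame_time_ms(fps):.2f} ms per frame")
```

At 30 FPS, each frame sits on screen for over 33 milliseconds, while at 100 FPS it is 10 milliseconds, which is a big part of why any added delay is harder to spot at higher frame rates.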

But there is an additional weapon in Nvidia’s arsenal in the way of Reflex 2, the second-generation latency-reducing tech that aims to make games more responsive. This one is definitely more for the esports crowd looking to shave off those milliseconds, but in my time with The Finals, the latency was so small!

And the way it’s being pulled off is seriously awesome. The GPU can analyze what the next frame will be based on what your mouse and keyboard inputs are, while ensuring a precise synchronization of rendering graphics across your whole PC. If competitive gaming is your thing, this is a generational step forward for reducing latency.

Outlook

And that, in a generously sized nutshell, is what RTX 50-series is all about: big, impactful changes to the way gaming graphics work to usher in the next generation. Games of today will be able to render faster, look sharper and run smoother. And games of the future could be packed with so much detail it would take you pressing your face on the screen to actually find the pixels.

Plus, while conversation around DLSS may lean towards it being a workaround in the more power-hungry areas of the PC gaming community, over 80% of RTX players activate it while gaming — a huge adoption statistic that may suggest this has all been a bit blown out of proportion.

Trust me, for that 80+% of you out there, you’ll love what Nvidia’s cooking here.

Jason brings a decade of tech and gaming journalism experience to his role as a Managing Editor of Computing at Tom's Guide. He has previously written for Laptop Mag, Tom's Hardware, Kotaku, Stuff and BBC Science Focus. In his spare time, you'll find Jason looking for good dogs to pet or thinking about eating pizza if he isn't already.