iPhone 12 Pro and LiDAR — why this exciting tech will beat Galaxy Note 20

Here’s how a LiDAR scanner could supercharge AR in the iPhone 12

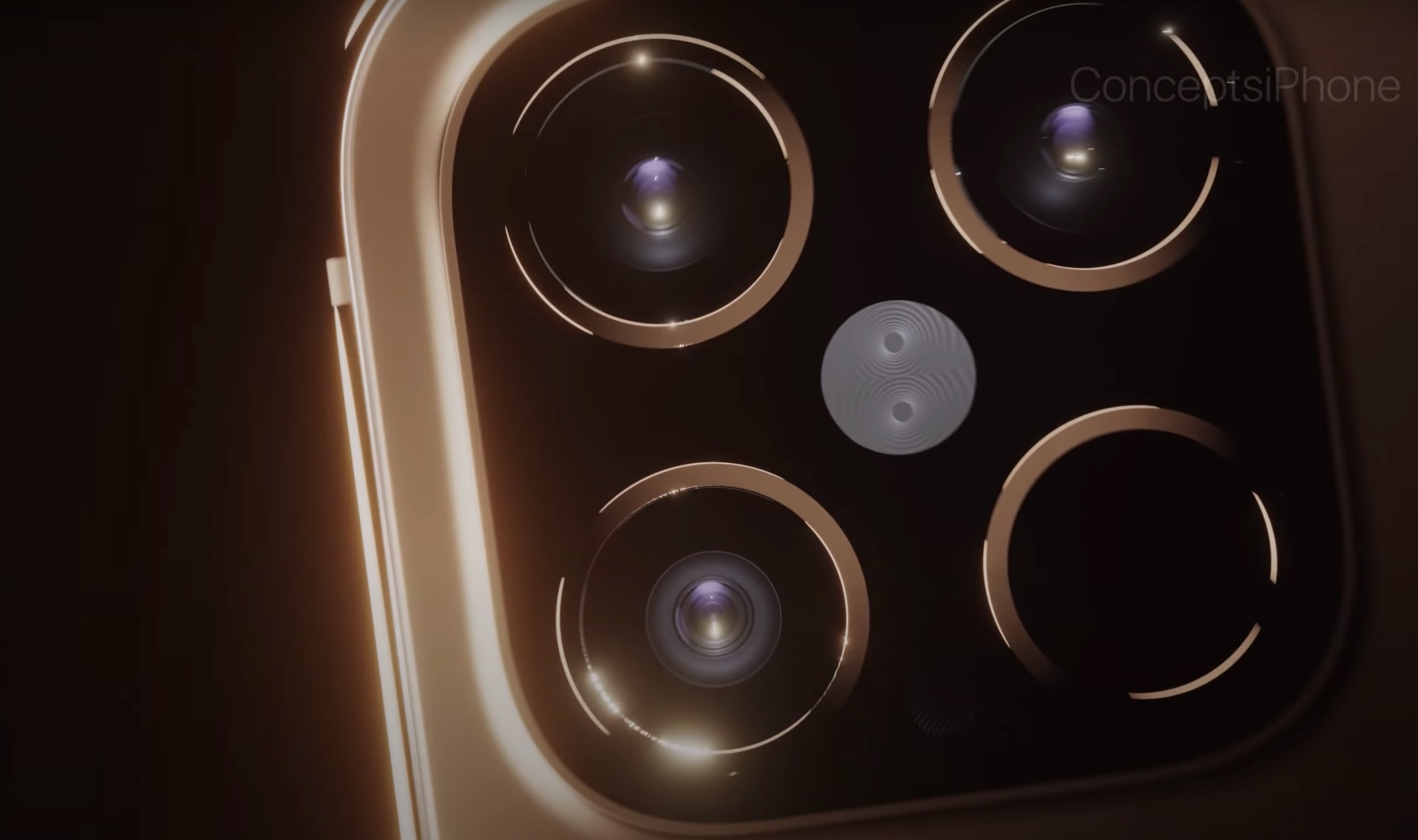

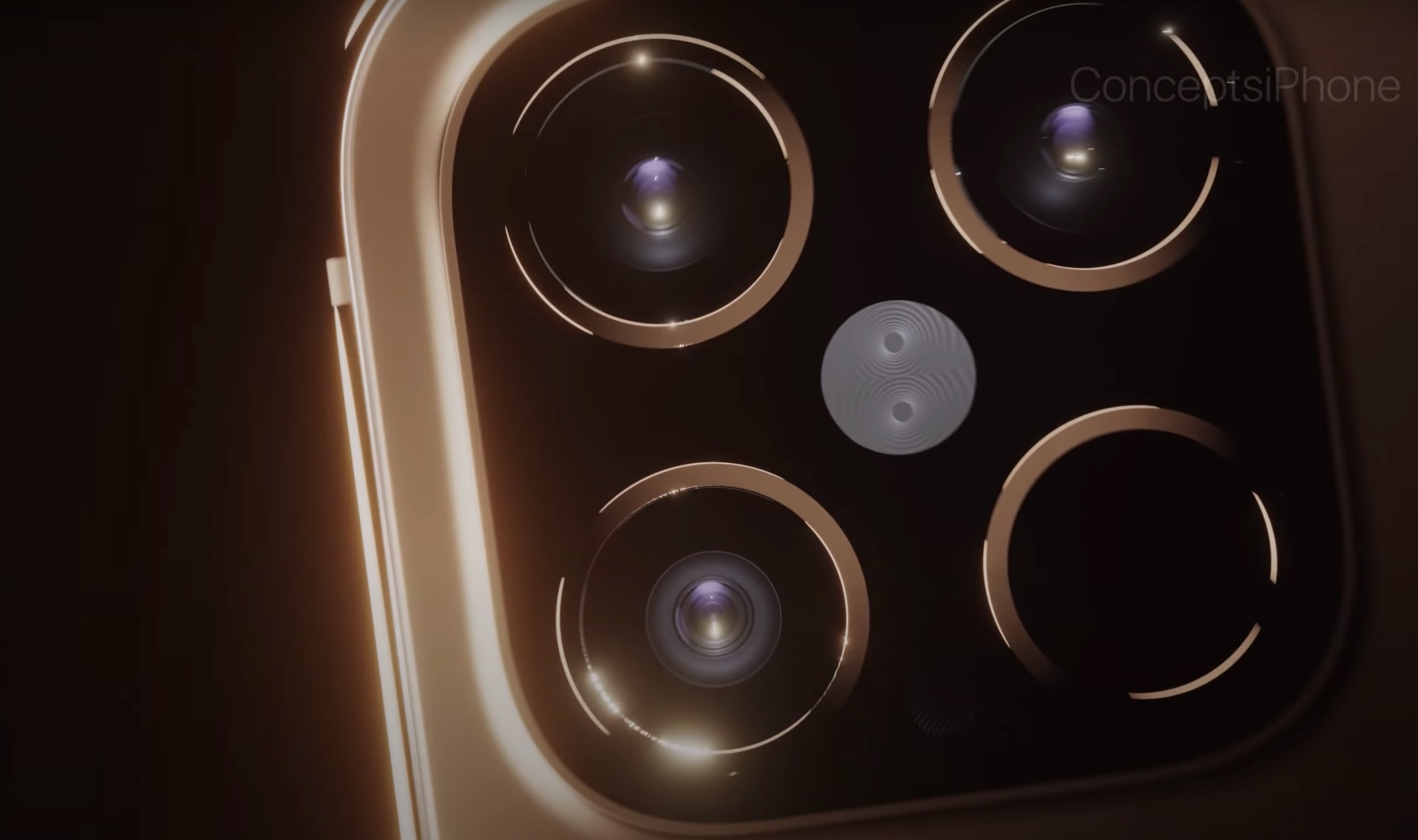

For a while now the iPhone 12 Pro, or the iPhone 12 Pro Max at least, has been tipped to get a LiDAR sensor much like that found in the rear camera array of the iPad Pro 2020.

It might seem like an odd move to put technology commonly used in vehicle navigation into a smartphone. But phone camera tech is so good these days that phone makers are looking for new ways to improve it, and that’s what Apple appears to be doing with its foray into LiDAR tech.


So you’re still wondering just what the heck a LiDAR sensor is, and why an iPhone or iPad would need one? Allow us to explain.

What is a LiDAR scanner?

In short, LiDAR stands for Light Detection and Ranging. It’s basically a way of using lasers to figure out distances and depths. The scanner fires out laser pulses, times how long each one takes to bounce back to the sensor, then uses that round-trip time to work out the distance and depth of whatever the light bounced off. All this happens very quickly: not quite at the speed of light, but far faster than you could guess a distance by eye.
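To make the timing idea concrete, here is a minimal sketch of the arithmetic behind a single time-of-flight measurement. It is not Apple's implementation, and the function names are made up for illustration; it only assumes that the pulse travels to the object and back at the speed of light, so the one-way distance is half the round trip.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    Hypothetical helper: the pulse covers the distance twice (out and back),
    so the travelled path is halved.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def round_trip_for_distance(distance_m: float) -> float:
    """Return how long a pulse takes to reach a surface and come back."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A wall 5 metres away returns the pulse after roughly 33 nanoseconds.
    t = round_trip_for_distance(5.0)
    print(f"round trip: {t * 1e9:.2f} ns")
    print(f"distance:   {distance_from_round_trip(t):.2f} m")
```

The tiny numbers involved are the point: at room scale the sensor is timing pulses in billionths of a second, which is why this kind of hardware needs very precise timing electronics.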

LiDAR has been used in aircraft, space, and military applications for ages. But more recently it’s popped up in consumer gadgets. If you have a robot vacuum cleaner, then that probably uses LiDAR to work out where it’s going. And LiDAR is one of the major ways driverless cars and self-driving systems navigate roads safely.

As the tech improves and the computational power in smartphones and tablets gets boosted, it’s possible to equip them with LiDAR sensors.

Why use LiDAR in smartphones?

Phones like Samsung’s Galaxy S20 Ultra and Galaxy Note 10 Plus have time-of-flight (ToF) sensors that measure how long it takes infrared light to bounce back to the sensor. That allows for greater depth sensing.


So you might ask why a LiDAR scanner is needed on the current iPad Pro and likely the iPhone 12 Pro. Well, the latest LiDAR systems use multiple laser pulses to effectively scan parts of an environment very quickly, whereas ToF sensors tend to use a single beam of infrared light.

As a result, LiDAR systems effectively scan more of the scene and take in more data about whatever the scanner is pointed at. Better range and more scanning potential mean LiDAR is better at detecting an object in front of or behind other objects. Known as ‘occlusion,’ this process allows LiDAR to build a richer picture of the environment being scanned.

And thanks to the use of computer algorithms and computational photography, LiDAR could deliver photos with a deeper sense of depth. But this isn’t something we’ve seen in the iPad Pro, which doesn’t even support a portrait mode with its rear-camera array.

Really, LiDAR on the iPad Pro is more about improving augmented reality experiences and apps. The greater scanning potential means virtual objects can be more realistically superimposed over a real environment, and real objects can be digitally measured more accurately.
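As a rough illustration of why depth data matters for placing virtual objects, here is a toy occlusion check. It is a simplified sketch rather than how ARKit works internally: the function name and the one-row "image" are invented for the example, and it assumes the scanner supplies a distance in metres for every pixel.

```python
from typing import Optional, Sequence


def composite_row(
    real_depths_m: Sequence[float],
    virtual_depths_m: Sequence[Optional[float]],
) -> str:
    """Decide, pixel by pixel, whether the virtual object or the real scene shows.

    real_depths_m: distance to the nearest real surface at each pixel.
    virtual_depths_m: distance to the virtual object at each pixel,
        or None where the object does not cover that pixel.
    Returns a string with 'V' for virtual pixels and 'R' for real ones.
    """
    if len(real_depths_m) != len(virtual_depths_m):
        raise ValueError("both rows must have the same number of pixels")

    pixels = []
    for real, virtual in zip(real_depths_m, virtual_depths_m):
        # Draw the virtual object only where it is closer than the real surface.
        if virtual is not None and virtual < real:
            pixels.append("V")
        else:
            pixels.append("R")
    return "".join(pixels)


if __name__ == "__main__":
    # A wall 2 m away, with a real chair 0.8 m away covering the right half.
    real = [2.0, 2.0, 0.8, 0.8]
    # A virtual side table placed 1.5 m away, spanning the middle two pixels.
    virtual = [None, 1.5, 1.5, None]
    print(composite_row(real, virtual))
```

In this made-up scene the virtual table appears in front of the wall but is hidden behind the chair. The more accurate the depth map, the cleaner that boundary looks.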

While we found the LiDAR scanner in the iPad Pro to work well with some AR apps, like IKEA Place, it doesn’t allow 3D objects to be scanned with enough accuracy to, say, send that data to a 3D printer for replication, something that would certainly make the iPad Pro more ‘Pro.’

And the range of apps that can tap into the LiDAR tech on the iPad Pro is pretty slim. The developers of the Halide camera app did a deep dive into the iPad Pro’s LiDAR system and noted there are no APIs that let developers access data below the level of the scanned 3D surface.

Apple does have its ARKit to provide developers with a framework to create AR apps for its devices. But so far AR apps don’t seem particularly prolific.

Why put LiDAR into the iPhone 12 Pro?

The answer to that isn’t easy, as the use of LiDAR in smartphones and other mobile gadgets is a bit of a chicken and egg situation. Without a slew of AR apps, clever LiDAR tech could be wasted. But without the extra sensing power of a LiDAR system, AR apps may end up being lackluster experiences.

So putting a LiDAR scanner into the iPhone 12 Pro, which is very likely to sell a lot more than the iPad Pro, is a case of ‘if you build it, they will come.’ With an iPhone 12 Pro with a LiDAR sensor in the hands of hundreds of thousands of people, developers may be more compelled to create AR apps that work with LiDAR.

A recent report detailed how the iPhone 12 Pro will use a LiDAR sensor provided by Sony. And through the use of light pulses, the LiDAR sensor will measure the distances of objects the phone is pointed at.

At its most basic, this use of LiDAR should enable better Portrait mode shots, with the extra depth data allowing for better blurring of backgrounds while keeping the subject of the shot in clear focus. Potentially this could help the iPhone 12 Pro deliver shots that are up there with DSLR cameras, a milestone that smartphone photography has yet to truly reach.

The LiDAR sensor should also be able to help with video, as it would be able to track the depth of objects or subjects as they move in the frame.

In the short term, this could mean more AR tools for measuring rooms, seeing what a corner might look like if a side table were added to it, or seeing what your friend might look like in a new jacket. And AR games would feel a lot less clunky and more compelling.

Over time, if the tech really takes off in smartphones, we could then see apps that allow you to scan an object to be remade in a different material or scan 3D models to be sent to manufacturers to build. There’s a real chance that LiDAR could enable a whole range of creative apps and services.

The iPhone 12 will have some serious camera competition from the upcoming Samsung Galaxy Note 20, which is expected to pack a similar camera array to the Galaxy S20 series. That means it could include a 12MP ultrawide camera, a 48MP telephoto lens and a whopping 108MP wide lens complete with a time-of-flight sensor. But the iPhone 12's LiDAR scanner could allow for those special experiences that go beyond raw resolution or power.

Of course, this is all speculation based on the rumor that the iPhone 12 Pro will indeed have a LiDAR scanner; Apple could simply opt to put a new lens in the rear-camera array instead. But it offers us a glimpse at how LiDAR can further make its way out of industrial and aeronautical machines and into your very hands.

Roland Moore-Colyer is a Managing Editor at Tom’s Guide with a focus on news, features and opinion articles. He often writes about gaming, phones, laptops and other bits of hardware; he’s also got an interest in cars. When not at his desk, Roland can be found wandering around London, often with a look of curiosity on his face.