I tried Runway's upgraded motion brush and it's easier than ever to create compelling AI video

AI video is easier to control than ever

Artificial intelligence video startup Runway has launched a new tool within its AI video generator that lets you create more advanced motion within a video.

Runway has been gradually improving its feature set since the launch of the Gen-2 text-to-video and image-to-video model last year. The new Multi Motion Brush works only with an image as the initial input, but it lets you define different areas to animate.

This addresses one of the biggest problems with generative AI video tools: the lack of control over how different objects within a video interact with each other or their surroundings.

One example is a group of people playing cards. With a normal AI video model on default settings, you’ll most likely get the camera moving around the table with some basic hand movement — but you could equally get excessive hand movement and hands merging.

How does Runway Multi Motion Brush work?

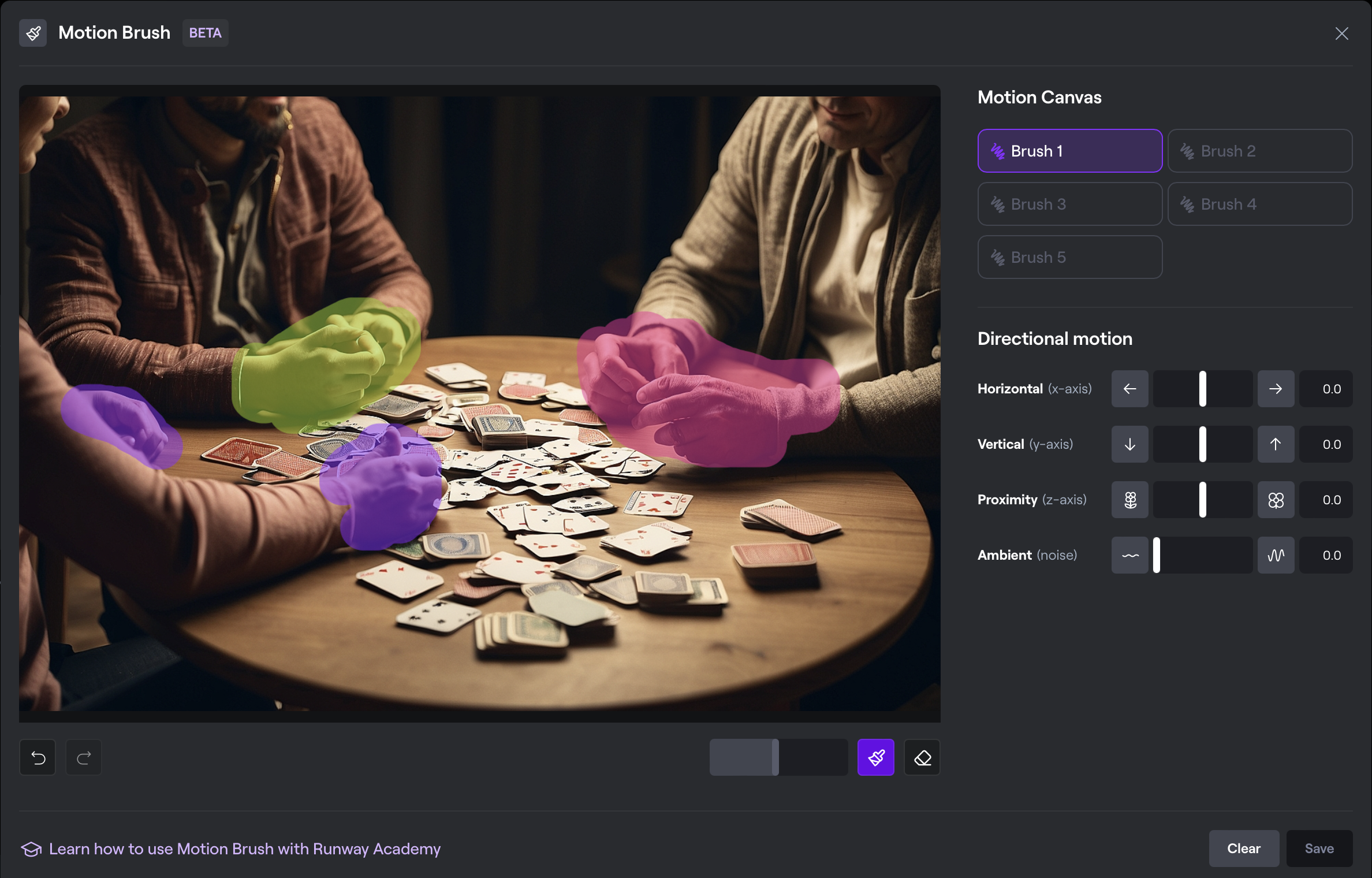

When uploading an image to Runway you can enter the Multi Motion Brush mode and paint up to five regions within the picture, for example the hands of each person at the card table.

As you paint the hands you can then adjust the motion settings for that specific part of the image and Runway will generate that motion independently of the overall video.

Settings include proximity, ambient motion (general noise), and directional movement along the vertical and horizontal axes. These can be set independently for each of the five brushes.
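
To make the idea of independent per-region settings concrete, here is a minimal Python sketch of how the brushes in the card table example could be modelled. It is purely illustrative and is not Runway's API: the class names, field names and value ranges are all assumptions, and only the four setting types and the five-brush limit come from the description above.

```python
from dataclasses import dataclass, field

MAX_BRUSHES = 5  # from the article: up to five painted regions


@dataclass
class BrushSettings:
    """Hypothetical motion settings for one painted region."""
    label: str
    horizontal: float = 0.0  # directional movement, left/right
    vertical: float = 0.0    # directional movement, up/down
    proximity: float = 0.0   # proximity setting (meaning assumed, not documented here)
    ambient: float = 0.0     # ambient motion, i.e. general noise

    def __post_init__(self):
        # Assumed ranges for illustration only: -10..10 for directional
        # settings and proximity, 0..10 for ambient motion.
        for name in ("horizontal", "vertical", "proximity"):
            value = getattr(self, name)
            if not -10.0 <= value <= 10.0:
                raise ValueError(f"{name} must be between -10 and 10, got {value}")
        if not 0.0 <= self.ambient <= 10.0:
            raise ValueError(f"ambient must be between 0 and 10, got {self.ambient}")


@dataclass
class MotionPlan:
    """A set of brushes, each configured independently of the others."""
    brushes: list = field(default_factory=list)

    def add(self, brush: BrushSettings) -> "MotionPlan":
        if len(self.brushes) >= MAX_BRUSHES:
            raise ValueError(f"at most {MAX_BRUSHES} brushes are allowed")
        self.brushes.append(brush)
        return self


# Card table example: each player's hands get their own subtle motion.
card_table = MotionPlan()
card_table.add(BrushSettings("player 1 hands", horizontal=1.5, ambient=0.5))
card_table.add(BrushSettings("player 2 hands", vertical=-1.0, ambient=0.5))

for brush in card_table.brushes:
    print(brush)
```

The point of the sketch is the shape of the data: each region carries its own values, so changing one player's hands leaves every other brush untouched.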

How well does the Multi Motion Brush feature work?

Like any tool, Multi Motion Brush works to your instructions. The better you are at managing the different degrees and types of motion, the better the overall outcome.

However, this is generative AI, and generative AI video in particular, so it has a mind of its own and can do the total opposite of what you wanted or expected.

I uploaded an image showing Jupiter in the background seen from one of its moons. I tried to animate each band and the spot on Jupiter independently, and then add another brush for the flowing liquid on the surface of the moon (I took liberties with reality).

Instead of the individual bands spinning at different speeds and in different directions, the entire planet moved off screen like the moon was rotating on its axis at high speed. This is likely as much to do with my clumsy use of the settings as issues with the tool, but it was fascinating.

Generative AI video has advanced rapidly in under a year. We've gone from a handful of experiments running in Discord to fully functional products with intricate controls. What will be fascinating is to see where it develops over the next 12 months.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?