I've spent 200 hours testing the best AI video generators: here are my top picks

From anime to photorealism

The journey began in February 2023, when Runway introduced Gen-2, the first commercially available AI video generator, which moved out of its initial Discord-based testing phase. Pika Labs quickly followed with Pika 1.0, and several services based on Stable Video Diffusion emerged. A significant breakthrough came in February 2024, when OpenAI unveiled Sora and demonstrated that extensive computational resources and vast training datasets are crucial for achieving realism and fluid motion in AI-generated videos.

Sora is now publicly available, albeit in a more limited form than initially anticipated, and several other models now match or surpass OpenAI's flagship. Notably, Runway's Gen-3 Alpha offers major improvements in fidelity, consistency, and motion over its predecessor.

Similarly, Luma Labs' Ray2 introduces advanced capabilities, producing videos with fast, coherent motion and ultra-realistic details, marking a new generation of video models. Additionally, models like Kling and Hailuo MiniMax have emerged, contributing to the diverse and rapidly advancing landscape of AI video generation.

What makes the best AI video generators?


All of the best AI video generators are now as much a “platform” as a place to make a few seconds of motion from text or an image. Most now include some form of motion brush, lip-syncing, a choice of model types and unique features such as keyframing.

Regardless of additional features, a good generative AI video platform needs to be able to create high-resolution clips with clear visuals, minimal artifacts and reasonably realistic motion.

It should also follow the prompt you give it, whether that's text or an image, and offer reasonably quick generation times at a reasonable price.

| Platform | Credits with free plan | Cost of cheapest paid plan | Credits with cheapest paid plan | Commercial use on basic plan? |
|---|---|---|---|---|
| Luma Labs | Limited | $9.99 | 3200/month | No |
| Pika Labs | 150/month | $10 | 700/month | No |
| Runway | 125 total | $15 | 625/month | Yes |
| Haiper | 10/day | $10 | Unlimited | No |
| Kling | Login bonus | $10 | 660 | Yes |
| Sora | N/A | $20 | 50 videos/month | Yes |
| Hailuo | Purchasable | $14.99 | 4000/month | Yes |

Tips for generating video with AI

Creating video content with AI isn’t ‘that’ different to creating AI images. You need to be descriptive and paint a picture with words. The biggest difference is that you also need to specify motion, describing how the scene and the objects in it should move.

The best way to utilize these tools, especially the more advanced ones capable of 10 or more seconds of video from a single prompt, is to use cinematography language. Describe the placement and motion of the camera, outline lighting and explain scene changes if needed.

For example, you could create a video of a couple dining by describing the camera slowly panning from a wide shot of the room to a close-up of their smiles and gestures. Add details like warm candlelight, a softly blurred cityscape through the window, and natural movements like one pouring wine while the other laughs.

You could use this prompt: “A cozy restaurant with dim, golden lighting. The camera begins with a wide shot, capturing the elegant dining room and softly blurred cityscape through the window. It slowly pans towards a couple at a table, smiling and laughing, as one reaches out to pour wine into the other’s glass. The warm candlelight flickers gently on their faces, creating an intimate and inviting mood.”

- Use Cinematic Language: Include film terms to help guide the AI such as camera angles, movements and lighting

- Specify Motion and Actions: Describe how elements within the scene should move including objects and characters

- Define the Environment and Atmosphere: Use detailed descriptions of the setting to set the context and mood including lighting, weather and background items

- Maintain Temporal Consistency: Set a logical sequence of events that are coherent and match the progression of the video and action you want to see

- Iterate and Refine Prompts: Experiment with different prompt structures and details to achieve the desired outcome. Review the generated videos and adjust your prompts accordingly to improve quality and relevance. This iterative process helps in fine-tuning the AI's output to match your vision.
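Taken together, these tips amount to a template: set the scene, describe the camera, then list the motion in order. As a rough illustration, here is a minimal Python sketch of that structure. `VideoPrompt` is a hypothetical helper invented for this example, not part of any platform's API; it simply joins the pieces into one text prompt (reusing the restaurant example) that you would then paste into whichever generator you're using.

```python
from dataclasses import dataclass, field


@dataclass
class VideoPrompt:
    """Hypothetical helper: holds the prompt ingredients from the tips above."""

    environment: str  # setting and atmosphere: lighting, weather, background
    camera: str  # cinematic language: shot type and camera movement
    actions: list[str] = field(default_factory=list)  # motion, in the order it should happen

    def render(self) -> str:
        # Set the scene first, then the camera, then each action in sequence,
        # so the prompt reads like a shot description from start to finish.
        parts = [self.environment, self.camera, *self.actions]
        sentences = []
        for part in parts:
            part = part.strip()
            if not part:
                continue  # skip empty ingredients
            # Make sure each ingredient ends as a complete sentence.
            sentences.append(part if part.endswith(".") else part + ".")
        return " ".join(sentences)


prompt = VideoPrompt(
    environment="A cozy restaurant with dim, golden lighting and a softly blurred cityscape through the window",
    camera="The camera begins with a wide shot of the dining room, then slowly pans towards a couple at a table",
    actions=[
        "They smile and laugh as one reaches out to pour wine into the other's glass",
        "Warm candlelight flickers gently on their faces, creating an intimate mood",
    ],
)
print(prompt.render())
```

Keeping the actions in a list makes their order explicit, which is one simple way to keep the sequence of events logical, as the temporal consistency tip suggests.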

My favorite AI video platforms

I’ve pulled together a selection of the best AI video platforms I’ve used over nearly two years. For each one, I’ve generated a video with the same prompt to show how their quality compares.

The list only includes models I’ve personally tried and put to the test. It also only features synthetic video models, excluding avatar models like Synthesia and HeyGen.

The prompt for the videos I've shared with each of these entries is: "A lone cyclist on an empty rural road at golden hour, the light casting long shadows on the asphalt. Surrounding fields of tall grass glow with a warm orange hue, and the cyclist, in a bright jersey, rides steadily toward the camera. Dynamic perspective with cinematic depth."

Best for Visual Realism

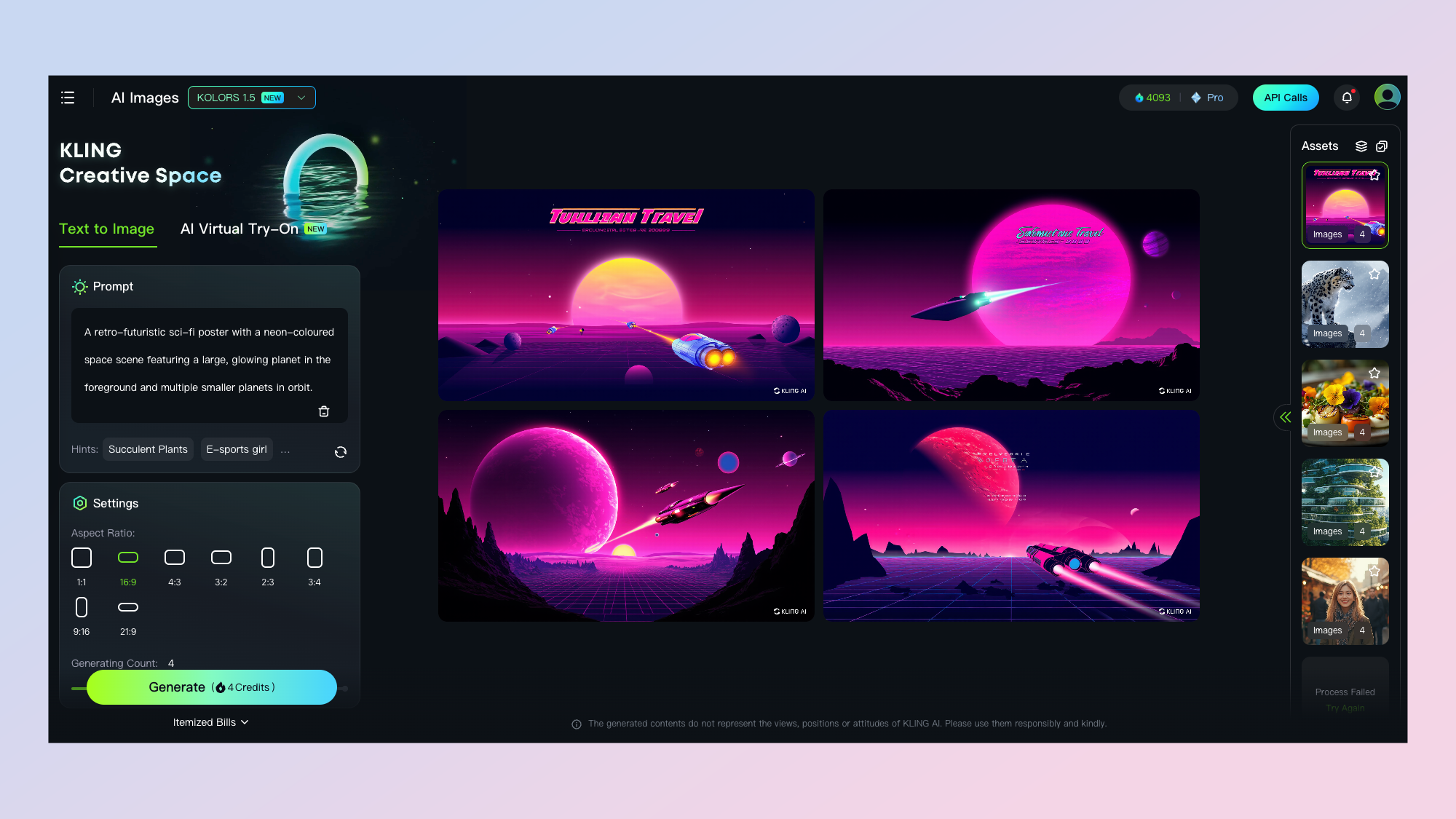

Kling


Kling is one of the best AI video models currently available, excelling in visual realism and smooth motion. It offers advanced features like lip-syncing for dialogue, virtual try-on tools for fashion applications, and, at least for the older model versions, the ability to extend clips.

According to Kling, the latest release has an uncanny ability to follow complex instructions, including specific camera movements, timing changes and the visual structure of the scene. Kling 1.6 offers improved sharpness and clarity, eliminating the blurriness of earlier versions, while more accurate color and dynamic lighting adjustments produce more lifelike visuals. Motion is also smoother and more natural, particularly in scenes involving water, fire and human activity.

Further enhancements include custom face model training for consistent characters across multiple videos, more refined facial expressions that capture subtle emotions and movements, and improved lip-syncing that aligns mouth movements accurately with speech.

Kling has also added new creative controls. A "creativity slider" lets users balance strict prompt adherence against artistic interpretation, and a one-click feature extends generated videos by an additional 4.5 seconds while keeping the content flowing coherently.

I’ve found that Kling videos tend to look more real, with better texturing and lighting than other models and more consistent motion. It still falls foul of many of the same issues around artifacts, people merging and subtle motion difficulties, but overall it produces good results more often than its rivals.

Built by the Chinese video platform company Kuaishou, Kling also comes with the KOLORS image model. You can generate images for a fraction of the cost to get an idea of how the final visual might look if you decide to then turn it into a video.

It comes with a free plan that rewards you with daily credits when you log in, and the standard plan, with 660 base credits, is $5. A professional 5-second video costs about 35 credits, or 20 credits if you don't mind a lower resolution.
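As a rough guide to what those credits buy, assuming the 660-credit allowance and the approximate per-clip costs above, and that leftover credits short of a full clip go unused:

```latex
\left\lfloor \frac{660}{35} \right\rfloor = 18 \text{ professional clips } (18 \times 5\,\text{s} = 90\,\text{s}),
\qquad
\left\lfloor \frac{660}{20} \right\rfloor = 33 \text{ standard clips } (33 \times 5\,\text{s} = 165\,\text{s})
```

Since the per-clip costs are approximate, treat these as ballpark figures.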

Best for Prompt Adherence

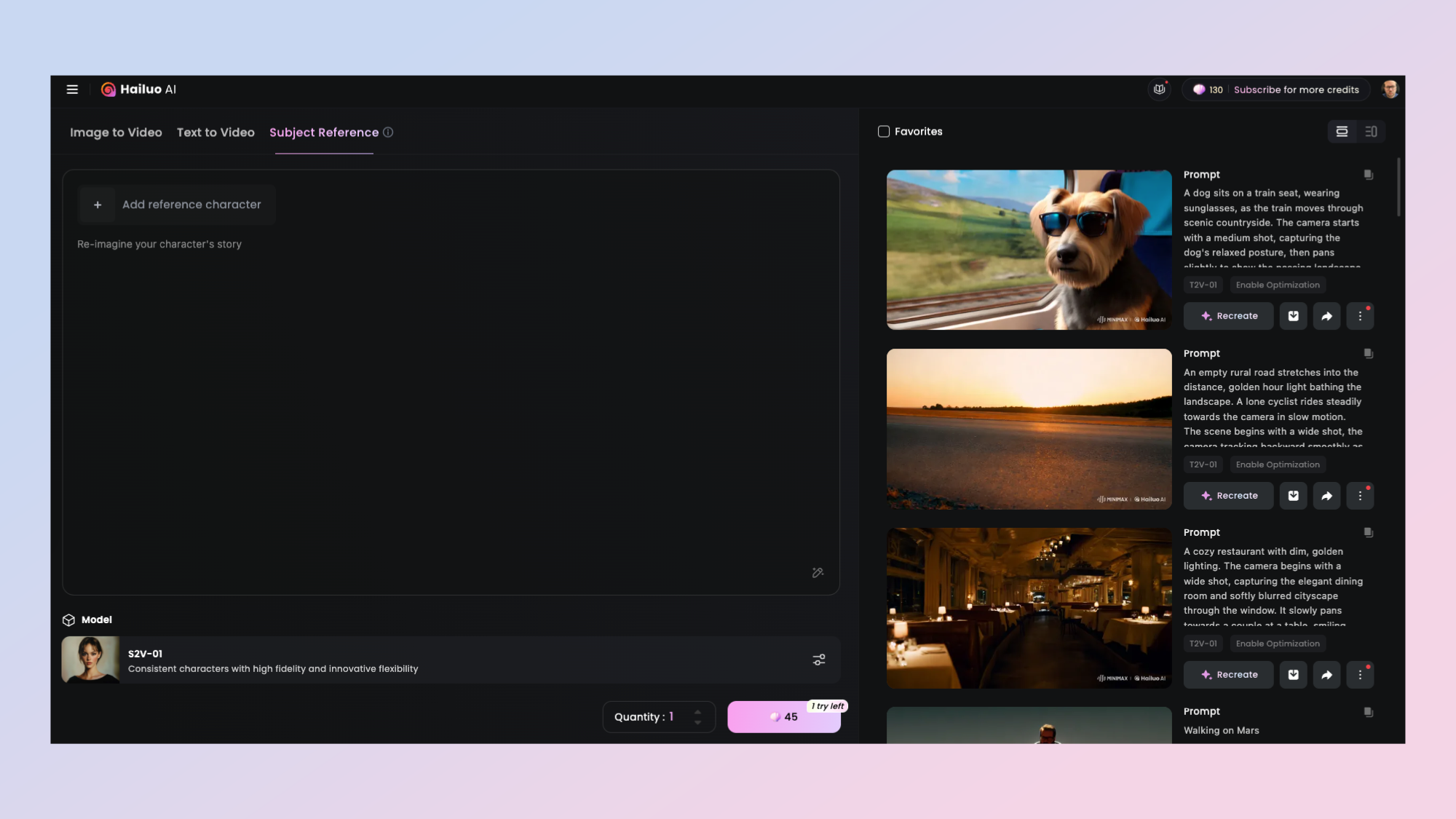

Hailuo MiniMax


Hailuo is one of my favorite AI video platforms to use. It launched early in 2024 and shines when it comes to prompt adherence. It also matches the visual quality of Kling.

When it first launched, it was largely in Chinese and offered nothing more than a small prompt box. It is now a full-featured AI platform with a chatbot, AI voice cloning and a video generation model.

Beyond its core video generation capabilities, Hailuo AI now offers a suite of AI-driven tools, including:

- Chatbot functionality: An interactive AI chatbot that assists users in real time, providing guidance and support throughout the content creation process.

- AI voice cloning: A feature that allows users to generate realistic voiceovers, enhancing the auditory appeal of their videos.

- Character reference model: A notable addition is the character reference model, enabling users to upload an image of a person and have that individual appear within the generated video. This feature offers a personalized touch, similar to Pika Labs' 'Ingredients' functionality.

- Director mode for enhanced control: Hailuo AI has introduced a 'Director Mode,' granting users greater control over video generation. This mode allows for detailed customization of scenes, movements, and character interactions, ensuring the final output aligns closely with the user's vision.

The free plan includes daily credits every time you log in, and the base subscription costs $9.99 per month for 1,000 credits, plus bonus credits for logging in daily and no watermarks.

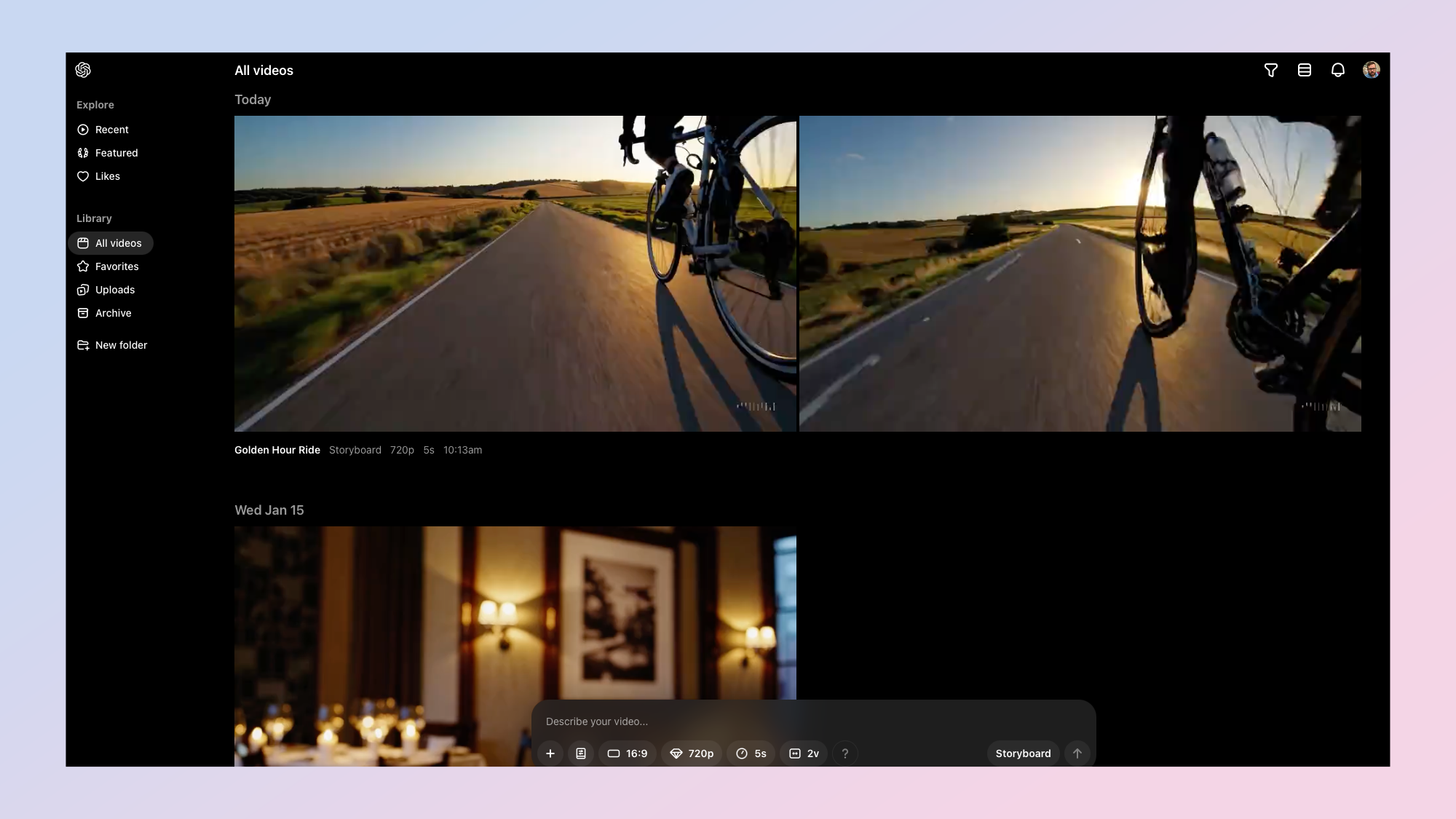

Best for Storyboarding

Sora


OpenAI's Sora offers users the ability to generate short videos from text prompts. While the current version may not fully match the initial previews, it introduces several notable features:

- Remix: Modify existing videos while preserving core elements.

- Storyboard: Plan and structure scenes by placing image or text prompts at specific points within the video, facilitating the creation of coherent clips.

- Style presets and blending: Apply predefined styles and blend elements from multiple videos to achieve desired aesthetics.

OpenAI has implemented strict content moderation policies, including blocking the generation of explicit content and restricting the depiction of realistic human faces to prevent misuse.

Available in text-to-video and image-to-video versions, it can turn your prompt into up to 20 seconds of compelling video, depending on your plan. Motion is largely accurate and visual realism is impressive, although it hasn’t quite lived up to its initial promise, and other models seem to have caught up.

Of these features, the storyboard is the standout for me. It lets you put an image or text prompt at any point within the video's duration, and Sora builds the clip from that.

Sora is integrated into OpenAI's ChatGPT subscription plans. The ChatGPT Plus plan, priced at $20 per month, supports up to 50 videos per month at 720p resolution and five seconds in duration. The ChatGPT Pro plan, at $200 per month, provides unlimited video generation, resolutions up to 1080p and durations of up to 20 seconds.
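Put another way, and assuming every one of the Plus plan's 50 monthly videos is used at the full five seconds (while ignoring everything else a ChatGPT Plus subscription includes), the effective cost works out as:

```latex
\frac{\$20}{50\ \text{videos}} = \$0.40 \text{ per video},
\qquad
50 \times 5\,\text{s} = 250\,\text{s},
\qquad
\frac{\$20}{250\,\text{s}} = \$0.08 \text{ per second of video}
```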

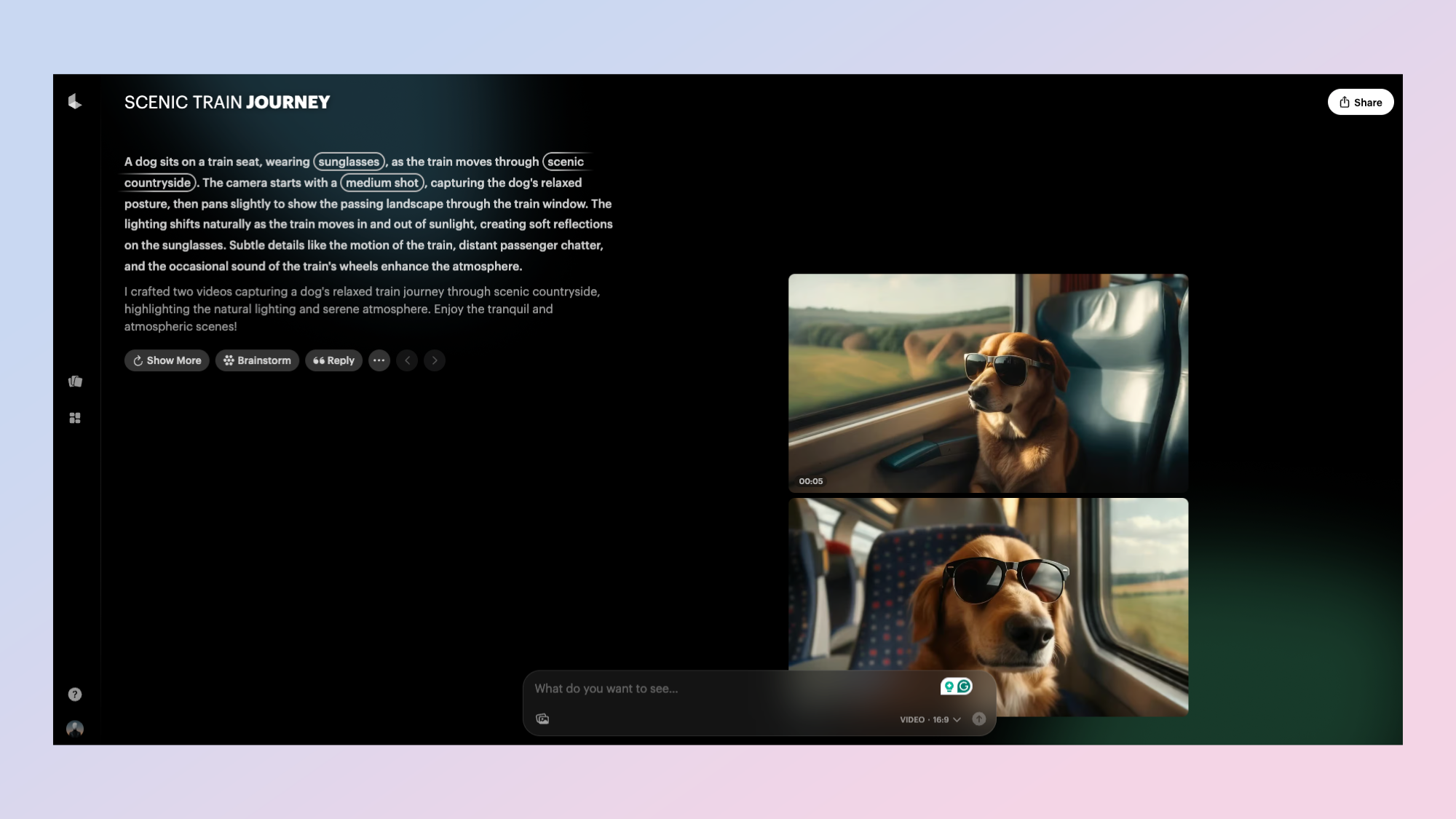

Best for Collaborating with AI

Luma Labs Dream Machine


Luma Labs' Dream Machine is one of the best interfaces for working with artificial intelligence video and image platforms. It can be used to create high-quality, realistic videos from text and images. It is able to create videos in seconds and you can iterate on the original idea just as quickly.

Even with the rapid generation of both images and video, the quality is impressive. This includes accurate and natural motion as well as photorealistic visuals.

A significant advancement in Dream Machine's capabilities is the introduction of the Ray2 model. Ray2 enhances realism by improving the understanding of real-world physics, resulting in faster and more natural motion in generated videos.

The Photon image model is designed for rapid and high-quality image generation. It enables users to produce detailed visuals efficiently, facilitating quick iterations and creative exploration.

The platform now includes 'Boards,' allowing users to organize and manage their projects effectively. The 'Brainstorm' feature assists in idea generation, providing creative prompts and suggestions to inspire users.

Users can now generate consistent characters across multiple images and videos by providing a single reference image. This feature ensures uniformity in character depiction, which is particularly useful for storytelling and branding purposes.

To enhance accessibility, Dream Machine has launched a mobile application available on iOS devices. This allows users to create and edit content on-the-go, ensuring flexibility in their creative process.

Despite its advanced features, users may encounter generation issues, such as stalled or failing outputs. Luma Labs provides comprehensive guides to troubleshoot these problems.

The built-in Photon image model is also very impressive, and Dream Machine is incredibly useful for working out prompts, which you can then take to another model.

Best for Character Consistency

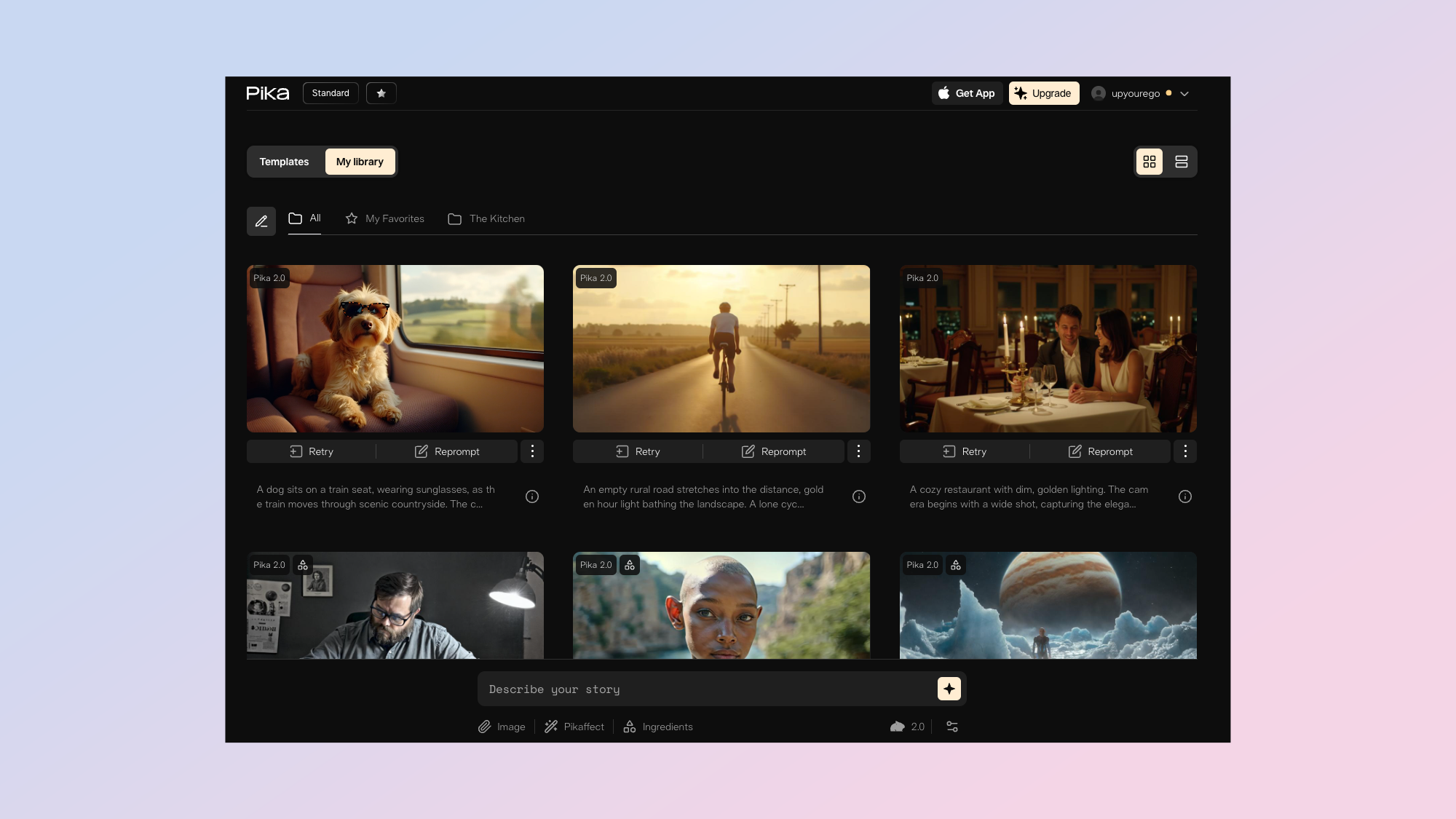

Pika Labs


Pika Labs is one of my favorite AI video platforms. Its most impressive feature is one of its most recent — ingredients. This feature lets you give it an image of a person, object or style and have it incorporate them into the final video output.

It launched with Pika 2.0, which brought improved motion and realism as well as a suite of tools that make Pika one of the best platforms of its type that I’ve tried during my time covering generative AI.

The latest version, Pika 2.1, launched on February 3, 2025, introduces high-definition 1080p video generation, enabling users to create more detailed and visually appealing content.

The new Pikadditions feature allows users to seamlessly integrate any person or object into existing videos, expanding creative possibilities.

Pika has never been shy about adding features that make creating AI videos easier. Alongside “ingredients”, which help videos more closely match your ideas, Pika 2 added templates with pre-built structures and more Pikaffects.

Pikaffects was the AI lab’s first foray into this type of improved controllability and saw companies like Fenty and Balenciaga, as well as celebrities and individuals, share videos of products, landmarks, and objects being squished, exploded, and blown up.

Pika Labs continues to offer a range of pricing plans to accommodate different user needs. The Free Plan provides 250 initial credits with a daily refill of 30 credits, allowing users to explore the platform's capabilities at no cost. For more intensive use, paid plans offer additional features such as higher resolution outputs, increased credit allocations, and commercial use rights.

Best All-Rounder

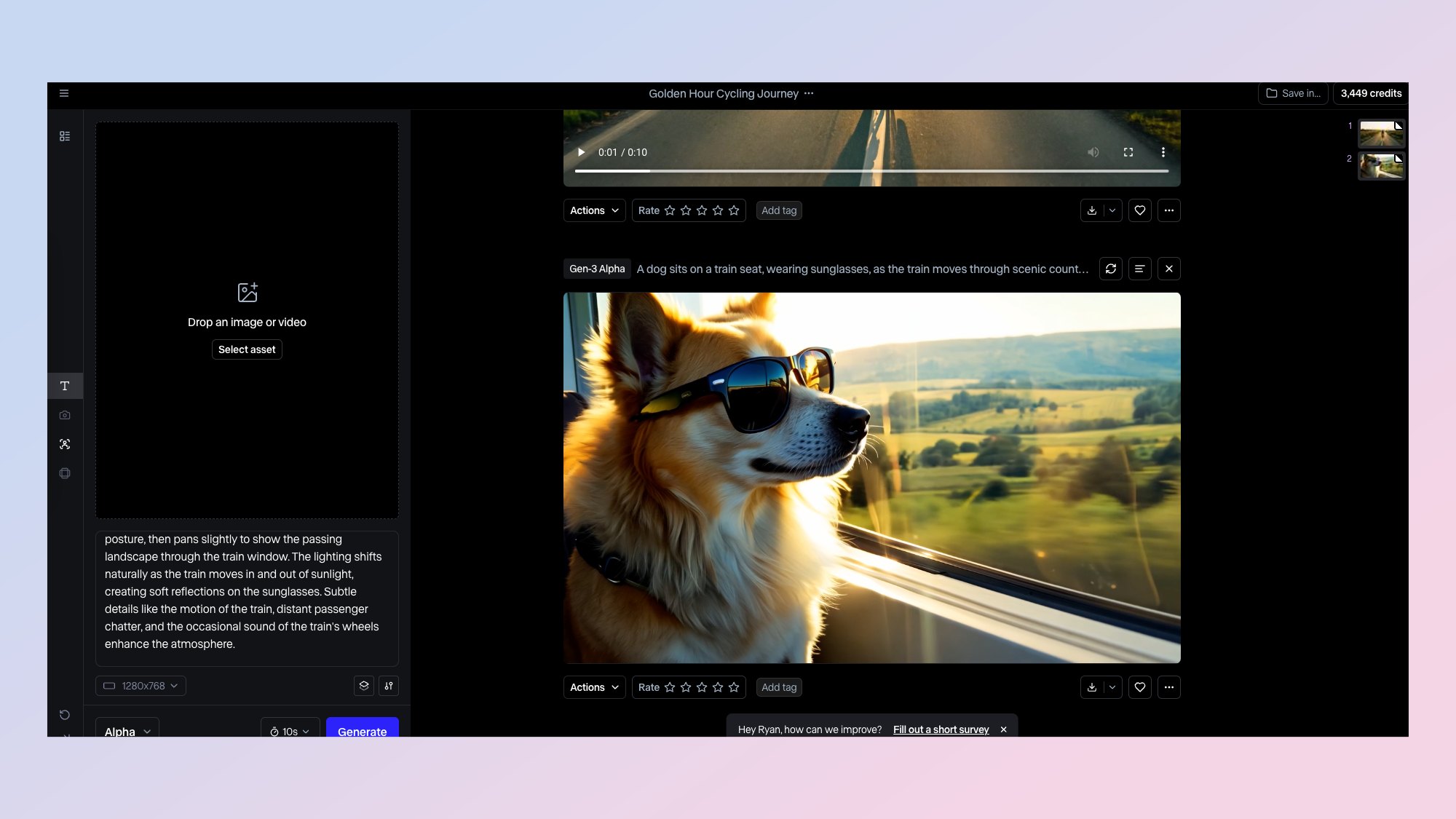

Runway


Runway was the first company to offer a commercially available AI video model. It is now on Gen-3, which has improved by leaps and bounds over the original, including the ability to control the exact motion of the final video.

With the Gen-3 Alpha model, users can input text or images to produce unique video clips. You can set the image input as the start, middle or end of the final output, further steering exactly how it should look.

Runway's tools have been used in various projects, including films and music videos, showcasing their impact on modern storytelling. Imagine exploring a huge, invisible world full of creative possibilities — this tool turns that into a reality.

Another recent feature is essentially "outpainting" for AI video. It lets you convert a portrait video into landscape, or the reverse, with nothing more than a simple prompt, and the newly generated areas match the original video.

In November 2024, Runway unveiled Frames, a new image generation model that offers unprecedented stylistic control and visual fidelity. Frames excels at maintaining stylistic consistency while allowing for broad creative exploration, enabling users to establish a specific aesthetic for their projects and generate variations that adhere to their chosen style.

Runway has entered into a partnership with Lionsgate, the studio behind franchises like "John Wick" and "The Hunger Games." This collaboration aims to integrate generative AI into Lionsgate's film and TV production processes, potentially streamlining tasks such as storyboarding and special effects, thereby enhancing creative workflows and reducing production costs.

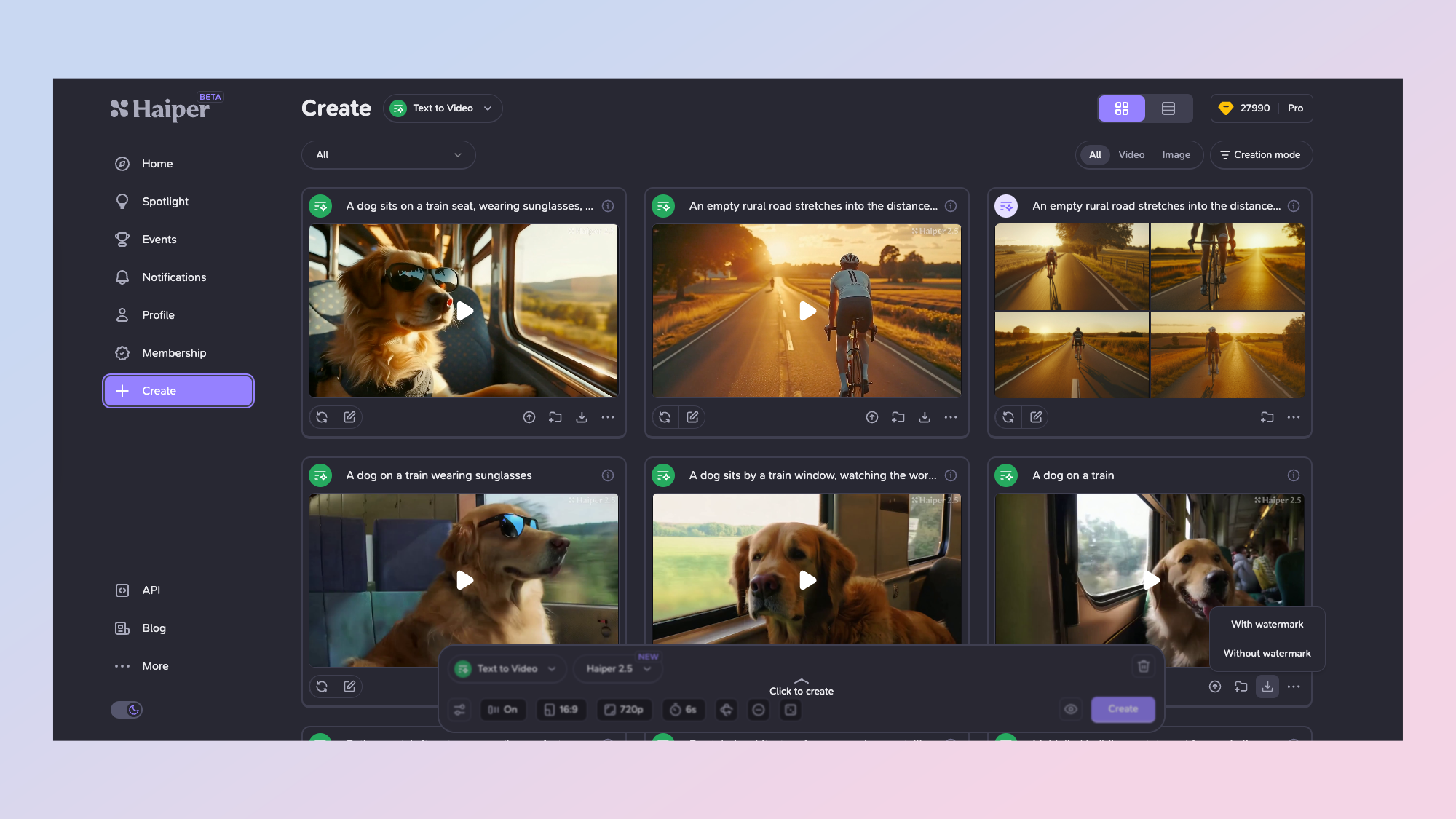

Best for Experimenting

Haiper


Haiper is a bit of an underdog in the AI video space but it is shipping a range of impressive features including templates and motion consistency.

It includes a user-friendly interface and is one of the cheapest platforms, offering unlimited generations on even the lower tier plans. It also includes an AI painting tool, which allows users to modify specific areas of a video by adjusting colors, textures, and elements, thereby enhancing and transforming visual content.

Despite its robust features, Haiper has some limitations. Free users must contend with watermarked videos, which can be a drawback for those looking to use the content commercially. You also need to pay for the top-tier plans to get commercial usage rights for the videos you generate.

By leveraging a proprietary combination of transformer-based models and diffusion techniques, Haiper 2.0 improves video quality, realism and production speed. This update adds more lifelike and smoother movement, potentially setting a new standard for the best AI video generators.

Since its launch, Haiper has continued to push the boundaries of video AI, introducing several tools, including a built-in HD upscaler and keyframe conditioning for more precise control over video content. The platform continues to evolve with plans to expand its AI tools, including features that support longer video generation and advanced content customization.

Want to know more about using AI for creative work? Here's our breakdown of the best AI image generators.



Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?