Windows Recall: How it works, how to turn it off and why you should

Microsoft backpedals after well-earned backlash over Recall

It's been months since the Microsoft Build 2024 developer conference in Seattle, yet one of the big new Windows 11 features unveiled at that event is still in the news.

I'm talking of course about Windows Recall, a new feature that briefly arrived in preview form on Copilot+ PCs this summer before being yanked by Microsoft due to user concerns.

It's easy to see why: Recall promises to save images of your desktop every few seconds, scan and analyze them with AI help, then make that data searchable using natural language.

Windows Recall is set to return in October 2024, and it could be a huge privacy risk for users, even if it could also be hugely useful.

There's been a lot of wailing and gnashing of teeth over this feature across the Internet, especially among tech journalists and privacy advocates (rightly) concerned that all this easily searchable personal data on your PC will be an attractive target to bad guys.

Microsoft has taken steps to address said concerns, including what reads like an emergency response to the backlash in a blog post outlining how the company is going to be stricter and more careful with Recall — but is it enough?

Let's dig into the details of how Recall is supposed to work, what people are worried about and what Microsoft is doing (or not) about it. I'll also walk you through how to turn Recall off, so if it shows up on your PC you'll be ready to handle it.


How Windows Recall is supposed to work

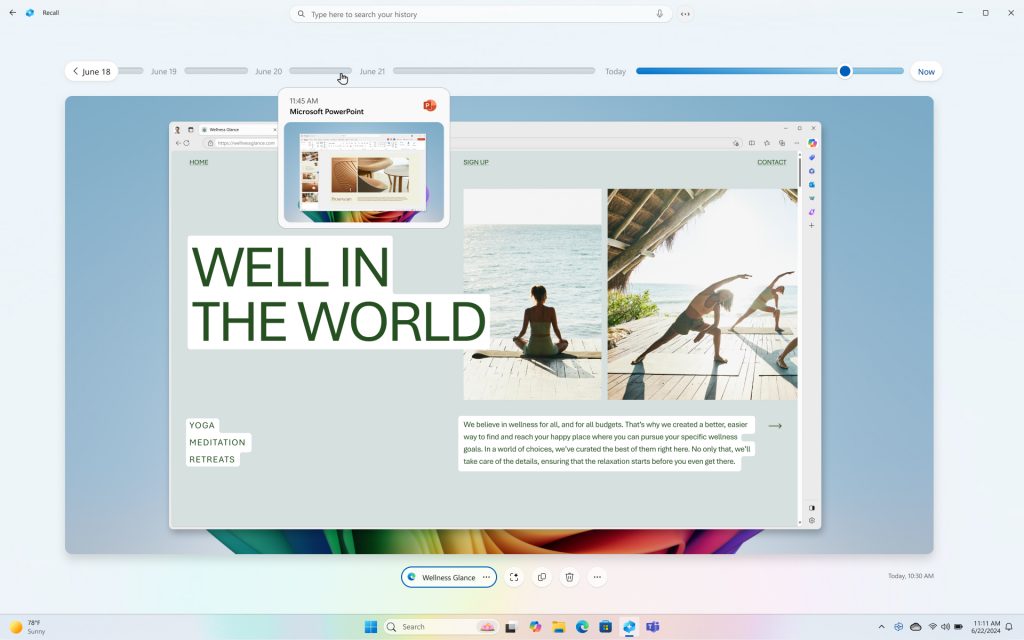

Microsoft's pitch is that Recall helps you find things better by letting you search your PC for anything you've seen on it, using natural language. To accomplish this, Recall takes "snapshots" of your screen at regular intervals, though it won't do so if the content on-screen is identical to the previous snapshot.
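To make the "skip identical screens" idea concrete, here is a minimal sketch in Python. It is not Microsoft's implementation: the function name `dedupe_snapshots` is hypothetical, the frames are stand-in byte strings rather than real screen captures, and comparing SHA-256 hashes is just one assumed way to detect that nothing on-screen has changed.

```python
import hashlib
from typing import Iterable, List, Optional


def dedupe_snapshots(frames: Iterable[bytes]) -> List[bytes]:
    """Keep a frame only if it differs from the frame captured just before it.

    Assumption: each frame is the raw bytes of one screen capture, and two
    captures count as identical when their SHA-256 digests match.
    """
    kept: List[bytes] = []
    last_digest: Optional[str] = None
    for frame in frames:
        digest = hashlib.sha256(frame).hexdigest()
        if digest != last_digest:
            kept.append(frame)  # the screen changed, so save a snapshot
        last_digest = digest
    return kept


# Stand-in captures: the screen stays the same for two ticks at a time.
frames = [b"inbox", b"inbox", b"shoe store", b"shoe store", b"inbox"]
saved = dedupe_snapshots(frames)
print(len(saved))  # 3
```

Note that a screen you return to later (the inbox, in this example) is saved again, because only back-to-back duplicates are skipped.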

These snapshots are literally images of whatever is on your PC screen at the time, and they're stored locally on your computer, analyzed and indexed for easier searching. In simple terms, algorithms scan the image and try to identify what's in it (colors, shapes, images, text, etc), then make that data searchable by you.
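As a rough illustration of "analyzed and indexed for easier searching," the Python sketch below builds a tiny keyword index over text that has already been pulled out of each snapshot. Everything here is an assumption for illustration: the `build_index` and `search` helpers and the sample snapshot data are hypothetical, and plain keyword matching is a much simpler stand-in for the AI analysis and natural-language search Microsoft describes.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(snapshots: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each word to the set of snapshot IDs whose text contains it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for snap_id, text in snapshots.items():
        for word in tokenize(text):
            index[word].add(snap_id)
    return index


def search(index: Dict[str, Set[str]], query: str) -> List[str]:
    """Return the IDs of snapshots that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return []
    hits = set(index.get(words[0], set()))
    for word in words[1:]:
        hits &= index.get(word, set())
    return sorted(hits)


# Hypothetical snapshot timestamps mapped to text found on screen.
snapshots = {
    "2024-06-01T10:00": "Leather boots on sale",
    "2024-06-01T10:05": "DM from Sam: lunch on Friday?",
    "2024-06-02T09:30": "Pink boots, free shipping",
}
index = build_index(snapshots)
print(search(index, "boots"))       # ['2024-06-01T10:00', '2024-06-02T09:30']
print(search(index, "pink boots"))  # ['2024-06-02T09:30']
```

Even this toy version shows why the index is sensitive: whoever can read it can look up anything that ever appeared on screen.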

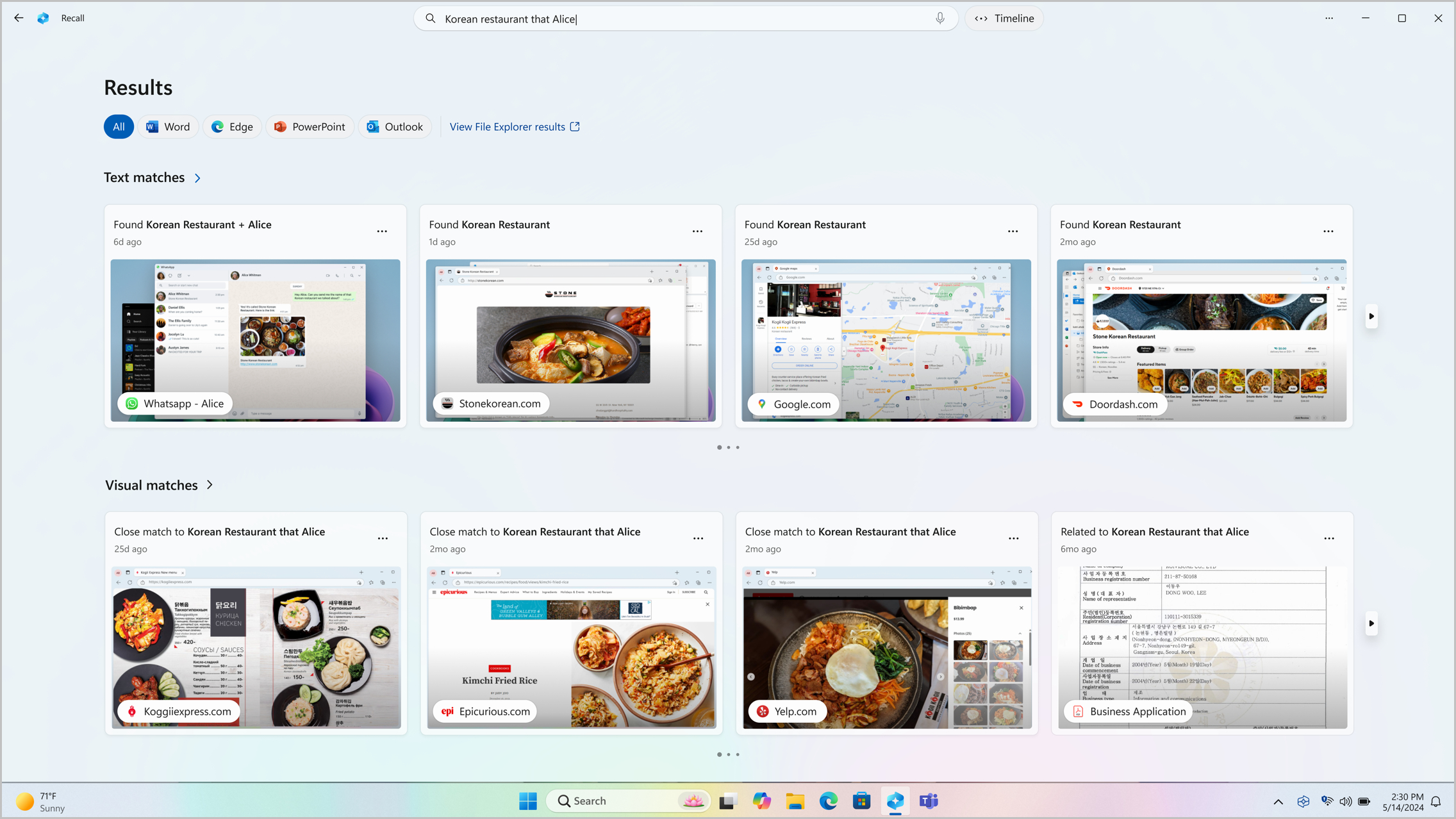

According to Microsoft, this will enable you to search through the history of your time on the PC and find anything from a document or a message someone sent you via DM last month to a pair of shoes you were considering buying last week.

I got a chance to go hands-on with some of the new AI features of Copilot+ PCs at Build, including Recall, and it seems to work well enough. Punch in something as specific as "boots" or as vague as "shopping site with pink background" and Recall will show you a visual timeline of every instance it can find in your snapshots.

You can scroll back and forth through the timeline, and when you click into a snapshot you can do things like click directly on the webpage you were looking at to open it again, or copy text out of a DM and paste it somewhere else. Microsoft is also working with developers to get them to integrate the Recall API in their apps, so that down the road Recall may be more capable and may allow you to do more with snapshots of apps, like jumping back into a game you were playing or opening the playlist you found in Spotify last week.

Why you should be worried about Windows Recall

It's a neat feature, and one I could see coming in handy on a semi-regular basis. Unfortunately, I don't think I'll be using Recall much for anything other than work because I don't trust Microsoft with my data.

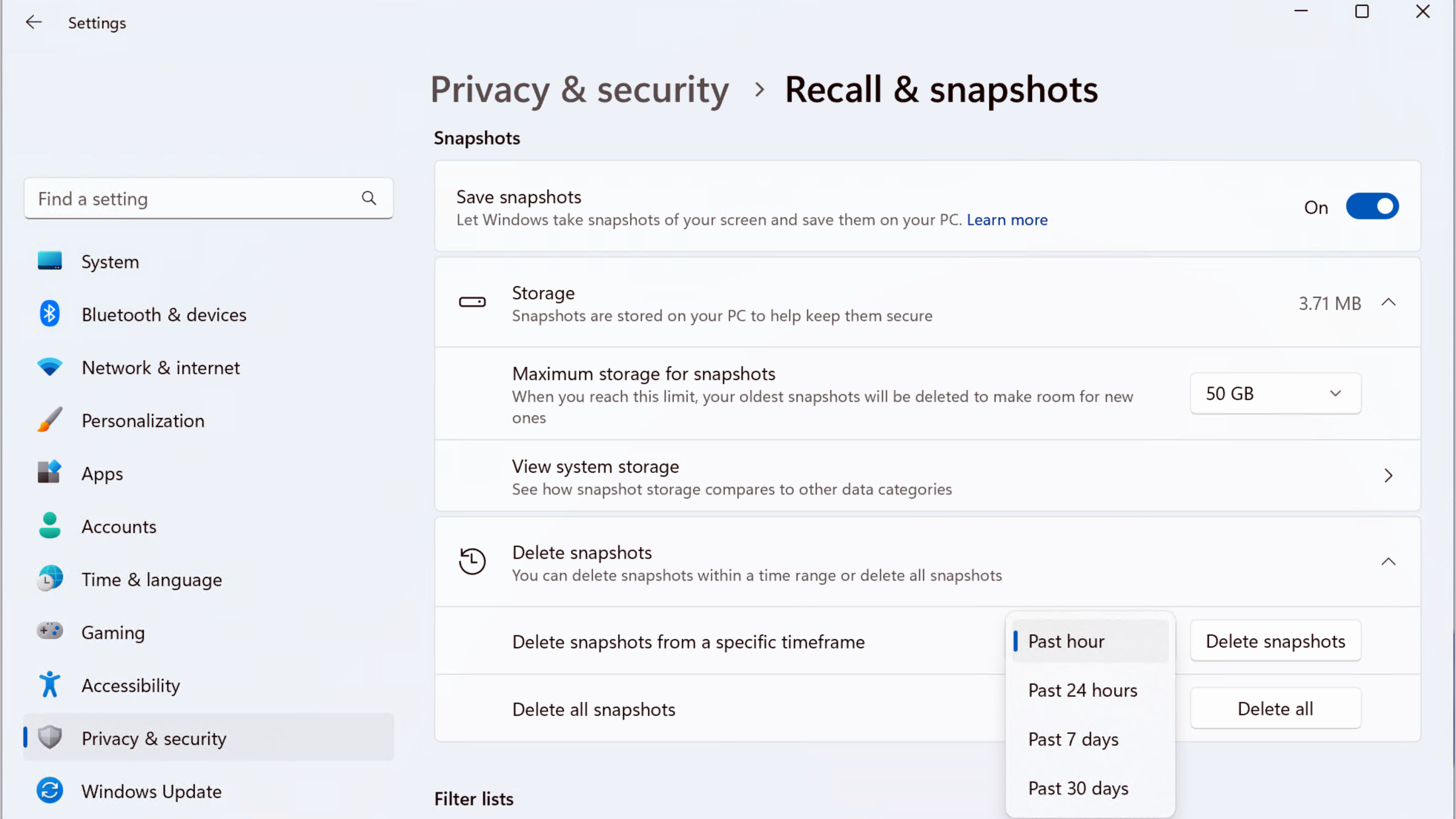

I don't mean to imply that Microsoft will be snooping on my Recall snapshots or anyone else's — the company has already promised that Recall snapshots are stored locally on your PC, never sent to a remote server for analysis and easy to delete whenever you want. You can also delete snapshots within a specific timeframe, and set limits on which websites and apps will be filtered out and thus not photographed by Recall — which is absolutely mandatory when it comes to things like banking websites and password managers.

But there are still a lot of reasons not to trust Microsoft with such an intimate record of your time on your PC. Microsoft has a checkered track record when it comes to user data, to say the least; just type "Microsoft data breach" into your search engine of choice and you'll see what I mean. When the company unveiled Recall at Build I know I wasn't the only journalist in the room to cringe and make a face, and since then we've seen at least half a dozen fiery op-eds, dozens of Reddit threads and at least one Change.org petition calling for Microsoft to ditch Recall.

Which is why, weeks after announcing it, Microsoft has taken additional steps to show it's trying to make Recall safe to use. In the blog post/mea culpa I linked earlier (which was published June 7) Microsoft exec Pavan Davuluri explains that additional safeguards are being layered on top of Recall.

So while it wasn't true at first, it now sounds as though when Windows Recall arrives it will be opt-in (meaning you have to choose to turn it on) rather than opt-out. According to Davuluri, Microsoft is overhauling the Recall set-up process to "give people a clearer choice to opt-in to saving snapshots using Recall", which is great!

It should have been that way in the first place!

Microsoft is also layering some additional encryption safeguards into the Recall experience, which is a direct response to widespread complaints that Windows Recall as first revealed was not secure at all.

Recall was originally pitched as safe because all snapshots would be stored locally and encrypted to the extent your Windows hard drive is encrypted, which can range from pretty well to not really at all, depending on whether you have BitLocker encryption enabled. But privacy advocates and security researchers quickly pointed out many ways in which thieves and hackers could access your private data.

Obviously, having a searchable index of everything you've looked at on your laptop over the last month or so would be a treasure trove if someone managed to get ahold of it and sign into your account. But according to security researcher Kevin Beaumont, that's far from the only way malefactors could steal data from you through Recall.

In a recent blog post Beaumont outlined his experiments installing a version of Recall on a laptop that does not qualify as a Copilot+ PC, including the revelation that his version of Recall was writing all the data it managed to pull from screenshots of his PC into a database that's saved as a plain text file on the hard drive.

Microsoft told media outlets a hacker cannot exfiltrate Copilot+ Recall activity remotely. Reality: how do you think hackers will exfiltrate this plain text database of everything the user has ever viewed on their PC? Very easily, I have it automated. HT detective pic.twitter.com/Njv2C9myxQ (May 30, 2024)

That data could then easily be accessed, either by a hacker using some kind of info-stealing malware to remotely copy the file from your PC or by someone who gets admin privileges on it. According to Beaumont's post, "I have automated exfiltration, and made a website where you can upload a database and instantly search it. I am deliberately holding back technical details until Microsoft ship the feature as I want to give them time to do something."

It seems like Microsoft has taken time to do something, as it has added a new requirement that you must enable Windows Hello in order to use Recall.

Windows Hello, if you're not familiar, is the biometric authentication system in Windows which can do things like use the fingerprint reader or IR camera built into your laptop to scan your fingerprint or face and automatically log you into your PC. It now must be enabled if you want to use Recall, and every time you search or view things in Recall you must use Windows Hello to verify it's really you doing it.

Finally, Microsoft promised to encrypt the Recall search index database (that plain text file that Beaumont found so easily) and implement "just in time" decryption on Recall snapshots, so that they're only decrypted when you authenticate it's really you sitting there looking at them.

So when Windows Recall finally starts showing up on Copilot+ PCs as a Recall (preview) app you can launch from the Start menu, it should be a bit safer to use than Microsoft initially promised.

But you should still be leery of this new feature, and cautious about using and setting it up when it arrives. While the additional encryption safeguards are great to have, the fact remains that Recall is fundamentally photographing everything you do on your PC and saving a record of it. You need to keep that in mind at all times when using it, and if you do use it you should know how to turn it off or fine-tune its photography habits.

We're looking forward to checking out the preview version of Recall for ourselves when we get a chance to review Copilot+ PCs like the new Surface Laptop 7 or Surface Pro 11. When we do we'll publish full guides on how to disable Recall or manage its many features. But in the meantime, we've managed to glean some key details from Microsoft's support page for Recall.

How to disable Windows Recall

As noted above, Microsoft's support website has a full guide to how Recall works and how to manage it, which is great. The guide outlines key details like which browsers support the option of excluding specific websites from Recall's eye (Chrome, Edge, Firefox and Opera) and how to change how much storage space it takes up (25GB by default, can go up to 150GB), as well as how to add websites and apps to the list of things Recall filters out.

Perhaps the most important information in Microsoft's guide is how to turn off Windows Recall, and it looks pretty straightforward.

To disable Windows Recall, open the Settings app and navigate to Privacy & Security > Recall & snapshots. Then simply turn off the toggle next to Save snapshots and presto, you should be free and clear!

If you want to delete all the snapshots that Recall has saved on your PC, you can do that in the same menu by clicking the Delete all snapshots button.

Outlook

Microsoft seems to have really stepped in it this time.

While Windows Recall was sort of scary right from the start, it was especially terrifying to learn how flimsy and full of holes it is from a security perspective. While I'm glad Microsoft is taking steps to address the public backlash around Recall, I'm concerned that the company is going to push this AI-powered feature and others forward without doing enough security testing to ensure it's bulletproof.

The very idea of telling Windows users that your new feature photographs everything they do on their PC seems upsetting enough that I'd assumed Microsoft would have been extra careful when developing Recall, and that there would be iron-clad security in place for those that use it. I was wrong!

Now the company is on the back foot and reacting to public outcry, when it should be clearly showing us what future it envisions for PCs and how it plans to get us there. I like Windows a lot, but I'm afraid that in Microsoft's seemingly single-minded drive to push "AI" into everything it sells it's going to make Windows worse while opening us up to all sorts of new threats. And I don't see why I'd want that in return for things like a supercharged new search feature that I just don't need.


Alex Wawro is a lifelong tech and games enthusiast with more than a decade of experience covering both for outlets like Game Developer, Black Hat, and PC World magazine. A lifelong PC builder, he currently serves as a senior editor at Tom's Guide covering all things computing, from laptops and desktops to keyboards and mice.


hackdefendr: Except for one small caveat. You can't disable it. Microsoft seems to be accidentally re-enabling Recall during system updates. I'm sure they'll stop doing this until we forget about it.

USAFRet:

hackdefendr said: Except for one small caveat. You can't disable it. Microsoft seems to be accidentally re-enabling Recall during system updates. I'm sure they'll stop doing this until we forget about it.

CoPilot and Recall will be only available on a tiny subset of new devices.

It is not yet a thing with current Win 10/11 devices.

And apparently, MS is making it opt-in, rather than opt-out.

We shall see.

hackdefendr:

USAFRet said: CoPilot and Recall will be only available on a tiny subset of new devices. It is not yet a thing with current Win 10/11 devices. And apparently, MS is making it opt-in, rather than opt-out. We shall see.

No sorry, don't believe everything you read on the Internet. It absolutely did exist in the bleeding edge branch of Window 11. It was on my VM of Win 11 running on my MacBook. I manually disabled Recall twice, and on the first update it was re-enabled.

TaskForce141: Government, corporate, and institutional customers will insist that their version of Win 11 not have any Recall at all. Not just disabled.

Especially, hospitals, billers, and healthcare providers subject to HIPAA privacy laws.

How can you operate with MS' take on pcTattleTale stalker-ware, built into the operating system?

Does no one at MS have the common sense to look at features, *from the customer's perspective* ?

Whoever at MS dreamed up this Recall feature, deserves to be fired.

And the execs who approved it, deserve to be let go as well, for wasting resources on this.

agb2: Maybe I'm a suspicious reader, but the Microsoft announcement ( https://blogs.windows.com/windowsexperience/2024/06/07/update-on-the-recall-preview-feature-for-copilot-pcs/ ) says the snapshots are encrypted and the database is encrypted too. I conclude Microsoft sees the snapshots and database as separate things. Further down they say that the snapshots are never exported off the computer. They don't say that about the database. My suspicious self sees a loophole so that Microsoft can export that data for, I don't know, telemetry reasons...

Please let me know what you think. Am I over-suspicious or is MS setting up a way to get access to everything we do on our computer?