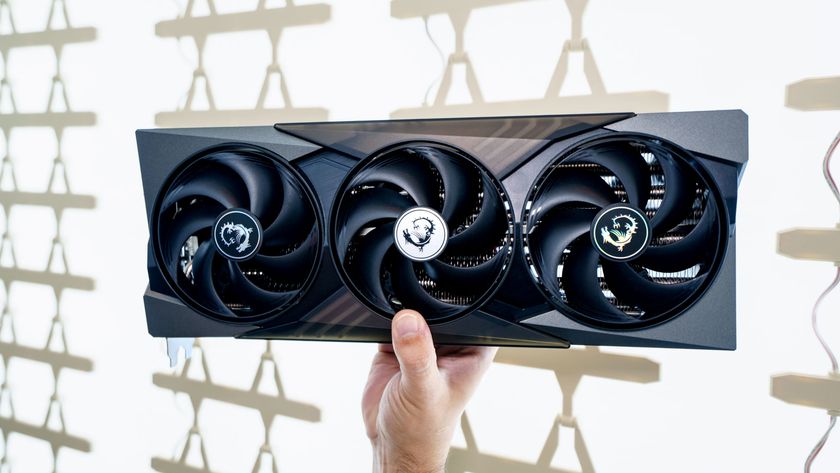

I wasn’t planning on building a new gaming PC but the RTX 5080 just changed my mind

The Nvidia RTX 5080 looks like the right GPU for me

I wasn’t surprised when Nvidia finally unveiled the GeForce RTX 50-series GPUs during CES 2025. Not only had I seen several upcoming PCs with “next-gen Nvidia” graphics cards at events before CES, but there had also been months of rumors and leaks—many of which were accurate. Yes, I knew RTX 50 cards were coming, but I didn’t know the reveal would convince me to upgrade my gaming PC.

I bought a gaming PC with an Nvidia RTX 3080 Ti almost three years ago, and it has done an excellent job of running the best PC games at 4K resolution and over 60 frames per second with settings set to High or even Max. I was so satisfied with the experience that I skipped the Nvidia RTX 40 series. However, I’ve had to lower graphical settings more frequently as games have become more graphically demanding. That’s a sign that it’s time for me to upgrade.

Nvidia GeForce RTX 5090, RTX 5080, RTX 5070 Ti and RTX 5070 graphics cards will be available soon. Of those four, I have my eyes on the Nvidia GeForce RTX 5080. I’ll explain why below.

My PC is (kind of) ancient

My current gaming PC might not have impressive specs now, but it was kind of a monster back when I had NZXT build it for me. It has an 11th Gen Intel Core i7-11700KF CPU, an Nvidia GeForce RTX 3080 Ti, 32GB of DDR4 RAM, 1TB of SSD storage and an ROG Strix Z590-E motherboard.

In all fairness to my rig, it’s still quite capable of handling most games so long as I properly tweak the settings or let Nvidia’s GeForce Experience app configure them. But with games becoming more demanding, my PC is showing its age. Given that and what the RTX 50-series promises to deliver, I think now is the best time for me to upgrade my gaming rig.

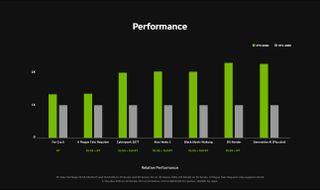

Balance of price and performance

I’m sure you’re wondering why I’m not eyeing the Nvidia RTX 5090, i.e., the king of the RTX 50-series lineup. Don’t get me wrong, the RTX 5090 is very tempting with its monstrous 32GB of VRAM and enhanced AI capabilities. However, if you’ve researched the RTX 5090, then I’m sure you already know why I’m opting for the RTX 5080. The RTX 5090 is one heck of an expensive GPU!

| GPU name | Starting price | Graphics memory |
| --- | --- | --- |
| RTX 5090 | $1,999 | 32GB GDDR7 |
| RTX 5080 | $999 | 16GB GDDR7 |
| RTX 5070 Ti | $749 | 16GB GDDR7 |
| RTX 5070 | $549 | 12GB GDDR7 |

At launch, the Nvidia RTX 5090 will cost an eye-watering $1,999. That’s almost half what I paid for my current rig back in 2022. Toss in the other components I want, such as a 14th Gen Intel Core i9-14900K CPU, 64GB of DDR5 RAM and 2TB of SSD storage, along with a motherboard, power supply and PC case (among other things), and I’m likely to spend a small fortune on a new gaming rig.

If I can lower the cost of my next gaming PC by $1,000 by opting for an RTX 5080 instead of a 5090, that seems like the smarter play.

Outlook

While I’m excited about the new Nvidia GeForce RTX 50-series cards, I won’t make a final purchasing decision until we’ve tested the new GPUs ourselves. Not only do I want raw performance metrics, but I also want to see how graphically demanding games like Cyberpunk 2077 benefit from DLSS 4, especially at high graphical settings.

The Nvidia RTX 5080 and RTX 5090 arrive on January 30. If the former card lives up to the hype, then it’ll be time for me to build an all-new rig. If not, perhaps I’ll save some extra money and buy its more powerful sibling. Either way, 2025 should be the year when I upgrade to a new PC — and it’s all due to Nvidia and its impressive (on paper) graphics cards.


Tony is a computing writer at Tom’s Guide covering laptops, tablets, Windows, and iOS. During his off-hours, Tony enjoys reading comic books, playing video games, reading speculative fiction novels, and spending too much time on X/Twitter. His non-nerdy pursuits involve attending Hard Rock/Heavy Metal concerts and going to NYC bars with friends and colleagues. His work has appeared in publications such as Laptop Mag, PC Mag, and various independent gaming sites.

daftshadow: I'm kind of in a similar boat.

- 3080 12gb
- 13700k
- 64gb ddr5
- asrock z790

Fortunately all i need to upgrade is the gpu. So I've been eyeing the 5080 too. Just gonna wait to see the real world benchmarks and reviews. Hopefully it lives up to the hype.

The zotac 3080 12gb has served me admirably for several years but has shown its age in more recent graphically demanding titles. Especially if things like ray tracing are enabled. Not having frame generation tech sucks.

syleishere: I heard TSMC is trying to screw world over right now on 2nm wafer prices, I heard Intel and Samsung are going to come in to compete, with Intel going directly to 1.8nm with panther lake. Might be better to wait for these 2nm and 1.8nm chips, and hopefully pcie version 6.

Yeah I think 1k extra for 5090 is ridiculous, for pitiful extra 16gb vram for AI. I've run lama 70billion models on just CPU and memory cause nothing fits on these cards anyways. If you're heavy into video editing I'd say just go for it, should get a huge speedup on gaming and converting your movies to av1

PFord59257: Just out of curiosity, when does the return on investment turn sour on these new GPUs?

syleishere:

PFord59257 said: "Just out of curiosity, when does the return on investment turn sour on these new GPUs?"

When the 2nm wafers start production. Think apple told TSMC to go to hell this year on their prices and a few others. 2026 or end of 2025 looks like when they'll hit, so I wouldn't be surprised with a 6090 for next year on 2nm process.

Then again they may wait for PCIe version 6 to become mainstream before releasing it which would make 5090 the best for many years to come. Panther lake is out, but only to select people, Intel finding overheating issues on PCIe version 6 atm, so we may not see new cpu's hit market till 2026, then 2027 as a mass adoption period when motherboards are out for it.

Can blame TSMC for holding world back this year, sad really. Karma going to bite them for their greed I suspect though, they could have lowered their wafer prices and made tons of money, now everyone will ditch them and get Intel, Samsung and some Japanese company to make them when their processes mature by end of year I suspect

Deadllhead: My concern is the 16gb of the 5080. Is that going to be enough for the next 4-5 yrs? Is it even enough now for some games?

I'll need to rebuild mine as well, as it's running a 5900x, so it will bottleneck on the new cards.

jimi411: "it’s still quite capable of handling most games so long as I properly tweak the settings"

Uhh no, your computer is quite capable of playing any game released for quite some time. Calling a computer ancient because it can't run every game on ultra is silly. There's also little reason to upgrade an entire PC that's 3 years old instead of just swapping the graphics card.

syleishere:

Deadllhead said: "My concern is the 16gb of the 5080. Is that going to be enough for the next 4-5 yrs? Is it even enough now for some games? I'll need to rebuild mine as well, as it's running a 5900x, so it will bottleneck on the new cards."

You'd need to upgrade from PCIe version 4 to 5 to use the new cards. I have 5950x as well, my only option on this old PC is a 4090.

I ordered 128gb of ecc memory for it last week so I'll repurpose it in basement as a NAS when ready to upgrade.

bignastyid:

syleishere said: "You'd need to upgrade from PCIe version 4 to 5 to use the new cards. I have 5950x as well, my only option on this old PC is a 4090"

Incorrect, PCIe is forwards and backwards compatible. A PCIe 5.0 GPU will work in a 4.0 slot; it will just be limited to PCIe 4.0 bandwidth.

PFord59257:

syleishere said: "When the 2nm wafers start production. Think apple told TSMC to go to hell this year on their prices and a few others. 2026 or end of 2025 looks like when they'll hit, so I wouldn't be surprised with a 6090 for next year on 2nm process.

"Then again they may wait for PCIe version 6 to become mainstream before releasing it which would make 5090 the best for many years to come. Panther lake is out, but only to select people, Intel finding overheating issues on PCIe version 6 atm, so we may not see new cpu's hit market till 2026, then 2027 as a mass adoption period when motherboards are out for it.

"Can blame TSMC for holding world back this year, sad really. Karma going to bite them for their greed I suspect though, they could have lowered their wafer prices and made tons of money, now everyone will ditch them and get Intel, Samsung and some Japanese company to make them when their processes mature by end of year I suspect"

I think my question was when do these new GPUs become too expensive to be worth the cost? It seems to me that newer, faster, more powerful hardware gives developers license to be sloppy with their code. I've also read that players are starting to move away from resource-intensive games with fine details in favor of less intensive gameplay releases that are easier to play within a wider community. I'm not a gamer, so I don't know. Duke Nukem, Quake, Doom, and Tetris are about my speed. I'm just curious. It seems at some point the expense doesn't provide much reward. And where does that place console gaming systems in the mix?

Thanks...