Siri 2.0 is expected this June at WWDC — here's how Apple's family of AI models might fit in

All eyes are on Apple's next AI move

Apple has released eight new large language models (LLMs) designed to run on smartphones, just weeks before WWDC. Many believe the company will make significant announcements related to generative AI at the conference, which is held in June.

In a paper published on Hugging Face last week about the family of Open-source Efficient Language Models (OpenELM), Apple researchers showed that the new LLMs achieve decent results despite their relatively tiny size.

They were trained on data from Reddit, Wikipedia, and arXiv, among other publicly available sources. OpenELM’s variants range from 270 million to 3 billion parameters, which makes them quite nimble compared with GPT-4, which reportedly has more than 1 trillion parameters. That’s good news for iPhone users who may be in for AI upgrades later this year, including ones related to Siri.
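To put those parameter counts in perspective, here is a rough back-of-the-envelope sketch in Python of how much memory a model's weights alone would need. The bytes-per-parameter figures and the `weight_memory_gb` helper are illustrative assumptions, not anything Apple has published, and the 1 trillion figure for GPT-4 is the estimate mentioned above rather than an official number.

```python
# Rough estimate of the memory needed just to store a model's weights.
# Assumption: memory = parameter count x bytes per parameter. This ignores
# activations, caches, and runtime overhead, so real usage would be higher.
BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantized
    "int4": 0.5,  # 4-bit quantized
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return the approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts taken from the article; the last one is an estimate.
models = {
    "OpenELM smallest (270 million)": 270e6,
    "OpenELM largest (3 billion)": 3e9,
    "1-trillion-parameter model": 1e12,
}

for name, params in models.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):,.2f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {sizes}")
```

Under these assumptions, the smallest OpenELM variant needs roughly half a gigabyte at 16-bit precision and the largest about 6GB (or 1.5GB if quantized to 4 bits), while a 1-trillion-parameter model would need around 2,000GB, far beyond what any phone can hold.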

Apple’s voice assistant was ok, but can GenAI make it great? While the rumor mill certainly believes so, we will hopefully know more between June 10 and 14 during Apple’s 2024 Worldwide Developers Conference.

Apple is joining the public AI game with 4 new models on the Hugging Face hub! https://t.co/oOefpK37J9 (April 24, 2024)

Meeting the June deadline means that Apple will be entering the public generative AI space many months after rivals OpenAI, Microsoft, and Google, but the company can seize on the building hype for a massive comeback.

However, nothing is to be taken for granted. The phones to beat are the Samsung Galaxy S24 and Galaxy S24 Ultra, which come with practical AI abilities and have been well received. So anything that doesn’t at least match the Samsung AI experience will hurt iOS 18’s (and eventually the new iPhone’s) AI score.

Onboard power combined with third-party innovation

What is more certain is that the latest iPhones will be able to run LLMs without relying on the cloud, with benefits for privacy and speed. These would run on the Apple Neural Engine, a processor that runs LLMs smoothly in an energy-efficient way.

However, more advanced AI features such as generating images or longer pieces of text may still require cloud processing, perhaps even using third parties such as OpenAI's ChatGPT or Google Gemini. While Apple has been testing an internal ChatGPT-style chatbot, an analyst has poured cold water on hopes that we’ll see something public in 2024.

Developers have already been given a signal to start thinking about using generative AI to build apps when news leaked about Apple’s next version of Xcode. If this is confirmed, it may become easier for developers to make the leap to creating generative AI apps themselves. It would then be a matter of when, not if, we’ll see AI apps in the App Store.

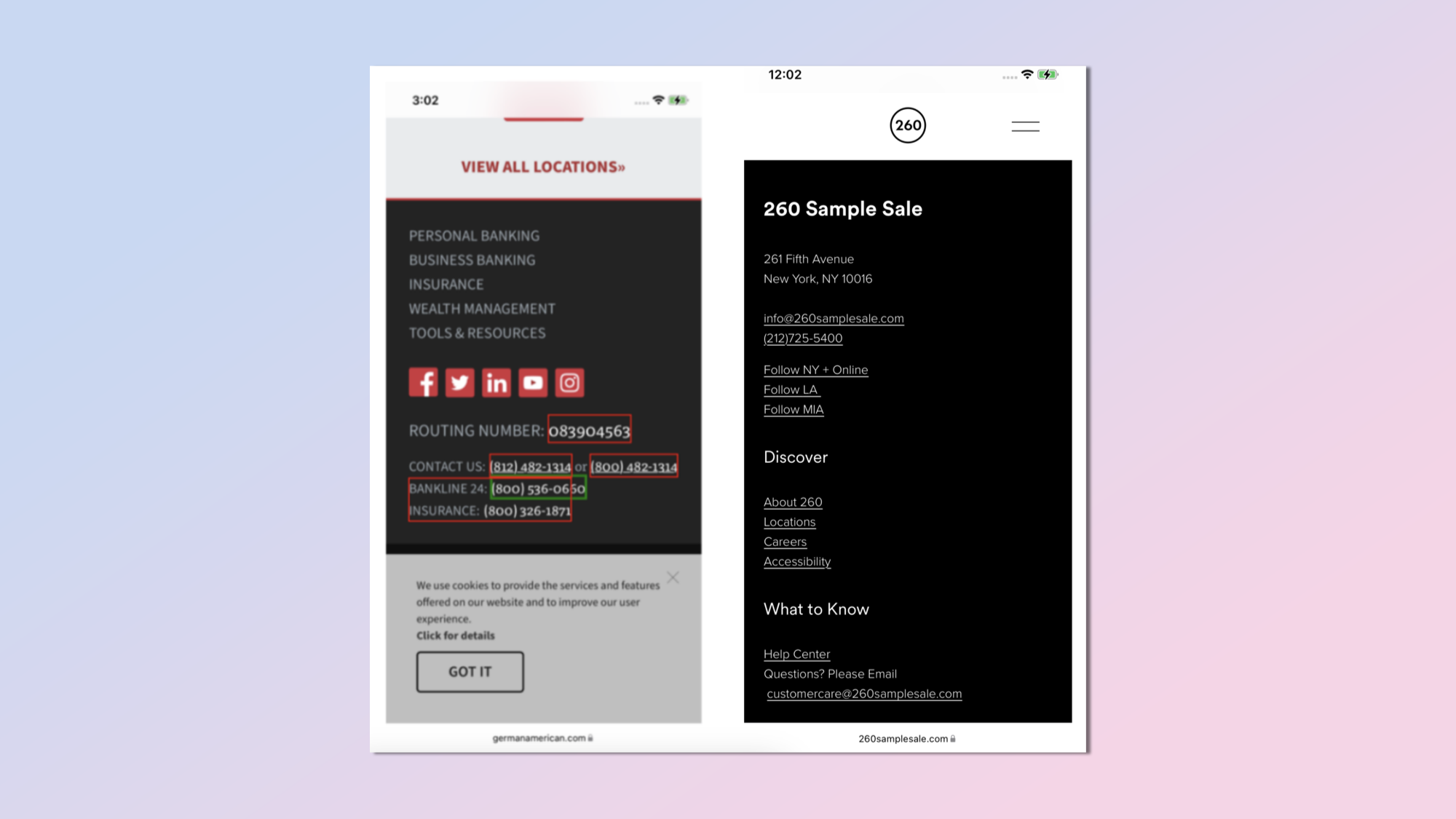

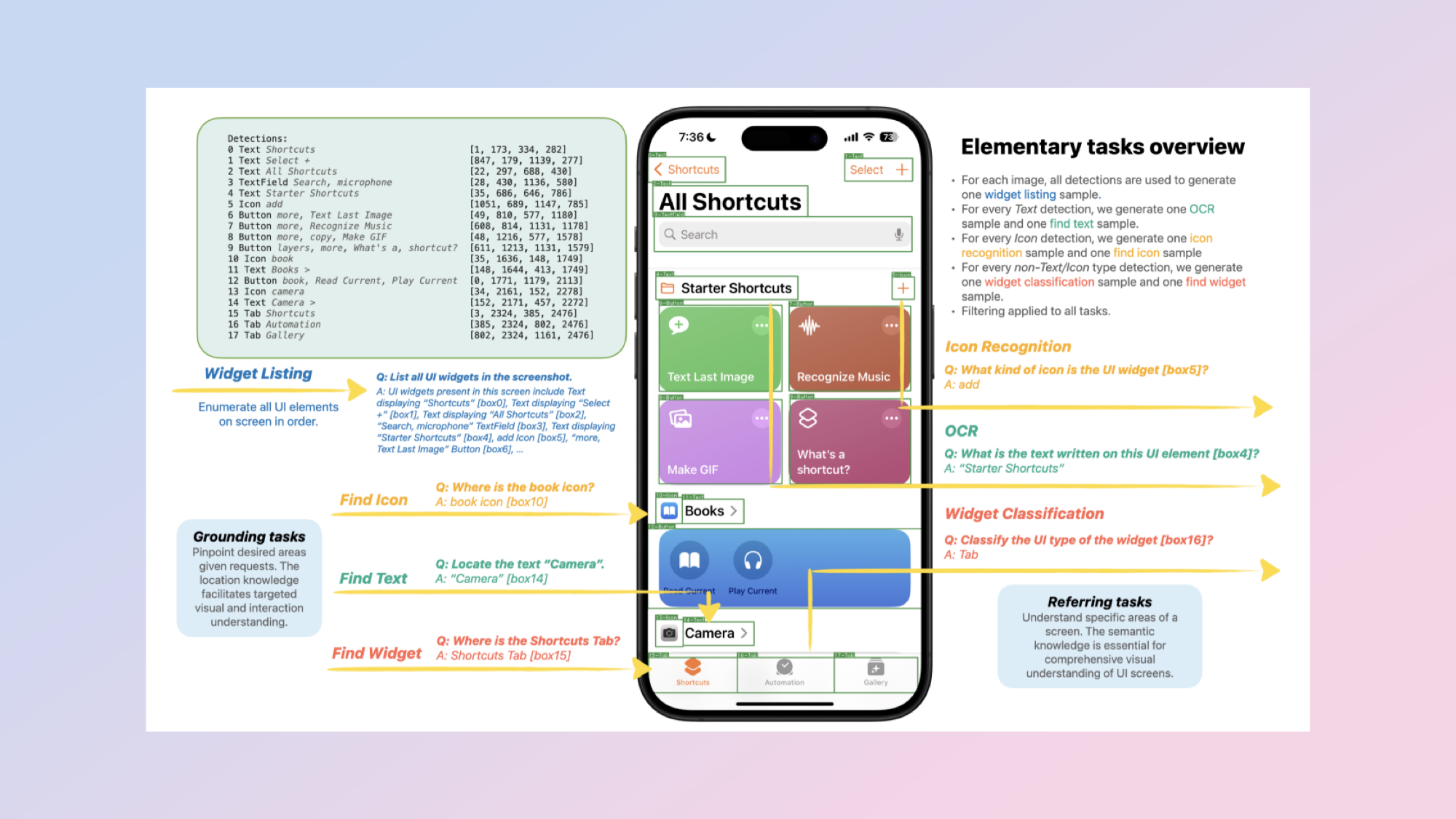

Apple's AI will be able to analyze your screen

The average user is more likely to end up interacting with Apple’s iPhone-screen-reading Ferret-UI LLM, at least for now. In theory, such a model could complement tools like Safari’s ‘Find on Page’ with an ‘Ask this Page’ feature, allowing users to ask Apple’s AI to summarize what’s on their screens, contextualize it, or provide additional analysis. If such a feature is too ambitious, it could be scaled down to focus on improving accessibility for people with impaired hearing or vision.

Similarly, Apple’s new small language model called ReALM (Reference Resolution As Language Modeling) could make Siri 2.0 smarter by helping it to understand context and ambiguous references. It reportedly rivals GPT-4, so if it’s rolled out, you should be able to interact with Siri in ways you haven’t been able to before. Some tasks could even be done autonomously.

Features more likely to debut in June include generating playlists in Apple Music, creating Keynote slides and generating text in Pages or messages.

The road ahead

Now may be a good time to start thinking about whether you’ll want to upgrade to the iPhone 16, which should come with an A18 processor that may be needed for running exclusive AI tasks. However, if the most important new AI features come with iOS 18, older iPhone models should still be able to perform relatively well. They may need to rely more on cloud services, though.

While hype and speculation are rife, things should become clearer over the next few months. What’s certain is that Apple CEO Tim Cook promised investors they will hear more about how the company plans to put generative AI to use before the year is over, a technology he believes could lead to a “major breakthrough”. This year’s WWDC will be one you won’t want to miss.

Christoph Schwaiger is a journalist who mainly covers technology, science, and current affairs. His stories have appeared in Tom's Guide, New Scientist, Live Science, and other established publications. Always up for joining a good discussion, Christoph enjoys speaking at events or to other journalists and has appeared on LBC and Times Radio among other outlets. He believes in giving back to the community and has served on different consultative councils. He was also a National President for Junior Chamber International (JCI), a global organization founded in the USA. You can follow him on Twitter @cschwaigermt.