See how NVIDIA RTX AI PCs can leverage local processing and more with hybrid AI workflows

With AI technologies becoming increasingly prevalent, there are countless ways to access these tools, and it can be confusing to figure out where to turn to get the most out of different AI applications. Some tools run locally on RTX AI PCs, which feature dedicated hardware for on-device AI inference, tapping into the GPU for low latency and secure data handling. Others demand so much processing that only a data center can handle them in a reasonable amount of time. NVIDIA is working to make it easier to find the right home for each of these AI workloads with its hybrid AI infrastructure.

A hybrid AI setup allows an AI model to run in different environments, with easy switching between them. If you start with a small project, you can run the models locally on your RTX AI PC. If you start pulling in bigger datasets or need more processing, the hybrid AI workflow lets you move to a workstation or data center and scale up the resources to fit the task. With hybrid AI, you can use the hardware that makes the most sense at any given time, which gives projects flexibility and scalability.
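To make the idea concrete, here is a minimal Python sketch of the decision a hybrid workflow implies: pick the smallest environment that can fit the job. Everything in it is an assumption for illustration, including the `Target` class, the `pick_target` function, and the memory figures, which are placeholders rather than specifications of any real NVIDIA hardware or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    """A hypothetical place a workload could run."""
    name: str
    memory_gb: float  # illustrative placeholder, not a real hardware spec


# Hypothetical tiers, ordered from smallest to largest.
TARGETS = [
    Target("local RTX AI PC", 16),
    Target("workstation", 48),
    Target("data center", 640),
]


def pick_target(required_gb: float, targets=TARGETS) -> Target:
    """Return the first (smallest) target with enough memory for the job."""
    for target in targets:
        if required_gb <= target.memory_gb:
            return target
    raise ValueError(f"no target can fit a {required_gb} GB workload")


if __name__ == "__main__":
    # A small project stays local; larger ones move up a tier.
    for need in (8, 40, 100):
        print(f"{need} GB -> {pick_target(need).name}")
```

A real hybrid setup would weigh more than memory, such as latency, cost, and where the data is allowed to live, but the shape of the choice is the same: start local and scale up only when the task outgrows the machine.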

NVIDIA already has a host of tools and technologies that work with its hybrid AI systems. These tools cover a broad range of applications as well, including content creation, gaming, and development.

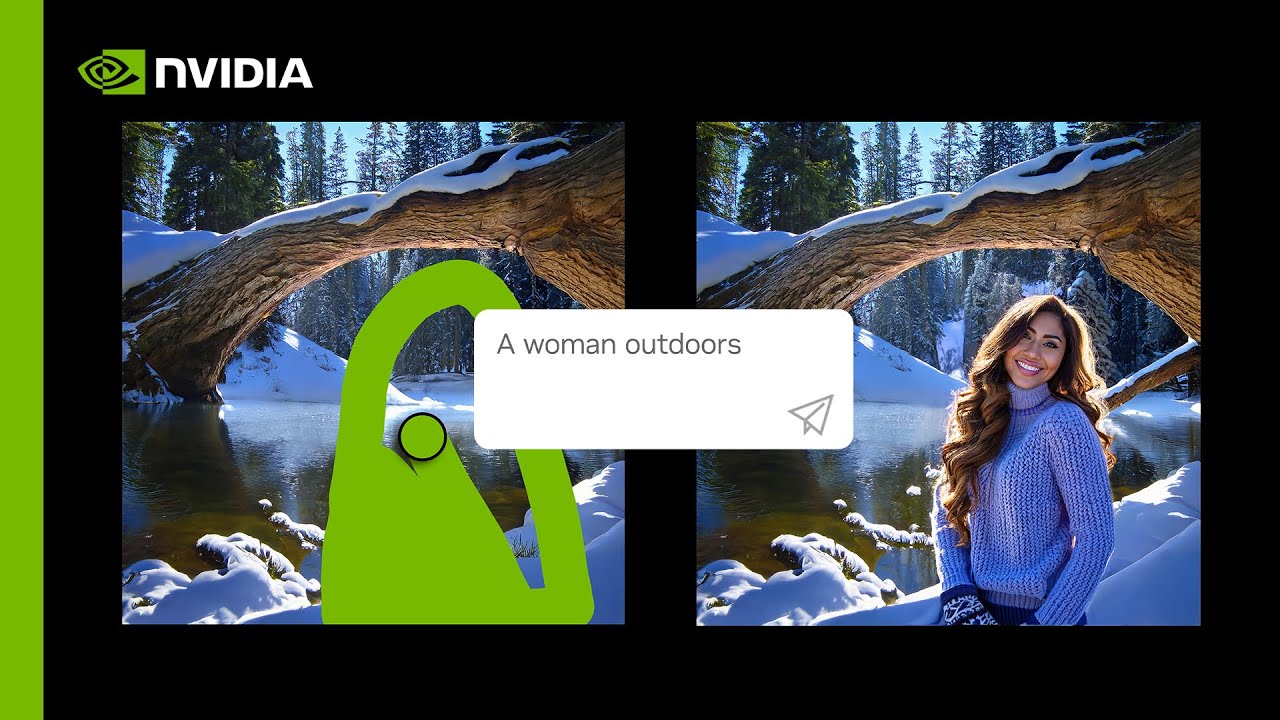

Generative AI with iStock is one tool that leverages a hybrid AI workflow. It uses cloud-based processing to generate visuals, modify them, restyle them, and expand their canvases, using models trained on licensed content. Artists can then take these cloud-generated creations and work on them locally on an RTX AI PC using any of the numerous apps that support AI acceleration.

Taking a different approach, NVIDIA ACE uses generative AI to give game characters a spark of personality and adaptive reactions. Characters can be given backstory, personality, and more. Because the datasets can grow considerably to fit vast open worlds and deep customization, the flexibility of hybrid AI to train on a workstation or server and deploy on local machines helps make NVIDIA ACE faster and more scalable.

NVIDIA AI Workbench takes direct advantage of hybrid AI. This tool simplifies the process of customizing large language models (LLMs) like Mistral-7B and Llama-2. Because each step in a project can call for different resources, NVIDIA AI Workbench makes it easy to quickly move the project between local hardware, workstations, and cloud servers.

To stay up to date with the latest developments in AI and get simple explanations of the complex topics within, keep an eye on NVIDIA’s blog for the AI Decoded series, which explores the nuts and bolts of AI and the latest tools in an easily digestible way. You can learn more about it all here.
