Robots are about to have an 'iPhone moment', and it's all thanks to AI

Technically we're there. We just need to clear these hurdles

Many of our first interactions with robots have involved watching a robot vacuum cleaner negotiate its way around our couch and make perilous journeys across our rugs.

With recent advancements in AI, we may be getting closer to the moment that brings larger and more advanced humanoid robots into our homes at scale.

Long popularized in decades of science fiction novels and movies, humanoid robots would be as practical as robot vacuums but would pair that usefulness with advanced capabilities to interact with us and the rest of the world.

Last week, University of Leicester AI and robotics lecturer Daniel Zhou Hao wrote that giving robots an AI upgrade would bring them closer to what he described as their "iPhone moment," when they break into the mass market. From a technological standpoint, we're certainly getting there.

AI bringing a gear change

Hao explained that large language models (LLMs) are great at processing and using large amounts of data and are key to embodied intelligence — allowing robots to use their limbs with autonomous purpose, just like we control our own bodies.

For this to work, LLMs would need to be able to communicate with visual AI systems to help the robots make sense of what is in the space around them. Hao highlighted Google’s PaLM-E multimodal language model as an example of how such a system would work in practice.

The lecturer predicted that this new generation of robots could be used not only for space exploration and factory assembly but also in our homes, where they could take on tasks such as cleaning, cooking, and caring for the elderly.

Sign up to get the BEST of Tom's Guide direct to your inbox.

Get instant access to breaking news, the hottest reviews, great deals and helpful tips.

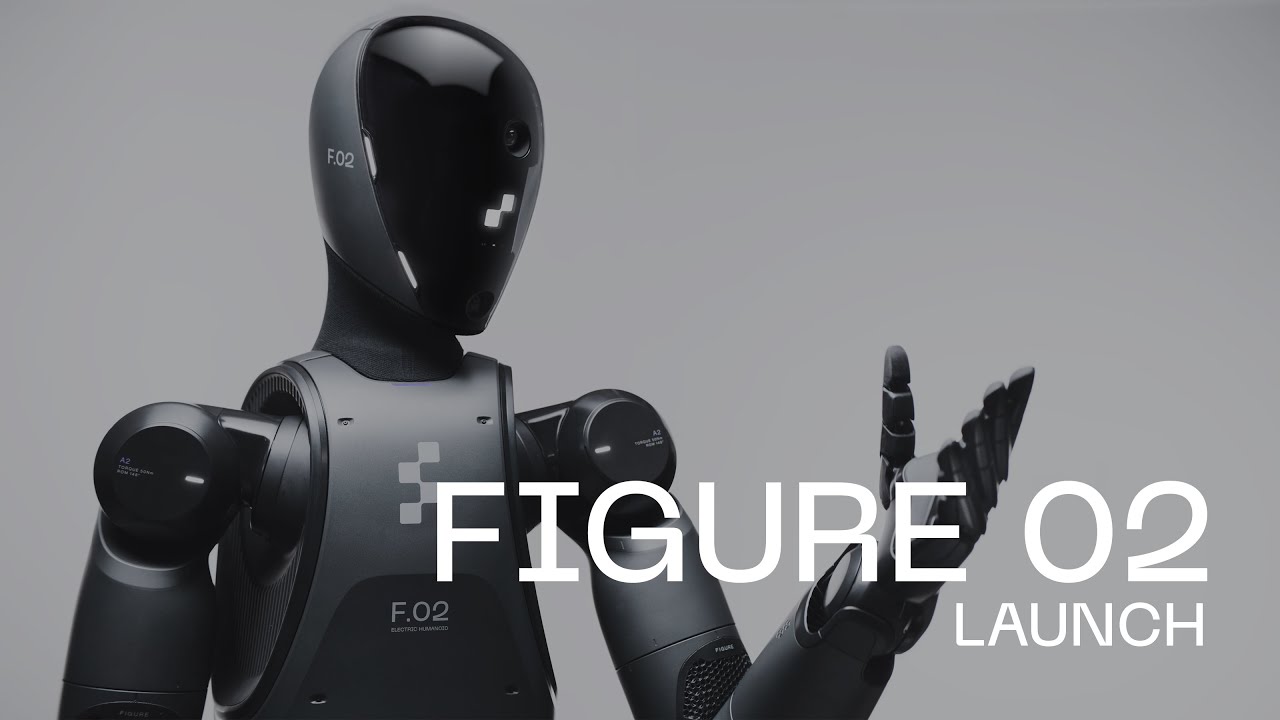

Companies like Google DeepMind, Tesla and Figure are already exploring ways to integrate language models to help robots learn from the real world. Figure's new Figure 02 robot includes OpenAI's GPT-4o for real-time natural conversation.

Hao highlighted Stanford’s Aloha Robot as an example of where the field was heading. The robot is already able to autonomously cook shrimp, rinse pans, and push chairs back in toward a table.

Earlier this year, startup Mentee Robotics unveiled the MenteeBot, which uses computer vision and AI models to interact with the real world. The company pitched it for heavy lifting in the home, as a greeter in a supermarket, or for factory work.

Elsewhere, OpenAI is also backing Figure, the company behind Figure 02, a robot capable of performing everyday tasks and an improved version of the already impressive Figure 01. Meanwhile, Elon Musk is investing heavily in Tesla's Optimus robot.

When will this happen?

Hao made the case that, from a technical standpoint, advanced humanoid robots should no longer be considered a far-fetched dream. The main remaining question is whether, and when, we'll actually be able to buy them.

“While the technological potential of humanoid robots is undeniable, the market viability of such products remains uncertain,” Hao said. “Several factors will influence their acceptance and success, including cost, reliability, and public perception,” he added.

A crucial hurdle, Hao argued, is ensuring that the robots' benefits outweigh their costs.

Lastly, as with much of AI technology, there are ethical considerations. Who will have access to the data a robot collects during private moments of our lives? Could these robots displace jobs?

The robotics lecturer said that, as we stand on the brink of this technological frontier, we should consider not only what is possible but also what we want our future to look like.


Christoph Schwaiger is a journalist who mainly covers technology, science, and current affairs. His stories have appeared in Tom's Guide, New Scientist, Live Science, and other established publications. Always up for joining a good discussion, Christoph enjoys speaking at events or to other journalists and has appeared on LBC and Times Radio among other outlets. He believes in giving back to the community and has served on different consultative councils. He was also a National President for Junior Chamber International (JCI), a global organization founded in the USA. You can follow him on Twitter @cschwaigermt.