Meta Connect Live: Meta Quest 3S, Orion holographic glasses and biggest announcements

Here's everything that got announced at Meta Connect 2024

Meta Connect 2024 has just finished, and this was one for the ages, to say the least. The leaks proved true, with Quest 3S, Meta AI updates, Ray-Ban Meta AI upgrades and Orion all being announced, but their significance cannot be overstated.

We are currently just processing everything that was announced, so keep it locked for our analysis of these huge developments and any other announcements that may be coming out of the more specific developer keynotes.

Put simply, Meta has locked down a lot of holiday purchases of mixed reality headsets with the $299 Quest 3S, and the company is indeed looking to the future with some huge bets on what computing will look like.

Meta Connect 2024 Biggest Announcements

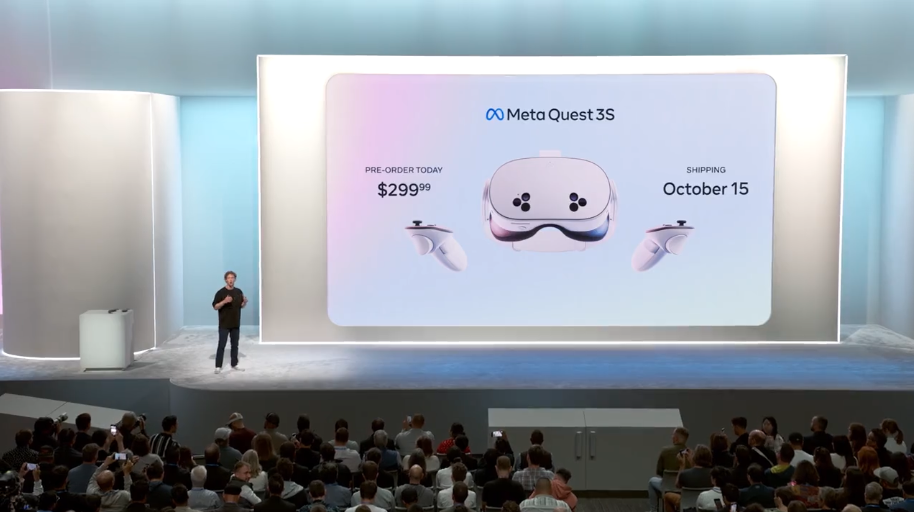

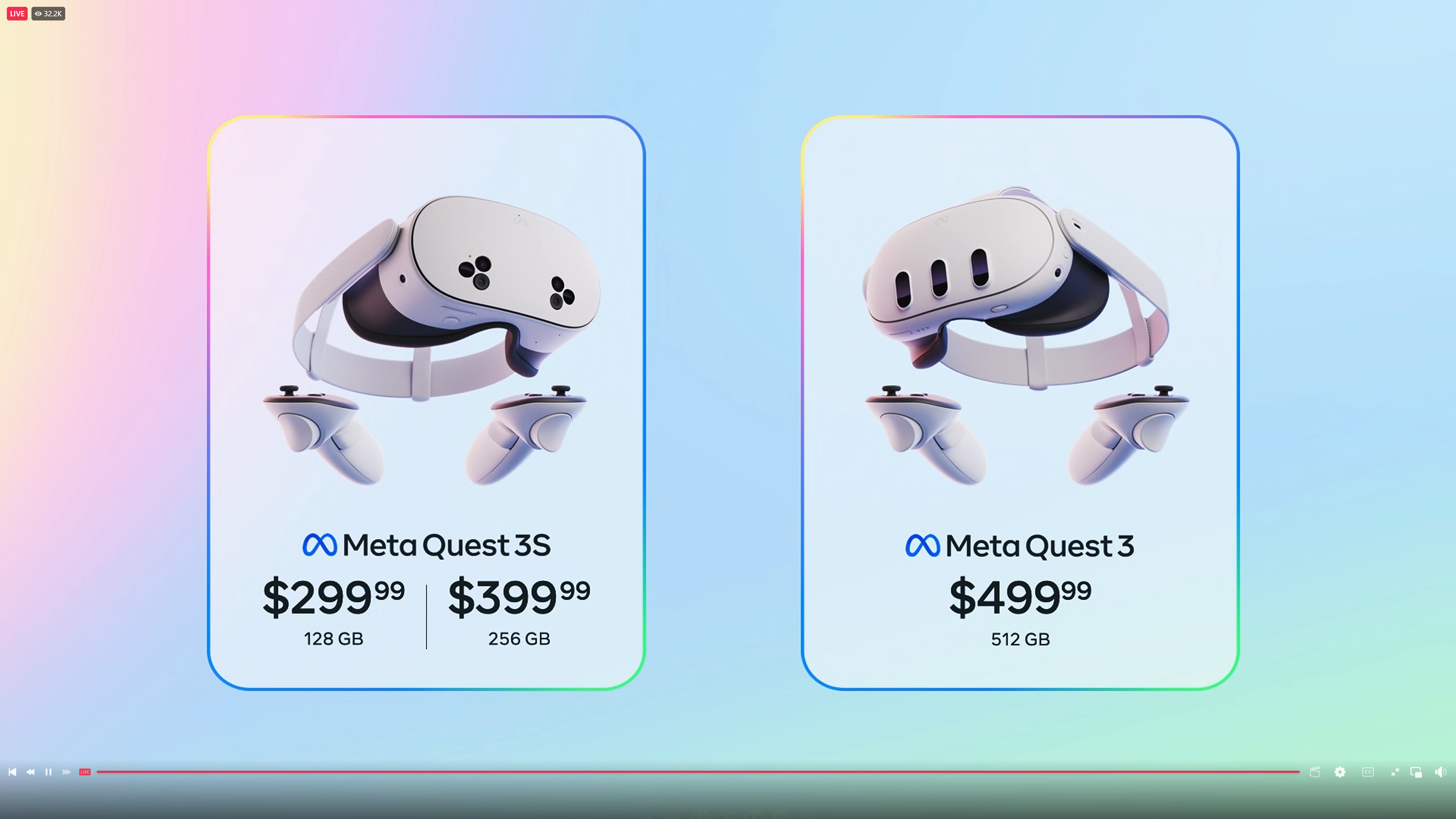

- Meta Quest 3S: $299 for 128GB and $399 for 256GB - with a free copy of Batman: Arkham Shadow

- Meta AI with Voice: natural conversational AI via the multimodal Llama 3.2

- Huge AI updates to Ray-Ban Meta glasses: live translation, natural conversation, and they can remember things too!

- Orion smart glasses: Full holographic AR glasses, and we are blown away by their capabilities.

Watch Meta Connect 2024 live

Welcome to the blog!

Welcome to the Meta Connect 2024 live blog! We're kicking off a little early to go through some details of what to expect!

First off, the Meta Quest 3S. This cheaper headset should replace the Meta Quest 2 (we've seen this older headset go out of stock everywhere), and the price looks set to be $299.

What Ray-Ban Meta updates do we want to see?

There are a lot of rumblings about Meta AI getting some big upgrades at Connect, and these should be coming to the glasses too. We could see these become the ultimate piece of AI hardware if the Zuck announces 5 key things!

What about Project Orion?

Yes, the picture I'm using here is of the RayNeo Air 2s, but that just sets the baseline for how much of a leap forward Project Orion would be.

Simply put, think of the Ray-Bans but with an AR display and wireless connectivity to give you that blend of digital and physical. There's been a lot of buzz, and with the successes Meta is seeing in mixed reality headsets and glasses infused with AI for a display-free augmented reality, the Zuck and co could be in the best position to make something big of these.

Meta Quest 3S has been leaked a few times before the event

Leaks are very commonplace for our industry, and Meta ended up seeing its new low-cost headset revealed this way too. The Quest 3S was accidentally posted on the Meta Store (then deleted), and most recently, an Amazon ad went live too early.

What these leaks showed us was the design you see above, a bit of a peek at the specs (basically, the same Snapdragon XR2 chipset but with a weaker display and smaller battery), and a $299 price.

That last one would be huge for getting more people to jump into Mixed Reality!

I'm hoping Quest 3S gets Travel Mode — it's a game changer!

Travel mode on Quest 3 is something I got to test recently during my trip to Computex. And while I can't speak for the improvements that came to the Apple Vision Pro's equivalent mode in visionOS 2, at the time, Meta stood head and shoulders above the Cupertino crew with its implementation.

From the rigid anchoring of windows and recognition of surfaces even under turbulence on this 15-hour flight, to the capabilities of what you could actually do offline, it makes any flight go by in a flash and I hope it comes to the 3S too!

New avatars could be coming to the metaverse

Of course, Connect 2024 is going to be about a lot of big things, but some small but significant developments look set to be announced. One key one has been leaked ahead of the event, which shows we may be getting an overhaul to Meta Avatars.

Reported by UploadVR, a new pop-up appears when editing your avatar in the Meta Horizon mobile app, which tells you that these new-look avatars are "landing soon." This is a very strong indicator that we'll see these at Connect — roughly a year later than was promised by the Zuck. But hey! Better late than never!

Could Meta AI get its own Advanced Voice Mode?

Earlier this year, we were wowed by GPT-4o and its Advanced Voice Mode — allowing you to have significantly more natural, free-flowing conversations with AI.

Well, with Connect nearly here and significant announcements around Meta AI being touted, could this be the time we see something similar coming to the various models underpinning the company's bigger efforts?

This could be huge for using your Ray-Bans on-the-go — giving more natural prompts to find out more about the world around you or taking more direct action on your behalf as agentic AI. The possibilities are endless.

Let's talk about the Meta Quest 3S' design

As you can see, if we put the Quest 3 alongside the Quest 3S, you can spot a lot of differences in the overall design between these two headsets.

But the main question is how many of these give the game away on what compromises Meta will make to drive the cost down to the 3S' $299 price. First, it looks as if the number of mixed reality sensors across the front is set to decrease. How that will impact the user experience, we can't be sure.

Meta Reality Labs - Project Ventura/Panther. Meta Quest 3S - Final Design. pic.twitter.com/6Tc9ig2hJo (August 11, 2024)

On top of that, this headset does seem to be a bit bulkier in construction compared to the Quest 3 too — probably to house a larger, cheaper display array and lens construction.

However, one interesting new element not found on the standard Quest 3 is that new button underneath the volume switch. It could be the case that we may see an iPhone 16-esque Action button that could be programmed to any function.

For more, we've done a comparison between the two options based on the leaks and rumors we've all seen so far!

Could we see an "Xbox-inspired" VR headset running Meta Horizon OS?

Back in April, Meta revealed it was opening up its OS to third parties, the most exciting of these being Xbox. Dubbed “Meta Horizon”, this mixed reality operating system will run across a variety of limited edition VR headsets.

Could we catch a glimpse of one of these third-party headsets at Meta Connect 2024 today? Keep those digits crossed.

A Meta blog post from April confirmed we would see new headsets from both Lenovo and Asus ROG, yet it’s the Xbox one that intrigues me the most.

It’s been possible to play some of the best Xbox Series X games on large screens in mixed reality since Meta teamed up with Microsoft to bring Xbox Cloud Gaming to Meta Quest. And the knowledge that Meta are actively “working together (with Microsoft) to create a limited-edition Meta Quest, inspired by Xbox” is a downright salivating prospect.

Just stick a massive Master Chief helmet on the front, make sure there’s a “117” inscription on each of this Xbox headset's straps and you can have all my money, Meta.

Snap Spectacles vs Meta Orion AR: who wins the AR glasses face-off?

In September, Snap unveiled the next-gen version of Snap Spectacles. Bringing enhanced AR features its predecessor lacked, it’s set to wage war on Meta's Orion AR glasses. And with features that include letting you watch Snapchat videos on the equivalent of a 100-inch screen right in front of your eyeballs, Snap’s specs certainly sound like they stand a chance in this conflict. Though your optometrist is probably going to be livid.

The revised frame of the new Spectacles houses a duo of Snapdragon processors, four cameras that focus on hand-tracking, and vapor chamber cooling. Sounds cool, right? Sadly there’s a big ol’ catch.

Currently, Snap is only making its new AR glasses available to developers, for the eye-watering price of $99 a month on a minimum 12-month contract. Ouch. Though you won’t be popping them over your nose for the foreseeable, know that Spectacles run on Snap’s own OS platform and support voice commands, while battery life is said to hover around 45 minutes.

How will this Snap Spectacles vs Meta Orion AR glasses face-off play out at this year’s Meta Connect? We’re unsure, but we can’t wait to find out.

The Bat is out of the bag as Walmart leaks key Meta Quest 3S specs

Ever since Meta aired its VR Games Showcase last month, we’ve been wondering which, if any, of those 15 titles could be included with the Meta Quest 3S, which we fully expect to be shown off later today at Meta Connect 2024. Now, a leaked image of a Walmart display seems to have cleared that question up.

It looks like Batman: Arkham Shadow will be bundled with both the Meta Quest 3 and Quest 3S going forward. Well, that's provided the info in the leaked image, which was taken by Reddit user "CaptainKenway1693" (thanks, UploadVR), is accurate. Let’s hope The Dark Knight’s new VR quest can remove the bad taste Suicide Squad: Kill the Justice League left in so many Bat fans’ mouths.

The Walmart display also seemingly confirms the specs of the upcoming Meta Quest 3S. The resolution of the headset will be 1832 x 1920 pixels per eye, compared to the 4K resolution you get with the Meta Quest 3.

The new headset will offer either 128GB or 256GB of storage, with rumors that the Meta Quest 3S price will start at $299. That’s certainly a whole lot cheaper than the existing Quest 3, which starts at $499.

Could Ray-Ban Meta glasses get a hardware refresh? I'm not sure

Personally, I love my Ray-Ban Meta glasses - the AI feature set grows with every incremental update and it legitimately came in clutch when I was on vacation in Costa Rica.

But there have been some rumblings in the rumor mill that at Meta Connect later today, there will be more than just software update announcements - there could be a hardware refresh too. Namely, some sources have reported a small AR display appearing on these for glancing at notifications.

Well, not to be the party pooper, but I just want to stop that talk dead in its tracks and simply say I don't think this will happen — at least this year. You've got a lot coming from Project Orion on this, which will be kept to just a developer level while they all figure out software support.

Instead, for the consumer-facing side of these specs, we'll see more impactful AI updates to change how you use these glasses going forward. Here are five I want to see!

What are going to be the big topics (based on looking at the developer keynote schedule)?

When it comes to events like this, you can start to draw some general conclusions on what may be talked about in the main event by taking a look at the developer-centric keynotes that are happening afterwards.

It's not a perfect science, but since Meta Connect's announcements have basically been leaked extensively, we can start to tie some threads together. Here are 3 things that jumped out at me.

- "Learn about Meta Quest devices." - the most obvious slam dunk sign is that they're not specifying a product number here. It looks more and more dead set that we'll get Quest 3 and 3S side-by-side.

- "Introducing the new Meta spatial app framework." - this seems to be more of a deep dive into the way that Meta's development platform for making spatial apps works. While it talks about Meta Quest, I wouldn't be surprised if Zuck announced that the Orion project specs run Horizon OS, and devs get introduced to a new, augmented style of apps.

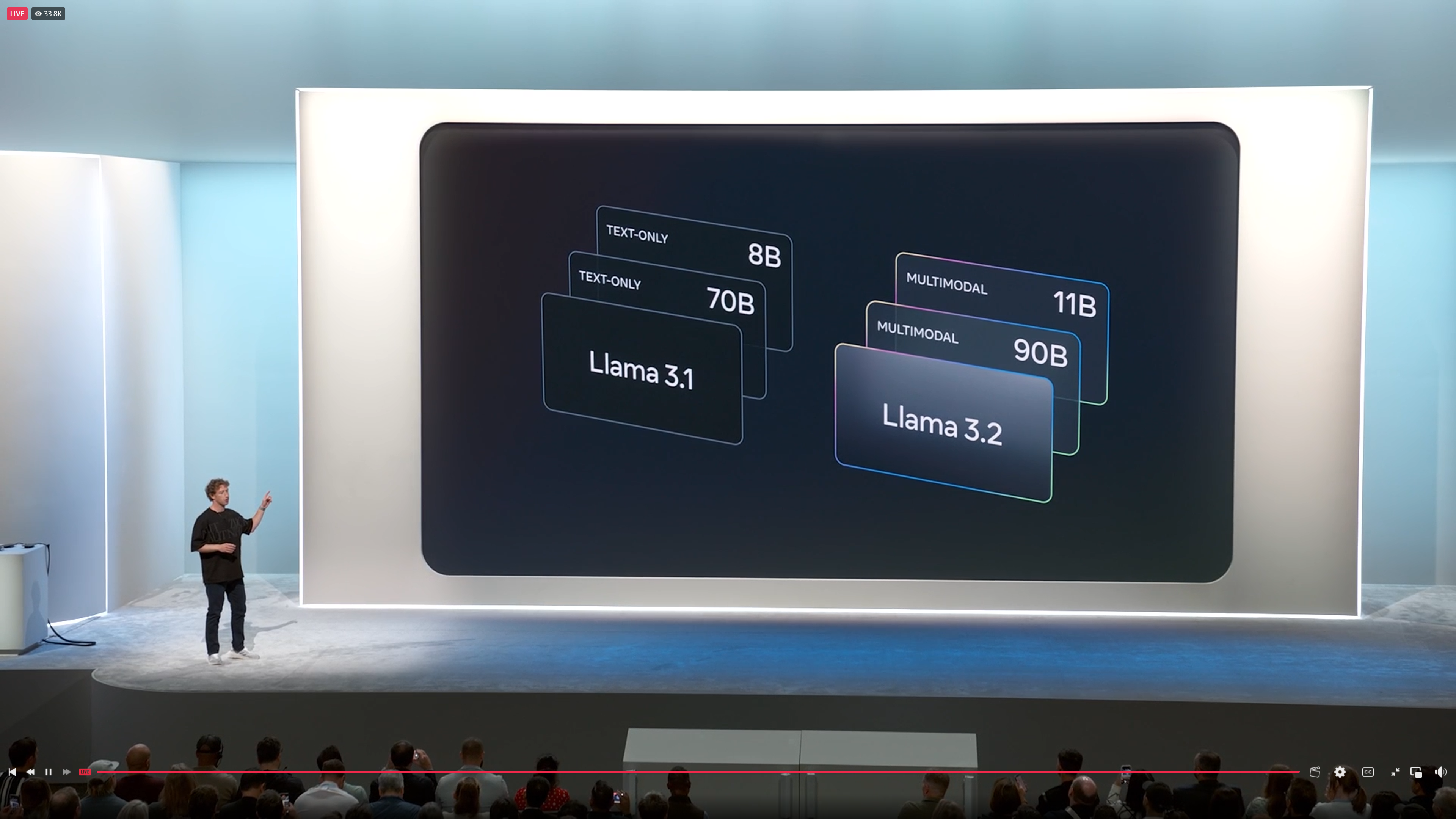

- "How Meta technologies are built with Llama models." - It's already a given that Llama runs through all of Meta's apps. But I have no doubt that mentions of the 405B model and reasoning capabilities suggest we'll see more doors opened in Meta AI.

Why natural language interaction would be huge for Ray-Ban Meta smart glasses AI

The next big frontier for AI has been natural language interaction. So far, you've had to frame your questions essentially as the kind of prompts you'd type into the likes of ChatGPT.

But more and more, companies like OpenAI and most recently Rabbit with its new Large Action Model (LAM) have been dabbling with reasoning and logic trees to make sound conclusions on what you mean if you were to ask a question that vaguely relates to whatever you may be requesting.

So far, Meta AI on the Ray-Bans has been good, but you still need to remember the trigger phrases such as "Hey Meta, look and..." followed by your query. If Meta's AI models get this conversational aspect built into them, it could be huge for how you could just have a back and forth chat with your glasses about whatever you're looking at. And most importantly, turn that into action.

This is one of a few things I'd love for Meta to announce on stage at Connect.

ICYMI — the Quest 3S was leaked in a store display

If you're just joining us on the countdown to Meta Connect 2024, welcome! One thing already seems to be a dead cert for an announcement, which is the Meta Quest 3S. And we say it's a certainty because a Walmart display of this headset has already been leaked.

Here's what we know about it based on this shop display:

- The included game will be Batman: Arkham Shadow (I called it a few days ago while talking to the guys).

- The Quest 3S will have a slightly lower per-eye resolution than the Quest 3 - 1832 x 1920 pixels compared to the latter's 4K resolution.

- Pricing looks set to start at $299.

How to watch Meta Connect 2024

It's a good question that Meta keeps hella vague in the run-up to Connect itself. According to the company, you can head over to the developer Facebook page and see the live stream.

However, a reliable source of where you'd actually see the stream is on Meta's broader Facebook page here. Once it goes live, we'll embed it up top!

What direction will Llama be heading in?

Meta's Llama LLM (large language model) has been making big strides forward over the last 12 months. Llama 3.1 is probably the biggest release so far, thanks to the efficiency gains it brought and a flagship model that weighs in at 405 billion parameters.

You're already using it in the form of Meta's AI chatbot in WhatsApp (available in the US only), as it gives you access to image generation and other features.

So the big question is, where does Llama go next? Meta's done a lot of work on the back end to make your text prompts feel natural with the model, so my prediction would be to see this move over to voice as well. Maybe even speaking directly to WhatsApp and having the conversation logged.

Meta Quest 3S: Final rumors

We're less than an hour from the start of Meta Connect 2024, which means we're about to hear all of the good stuff about the Quest 3S. The most recent rumor spilled many of the beans, with a store display spelling out most of the specs. Here are the final specs we expect from the Meta Quest 3S based on the leaks and rumors:

- Price: $299

- Processor: Qualcomm Snapdragon XR2 Gen 2

- Resolution: 1,832 x 1,920 per eye

- Refresh rate: 120Hz

- Included game: Batman: Arkham Shadow

- Controllers included: Yes

- Storage: 128 and 256GB options

- Design: Cameras in small triangular clusters on both sides

Those are the key details we've had leaked so far, and they've all come from reasonably reliable sources, so we're confident they're accurate. Of course, there's always a chance Meta could throw a curveball, and that's why it's essential to stay tuned to the live blog as Meta officially announces the headset.

Ray-Ban Meta smart glasses: Final rumors

As the event approaches, your mind can't help but wander toward the Ray-Ban Meta smart glasses. Sure, a new VR headset is exciting, but AR glasses that are actually wearable and stylish sound promising. We really liked the last version of the Ray-Ban Meta smart glasses, so we're eager to see what the companies have changed.

There are a few rumors regarding the Ray-Ban Meta smart glasses and what kind of new features they'll get. Here are the key things we think Meta will announce about the glasses:

- New color options and styles

- New Meta AI software powering the glasses

Project Orion AR glasses: Final rumors

We're unsure whether Zuckerberg and company will show off their Project Orion AR glasses, as the latest rumors suggest we're still far away from seeing them. Some early speculation suggests they'll run Horizon OS with support for various apps and augmented reality features.

In the end, it sounds like these could be a cross between the Ray-Ban glasses and an AR headset. Hopefully, Meta's glasses are something like the Snap Spectacles in terms of features, but more visually appealing.

Five minutes!

The event starts in just five minutes. We're going to keep updating as Meta announces new information. Whether you're excited about virtual reality, AI, AR or anything else Meta could bring to the table, we'll keep you posted.

If you want to watch the event, you can see it below. If you want the latest info without watching for yourself, simply refresh this live blog.

Fashionably late

Looks like we've got a short delay going on here. For now, just enjoy the rather zen music, which almost sounds AI generated (won't say for certain though).

1 minute to go... finally!

So after one countdown and a whole long delay, we are now finally in a second countdown to the event. Our global Editor-in-Chief summed it up best.

And we're live!

The Zuck is taking the stage. Let's see what he has to say!

Meta Quest 3S announced!

Right off the bat, Mark has announced the Quest 3S, launching October 15 for $299! It will sport the same processor as Quest 3, and the pancake lenses have been swapped for Fresnel lenses to reduce the price.

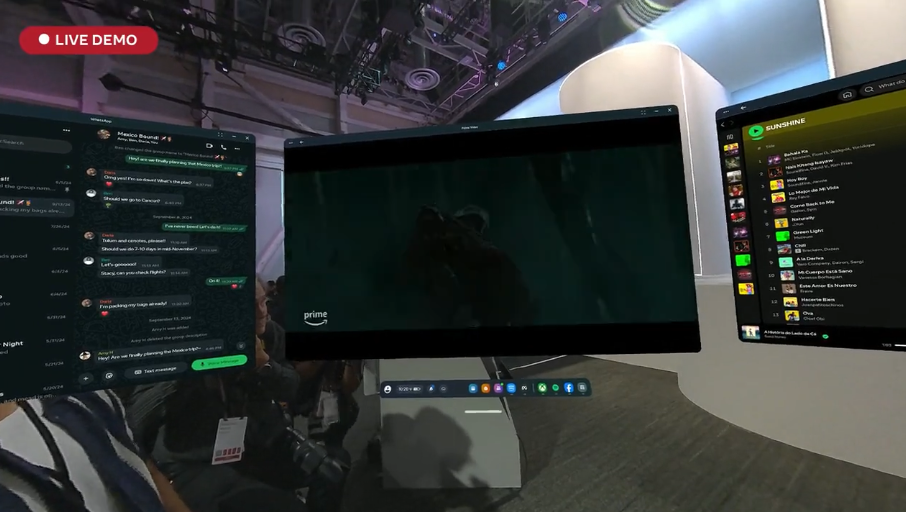

Here is how sharp the picture is, thanks to a live demo. Plus, Dolby Atmos surround sound is coming to the headset too! Check out our news story all about Quest 3S.

Windows support is coming to Meta Horizon! We've got multi-screen support and the ability to interact directly with what's happening on your monitors.

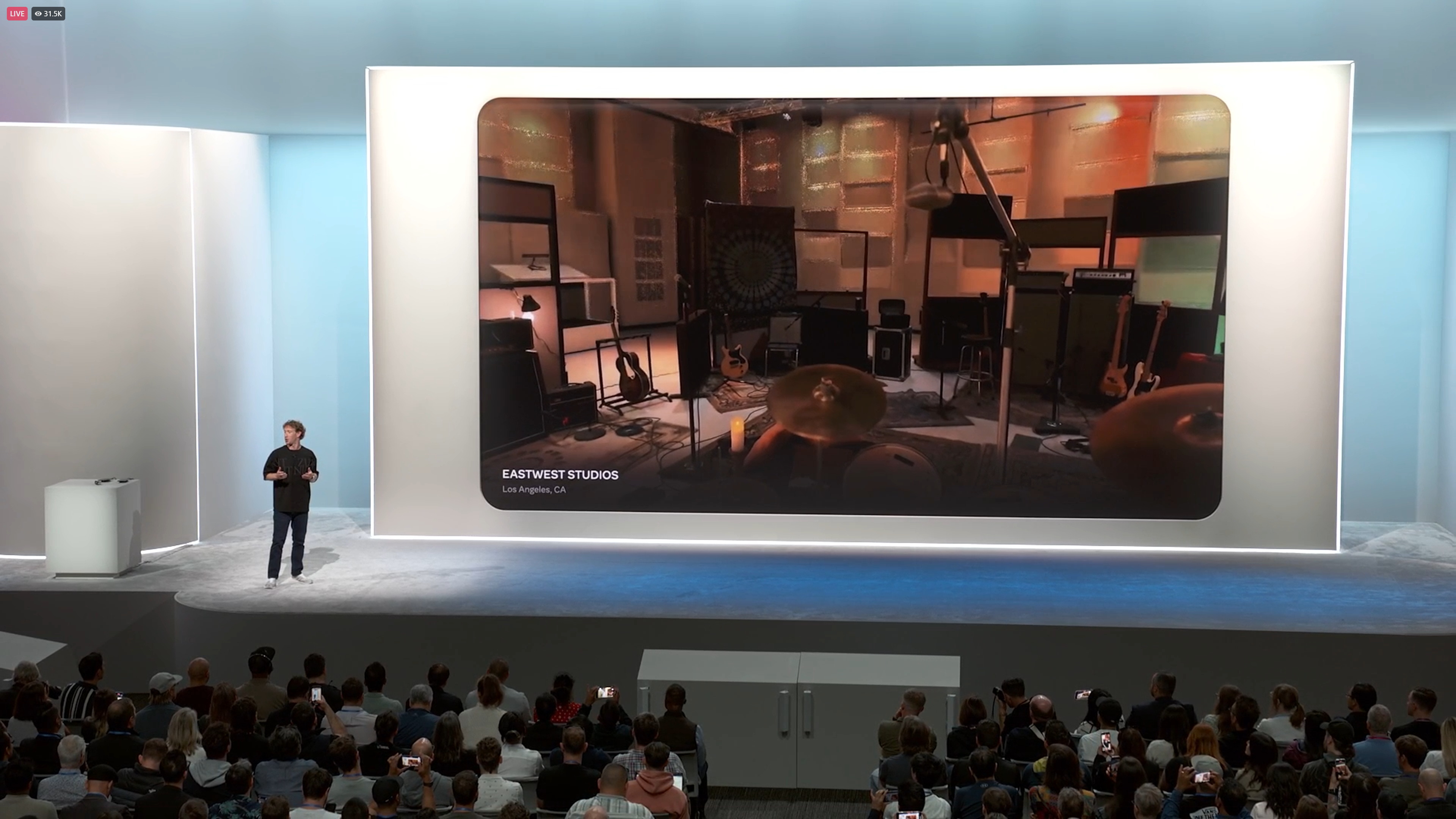

Introducing Hyperscape - this is seriously cool!

To create even more realistic metaverse settings, Meta has introduced Hyperscape. All you have to do is scan the room you're in with your phone, and you can sit within it in your headset.

Here comes the multimodal AI model from Meta, named Llama 3.2!

And here comes interacting with voice! Not only that but Meta's adding some celebrity voices such as John Cena and Awkwafina!

BTW we ran Zuckerberg's tee through Google Translate, and it says "either Zuck or nothing." ...take from that what you will!

Wow this is moving fast! Almost forgot to post this. Here is the pricing for the Quest 3 family heading into the holidays. Meta's making a big bet on these being a huge hit!

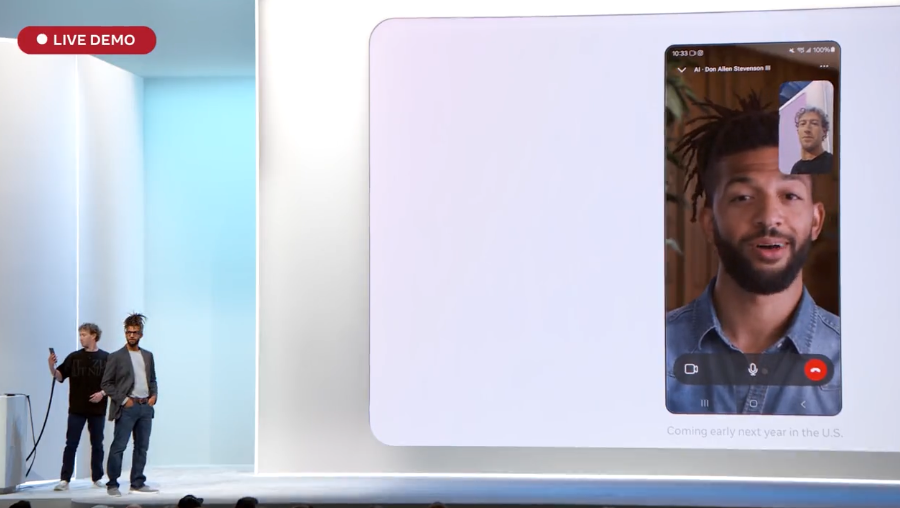

So this is a little weird...

Here we have a live demo of an AI version of Don Allen Stevenson answering your questions. It's a rather weird one that gives serious uncanny valley vibes. Turns out he doesn't know about agriculture.

Meta takes on HeyGen with AI translation

We've seen a lot of translation work from other AI companies, but this is a new dimension of it, which has only been hit by HeyGen.

Instead of just translating the voice, it will also map lip movements to it. Seriously impressive!

Llama 3.2 is a rather large multimodal model! For comparison (ahead of us talking about the updates to Ray-Ban Metas), Apple Intelligence is a 3B parameter model, whereas the smallest multimodal Llama 3.2 model is 11B.

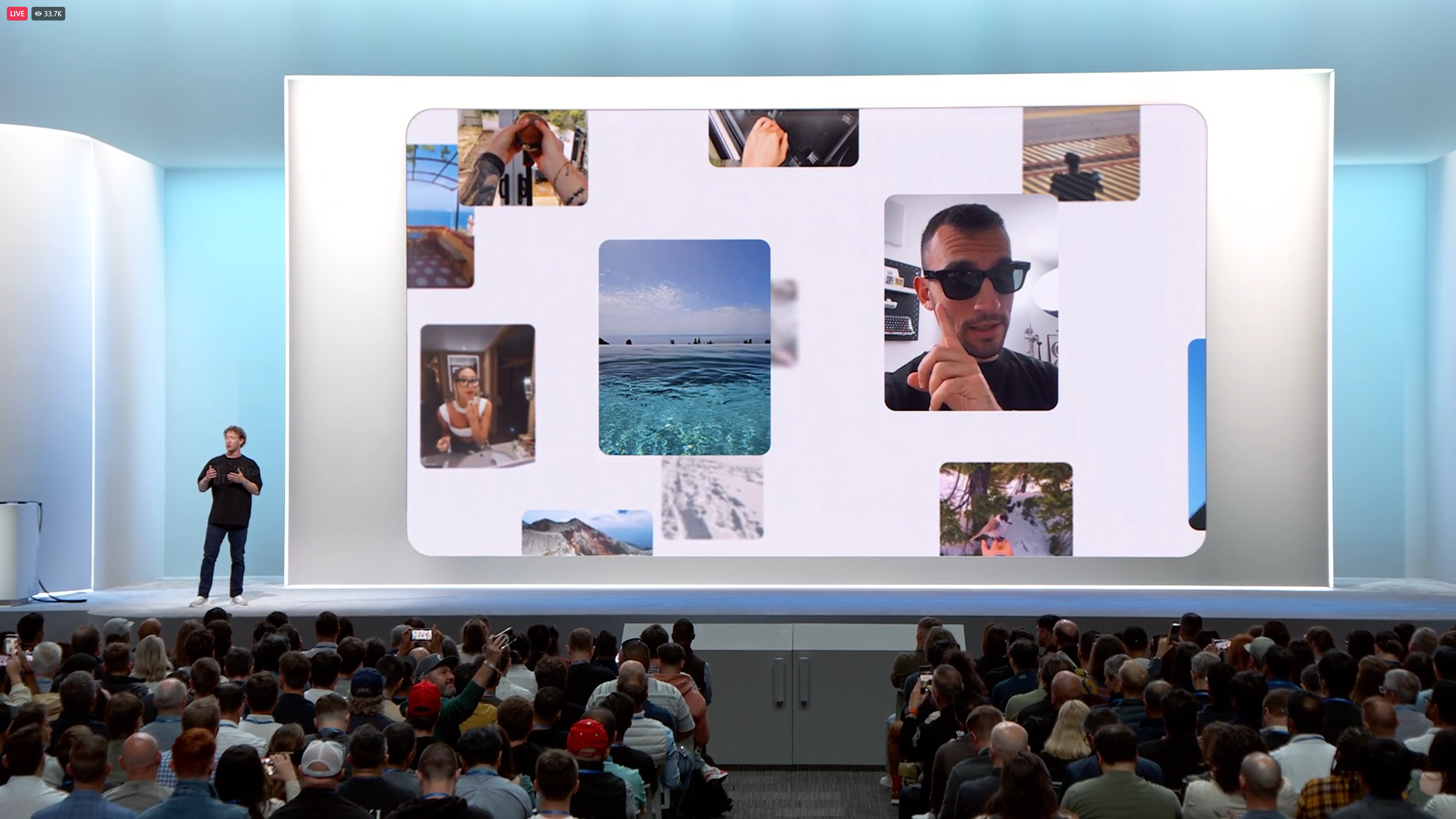

Time for Ray-Ban Metas

Integrations with more apps such as iHeartRadio, and there are some big AI upgrades coming too! Stand by...

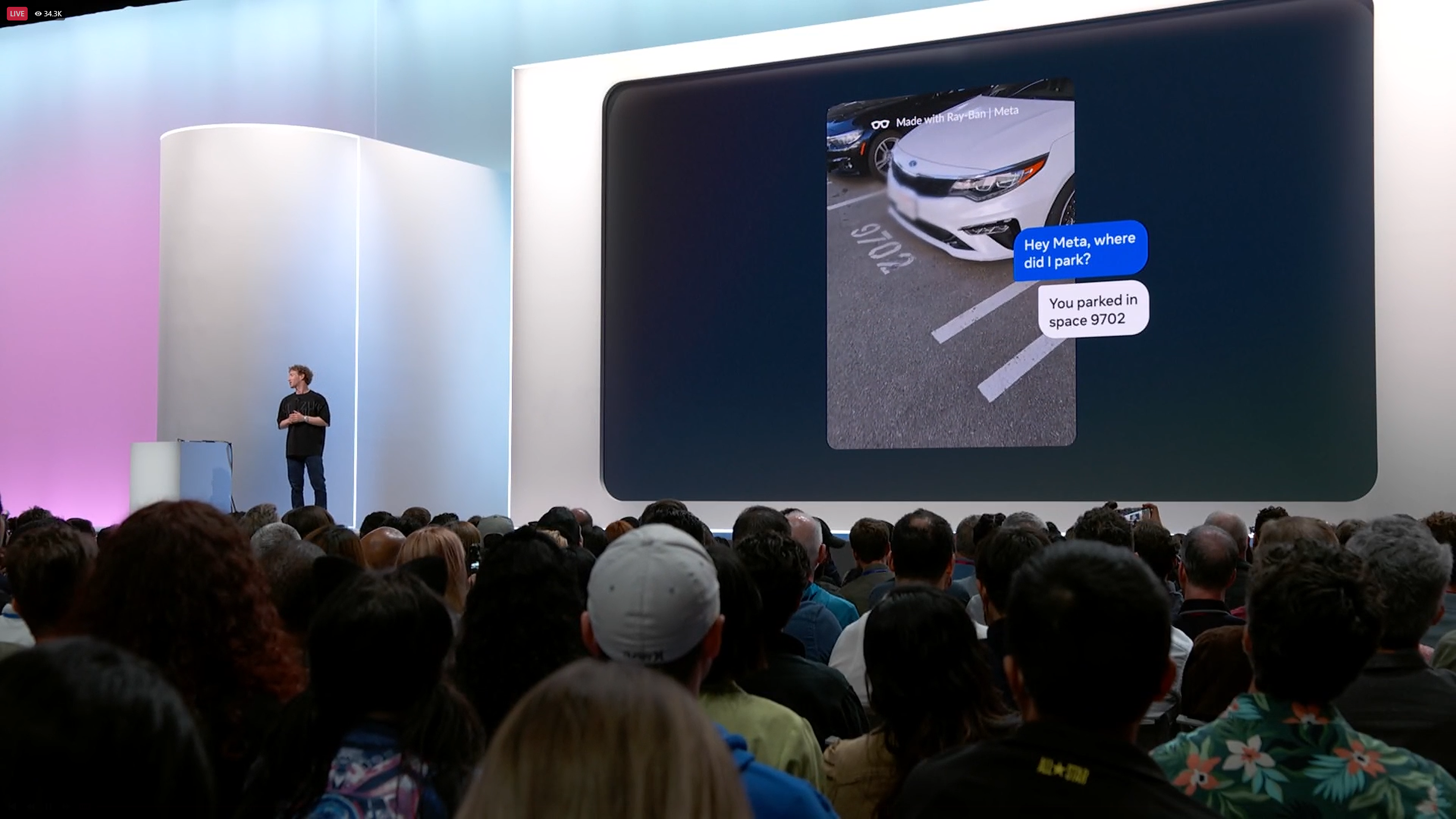

It is now more natural and conversational

Instead of having to say "Hey Meta, look and..." you can just ask a natural question without remembering this prompt. Plus, you can ask follow-up questions without having to say "Hey Meta" again.

Your Ray-Bans will be able to remember the location of items too with a simple prompt!

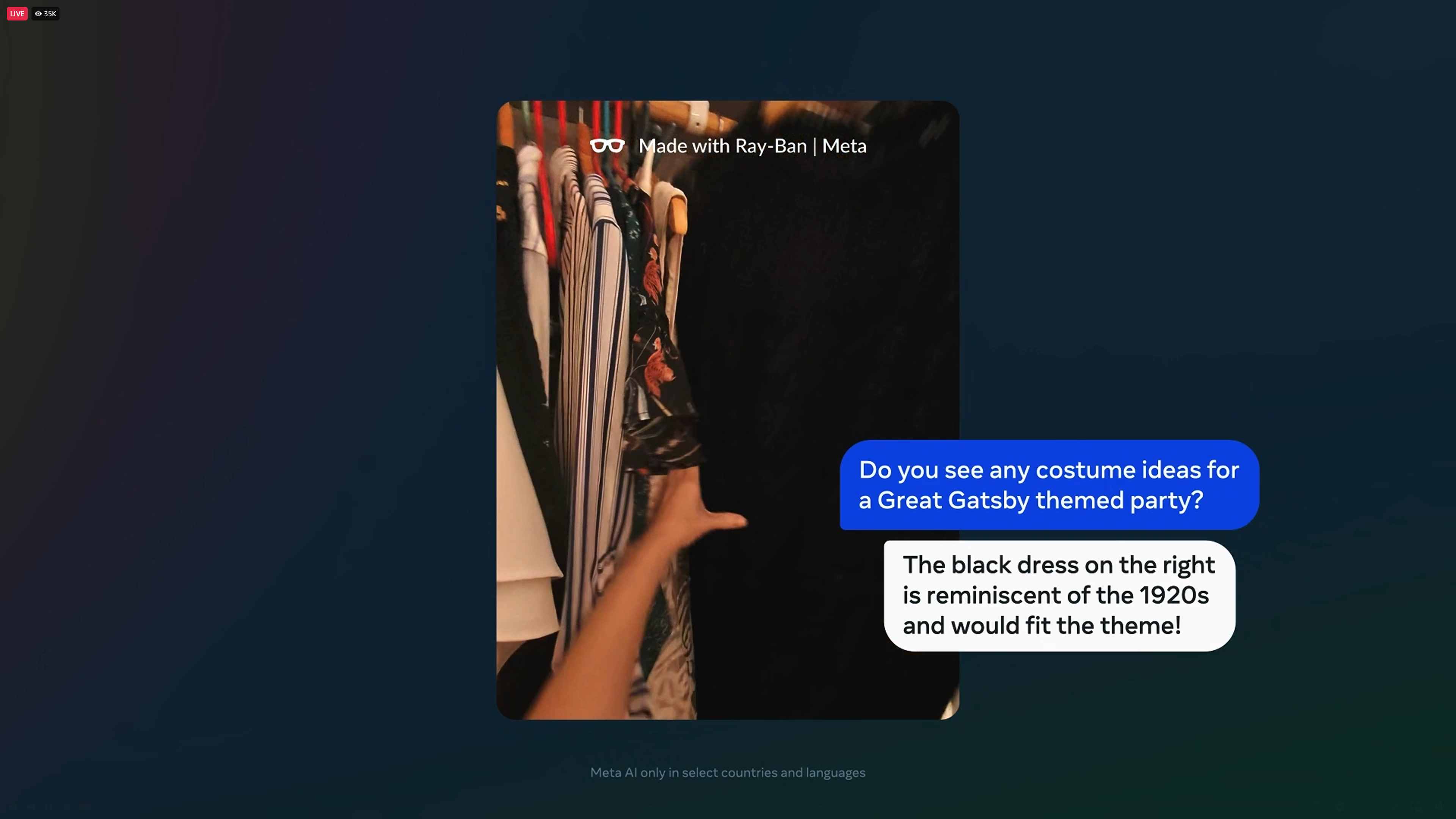

OK, this is HUGE!

Multimodal video AI will be able to actively give you advice through video — as pointed out here by directing the shopper to a different dress to the right!

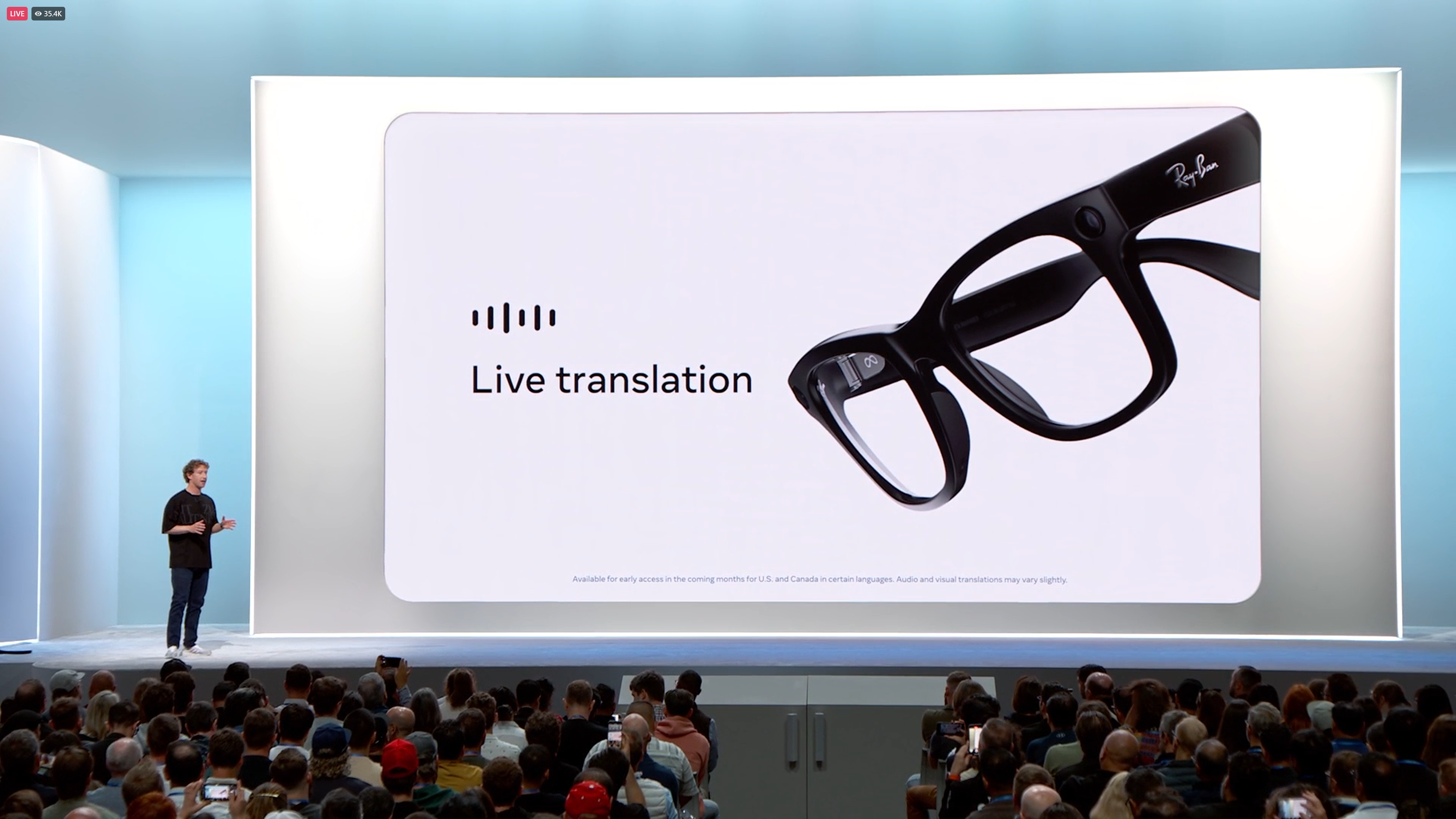

Live Translation just blew the Tom's Guide team away! In a demo, we saw a conversation in both English and Spanish. The responses were rapid and the translation was swift between the pair. And on top of that, you can see Meta AI continue to translate and speak over the top of Mark saying more.

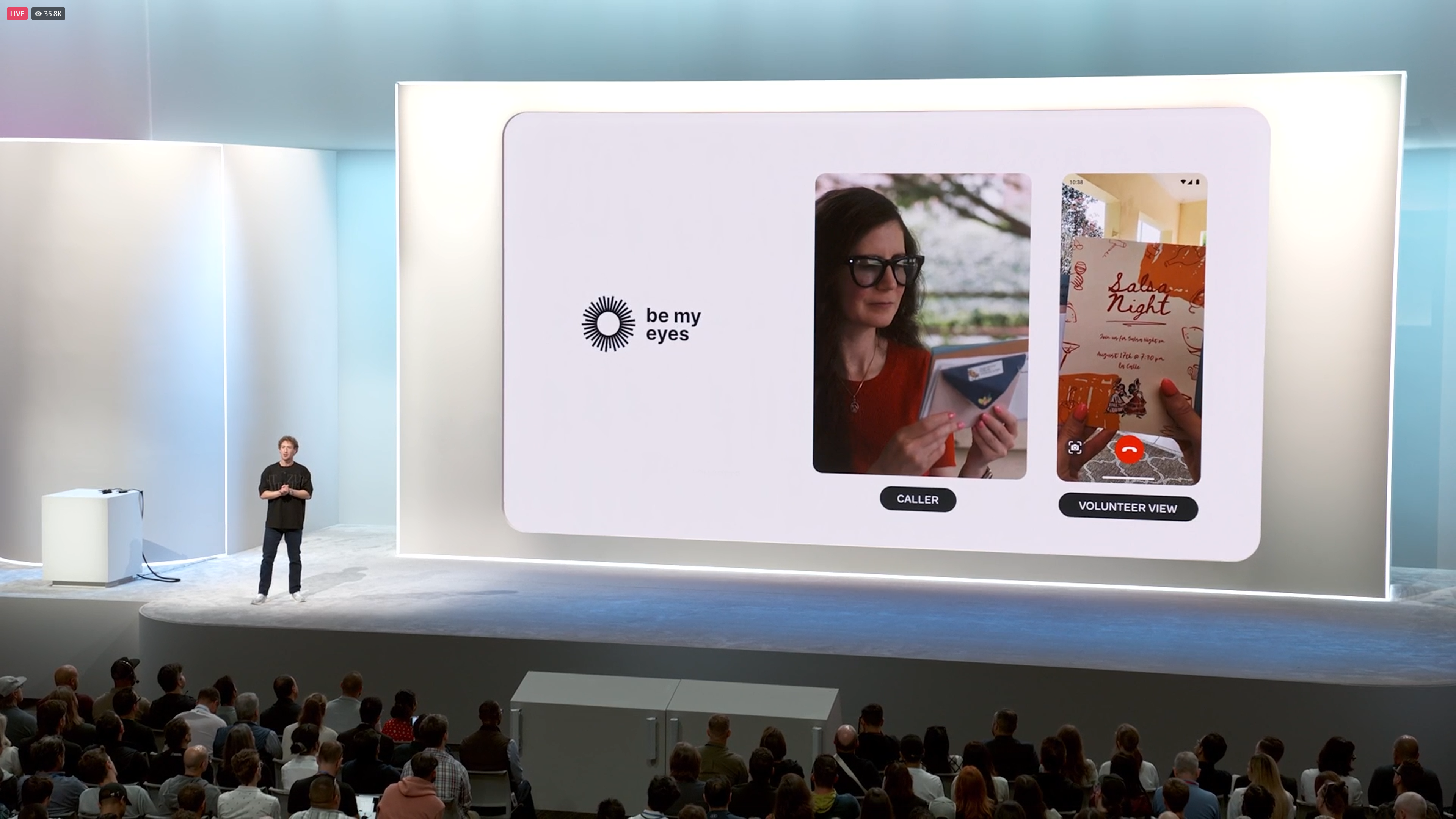

Working with Be My Eyes, the Ray-Bans are able to connect to someone live who can talk you through everything going on in front of you. This will be significant for people with partial blindness.

OMG THAT CLEAR DESIGN

I already have a pair...but my God Meta's making a good case for me buying another pair!

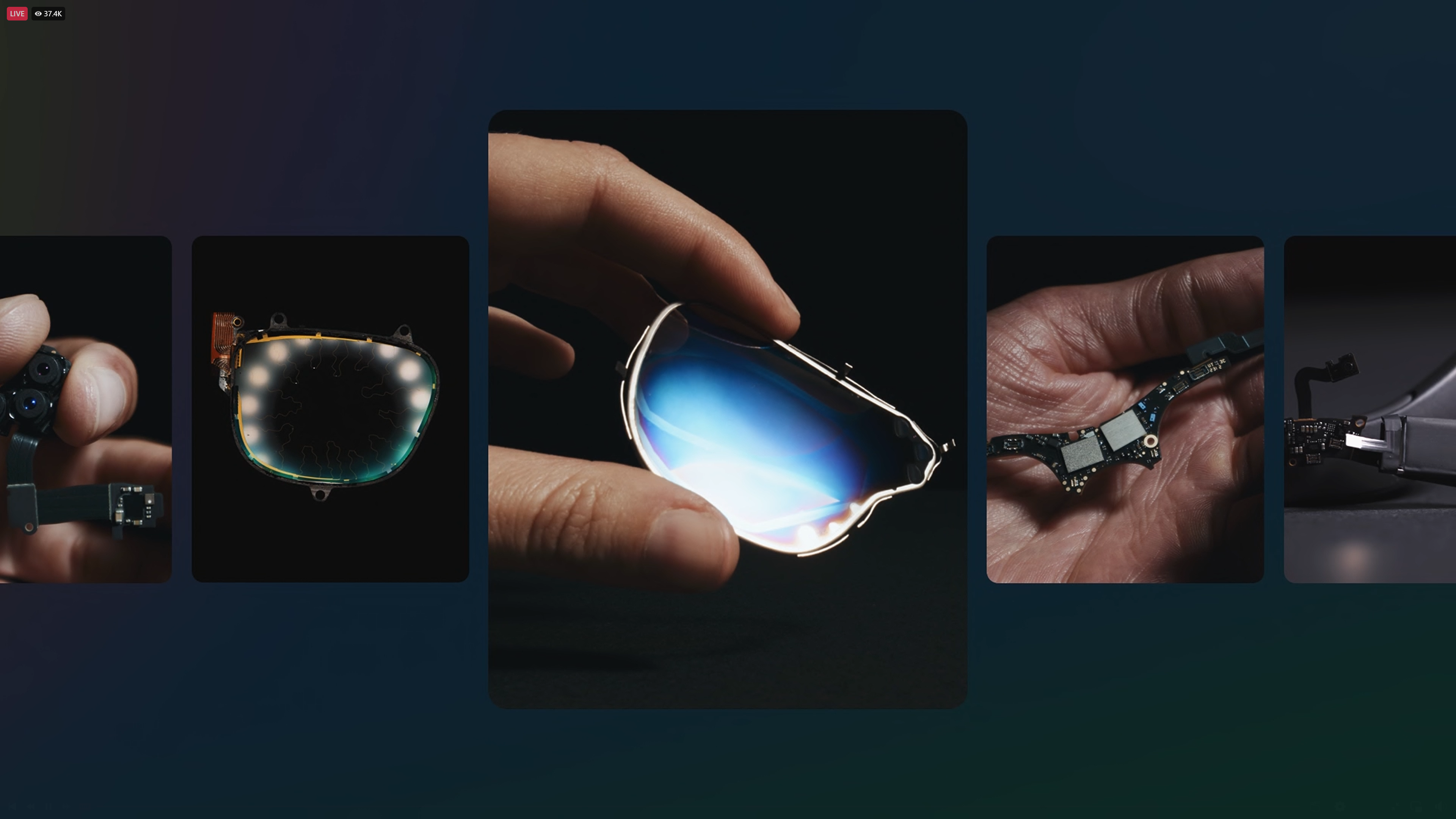

Here comes Orion!

Currently being carted out in a handcuffed case for the drama.

"This is the physical world with holograms laid over them." This is actually insane.

To make this display, there are projectors in the arms of the glasses, projecting content onto the nano-printed lenses — making them able to project images not just in a 2D space, but also in 3D.

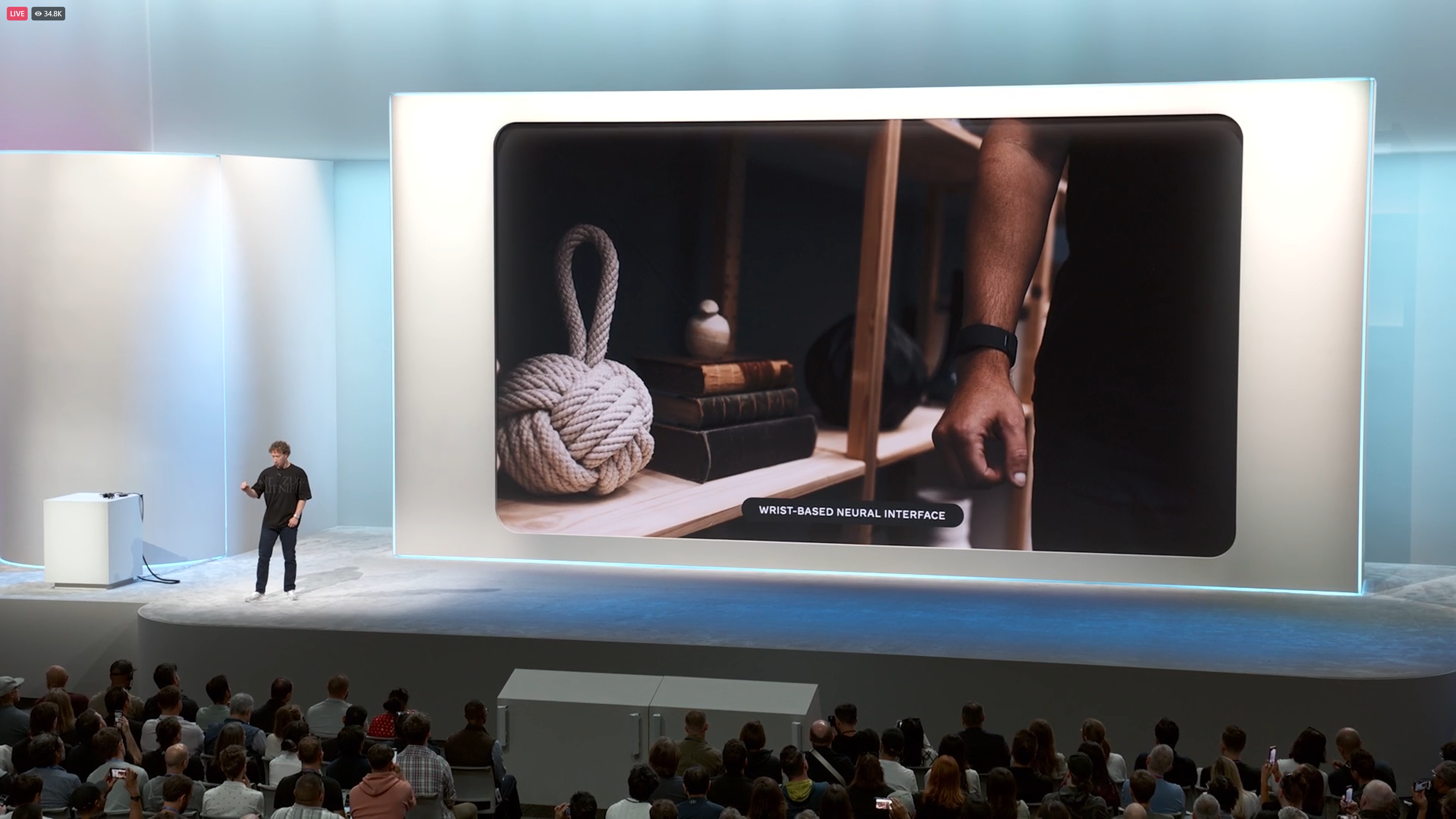

Wait...did he just say neural interface?

Yep, he certainly did! A wrist-based Neural Interface. The wrist controller understands your gestures to interact with the device. So it's not actually plugged into your brain (don't worry), but rather it's gesture-based, reading signals through your wrist muscles.

And yes, everyone is blown away by it. Don't expect to see it in consumers' hands any time soon. Orion will start as a dev kit, mostly used internally, but a handful of partners will get their hands on one too.

"The next version will be our first consumer full holographic AR glasses."

And now for the summary

- Meta Quest 3S: $299

- Meta AI voice

- Llama 3.2

- Ray-Ban Meta with new styles and AI upgrades

- Orion: the full holographic AR glasses

This has been a significant one for Meta to say the least!

And that's a wrap!

Keep it locked on our live blog and across Tom's Guide for all our analysis following the event!

A visual summary

If you're a visual learner, our TikTok rundown embed above offers all the critical information in a bite-sized video.