Look out, Photoshop: Apple just released a new image-editing AI model

Apple's not snoozing on the AI arms race

Apple has been working with UC Santa Barbara researchers to develop an AI model capable of editing images based on your instructions, and the result is now available for anyone to try out as open-source software.

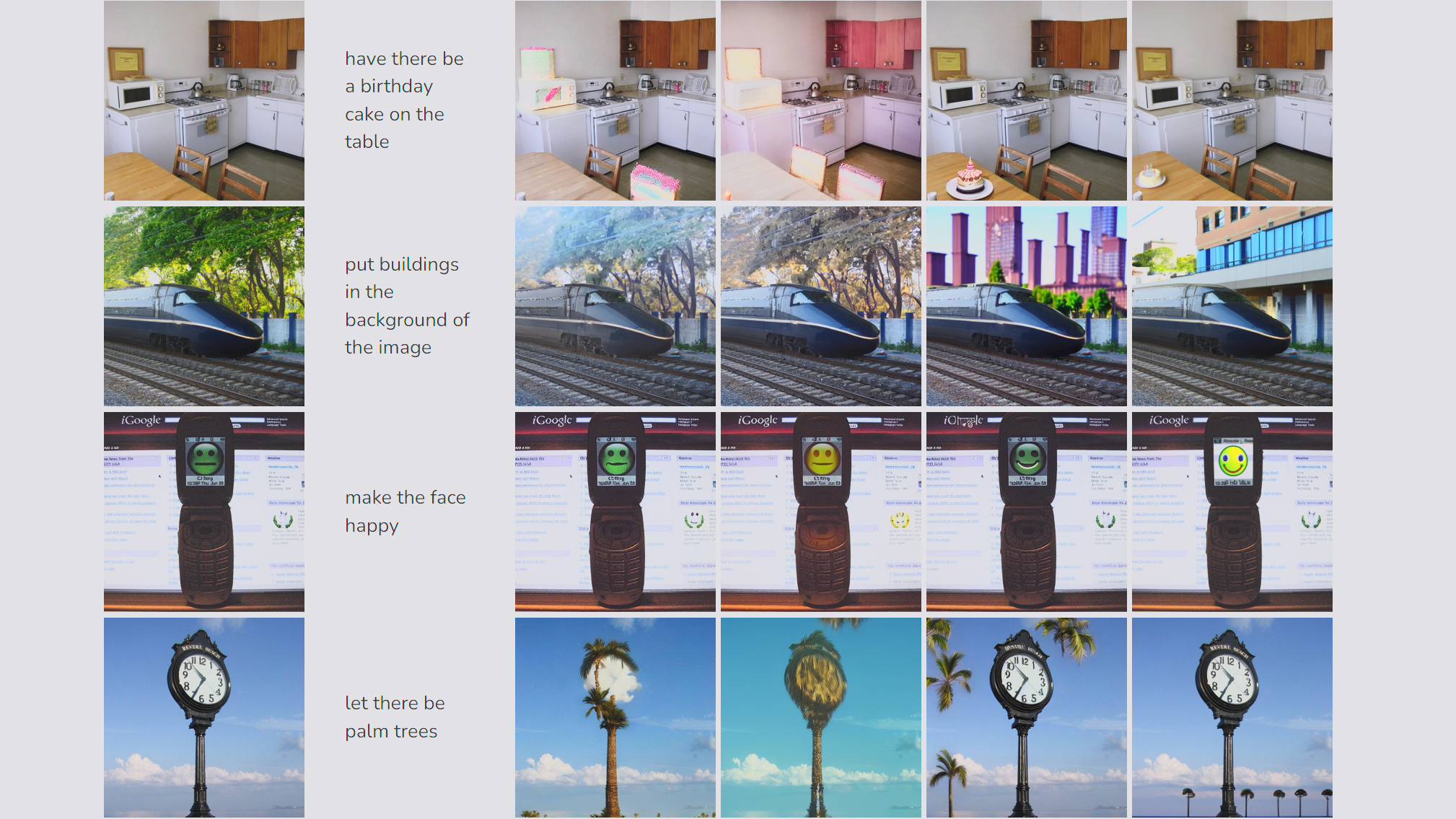

This new AI model uses multimodal large language models (aka MLLMs) to translate requests written in natural language (like "make the frame red") into actionable instructions, and then acts on those instructions to generate and modify images. The new MLLM-Guided Image Editing model (or MGIE for short) is open-source software available for download via GitHub. It's also available as a web demo on the Hugging Face Spaces platform that you can check out yourself.

That demo allows you to generate an image via text prompt or upload one yourself, then ask the model to make changes to it using natural language. The MGIE model then generates the edited image and presents it to you for further edits.

This could be a big deal, not just because Apple's involved, but because this new AI model could prove more capable and effective than existing AI image editors.

That's potentially huge because in Silicon Valley the race is on to build better photo and video editors using AI tools, with titans like Adobe putting generative AI into Photoshop and Meta confirming AI video and image editing are coming to Instagram and Facebook.

Apple has kept a relatively low profile in the AI arms race thus far, but the company is reportedly ramping up AI acquisitions and recruitment, and it teased big AI plans ahead of the Vision Pro launch. So there's a good chance you'll hear more about AI out of Cupertino in 2024, particularly when it's time to preview iOS 18 at this year's Worldwide Developers Conference.

Outlook

Apple researchers and their UCSB colleagues teaming up to release this MGIE model under an open-source license is a significant step forward in AI research, but it's not yet clear whether this advancement will have any impact on Apple products in 2024.

I say that because the MGIE model appears to have been developed using DeepSpeed, a suite of libraries and tools released by Microsoft to help developers train and optimize AI models. DeepSpeed doesn't play well with Apple silicon, so it seems unlikely the MGIE model as it exists now could run locally on Apple hardware.

But this research does hint at where Cupertino's AI research is headed. Apple doesn't publicly crow about AI the way companies like Google and Microsoft do, but the MacBook maker has been quietly building technologies we now lump under the "AI" umbrella into its devices for years. The "Neural Engine" built into every slice of Apple silicon is optimized for AI work like blurring video or editing images, and Apple has been using it to help you auto-enhance your photos with one tap going back at least as far as the iPhone 11.

So while you likely won't see Apple's upgraded image-editing AI tool launching as a standalone app on the App Store anytime soon, I expect you will see the fruits of this research cropping up as upgrades to the built-in image-editing tools of the best iPhones, the best iPads and the best MacBooks in the years ahead.

Alex Wawro is a lifelong tech and games enthusiast with more than a decade of experience covering both for outlets like Game Developer, Black Hat, and PC World magazine. A longtime PC builder, he currently serves as a senior editor at Tom's Guide covering all things computing, from laptops and desktops to keyboards and mice.