Google I/O 2025 LIVE — all the details about Android XR smart glasses, AI Mode, Veo 3, Gemini, Google Beam and more

Google's annual conference goes all in on AI

With a running time of 2 hours, Google I/O 2025 leaned heavily into Gemini and new models that make the assistant work in more places than ever before. Despite focusing most of the keynote on Gemini, Google saved its most ambitious and anticipated announcement for the end: its big Android XR smart glasses reveal.

Shockingly, very little time was spent on Android 16. Most of Google's Android 16 news, like the redesigned Material 3 Expressive interface, was announced during the Android Show livestream last week, which explains why Google I/O 2025 was such an AI-heavy showcase.

That's because Google carved out most of the keynote to dive deeper into Gemini, its new models, and integrations with other Google services. There's clearly a lot to unpack, so here are all the biggest Google I/O 2025 announcements.

Google I/O 2025: All the biggest announcements

Here's a quick summary of what Google announced during its keynote

- Android XR smart glasses: Not only will Android XR be available on headsets for that VR-like experience, but Google's also rolling out its new platform to smart glasses. A working prototype ran impressive demos showing how deeply Gemini is integrated into the platform, and Google also announced the partners it's working with to make these Android XR smart glasses.

- Project Astra upgrades: We got a deeper look at how Project Astra uses Gemini with a camera to help solve problems. Most notably, a video showed a person fixing their bike with Project Astra's help, including its new ability to apparently make phone calls on the user's behalf to inquire about a part.

- AI Mode comes to search: Google Search results have been amplified by AI Overviews over the past year, and this year Google is introducing AI Mode in Search. One really cool AI Mode feature is agentic online shopping, which can create AI-generated images of you trying on clothes you might buy.

- Google Beam adds depth to video chat: Google introduced Project Starline at Google I/O 2021, but the idea is much more fleshed out as Google Beam. It uses AI, 3D imaging, and other equipment to project you in 3D, with a sense of depth and presence.

- Veo 3 and Flow redefine filmmaking: Veo 3 is the company's latest video generation model, with more realism than ever before. It can now generate sound effects, background sounds, and voices. Meanwhile, Flow is Google's new AI filmmaking tool that lets you create and stitch together entire scenes, much like a movie director editing a film.

- Google and Xreal announce Android XR partnership: Aside from announcing that the Project Moohan headset is coming out later this year, Google will also be partnering with Xreal for a pair of Android XR glasses, dubbed Project Aura.

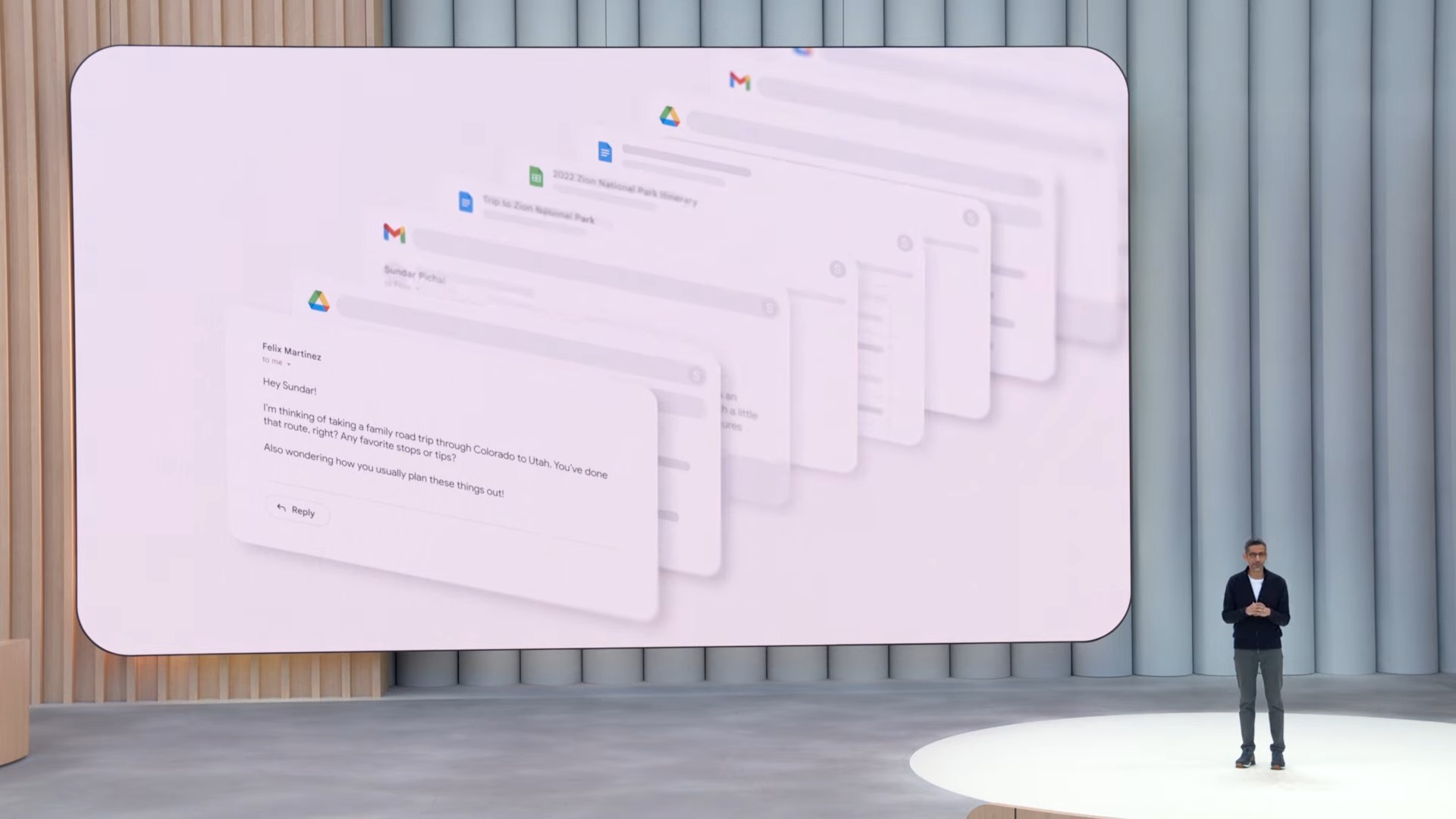

- More Gemini integration with Gmail: Gemini already has a foothold in Gmail, but now it's planting itself deeper with new personalized features. It'll deliver personalized smart replies based on how you write, and it can search your other emails for better contextual responses.

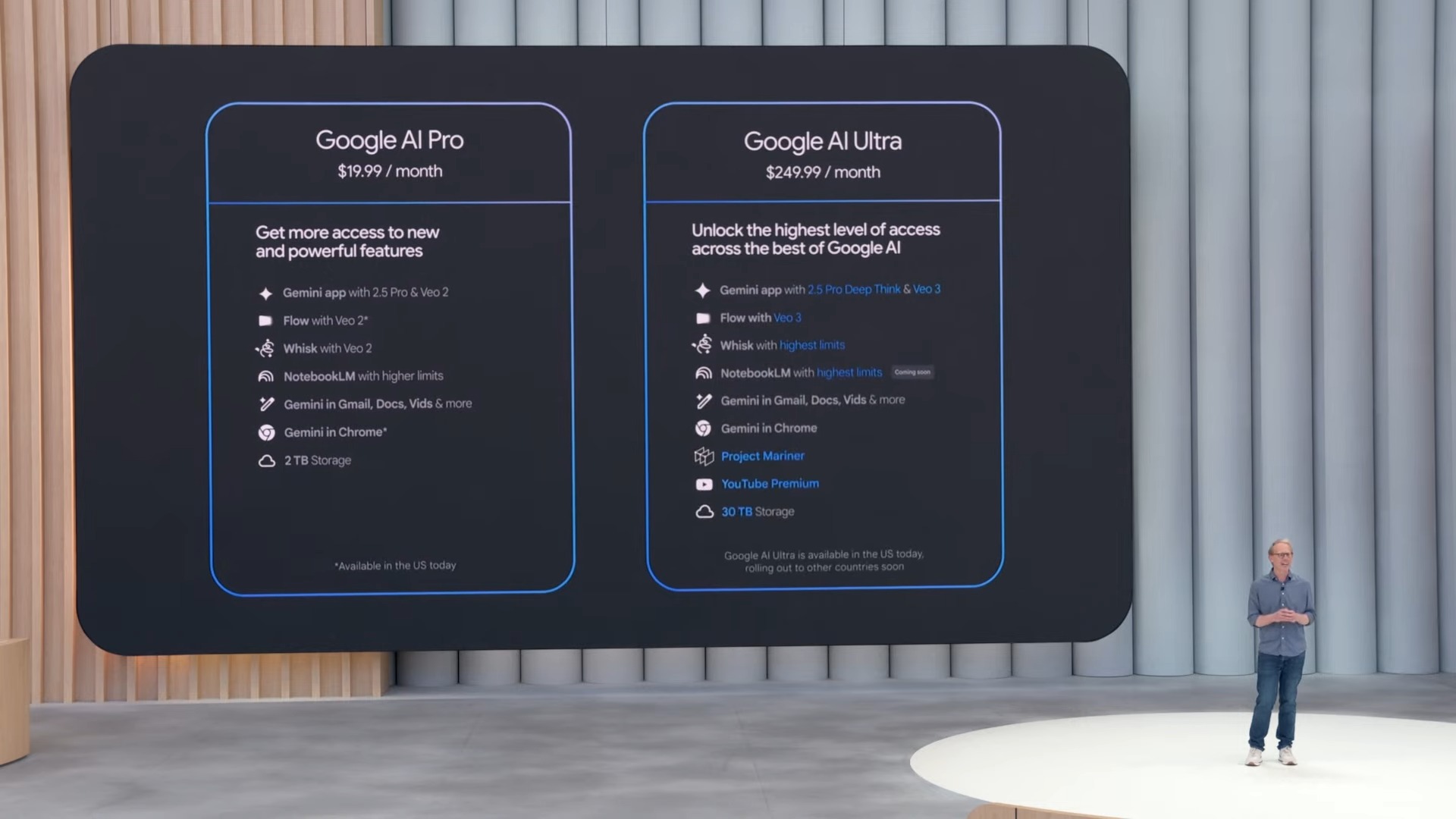

- New Gemini subscriptions revealed: Google went hard into Gemini and all the new stuff it can do. However, you'll need a paid Gemini subscription to access many of these features. The top tier, Google AI Ultra, costs $249.99/month.

Google I/O 2025: How to rewatch

The Google I/O keynote started at 1 p.m. ET / 10 a.m. PT / 6 p.m. BST on Tuesday. You can rewatch the keynote from the Google I/O website or from a YouTube live stream that we've embedded here.

In addition to the regular keynote, a developer keynote follows at 4:30 p.m. ET / 1:30 p.m. PT / 9:30 p.m. BST that will also stream on the I/O website. This figures to be a deeper dive into the main topics from the first keynote, plus more developer-centric news.

Project Astra making phone calls on your behalf is a big deal

One of the more intriguing announcements during Google I/O 2025 was the set of upgrades given to Project Astra. The video Google played showed how you could use your phone to interact with Gemini to help fix a broken bike.

Not only does Project Astra lean on a phone’s camera to see what you need help with, but it can apparently also make phone calls on your behalf, somewhat like how Google Assistant can call a restaurant to make a reservation. However, this integration goes a step further because it can call multiple locations and relay the information back to you when it’s ready. This seems like a big deal.

How much will these Android XR smart glasses cost?

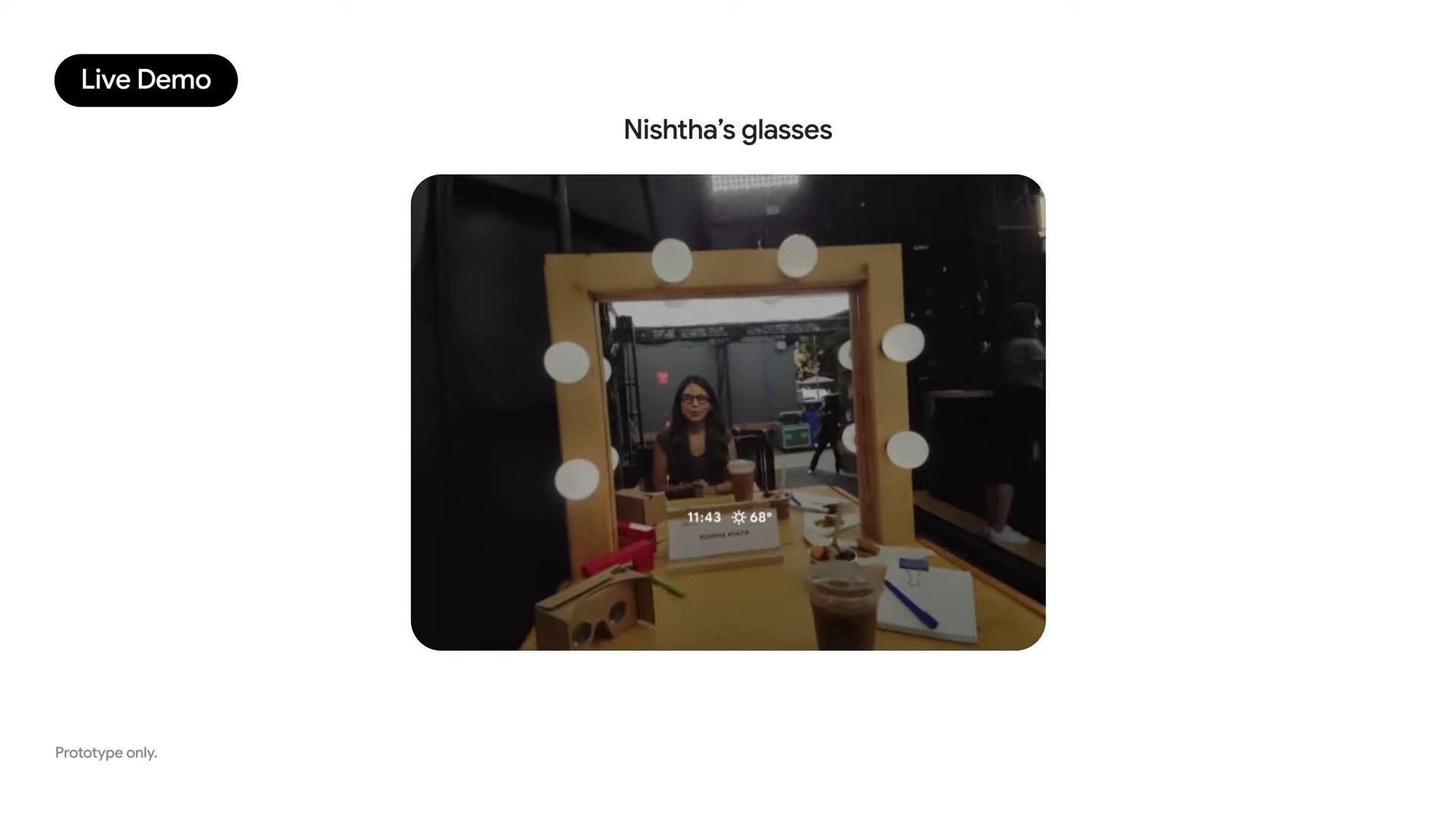

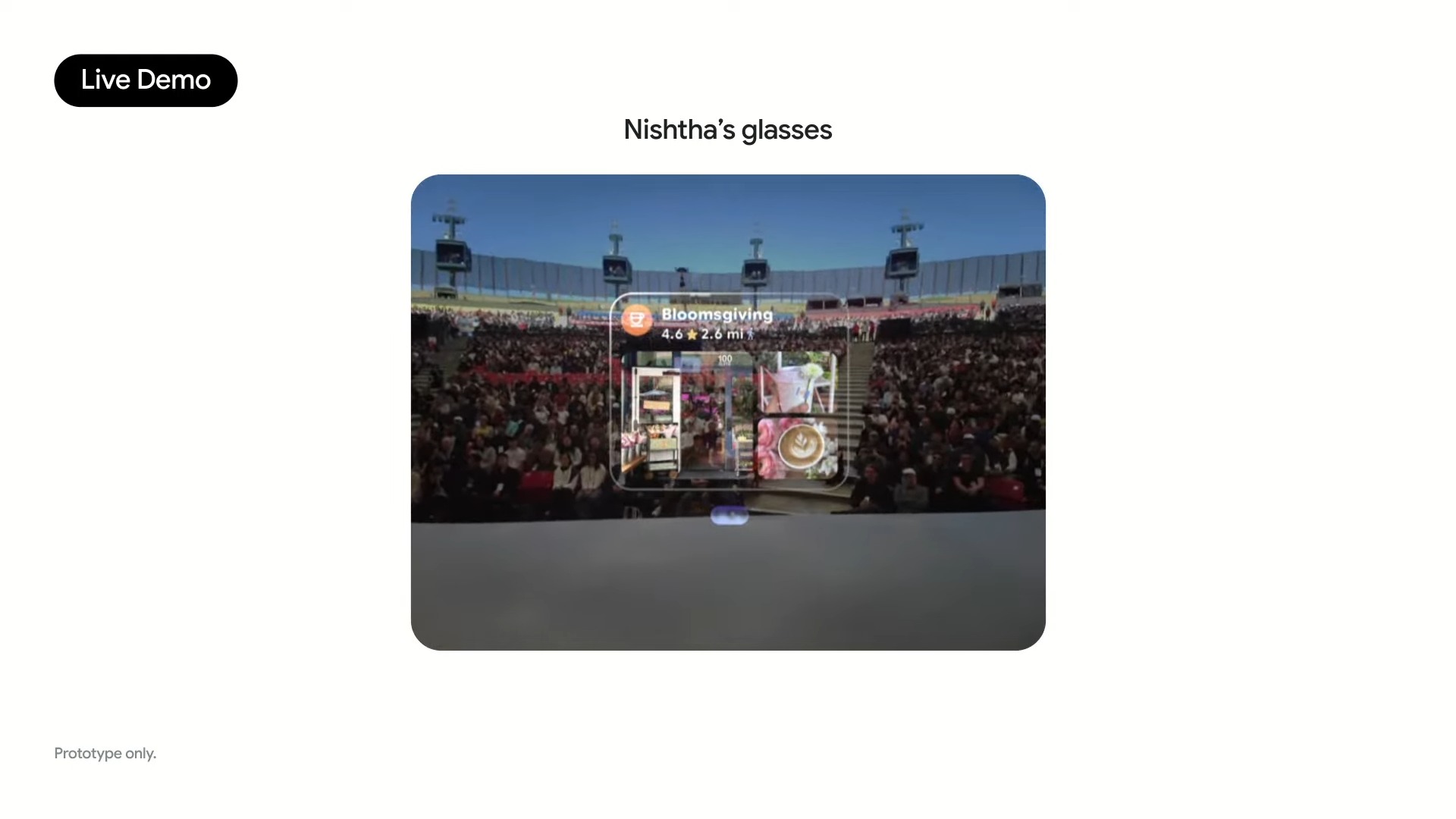

Saving the best for the end, Google revealed its Android XR smart glasses with an impressive demo that shows off its capabilities. The design of the smart glasses equally deserves recognition, but the tease left us wanting to learn more about them.

Most importantly, there was no mention of their price, which we imagine would easily exceed the cost of the Ray-Ban Meta, largely because they appear to include a display embedded in the lenses. That alone should drive the cost much higher, but how much would you be willing to shell out for AI-powered glasses?

Android XR smart glasses demo shows off their practical use


Without question, the most impressive part of Google I/O 2025 was the live demo of its Android XR smart glasses. Even though there was no mention of who's making them, the demo does show off the practicality of using Gemini for a number of things. From getting directions to translating a conversation in real time, it makes us really excited for them.

That's a wrap — Google Gemini stole the show, but Android XR has us excited

After 2 hours, Google I/O 2025 has finally come to a close. There have been a lot of announcements about its AI ambitions, including new Gemini models, but the biggest reveal has to be the Android XR smart glasses. Stay tuned for our in-depth reporting on the biggest news.

Android XR smart glasses reveal leaves more to be desired

Google took the wraps off its Android XR-powered smart glasses, which, we have to admit, look like an ordinary pair of glasses. It even showed off some practical demos to the live audience, but it was a short reveal that leaves more to be desired.

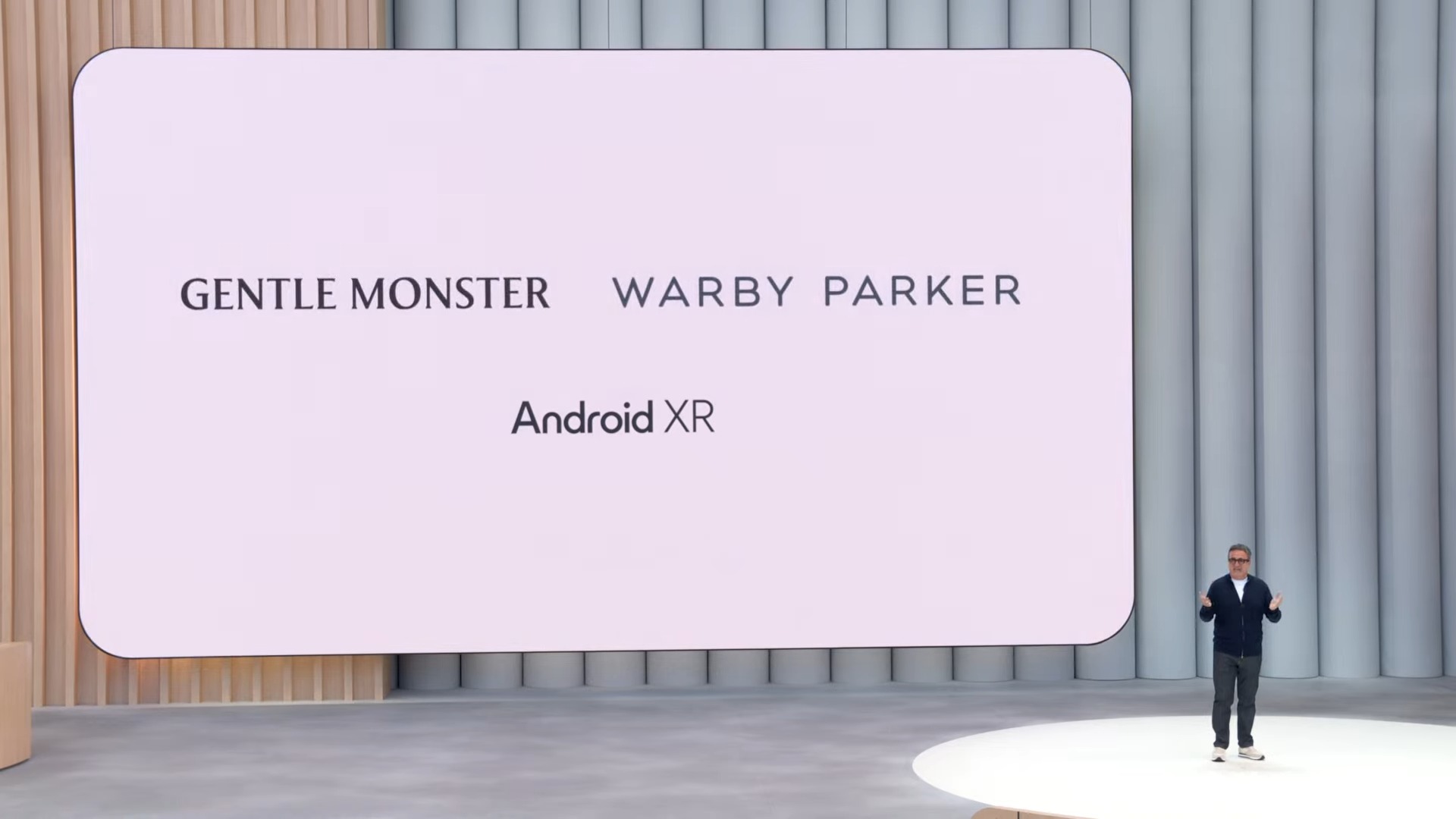

Google partners with Gentle Monster and Warby Parker for Android XR glasses

Google is creating the reference hardware platform for Android XR smart glasses. Gentle Monster and Warby Parker will be the first eyewear partners to create glasses with Android XR.

Android XR demo wows the crowd, looks like a normal pair of glasses

There's been a lot of anticipation around Google's Android XR-powered smart glasses, and the company didn't disappoint with its onstage demo. From asking for a shop recommendation and getting directions to that location to a real-time demo of live translation between languages, the demo showed off the many use cases for Android XR.

Android XR demo shows its deep integration with Gemini

Google finally showed off a demo of its Android XR glasses, which appear to lean heavily on Gemini to do an assortment of things.

Project Moohan headset finally gets a release timeframe

Project Moohan has been teased already, but now we have confirmation that it's coming out later this year. However, no pricing has been revealed.

Android XR is being built with headsets and glasses in mind

After nearly 1.5 hours of talking about AI and Gemini, Google is pivoting to Android XR and how it will span headsets and glasses.

Google details new Gemini subscription costs

To use many of the new Gemini features introduced at Google I/O 2025, you'll need one of its new subscription tiers below:

Google AI Pro - $19.99/month

Google AI Ultra - $249.99/month with the highest level of access

Flow puts you in the director, editor, and videographer roles, all at once

Flow is a new AI filmmaking tool that works like an advanced video creator and editor: you choose the scenes you want and add music. It's similar to traditional video editing software, except that the clips, transitions, sound effects, and music are all generated with AI.

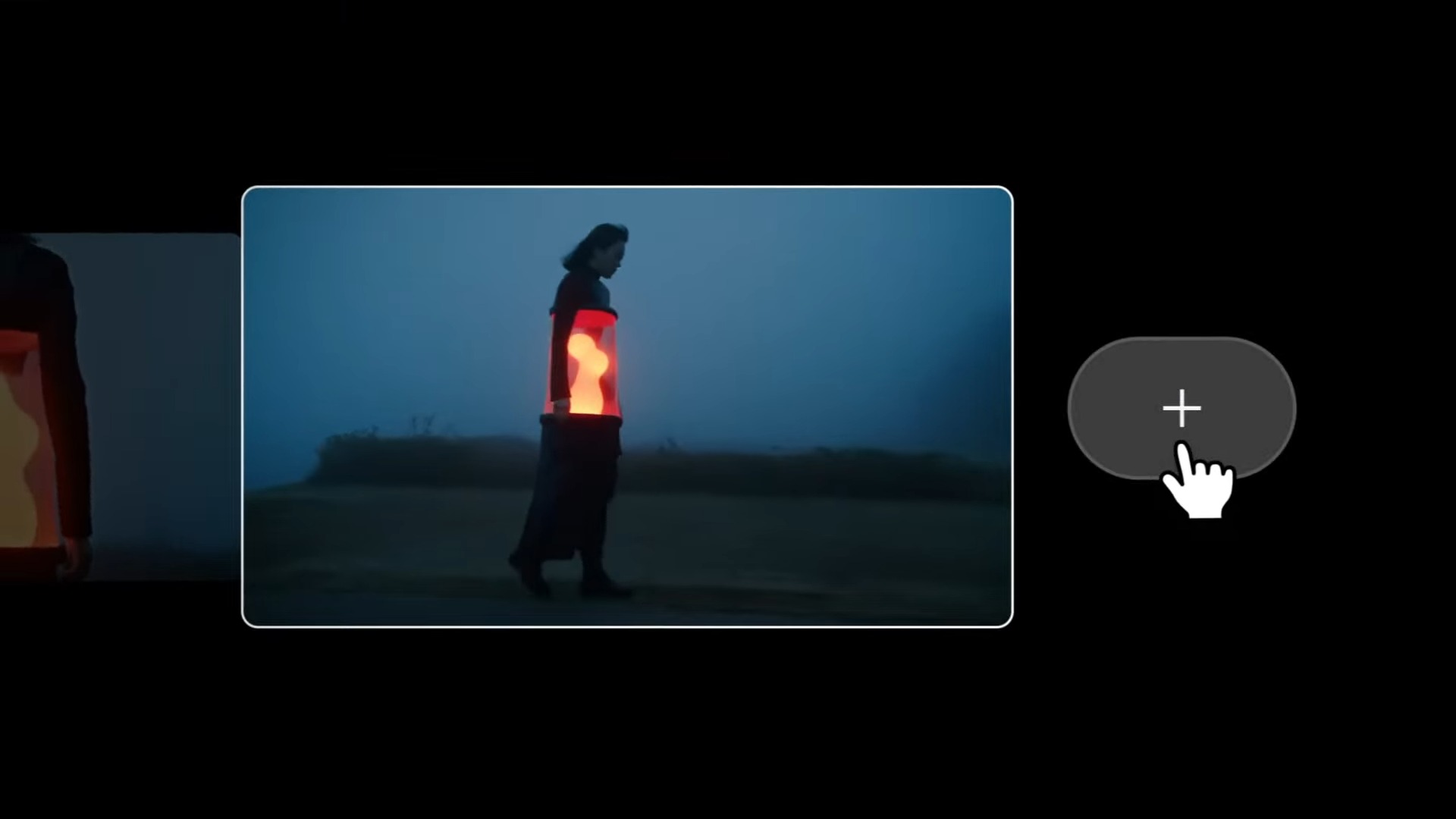

Darren Aronofsky short clip shows off the power of Veo

Google showed off a short Darren Aronofsky clip that demonstrates Veo's ability to create hyperrealistic video. The clip looks believable, complete with real people, titles, and more.

Veo 3 video generator can now create sounds and voices in video

Veo 3 comes with native audio generation, adding sound effects, background sounds, and voices to the videos it creates. Photorealistic motion combined with sound makes these videos feel more immersive.

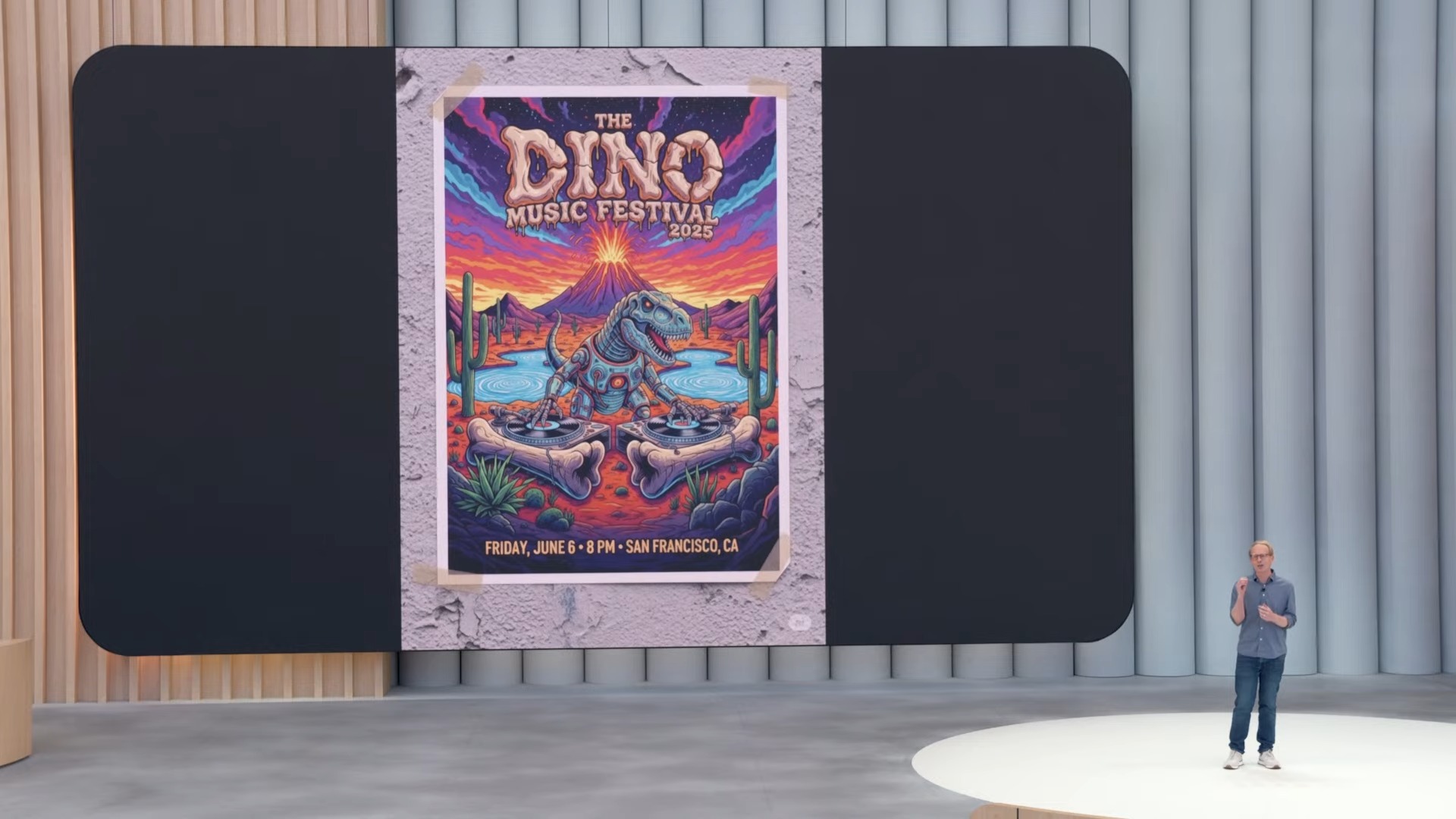

Imagen 4-generated images are more realistic and detailed, with better typography

Imagen 4 is coming to the Gemini app with richer images and fine-grained detail. Its images appear more realistic than ever before, and it handles text and typography better too.

Canvas is coming to Gemini for co-creation

Canvas is a co-creation tool in Gemini that helps you create infographics, podcasts, and more.

Gemini is becoming a more proactive assistant through Personal Context

Personal Context connects your search history with Gemini to craft personalized responses. Gemini can also remind you about an upcoming exam, again by looking at your history.

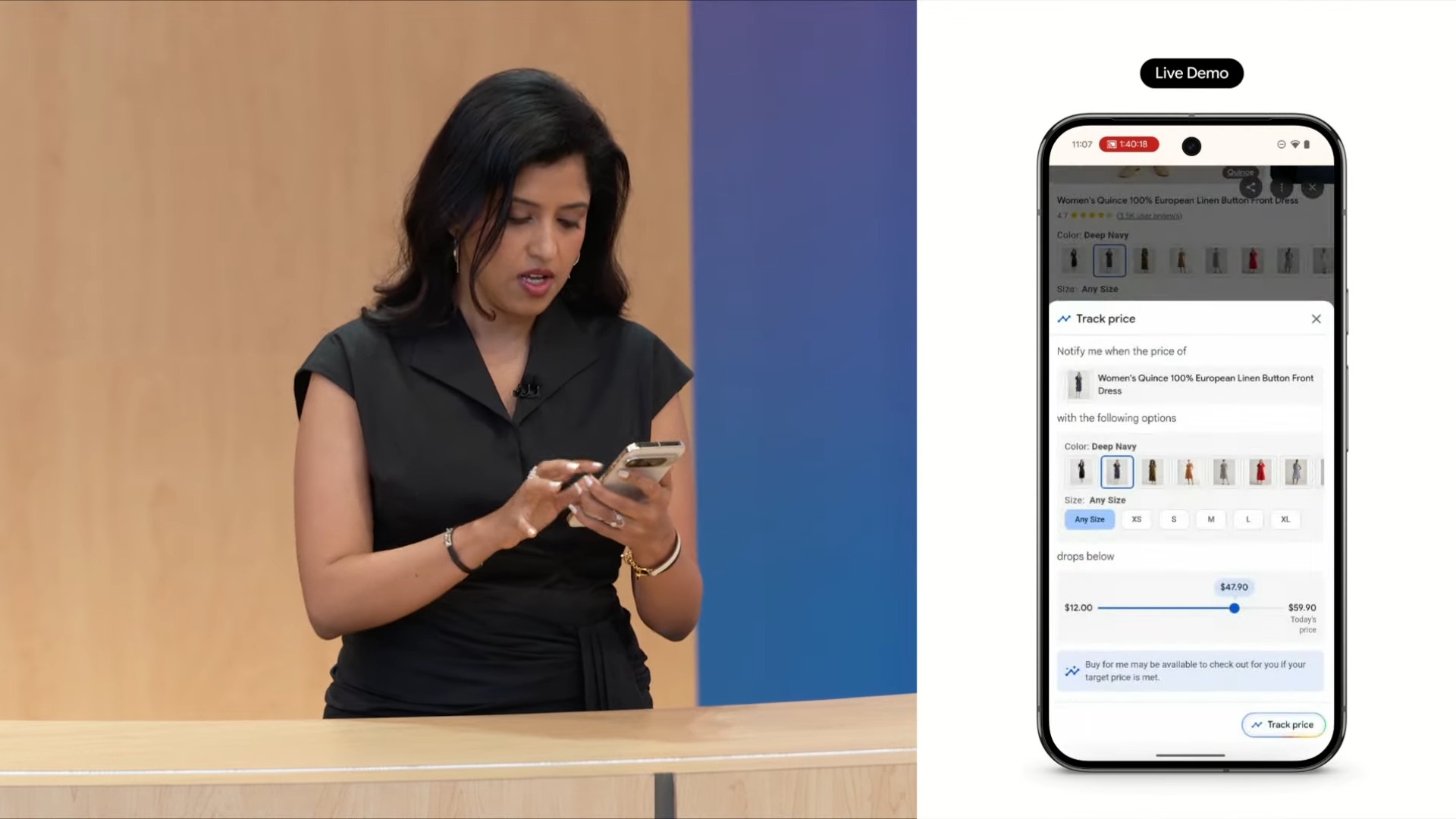

Wear it before you buy it — Google's new AI shopping model makes it easy

A new try-on image generation model in Search creates images of you wearing the clothes you're interested in, using diffusion models that can tailor the image to different fabrics.

This new tool will also keep watch on prices across websites and send you a notification when a price drops.

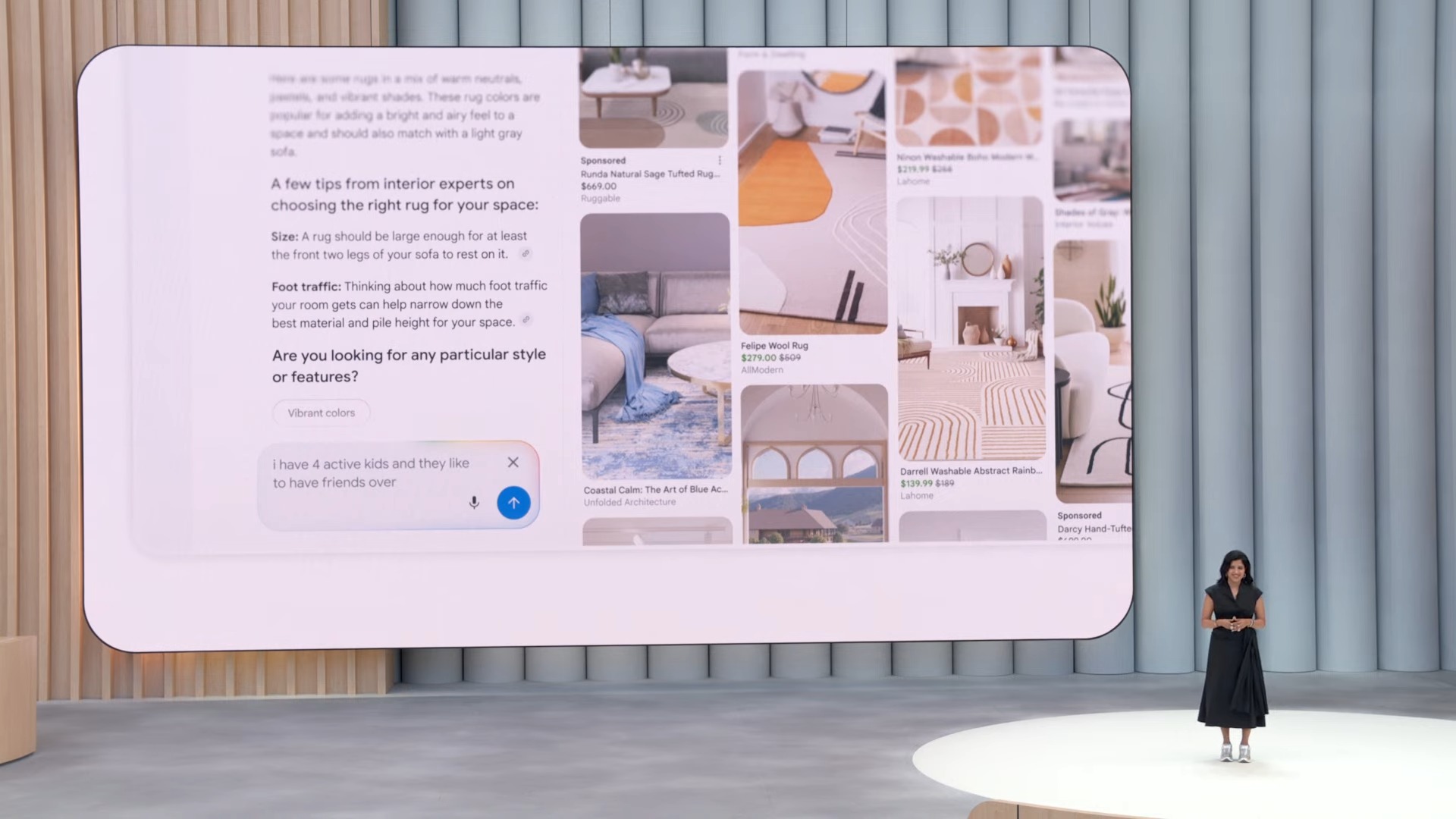

Shopping Graph could be the ultimate shopping assistant

Search will deliver personalized results, complete with image galleries. It can also recommend specific items based on your preferences and needs. Google's demo shows how this can be used to find a rug that can specifically handle kids at home, rather than a simple decorative one.

Search Live uses your camera to answer questions

Search Live combines Project Astra with AI Mode, so you can point your phone's camera at something to get meaningful search results. It's a new way to search with the camera rather than typing everything out.

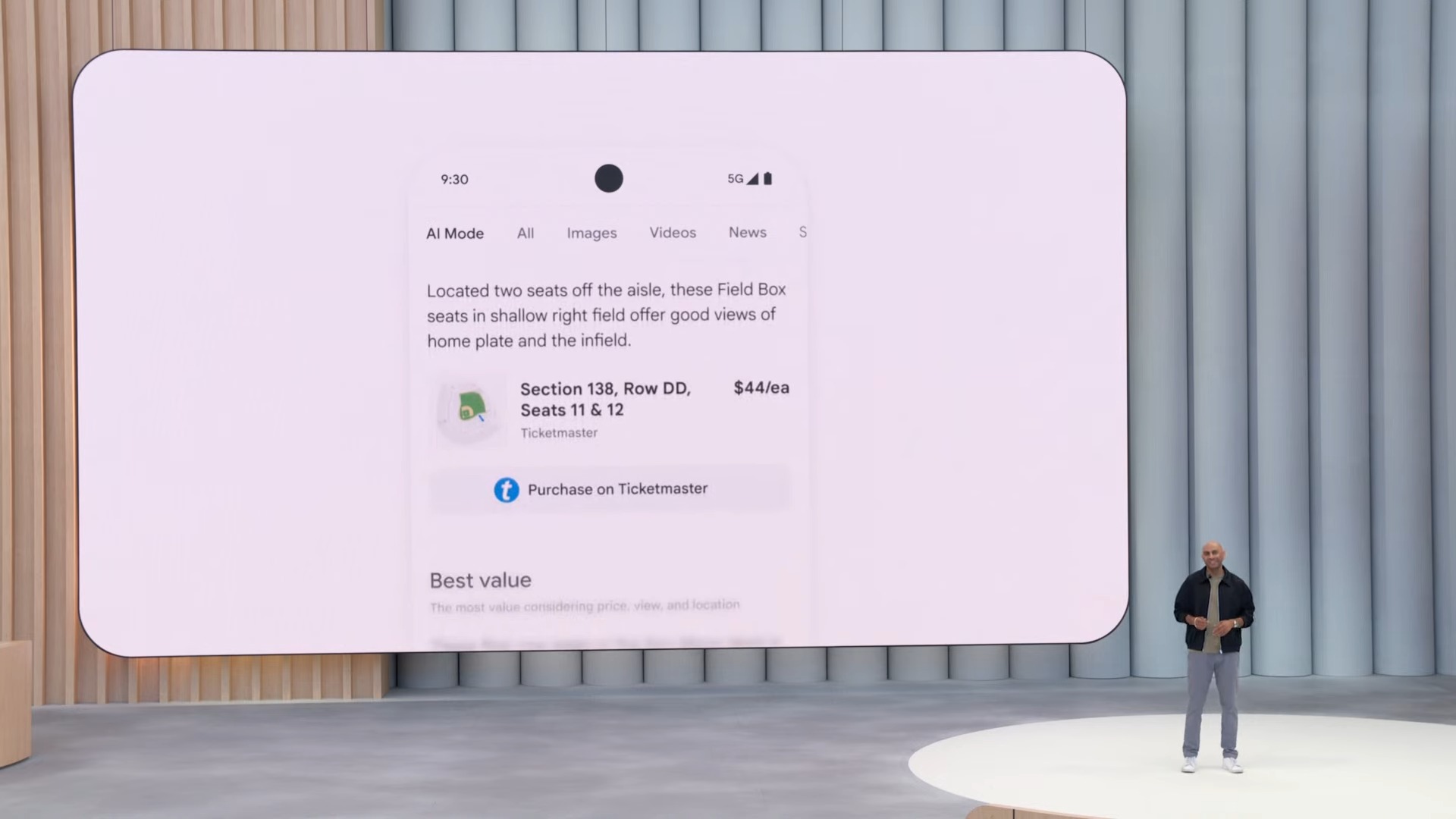

AI Mode can help search for tickets, reservations, and more

Project Mariner's capabilities are coming to AI Mode this summer, such as searching for baseball game tickets, complete with filling out the forms to buy them.

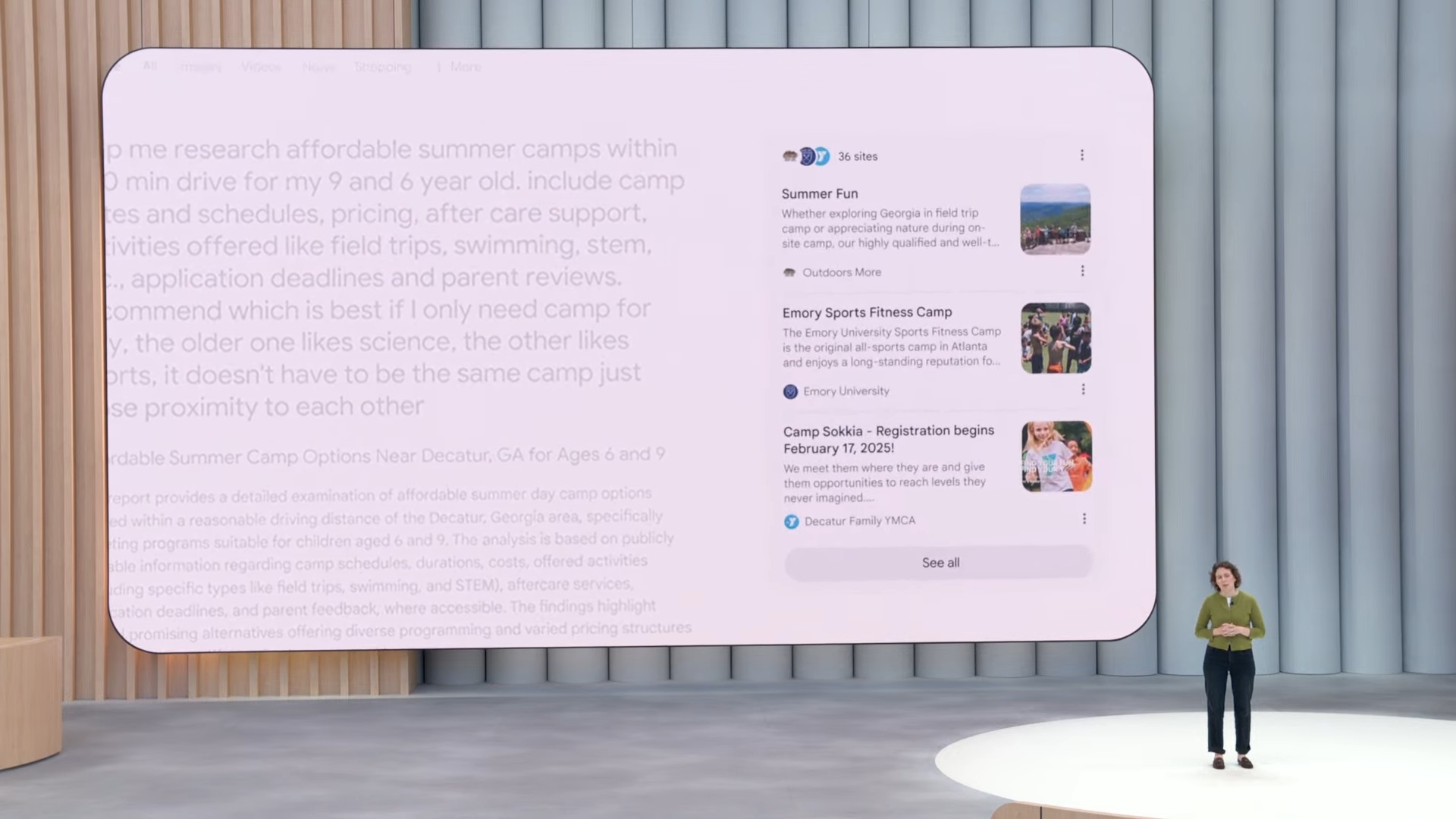

Deep Search will deliver more relevant content with Search

Deep Research is coming to AI Mode in a form Google is calling Deep Search. It will better curate research topics with well-organized search results and highly relevant content.

AI Mode will help to answer Google Search requests

Gemini models are being integrated into Google Search through AI Mode, a powerful new AI search experience that helps answer questions. AI Mode appears as a new tab within Search, and its capabilities will also be included in AI Overviews.

Project Astra helps with accessibility

Google played a video showing Astra's tech helping someone with limited vision navigate the world, such as by reading signs aloud and much more.

Project Astra can help you fix your bike and make phone calls for you

Another cool video demo showed how Project Astra can do research for you, like figuring out how to do some bike repairs. Gemini does the research and guides you with instructions, and it can apparently even make phone calls to bike shops about specific parts you need.

Using Gemini Live camera in an unconventional manner


Google showed off a rather unconventional demo of Gemini Live with camera access. It's honestly funny, so take a look.

A faster, more efficient AI research model

Gemini Diffusion is a new research model that applies diffusion to text generation. It's much more efficient and faster at answering prompts.

Take sketches and create 3D animations with Gemini 2.5 Pro

Gemini 2.5 Pro can take a sketch you've drawn and code 3D animations into an existing app, updating the app's files to generate the animation in AI Studio.

Native audio output with Gemini Live

Gemini Flash and Pro demos showed how Gemini Live can speak in different tones and languages, even switching back and forth between them, for a more natural conversational tone.

A closer look at what Google Beam can do


Google showed off a deeper demo of Google Beam, its new video conferencing tool that makes it seem like you're talking to someone in person. Check out the video above to see it in action.

Personalized Smart Replies uses Gemini to respond to emails

Personalized smart replies use Gemini to search your past emails and analyze the way you write, so it can draft a reply that sounds like you. It's coming to Gmail for Gemini subscribers this summer.

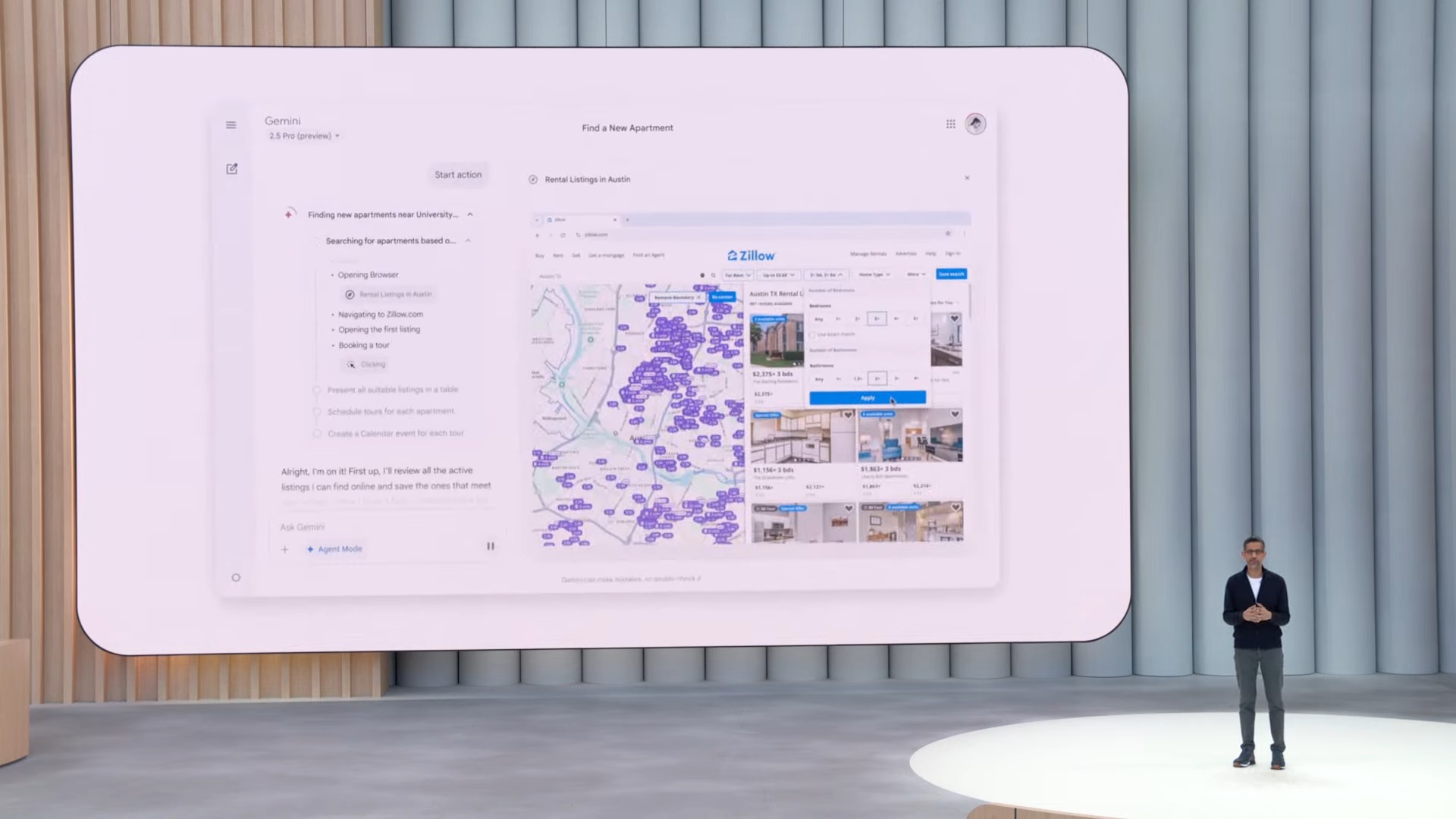

Agent Mode with Gemini is your realtor

Agent Mode is a new feature with Gemini that helps you find apartments and rental listings, doing all the research for you and filtering exactly what you're looking for. It'll continue to search for listings until you're done. An experimental version of this Agent Mode is coming soon.

Gemini Live camera and screen sharing coming to Android and iOS

While Pixel phones and some other Android phones have been able to use the camera with Gemini Live, Google announced that this feature will be coming to all Android phones and iOS.

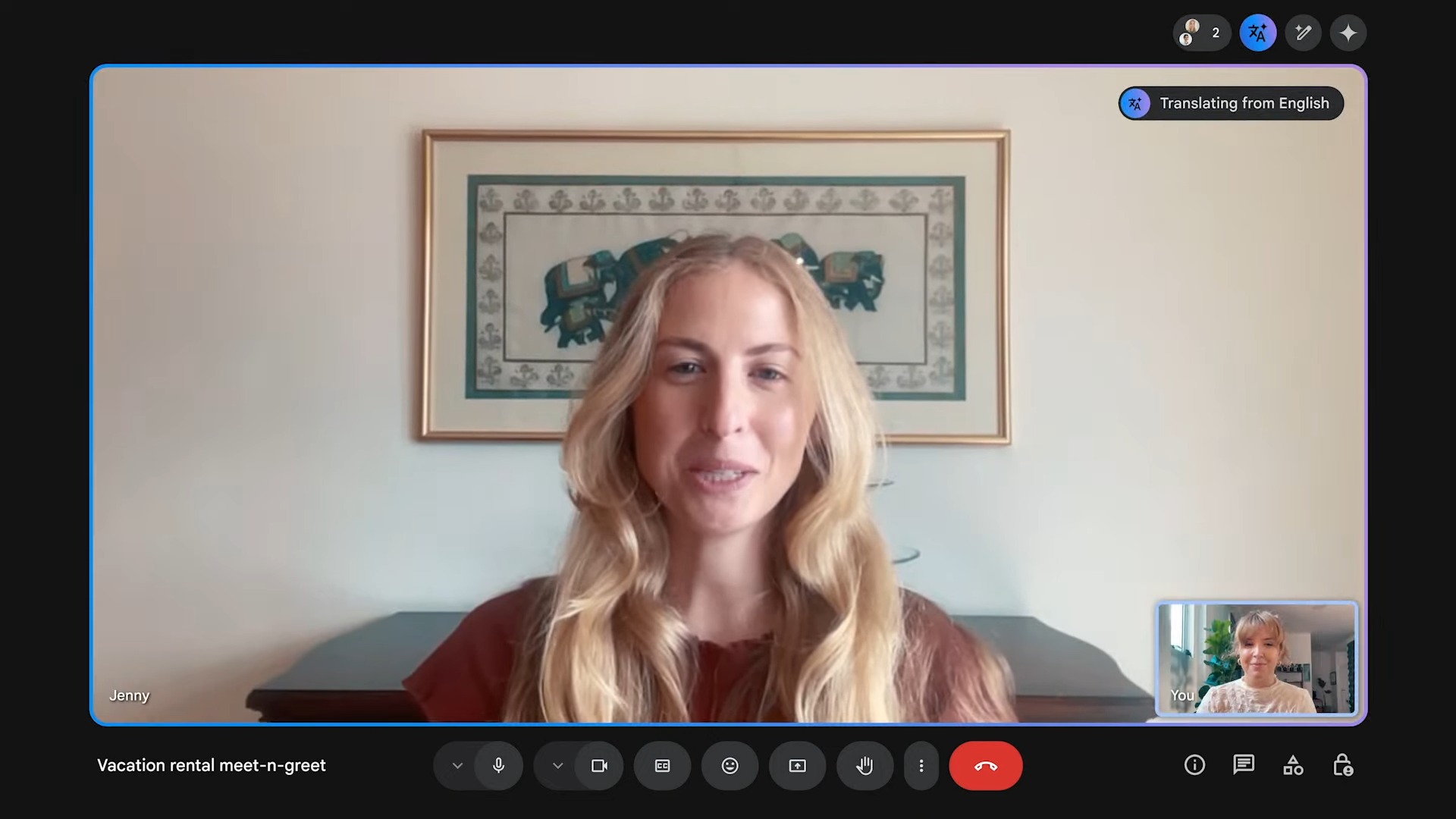

Google Meet gains live translation

Google Meet will be able to translate speech in real time for more natural conversations. A quick onstage demo showed two people communicating despite speaking different languages.

Google Beam is an AI-first video conferencing platform

Project Starline was first introduced in 2021, but now there's Google Beam, which aims to transform video conferencing using an array of cameras. The first Google Beam devices will be coming soon, but no price has been revealed.

More intelligence for everyone, everywhere

More people are using AI, and Google says its models have a solution for everyone. Notably, Google reported a 50x increase in the number of tokens its models are processing.

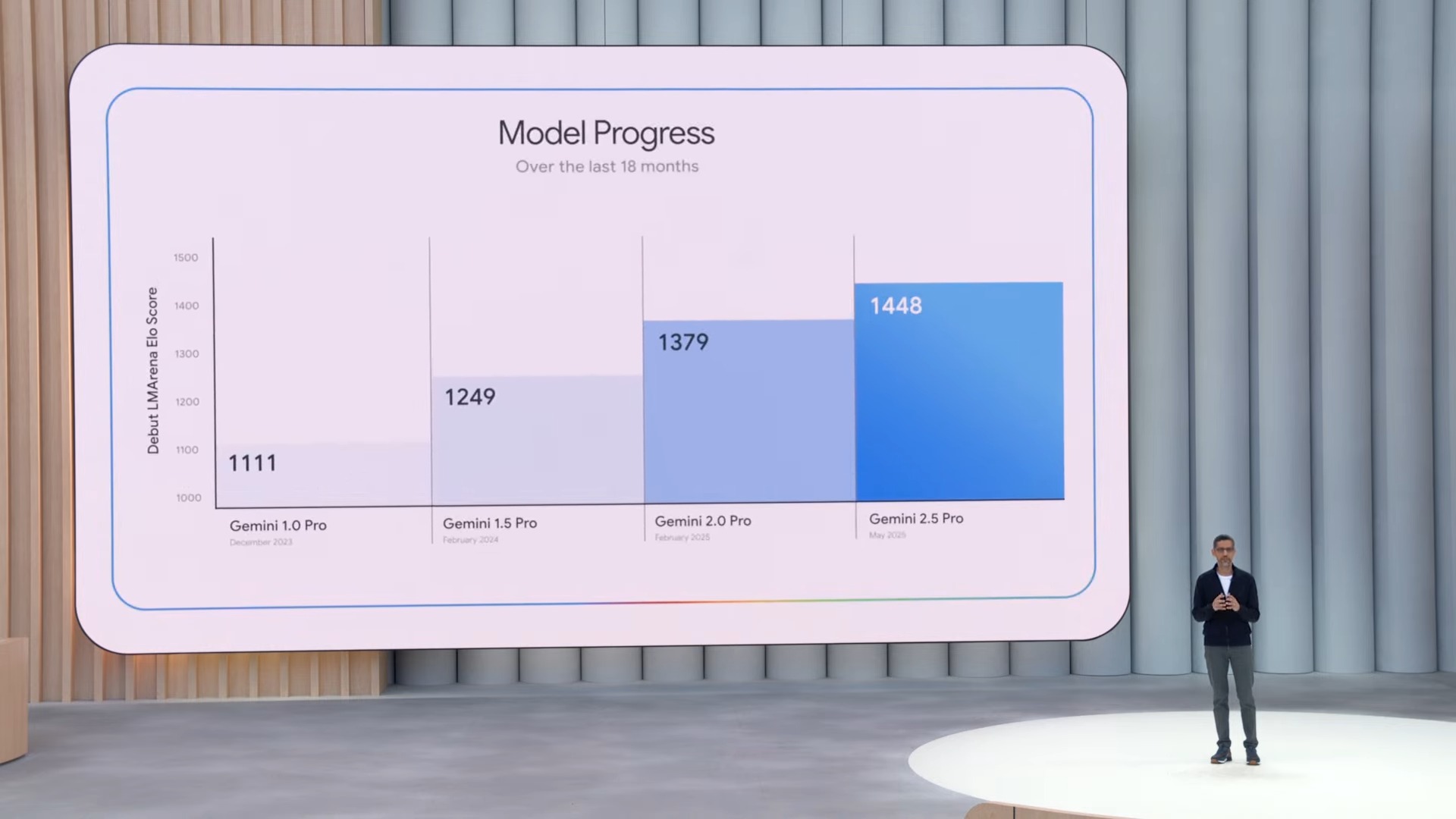

Google Gemini continues to take top honors

Google CEO Sundar Pichai kicked off the keynote by highlighting some of Gemini's achievements.

'You Get What You Give' kicks off I/O with an AI-generated clip

After a quick countdown, the event kicks off with the New Radicals 'You Get What You Give' song accompanied by AI-generated videos.

The Google I/O 2025 keynote has started!

There's excitement all around the Shoreline Amphitheatre as the Google I/O 2025 keynote gets under way. We expect a lot of AI and Android XR announcements, but we also expect to hear a bit more about Android 16. Stay tuned for all the biggest announcements!

Some beats whipped up by Toro y Moi for the I/O crowd


We're nearing the start of the keynote, but the Google I/O 2025 crowd is getting a treat from Toro y Moi, who's warming up the audience by creating some Gemini-inspired beats. Take a listen!

Google could again set the bar for AI in our phones — and Apple better be worried

There's no arguing that Google has consistently set the bar for AI in our phones. From Circle to Search to Gemini Live, the company's AI ambitions have been solid over the course of the last few years.

And with Google I/O 2025, it's yet another opportunity to show the world why Android is the premier platform for AI. Apple definitely needs to be worried, especially given the bumpy rollout of Apple Intelligence.

Google's strategy has been ironclad, as many of the new AI features it announces at its developer conference trickle down to Android phones in a timely fashion.

Will Android XR headsets and glasses replace our phones?

We expect a lot of juicy announcements around AI and Android XR, but the biggest question is where these headsets and smart glasses fit into our lives. More importantly, could they one day replace our smartphones?

It's tough to say, but we could get better insight into this possibility with the Google I/O 2025 keynote. For decades now, the smartphone has been the de facto gadget that people bring with them everywhere.

There are a lot of challenges in making an Android XR-powered headset or smart glasses that could replace phones, but only time will tell.

Treat your AI with the same respect you want to get

We're in our seats at the Shoreline Amphitheatre waiting for the festivities to begin, which should be about 45 minutes from now. What's interesting is this sneak peek at what appears to be developers using Gemini Advanced to create a camera app.

But rather than simply issuing a command, the prompt adds a courteous 'please.' It's an intriguing small detail, given the recent attention OpenAI has paid to polite language in ChatGPT prompts. Could Google be following suit?

What more can AI do for Pixel phones?

Google has an amazing set of AI features in its Pixel phones. From transcribing and summarizing voice notes, to generating images from scratch with Pixel Studio, to even remembering details with Pixel Screenshots, Google proves why its Pixel phones are the AI phones to beat.

Another unique AI-assisted tool is Call Screen, which got a big upgrade with the release of the Pixel 8 Pro. Not only does it let Google Assistant take phone calls, but it's intelligent enough to suggest contextual responses to send to your caller. No other phone has a feature quite like it, which makes us wonder what else Google could have in store for AI on mobile.

Ray-Ban Meta days could be numbered

The Ray-Ban Meta has been in the spotlight for years now, showing us the practical applications of AI integration, which is probably why it remains a top contender for best smart glasses despite newer models coming out.

However, its days could be numbered if Google does drop a hardware reveal. At last week's Android Show stream, Android chief Sameer Samat revealed a pair of sunglasses. It was a teaser for sure, but we're hopeful that Google will go into deeper detail at Google I/O 2025.

What continues to make the Ray-Ban Meta helpful is that, unlike some other smart glasses, it has a pair of cameras that can do more than just capture memories: it integrates with Meta AI to help users. But if Google's smart glasses end up looking similar, but with more features and a better AI assistant (presumably Gemini), it could spell bad news for the Ray-Ban Meta.

We're on the ground at the Shoreline Amphitheatre!

The Google I/O 2025 keynote is still hours away, but we're on the ground early to see all the buzz happening around the Shoreline Amphitheatre. Just as a reminder, the keynote is slated to kick off at 1 p.m. ET / 10 a.m. PT / 6 p.m. BST.

It's going to be a live show, but you can also watch all of the new announcements through its YouTube live stream.

Is it time for Google Assistant to retire?

With the emergence of Google Gemini over the last year, it raises the question of whether Google Assistant should finally be retired. Conversations through Gemini Live are much more natural, and you can do so much with Gemini on your phone. And with the ability to tap your phone's camera to interact more with Gemini Live, a new level of utility has been unlocked that we haven't seen before.

Google Assistant fades further into the background the more Google invests in Gemini. Still, Assistant has deep ties to Google's smart home ecosystem, as it continues to power the company's smart speakers and displays.

Small improvements for Gemini, as well as large ones

It seems that in the near future, Google will offer Gemini users on mobile an easier way to open the app full-screen after opening the assistant's overlay mode, according to developer flags in the Gemini app.

It's nowhere near as flashy as some of the things we expect Google to announce today. But being able to properly read your chat log with Gemini without having to close the overlay and then open the app again is a smart change, if you ask us.

Project Moohan, revisited

Last December, Samsung announced Project Moohan, a virtual reality headset based on the newly unveiled (at the time) Android XR platform. Since then, it’s been a waiting game, as Samsung has slowly teased out more details about its headset plans.

For instance, we know the finished product is coming later this year, and a hands-on by Marques Brownlee from January seemed to suggest that AI is very much integrated into the headset. (That would be in line with last week’s announcement during the Android Show about Gemini finding its way into more devices.)

Does Samsung get some stage time at a Google event to talk about one of its own products? It could happen. But it seems more likely that Project Moohan gets a passing reference, with Google talking up other devices that will benefit from Android XR.

Android 16’s arrival

It feels weird to be headed into a Google I/O with the knowledge that Google’s not going to be spending much time — if any — on Android. But the bulk of the news seems to have been taken care of last week when Google talked up the interface changes coming to Android 16 during its Android Show streaming event. And if that’s not enough for you, the Android 16 beta has already been out in the wild since January, introducing us to such features as live updates, adaptive apps and more.

Really, at this point, we’re just waiting for a release date from Google, and that was offered during last week’s Android Show — at least in broad terms. Google’s Sameer Samat said that Android 16 would be available for Pixel devices first next month, with availability for other phones — including Samsung’s Galaxy S models — coming later in the summer.

Revisiting Project Astra

Project Astra was the headline-grabbing demo of Google I/O 2024, featuring an AI-powered assistant that was smart enough to both see what you were seeing and respond to voice commands. It’s an excellent example of multimodal AI, and while some of its features can be found in Gemini Live, Astra really is seen as an AI assistant for smart glasses.

We’re anticipating a Project Astra update at this year’s Google I/O, so it may be worth revisiting the 2024 demos that included using the camera on a phone to enable the AI assistant to recognize objects, answer questions and act upon the data it recognized.

Meet Gemini 2.5 Pro

Ahead of this week’s Google I/O, we’ve already gotten a hint about at least one software update that should enjoy some attention during the show. Gemini 2.5 Pro Preview (I/O Edition) debuted earlier this month, with Google likely to explain more about the update during its I/O conference.

The update focuses on coding and building interactive web apps, according to Google. Specifically, Google has improved performance on coding-based prompts across the board, with particular attention to code transformation and editing existing code.

Given I/O’s developer-centric focus, it wouldn’t be unimaginable that Google would devote some time during its I/O keynote to this particular topic.

Google’s Pinterest competitor

According to a report in The Information, Google I/O could see Google introduce us to a new service that’s meant to challenge Pinterest.

Specifically, Google is supposedly working on a service that would let people see images related to fashion or interior design. You’d then be able to save images to folders, drawing on them for inspiration later on.

It’s unclear how close this Pinterest competitor is to launching — and honestly, it doesn’t sound like the developer-focused announcement Google would make at I/O. But that’s one of the big rumors circulating about this week’s event ahead of the Tuesday keynote.

Are smart glasses coming to Google I/O?

With all the talk of Project Astra and Android XR, it feels inevitable that some sort of smart glasses will appear at Google I/O, even if it’s just a demo to show off how Google’s AI efforts can work in mixed reality. And Google is doing little to tamp down that talk.

At the end of last week’s Android Show stream, Android chief Sameer Samat mentioned a number of exciting demos coming up at I/O this week… as he very noticeably put on a pair of sunglasses. Since we assume it wasn’t to block out the glare of the stage lights, we’re going to treat that as a teaser.

The latest on Wear OS 6

Android may have gotten the focus at last week's Android Show event, but Google has other software platforms, and Wear OS is getting some changes, too. Google's smartwatch software will soon be updated, with Wear OS 6 boasting a number of noteworthy improvements.

- The same Material 3 Expressive design coming to Android 16 is also part of Wear OS 6, with scrolling animations that follow the round displays of Google's watches and shape-morphing elements that adapt to the watch's screen size.

- Full-width glanceable buttons stretch across the width of the watch display to make them easier to tap.

- As part of Google's push to extend Gemini beyond smartphones, the smart assistant is coming to Wear OS so you can use natural language queries to interact with your watch.

- A 10% improvement in battery life is coming thanks to behind-the-scenes changes.

- Dynamic color theming extends the colors of your watch face across the Wear OS interface.

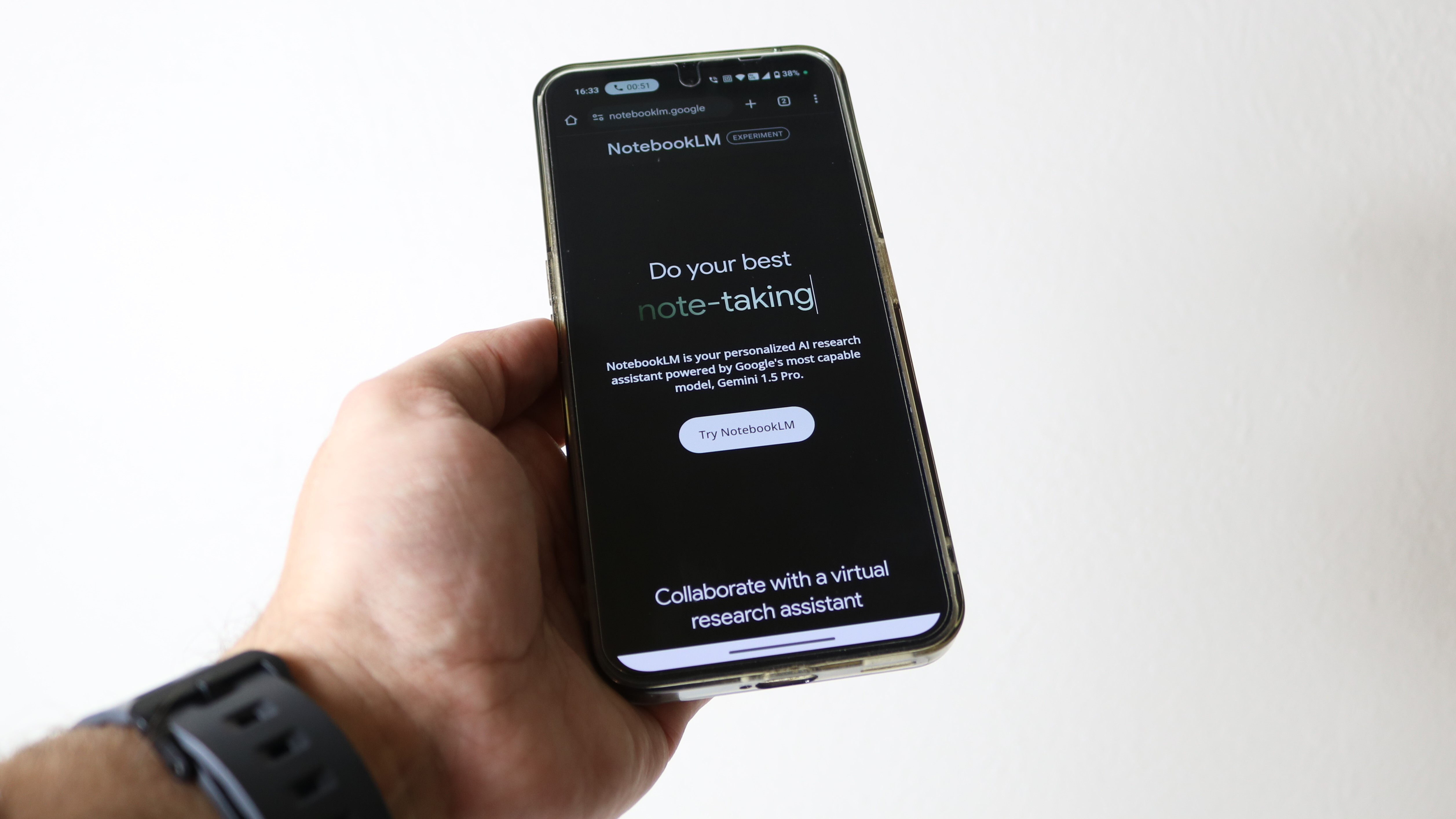

NotebookLM hits Android

Google had some product news just ahead of Google I/O. On Monday (May 19), it announced iOS and Android versions of NotebookLM, its AI-powered research assistant.

If you're not familiar with it, NotebookLM can process complex information and produce meticulously sourced results. It's capable of summarizing long PDFs, pulling key details out of your files, comparing different documents side by side and more. The mobile version of NotebookLM puts all those capabilities on your phone, extending the product's reach.

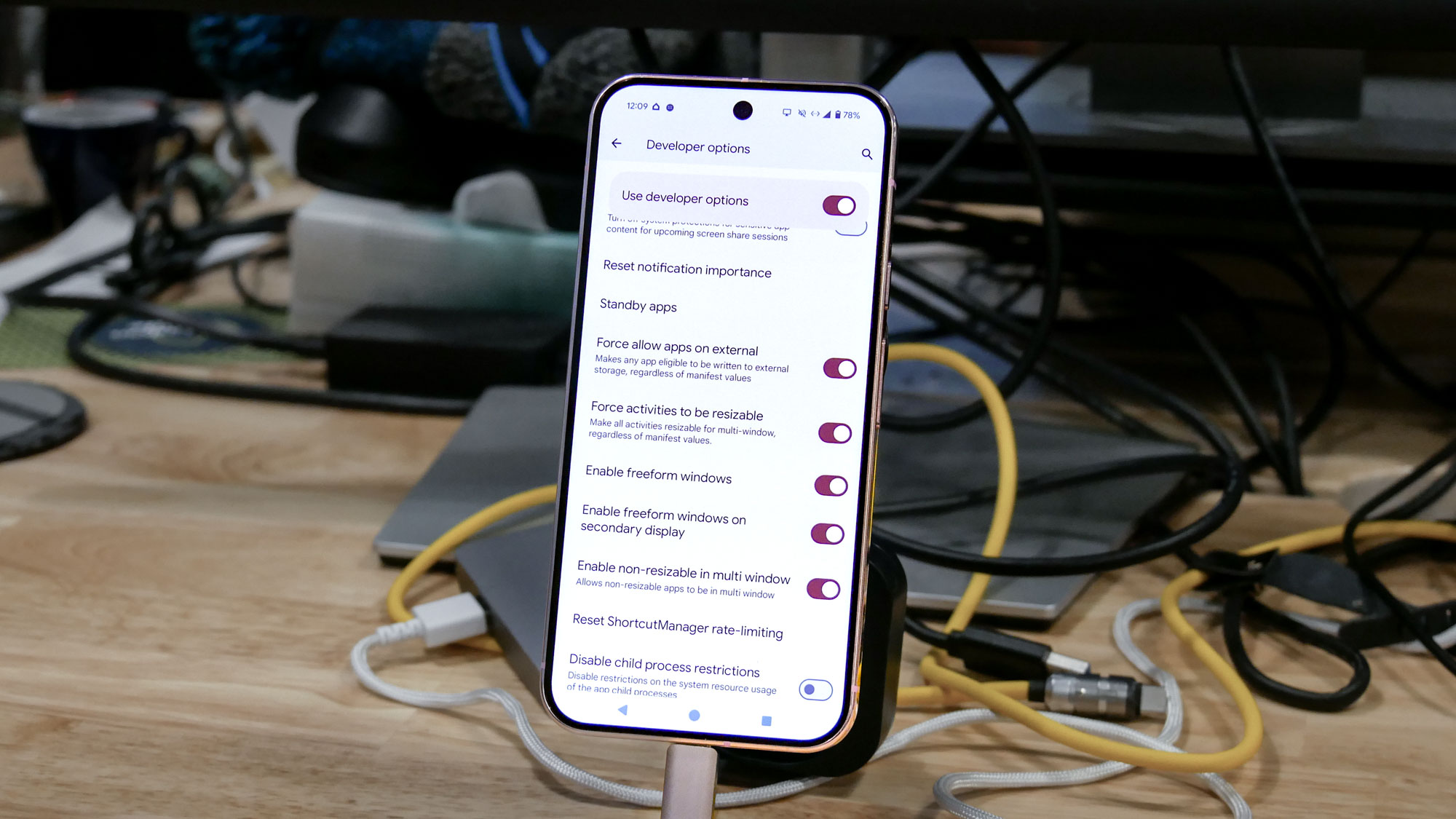

An Android 16 wish

We don't know what Google has left to say about Android 16 — if it has anything to say at all in the wake of a whole event last week dedicated to Android. But if the subject does come up, my colleague John Velasco has a request: he wants to see desktop mode become a permanent fixture for Android.

Samsung offers DeX on its flagship phones for using your phone as a laptop replacement when you plug it into an external monitor. And Pixel phones have that capability, too, though it's a bit of a hidden feature on the latest Pixel 9 models. Nevertheless, John argues, it's something all Android phones could benefit from.

Looking ahead to I/O

Earlier I posted a Google I/O preview, which covers in a little more depth some of the things we're likely to see during the keynote. But I also wrote about the show's heavy focus on AI this year — at least, from all outward signs — and why that's leaving me a little wary.

It's not Google's fault, really. But given the industry-wide obsession with AI as of late, and the very high hype-to-tangible-benefit ratio, I find myself somewhat numb to the promises of what AI can do for me. That's especially true because the features that have reached me so far, while impressive in some cases, haven't really changed my day-to-day use of technology in any significant way.

I don't need to see a breakthrough in that regard at Google I/O. But I am hoping Google spends more time explaining the potential benefits of the technology it's showing off instead of just focusing on the wow factor.

Dropping hints

"Having a deep think..." pic.twitter.com/oZPH2jAWyV (May 20, 2025)

On Monday evening, Google CEO Sundar Pichai took to X to tease the Google I/O keynote that's a few hours away. The picture features Pichai standing on the stage of the Shoreline Amphitheatre in Mountain View, the setting for the keynote, along with Demis Hassabis, CEO of Google DeepMind.

In case the AI-heavy implications of that image weren't enough for you, Pichai added a none-too-subtle message: "Having a deep think." If it wasn't clear before, it's very clear now that AI is on the minds of Google executives heading into I/O.

Gemini's reach is growing

One thing we already know headed into Google I/O — Gemini is going to find its way onto a lot more devices than just your phone.

That was a focus of last week's Android Show, where Google executives outlined plans to bring the Gemini assistant to devices like smartwatches, TVs, cars and headsets.

That last product is particularly relevant if, as expected, Android XR becomes a focus at this year's I/O. The way Google sees it, its AI can create a more personalized, immersive experience when paired with headsets and glasses running Android XR. The example cited by Google revolves around vacation planning: while wearing an Android XR device, you can ask Gemini for help researching a trip, and the results of that search, from photos to hotel details to potential experiences, can float up before you.

There's no telling if we'll see a demo like that in action at I/O, but I'd certainly count on Google expanding on the use of Gemini on devices other than smartphones.