I’ve been using ChatGPT Voice — 7 ways it beats the new Siri with Apple Intelligence

The competition is heating up between AI voice assistants.

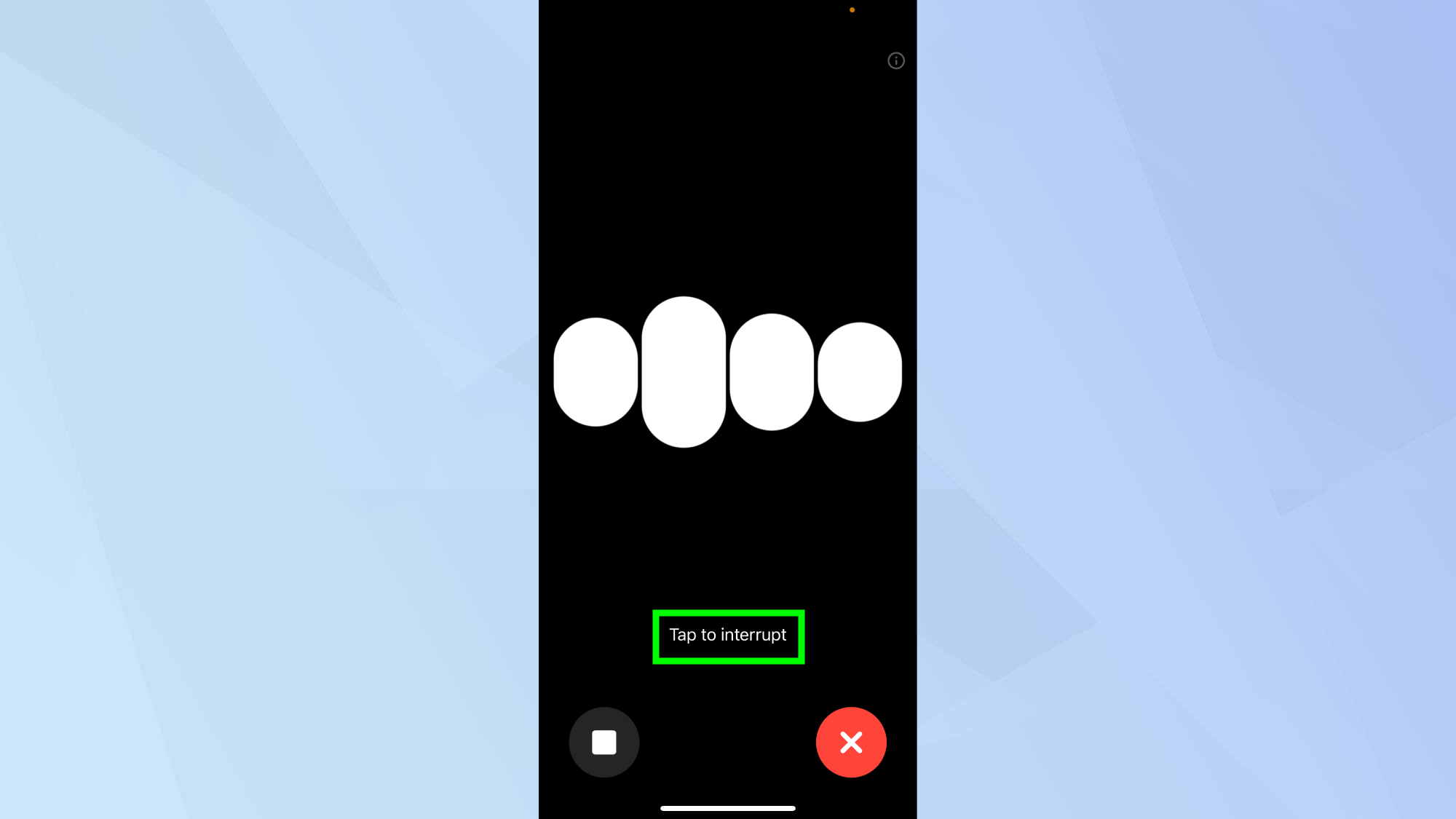

As AI voice assistants continue to improve, I couldn’t help but notice some key differences between two of my favorites: ChatGPT Advanced Voice and the new Siri.

Both assistants have a number of standout features, including a new design, faster response times and the ability to control the app with your voice. However, Apple’s advancements in AI still fall short in several areas, and the company’s own internal reports suggest it is at least two years behind.

I've pulled together a list of things I find useful in ChatGPT that Apple's engineers should consider adopting to make Siri as good as it can be. Doing so would help Siri earn its title as the centerpiece of the Apple ecosystem and the controller for Apple Intelligence.

1. Engaging in complex conversations

I’ll start with perhaps the most obvious highlight, which is ChatGPT’s ability to maintain long, complex conversations while understanding context. ChatGPT remembers previous details and can use them again in future responses. This makes the conversation far more engaging and human-like.

While Siri is now better at following along if you stumble over your words, it is still not as conversational as ChatGPT. Because it cannot recall previous queries, every conversation with Siri is like starting over, which can make interactions feel fragmented.

For Siri to compete, Apple needs to improve its recall and memory so the assistant can track discussions and offer more natural responses. It also needs to handle follow-ups better, as not everything can be resolved with the first question.

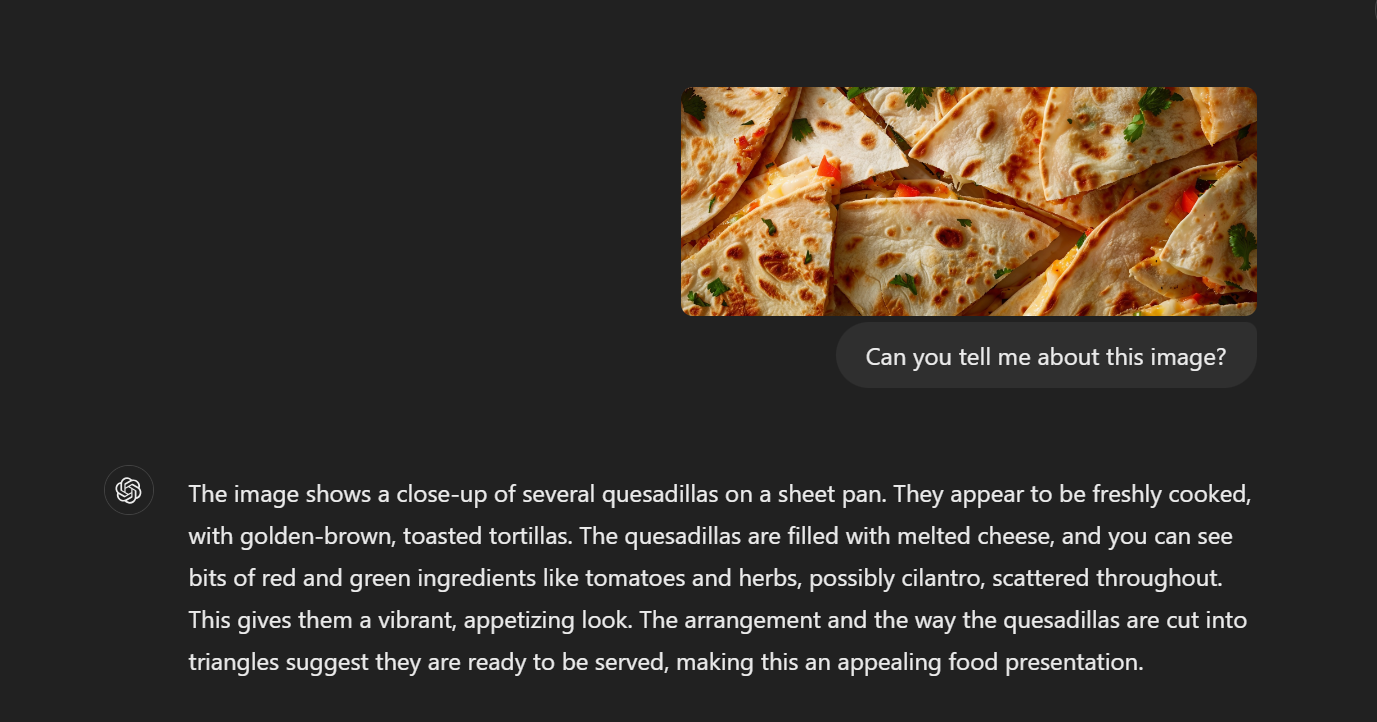

2. Multimodal capabilities

Siri does not yet have visual comprehension. ChatGPT can not only listen and speak but also “see”: if you ask it to analyze, describe or generate insights from photos or diagrams, it will provide analysis and contextual information based on the image.

This is coming to the Apple ecosystem through Apple’s Visual Intelligence, which will likely become accessible to Siri in the future. Meta AI has a feature that lets it describe what it can see through Meta’s smart glasses in response to a query like "What am I looking at?" That would be a good model for Apple to follow in giving Siri eyes.


3. Context awareness

This is one area where the new Siri has improved. You can ask it what the weather is like and it will give you a response, or you can ask whether you need an umbrella and it will tell you if it is currently raining. However, it doesn't go as far as ChatGPT Voice in terms of contextual conversation: OpenAI's bot responds more naturally, often with an explanation.

Siri can access your location and the current weather to give you real information. What it can't do is respond in detail. All you'll get is the temperature in response to "do I need a jacket?" or "I don't think it's raining" if you ask whether you need an umbrella.

ChatGPT, by contrast, will respond with something like "it might be a good idea, as that is a fairly low temperature," but you have to tell it the temperature first, as voice mode has no live data.

Apple would need to significantly enhance Siri’s ability to draw contextual connections between queries to match ChatGPT’s fluid conversation model. That said, ChatGPT would need live data in its voice mode to be a truly useful assistant.

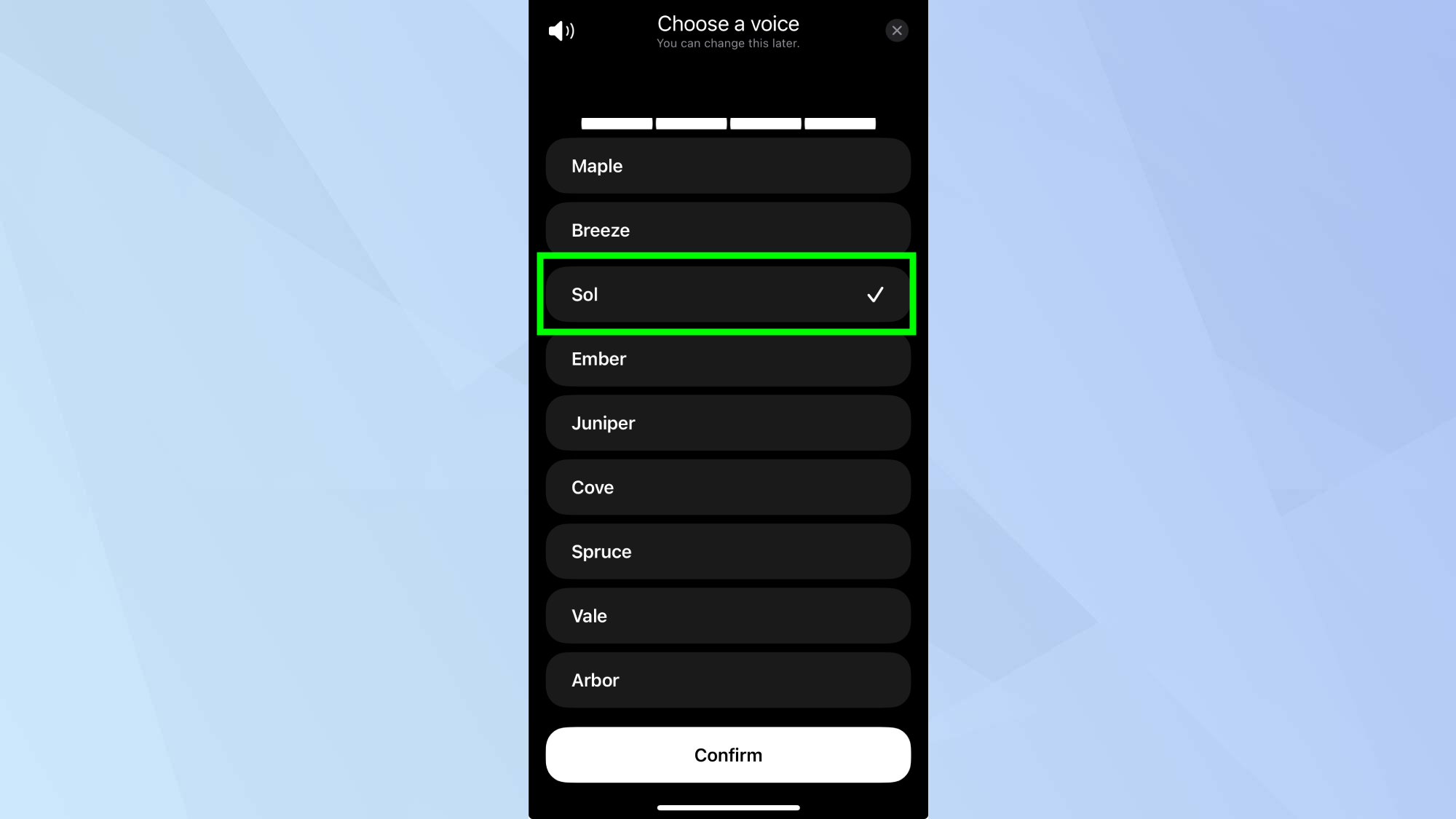

4. Natural, adaptive voice

ChatGPT Voice uses advanced natural language processing to adapt its tone, style, and conversation to the user. It adds human touches such as scoffs, pauses and even ums that make it feel more natural than Siri.

Although Apple Intelligence has improved Siri’s voice, it still lacks that nuanced adaptability. Apple would need to invest more in natural language processing and speech dynamics for Siri to match what ChatGPT Voice can do. What Siri does have in its favor is that it largely runs locally, so it can work offline.

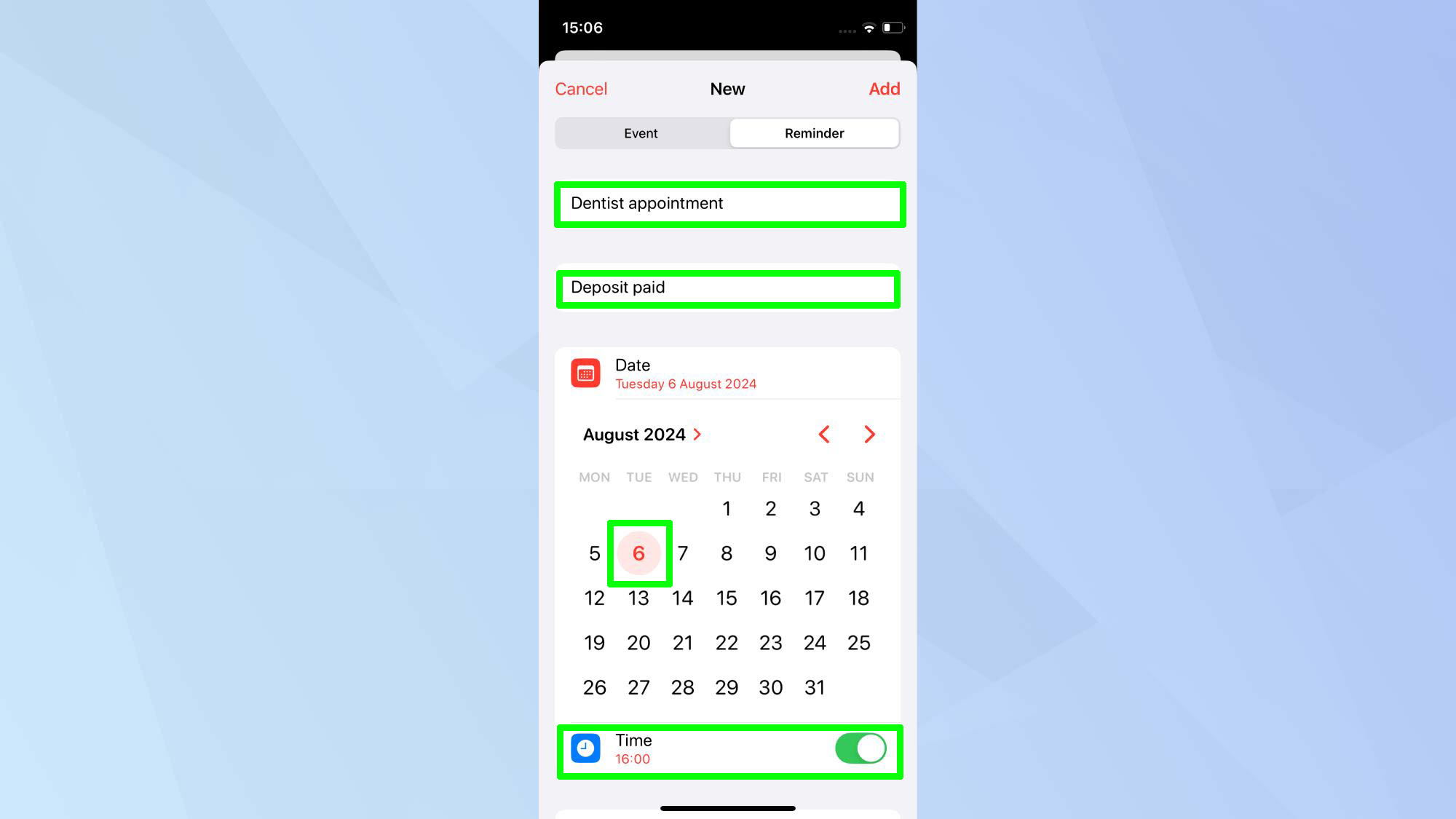

5. Beyond basics

When you want to set a reminder or send a message, Siri is great. It can help you keep track of your medications and the apps you've subscribed to, and it can even help you share your Wi-Fi password. Siri can send text messages and emails, but you have to tell it what to say. For instance, when Siri starts an email, it asks you for the subject line, whereas if you ask ChatGPT to help you write an email, it creates the subject line for you. ChatGPT Voice takes basic tasks further by helping with complex problem-solving, which means it can help you write the message you want Siri to send.

The AI model handles tasks that require deeper analysis and understanding, which Siri simply cannot do. ChatGPT’s ability to draft things like subject lines and to analyze tasks beyond basic commands puts it a step ahead. Siri would need deeper machine learning capabilities to handle more analytical and creative tasks without step-by-step prompting from the user.

Alternatively, and this is something I think we will see, Apple could partner with OpenAI to hand over some of the more complex tasks to the more advanced model.

6. Capability to learn

ChatGPT knows me so well that it can effectively build a profile of me. That’s because it learns from its interactions and improves its responses as it gathers information. Every interaction helps it become better attuned to the specific preferences and conversational habits of each user, making future conversations more tailored.

Siri, despite regular software updates, doesn’t learn from individual conversations in real time, so it never gets to know users in the same way ChatGPT Voice can. Apple would need to implement real-time learning algorithms for Siri to provide more personalized, adaptive responses.

When Apple does adopt these capabilities, Siri will be unstoppable, as it has something OpenAI can only dream of: huge personal context. That means a vast library of personal data, from emails and text messages to downloads and notes, which Siri can draw on in a safe, locally secure way to improve its responses and be more helpful.

7. Multilingual fluency

ChatGPT Voice handles a vast number of languages fluently and can switch between them in a single conversation. Siri supports multiple languages but doesn’t offer the same flexibility for switching mid-conversation or handling advanced translations.

For Siri to compete, Apple would need to focus on improving its multilingual processing abilities. It does handle translation on-device, but this is still an add-on rather than something deeply integrated into the AI, as it is in ChatGPT's voice mode.

Bonus: content creation

Siri is limited to answering questions and performing basic tasks, while ChatGPT can generate the outline for your next novel. In short, Siri isn’t capable of creative content generation or customized outputs because it isn’t as contextual as ChatGPT.

Conclusion

From handling longer, contextual conversations to integrating multimodal abilities, ChatGPT Voice pushes the boundaries of what voice AI can achieve. While Siri remains an integral part of the Apple ecosystem, ChatGPT Voice offers more advanced features in terms of conversation complexity, multimodal capabilities, and problem-solving.

For Siri to close the gap, Apple will need to invest in improving Siri’s memory, contextual understanding, natural speech adaptability, and real-time learning capabilities.

More from Tom's Guide