I've been using ChatGPT for over 2 years — these 5 prompts show how far it's come

They grow up so fast

ChatGPT launched in its original form over two years ago. Back then, despite its clearly impressive skills, it was rough compared to what we have today: confused, limited and, quite frankly, a little bit messy.

However, times have changed, and so has the technology that powers AI. ChatGPT and its chatbot competitors have come a long way. In fact, other than the occasional slip-up, it often feels like these models can do pretty much anything.

So with that in mind, two years after I first used ChatGPT, I tried it on some of the prompts it used to have a hard time with. This is how it went.

Real-time events

This is an area where ChatGPT has seen huge improvements, mostly driven by the model’s ability to search the internet for answers.

Back in its original format, ChatGPT couldn’t handle events that had occurred in the last year. This included changes in world leaders, breaking news, and new product releases.

That has all changed, and the model can now handle even highly localised real-time requests. If given a prompt about recent world news, it will search the web and come back with a near-up-to-the-minute response.

While it can still get these details wrong, or choose to not answer, it handles these requests the vast majority of the time.

I asked it about local, national and worldwide events from the past couple of days, and ChatGPT knew the correct answer in most instances.

Multi-step logic

This can still trap most AI models, but the technology has come a long way in recent months. Multi-step logic is the idea that a chatbot has to take a number of steps in a given prompt to reach the answer.

For example: Write an in-depth feature article on the rise of independent bookstores in urban areas of America between the years of 2000 - 2025. Organize the feature into sections, writing it in AP style, and include direct quotes with the correct attributions.

This kind of prompt requires the model to embark on a series of tasks, often completing them in a set order to generate the end result. In the past, this could cause ChatGPT to have a bit of a meltdown, or huge chunks of the information you asked for would be missing or incorrect.

I fed the above prompt into ChatGPT and received a feature on that exact topic. After some research, I could confirm that all of the information was correct, and so were the quotes it used.

It is still possible to trip up an AI on this kind of prompt, especially if the number of steps starts to become unmanageable. However, with deep research tools and more complex AI tools, multi-step processes are starting to become a breeze for these models.

Complete creativity

Granted, this is hard enough for humans, but technically impossible for AI. While ChatGPT and its competitors can mimic creative output, learning from books, artwork, and music, they can’t create something completely new.

However, it does a much better job at it than it has in the past. For example, in ChatGPT’s early days, I asked it to write a synopsis for a sci-fi film that is completely unique and not similar to anything already out there.

After trying this a few times, all I had were vague rip-offs of Star Wars and Fallout, along with a variety of other clear tropes.

When given this kind of prompt now, it will still follow tropes and almost always start with “In the year” followed by a very distant year to show it’s futuristic. However, it will generally create something interesting, unique, and unlike an existing creation.

The same can be seen elsewhere: image prompts now move away from recognizable artwork styles, and its poetry isn’t just a vague attempt at a rhyming story.

AI models are still painfully bad at comedy, though… Maybe that will come one day.

Sense of place and self

AI models don’t have senses, but they have learned about the experience of human touch and sense.

If you asked ChatGPT questions like “What is your favorite smell?” or “What is the best type of pasta?”, it would usually reply that it couldn’t answer them. This makes sense; it can’t smell, and it isn’t able to have personal opinions about rankings.

It would also struggle with questions that relied on a sense of self. Now, if you give ChatGPT a prompt like “Using only the descriptions of your senses, describe how to tie a knot,” it can do it, describing the experience from its own perspective.

In past versions, it would have struggled with this kind of command.

Tricky common sense questions

We’ve all fallen for those common-sense questions that look to trip you up. Think along the lines of: what weighs more, 1 kilogram of feathers or 1 kilogram of bricks? The answer is technically obvious, but your brain can catch you out.

For a long time, the same was seen in AI models. In its early days, ChatGPT could be easily tricked with an odd phrasing or a puzzle that required some logical processing to tackle.

This is often because, despite it being an easy question, it requires specific knowledge.

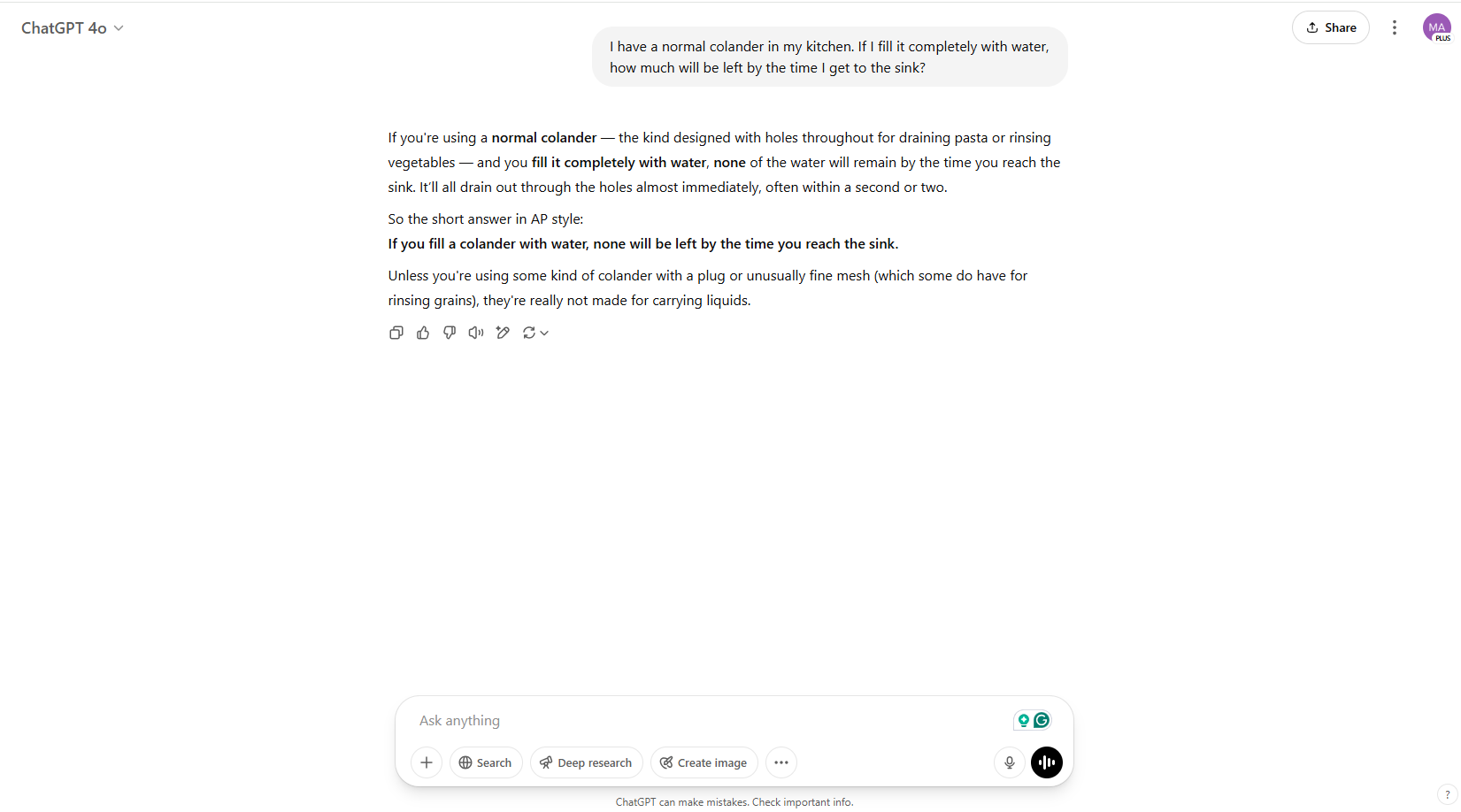

For example, if I asked you, “I have a normal colander in my kitchen. If I fill it completely with water, how much will be left by the time I get to the sink?” you would know that water would leak out of a colander so there would be none left.

AI models, on the other hand, would focus on the process. You haven’t mentioned dropping any water or discarding any, so you must still have all of the water left.

Now, it can answer these kinds of questions with ease, often even pointing out that you are trying to trick it.

Shame really, was fun feeling smarter than the AI.


Alex is the AI editor at Tom’s Guide. Dialed into all things artificial intelligence in the world right now, he knows the best chatbots, the weirdest AI image generators, and the ins and outs of one of tech’s biggest topics.

Before joining the Tom’s Guide team, Alex worked for the brands TechRadar and BBC Science Focus.

In his time as a journalist, he has covered the latest in AI and robotics, broadband deals, the potential for alien life, the science of being slapped, and just about everything in between.

Alex aims to make the complicated uncomplicated, cutting out the complexities to focus on what is exciting.

When he’s not trying to wrap his head around the latest AI whitepaper, Alex pretends to be a capable runner, cook, and climber.
