I write about AI for a living — 5 new features I want to see on the next iPad

Running AI on the iPad should be trivial

Apple’s next-generation iPad may well be the perfect AI device. Rumors suggest it will have the powerful, AI-first M4 chip and, with iPadOS 18, will make heavy use of locally running AI models.

With this power will come a host of new privacy-focused and AI-powered features including better transcription, on-device text summary and improved image editing capabilities.

These features are unlikely to be part of the updated iPad lineup expected to be revealed at the Let Loose event on May 7, as they will rely on updates to iPadOS and the Apple Silicon chipset not likely to be unveiled until WWDC in June.

What can we expect from future iPads?

Future iPads, likely released in the fall, will have a heavy AI focus thanks to the M4 series of chips, which, according to rumor, is designed to run demanding AI workloads on device.

However, even this generation of iPad makes for a compelling AI device. It is able to run models like Llama 3, handle complex AI tasks such as image generation and video editing and, using the camera, perform a range of AI vision tasks like those revealed for the new Meta Ray-Bans.

What we can expect from Let Loose is updates to the current family of iPads with better screens, the M3 chip and general hardware improvements across the board. Apple will likely brand them as AI capable, or even the best AI tablet on the market.

What I’m waiting for is the true AI tablet and I don’t think we’ll see that this side of the summer if Apple’s usual next-generation release schedule is anything to go by.

What features I’d like from the next-gen iPads

1. Easy integration of third-party models

Apple is expected to put the M4 into the iPad and with this a major improvement to the neural engine. Running large language models on a neural engine is more energy efficient and often faster than running them on a GPU.

On the Mac, Apple has MLX, an open-source machine learning framework for Apple Silicon that makes it easier to run third-party AI models locally and work with them through apps. I think Apple should make this a core part of a future iPadOS.

The company has been rumored to be working with companies like Google, Baidu and OpenAI to bring models to the Apple ecosystem and this is likely to make the technology available for developers to integrate into apps without having to rely on sending data to the cloud.

2. Better image editing

AI image editing and image generation have come on significantly since the release of iPadOS 17 last year and Apple has struck a deal to license images from Shutterstock to train a generative AI image model.

What I’d like to see is Photos getting a major AI upgrade with the equivalent of Photoshop’s generative fill as a core feature. There are already some fun AI image features on the iPad, including being able to pop a person out of a background or remove the background entirely.

With better generative AI integration we could also see the ability to change the orientation or change the canvas size of an image and have AI fill in the missing pieces — without having to crop to change to landscape or portrait.

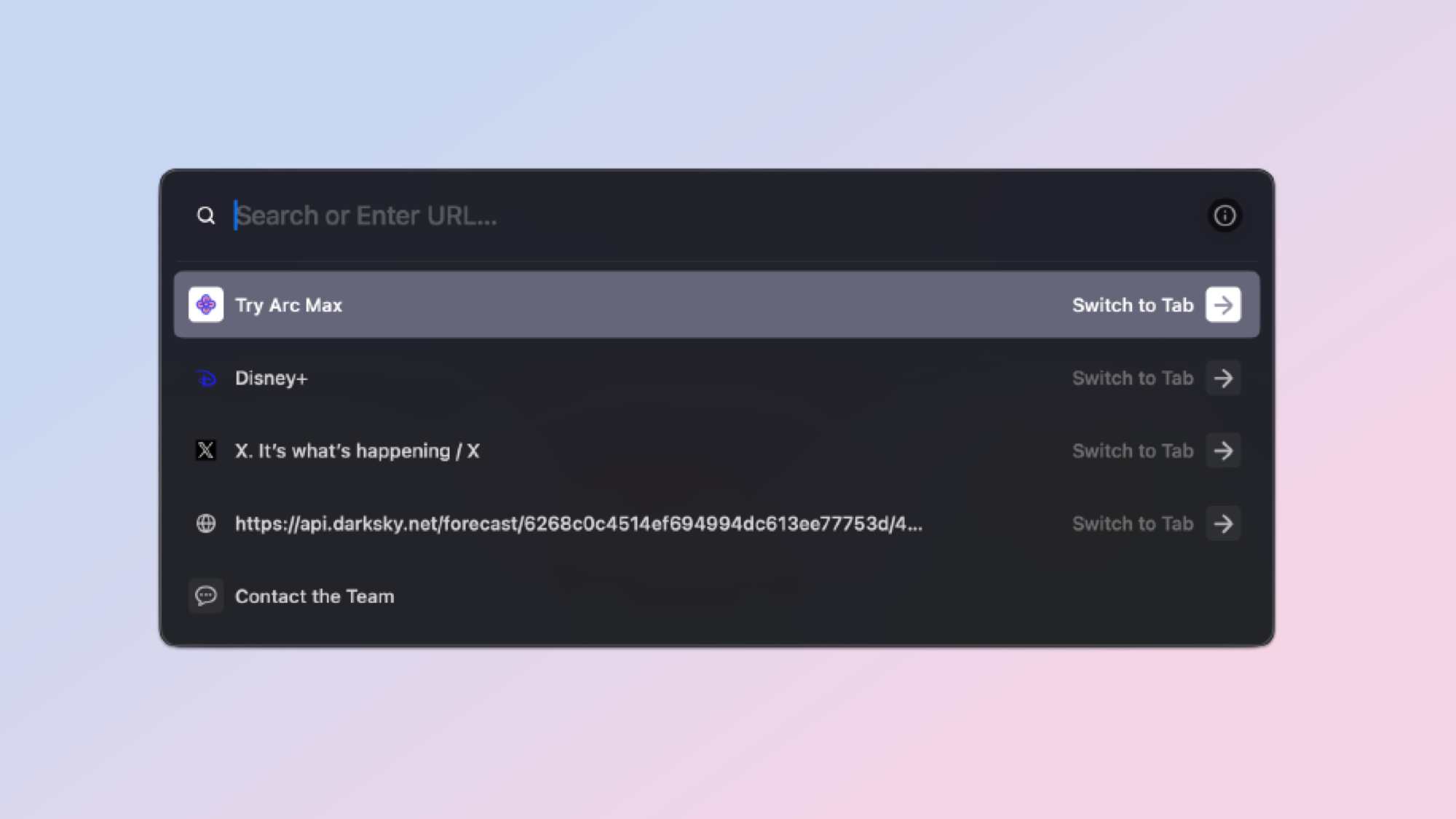

3. Better Spotlight

Spotlight is both the best and worst feature on any Apple device. It has the potential to be game changing, especially if Apple integrates generative AI into the workflow.

Look at what The Browser Company has been doing with Arc, including creating content pages on the fly about a particular search topic with the help of AI. This is something Apple could do with Spotlight, including bringing in information on your iCloud files and data.

An improved Spotlight would make it easier to find content on your iPad, work with that content and even make connections. It could be in the form of a ChatGPT-style chatbot where you use natural language prompts to work with your own content and browse the web.

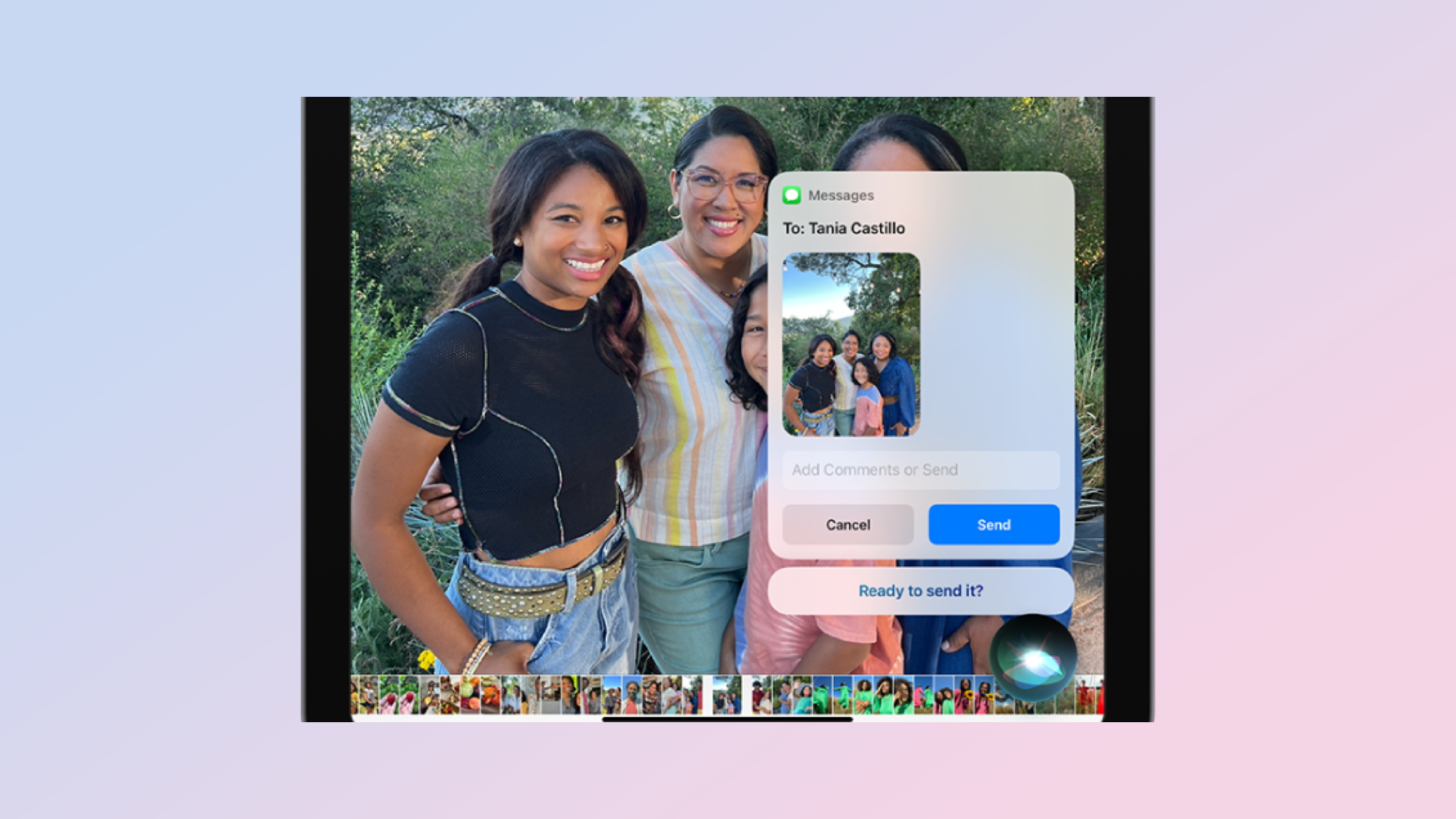

4. Better Siri

I like Siri a lot more than some people seem to on social media. It gets a bad rap in part because Apple sacrificed some functionality in favor of security and running on-device. With the arrival of improved AI chips and on-board models that problem goes away.

Apple should look to what Meta is doing with Meta AI in the Ray-Ban smart glasses as a way to improve how Siri works. Meta AI uses on-device processing as well as the cloud, has access to AI vision features to describe the real world and can offer advice.

Apple’s research team has published a series of new models like Ferret-UI that are capable of understanding what is happening on screen and providing analysis. This could be built into a new Siri to allow for a deeper integration with iCloud and the operating system.

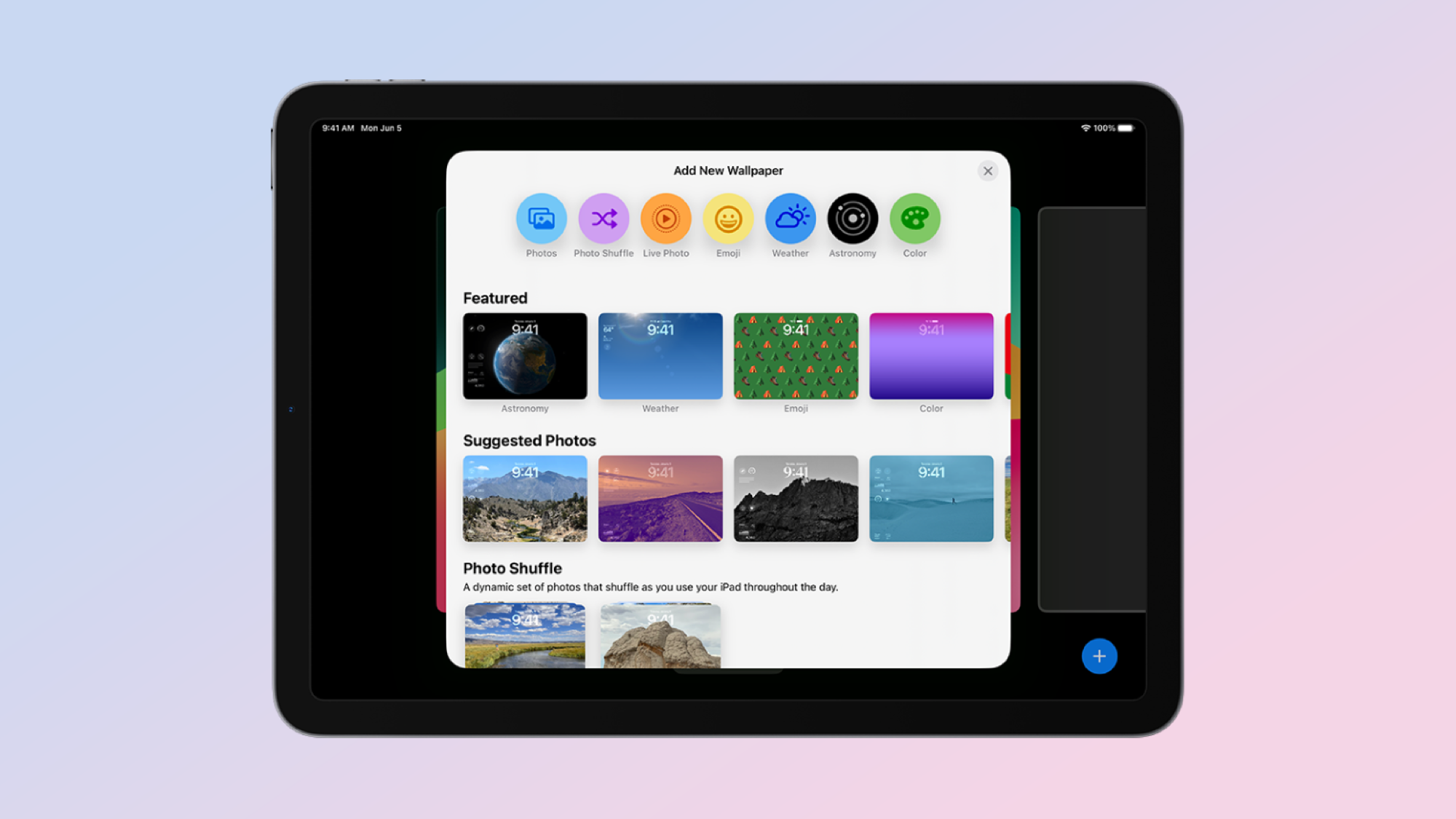

5. Improved customization

Apple settled on a layout for apps and said “good enough” a few years ago. A few minor tweaks such as the addition of widgets aside, it really hasn’t changed much since version one. For me, that isn’t much of a problem as it works — but what if AI could give everyone a layout that works for them?

Bringing together many of the other feature ideas, generative AI and natural language prompts could make it easier than ever to customize every aspect of your iPad from the shape of icons to where they are positioned on the screen.

It could allow you to generate wallpaper from a text prompt and create widgets based on the output of multiple apps instead of having one widget per app. This could also then be integrated into the new-look Spotlight and allow you to change settings by typing something like “automatically make the screen darker at 9pm every night.”

How likely are these changes?

I think some of this will happen, particularly GenAI in Photos, an improved Siri and deeper integration of AI into the operating system, but some of it will come from developers rather than Apple.

There are rumors of an AI App Store coming and Apple is likely to create a new suite of APIs and developer kits that app makers can use to get the most out of the power of the M4.

We might see some high profile third-party integration like Adobe running GenAI locally in Photoshop or Express for iPad. We also might see Apple’s own apps get an AI upgrade including text completion in Pages or AI editing in iMovie — but the deeper system changes might have to wait until iPadOS 19.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?