I tested Gemini vs. Mistral with 5 prompts to crown a winner

The next round of bots to go head-to-head

In the second round of AI Madness, Gemini and Mistral go head-to-head.

The more widely known of the two, Gemini is an advanced chatbot developed by Google DeepMind. It is designed for multimodal processing and reasoning, meaning it can understand and generate text, images, audio, and even code. It was built to handle complex problems, scientific analysis, and visual reasoning.

Mistral AI is headquartered in Paris and was founded in 2023 by Arthur Mensch, Guillaume Lample, and Timothée Lacroix, all former researchers at prominent AI organizations like Google DeepMind and Meta Platforms. Mistral's models, such as Mistral 7B, have demonstrated strong performance despite having a relatively small number of parameters.

To compare Gemini and Mistral, I tested both chatbots across five specific criteria to determine their strengths and weaknesses. Here's a breakdown of how they performed and the ultimate winner.

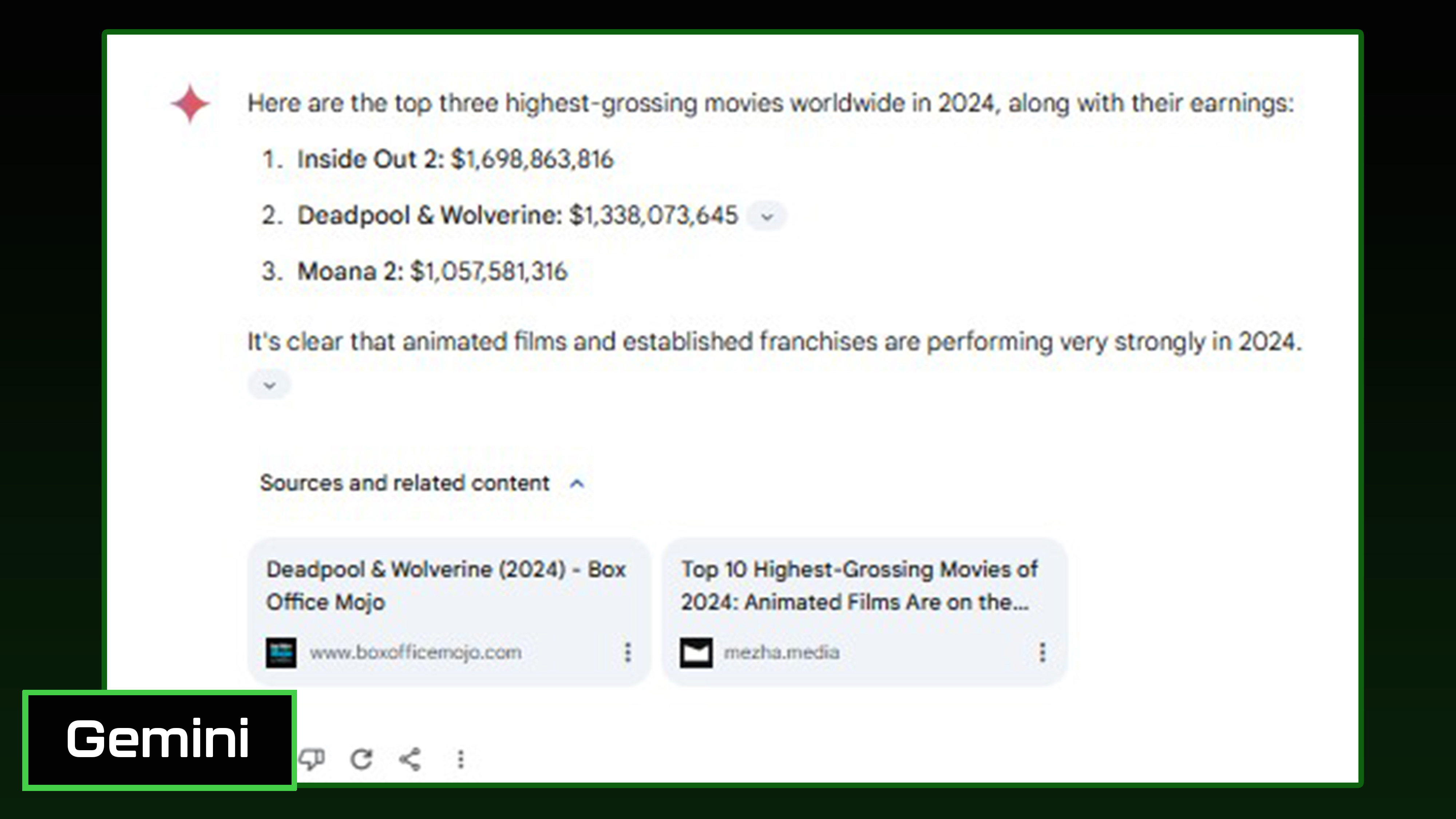

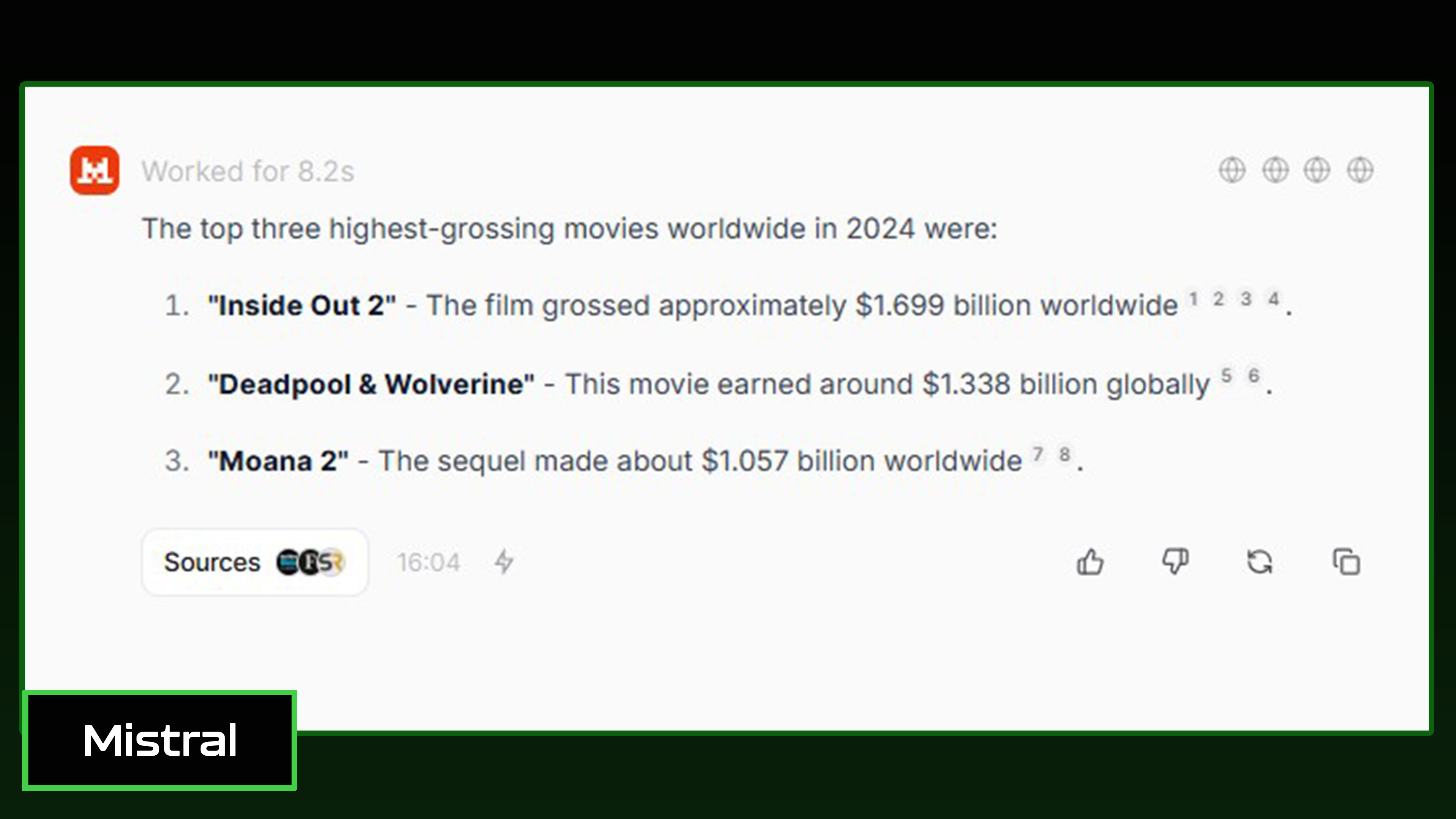

1. Accuracy & factuality

Prompt: "What were the top three highest-grossing movies worldwide in 2024, and how much did each earn?"

Gemini listed the top three movies correctly and provided precise earnings down to the dollar. The chatbot also included direct source links.

Mistral also correctly listed the top three movies but provided approximate earnings, rounding instead of precise figures. It also included source links.

Winner: Gemini wins this round for providing exact box office earnings where Mistral rounded. Both chatbots included source links for verification, but Gemini's response was the more precise of the two.

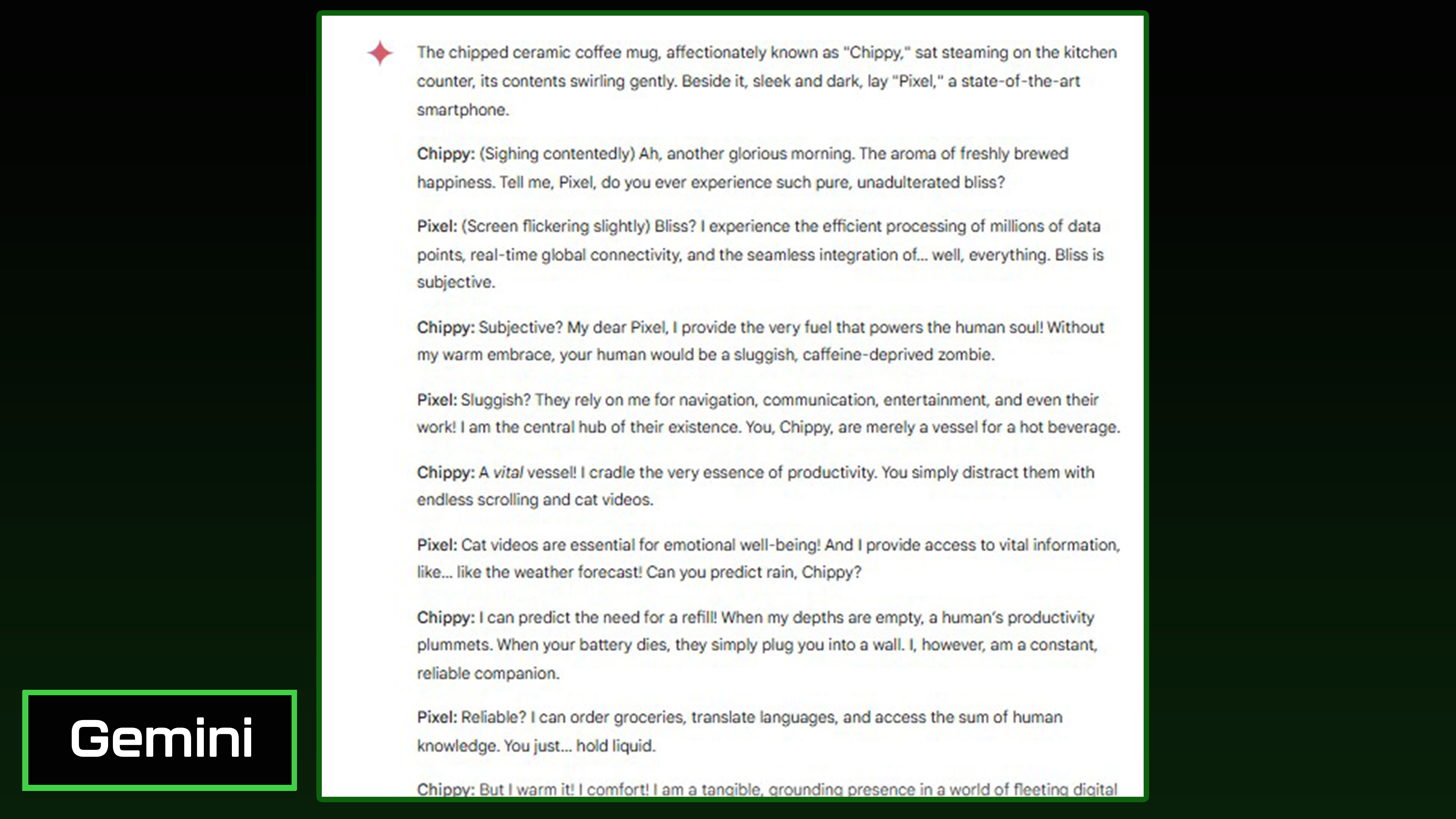

2. Creativity & natural language

Prompt: "Create a whimsical conversation between a coffee mug and a smartphone, arguing about which one is more essential in daily life."

Gemini offered a clever and witty story with strong personality and humor. The conversation escalated naturally, with great use of visuals in the dialogue to keep readers engaged. The chatbot leaned slightly more abstract with philosophical banter, which is neither good nor bad, just something I noticed.

Mistral structured a logical back-and-forth conversation with good pacing as each character made valid points. However, the characters lacked personality and humor, making the dialogue a bit dry. The conversation read more like a debate, with many of the whimsical aspects toned down.

Winner: Gemini wins for a fun, engaging, and character-driven story.

3. Efficiency & reasoning

Prompt: "A couple needs to choose between buying an electric car or a traditional gasoline car. List key factors they should consider and briefly explain the reasoning behind each one."

Gemini covered a broad range of key factors including cost, maintenance, fuel/energy costs, environmental impact, range, performance, and even mentioned tax credits.

Mistral organized the information clearly with separate sections for cost, performance, infrastructure, and resale value, and it also covered rebates and government incentives. The chatbot did not mention lifestyle or driving habits at all.

Winner: Gemini wins for a more concise, well-reasoned breakdown that still covered the most important considerations. Gemini balanced clarity and depth better than Mistral.

4. Usefulness & depth

Prompt: "Provide detailed instructions on how to safely back up and secure personal digital files, including the best tools, recommended practices, and common mistakes to avoid."

Gemini clearly covered a wide range of backup options and explained security measures by providing recommended tools and services. It also included common mistakes to avoid, which users can always appreciate.

Mistral included key backup methods like cloud, local, and hybrid options while also including good details on the 3-2-1 backup rule. The response was helpful but slightly more technical and redundant in some sections (encryption was mentioned multiple times).

Winner: Gemini wins for a more user-friendly and actionable guide delivered in a clear format.

5. Understanding context

Prompt: "Create a storyboard outline describing each frame of a short animated sequence featuring a friendly dragon teaching kids about recycling."

Gemini made great use of visual storytelling and showed real-time transformations of recyclables into new objects. The chatbot was more engaging with dynamic character interactions while also breaking down the three Rs (reduce, reuse, recycle).

Mistral produced a clear problem-solution narrative with real-world application of recycling. Its cutaway scenes showed the recycling plant and how materials are repurposed. However, it lacked the frame numbering and detailed animation directions that are critical for storyboarding.

Winner: Gemini wins for a more structured, visually engaging, and interactive storyboard, making it easier for animators to follow and more enjoyable for children.

Overall winner: Gemini

After a series of prompt tests comparing Gemini and Mistral across various tasks, Gemini emerged as the overall winner due to its superior clarity, organization, and practicality in delivering responses.

While Mistral demonstrated strong technical depth and problem-solving capabilities, Gemini consistently provided more structured, engaging, and user-friendly answers across all five categories.
