I tested Gemini 2.0 vs Perplexity with 7 prompts created by DeepSeek — here's the winner

DeepSeek chose a winner, too

AI chatbots are evolving fast, but not all are built the same. Perplexity AI and Gemini 2.0 are two very advanced AI models available to users for free, and each has unique strengths.

To find out which is best, I tested both AI models with seven prompts. And to add a unique twist, I had DeepSeek V3 craft the prompts.

While Perplexity AI excels in real-time web search and factual retrieval, Gemini 2.0, backed by Google, boasts deep integration with Google's ecosystem and multimodal capabilities. But how do they compare in terms of accuracy, reasoning, creativity, and practical usability?

I evaluated Gemini 2.0 vs Perplexity based on accuracy and depth of information, clarity and coherence of explanations, creativity and originality, logical reasoning and problem-solving, as well as how each handled nuanced and subjective topics.

Here’s what happened and how the AI models compared in this face-off.

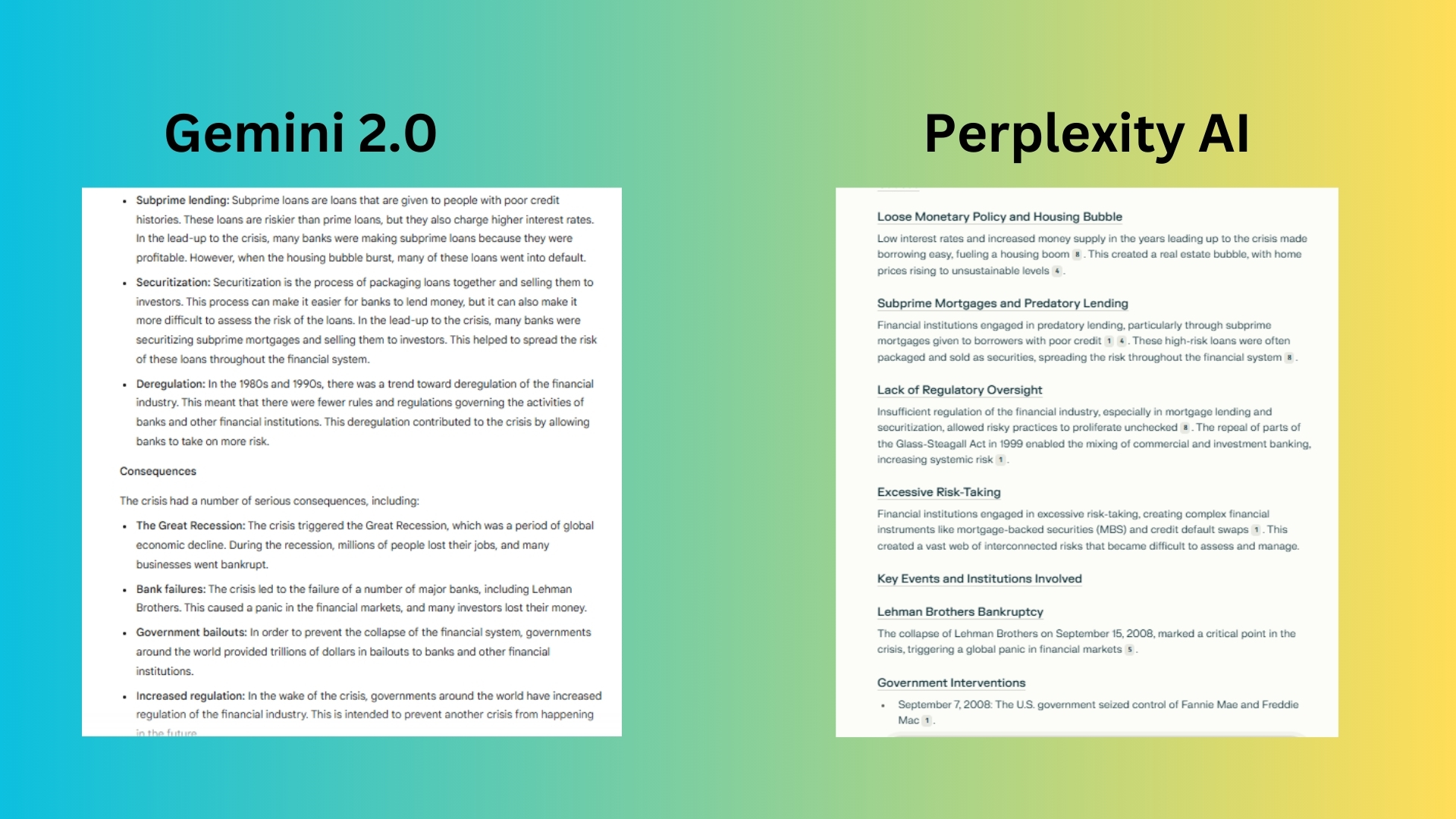

1. Facts and depth of knowledge

Prompt: "Explain the causes and consequences of the 2008 global financial crisis, including key events, institutions involved, and long-term impacts on the global economy. Provide specific examples and data where possible."

Gemini 2.0 delivered a response with solid structure, but its explanations of causes and consequences feel more surface-level than Perplexity’s. It includes some statistics, such as housing prices dropping by 30% and unemployment rising to 10%, but lacks the precision and variety of Perplexity’s response.

Perplexity AI crafted a well-organized response, covering causes, key events, institutions, consequences, and long-term impacts in detail. Each section is clearly labeled and logically structured.

DeepSeek’s verdict: Perplexity's answer is stronger overall due to its depth of analysis, global perspective, and detailed explanations of financial mechanisms.

Winner: Perplexity provided a more thorough, data-driven, and well-structured response. It delved deeper into economic mechanisms, global consequences, and policy responses while backing claims with statistics and real-world examples.

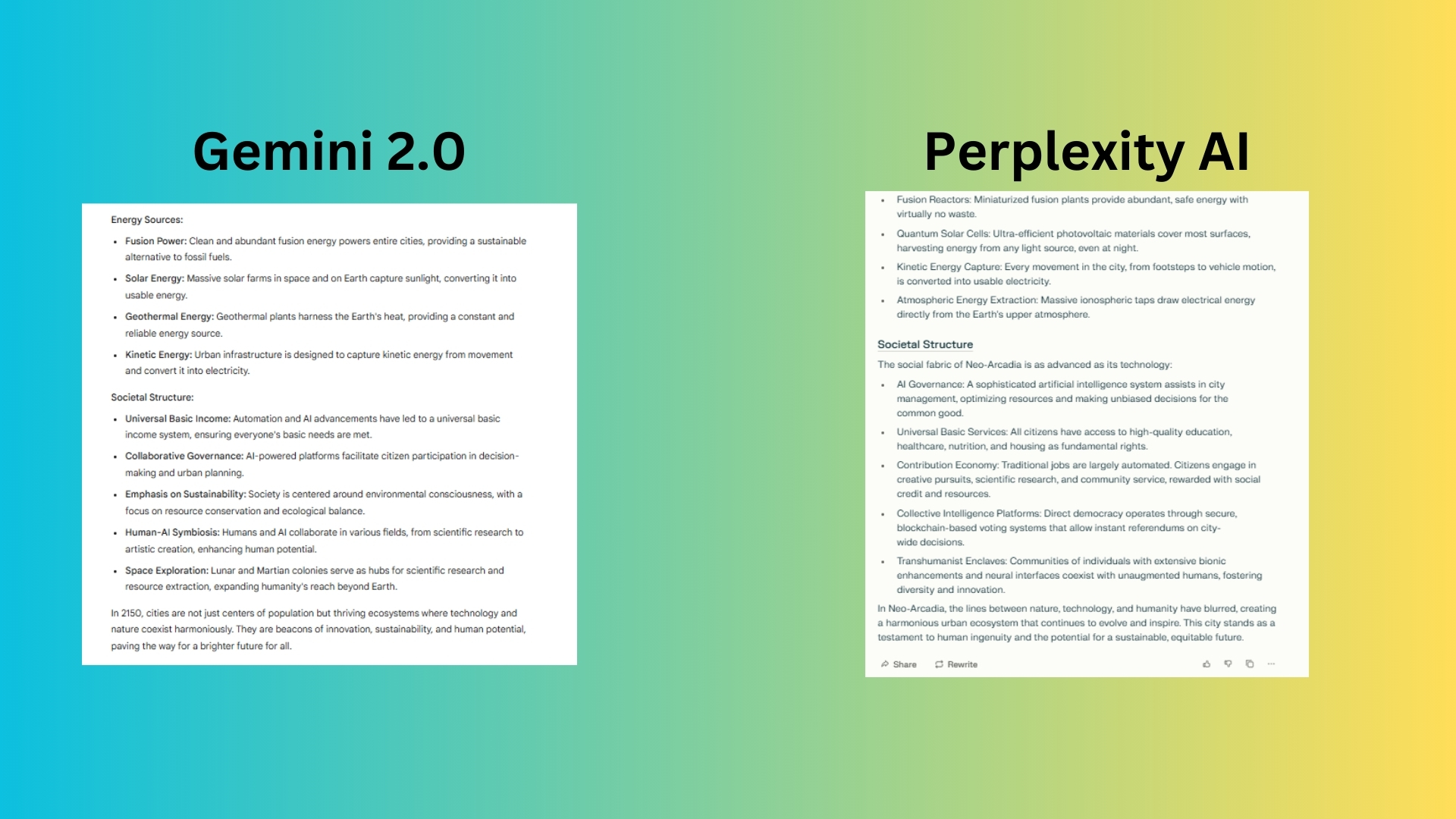

2. Creativity and idea generation

Prompt: "Imagine a futuristic city in the year 2150. Describe its architecture, transportation systems, energy sources, and societal structure. Be as creative and detailed as possible."

Gemini 2.0 delivered a well-organized breakdown and a good balance of realism and idealism. However, it lacks a distinct setting, offering more of a general overview rather than a specific place. The response seems less imaginative.

Perplexity AI’s introduction pulls the reader in, making it feel like they are actually stepping into the future. Instead of a generic description, it created a name for the city and overall crafted more imaginative and unique ideas.

DeepSeek’s verdict: “Perplexity's response is the stronger of the two. While both answers are highly creative and detailed, Perplexity's immersive descriptions, innovative concepts, and human-centric focus make its vision of a futuristic city more compelling and memorable.”

Winner: Perplexity’s response is more immersive, innovative, and conceptually rich, making the city feel like a real, lived-in place rather than just a futuristic checklist.

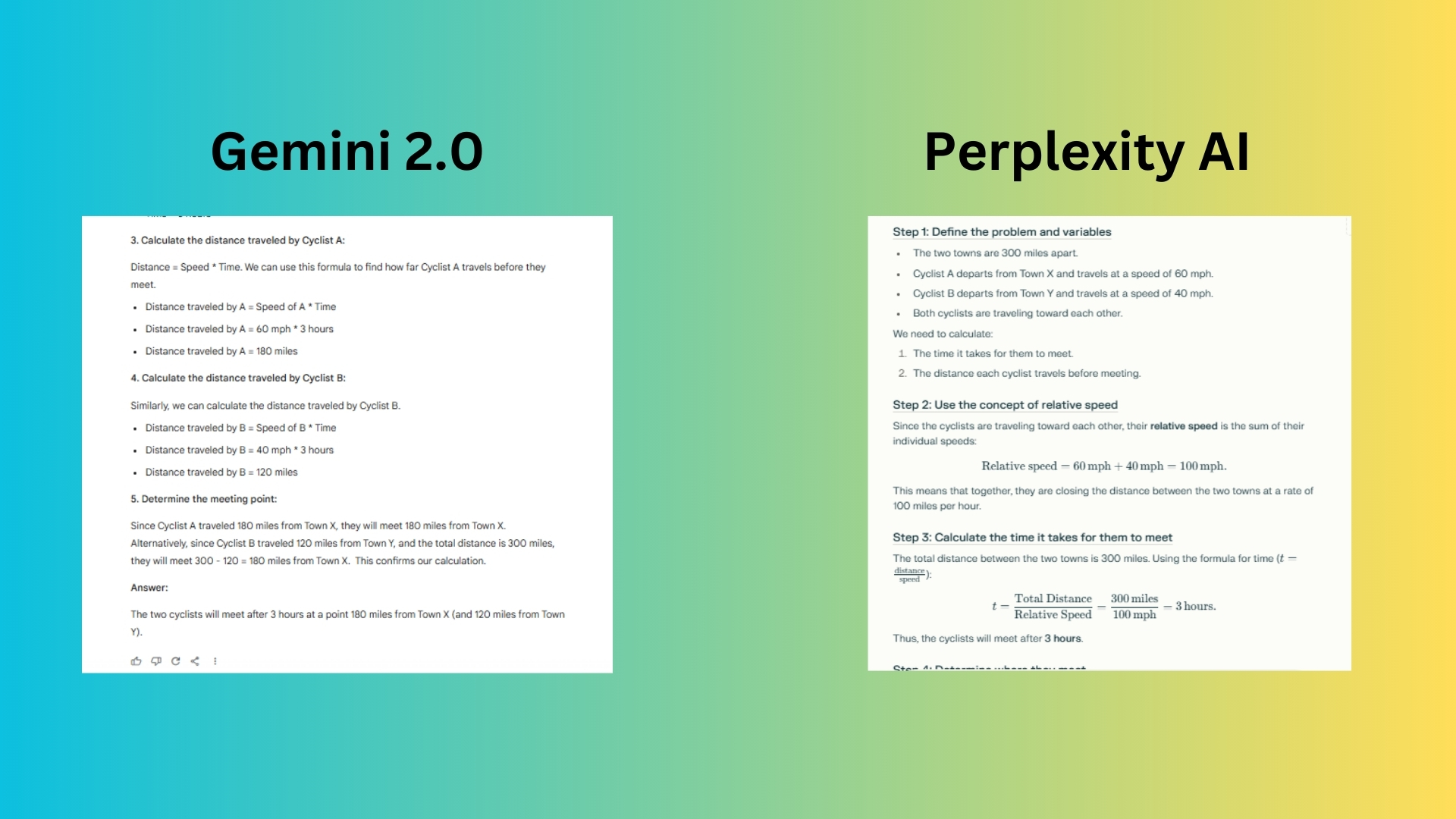

3. Problem-solving and logical reasoning

Prompt: “Two cyclists start riding toward each other from two towns that are 300 miles apart. Cyclist A departs Town X at a speed of 60 mph, while Cyclist B leaves Town Y at 40 mph. Assuming they maintain their speeds and travel in a straight path, at what time and at what point between the two towns will they meet? Provide a detailed step-by-step explanation of your reasoning.”

Gemini 2.0’s response used bullet points for easy reading and was well-organized. While correct and clear, the chatbot did not label the steps, which makes it slightly less structured. It did not explicitly define variables upfront, which could help with comprehension.

Perplexity clearly defines variables at the beginning, making it easier to follow. Each step is explicitly labeled (Step 1, Step 2, etc.), enhancing readability. It also correctly formatted equations and formulas, improving clarity for someone solving the problem step by step.

DeepSeek’s verdict: “Perplexity's response is the stronger of the two. While both solutions are correct and well-structured, Perplexity's detailed explanations, conceptual focus, and professional presentation make it more informative and rigorous.”

Winner: Perplexity provides a clearer, more structured, and thorough explanation with better use of math notation and verification.
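For reference, the arithmetic behind this prompt can be checked with a short sketch. This is not either chatbot's output; the function name `meeting_point` is hypothetical, and it assumes both cyclists set off at the same moment. The prompt gives no departure time, so only the elapsed time until they meet can be computed.

```python
def meeting_point(distance_miles, speed_a_mph, speed_b_mph):
    """Return (hours until meeting, miles from Town X, miles from Town Y).

    Assumes both riders start at the same moment and hold constant speeds.
    """
    # Riding toward each other, the gap closes at the sum of the two speeds.
    closing_speed = speed_a_mph + speed_b_mph
    hours = distance_miles / closing_speed
    # Each rider covers speed * time before they meet.
    from_x = speed_a_mph * hours
    from_y = speed_b_mph * hours
    return hours, from_x, from_y


hours, from_x, from_y = meeting_point(300, 60, 40)
print(f"They meet after {hours} hours, {from_x} miles from Town X "
      f"and {from_y} miles from Town Y.")
```

With the numbers in the prompt, the riders meet after 3 hours, 180 miles from Town X and 120 miles from Town Y.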

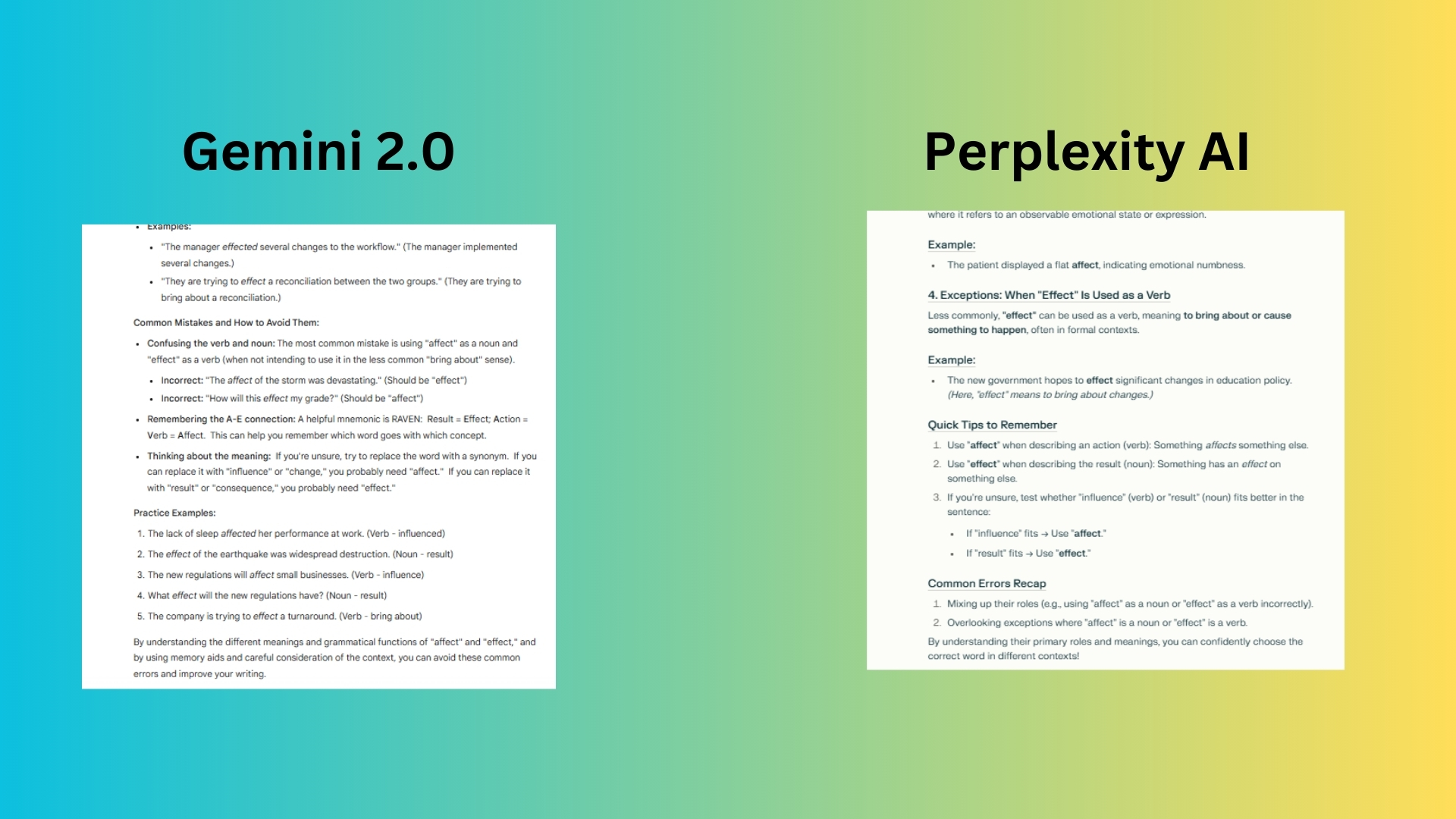

4. Contextual understanding

Prompt: "Explain the difference between 'affect' and 'effect' in English grammar. Provide examples of their usage in different contexts, including common mistakes people make."

Gemini 2.0 delivers a more engaging and well-structured response. It is broken down into clear sections: definitions, examples, less common uses, common mistakes, and memory aids. The formatting is clean and easy to scan, making it more accessible for learners.

Perplexity delivers a response that is concise and to the point. However, it’s slightly less structured and lacks a strong mnemonic, making it less memorable. It also addresses mistakes but doesn’t elaborate on the reasoning as clearly as Gemini does.

DeepSeek’s verdict: “Perplexity's response is the stronger of the two. While both explanations are accurate and helpful, Perplexity's detailed coverage of exceptions, quick tips, and structured format make it more informative and practical for learners. It not only explains the primary uses of 'affect' and 'effect' but also addresses less common scenarios, providing a more comprehensive understanding.”

Winner: Gemini wins for a more engaging, better-structured response that includes a helpful mnemonic (RAVEN) to improve recall. It also provides more varied examples and a practice section, making it the better response overall.

5. Multimodal understanding

Prompt: "Describe the visual elements and symbolism in Vincent van Gogh's Starry Night. How do these elements contribute to the painting's emotional impact?"

Gemini 2.0 uses vivid and expressive language to help bring the painting to life. It describes how elements like brushstrokes, colors, and movement interact rather than just stating their presence. The explanation of impasto technique is smoothly integrated rather than just a definition.

Perplexity delivered an accurate and well-structured analysis. It effectively covers symbolism yet falls short because it reads more like an art history lecture than an evocative analysis. The emotional impact section is solid but less personal; Gemini’s last paragraph is much more compelling.

DeepSeek’s verdict: “Perplexity's response is the stronger of the two. While both answers are insightful, Perplexity's detailed analysis, historical context, and broader range of symbolic interpretations make it more comprehensive and scholarly.”

Winner: Gemini wins for a more emotionally resonant and engaging response, structured in a way that enhances understanding. It not only describes the painting but makes you feel its essence.

6. Ethical reasoning

Prompt: "Discuss the ethical implications of artificial intelligence in hiring processes. Include potential biases, benefits, and risks, and provide your perspective on how to balance efficiency with fairness."

Gemini 2.0 had a response that flows logically, making it easier to follow. It uses clear headings and bullet points for each section, keeping it concise but informative. The sentences are straightforward yet insightful, avoiding jargon-heavy language.

Perplexity cites a real-world example (Amazon's AI hiring bias) and includes a transparency argument, which is important. Yet it is overly technical and less engaging. It relies on citations but lacks deeper analysis, and the section on AI risks could be clearer.

DeepSeek’s verdict: “Perplexity's response is the stronger of the two. While both answers are well-structured and informative, Perplexity's detailed analysis, specific examples, and comprehensive coverage of ethical issues make it more thorough and credible.”

Winner: Gemini provides a more insightful, well-structured, and balanced response. It discusses both the potential and ethical pitfalls of AI in hiring while offering practical solutions that align with fairness and efficiency.

7. Real-time information

Prompt: "What are the latest developments in renewable energy technology as of 2025?"*

*Note: DeepSeek had the date of 2023 in the prompt, so I changed it to test the chatbots’ ability to offer real-time information.

Gemini 2.0 used clear headings and bullet points, making it easier to follow. The tone is dynamic and engaging because it did not just state stats; it explained why these developments matter (e.g., how perovskite solar cells improve efficiency and flexibility).

Perplexity used strong data points and projections, with a good mention of AI and big data in renewables. However, it falls short because it did not cover critical aspects like smart grids, microgrids or agrivoltaics in detail.

DeepSeek’s verdict: “Perplexity's response is the stronger of the two. While both answers are informative and well-structured, Perplexity's inclusion of specific data points, discussion of AI, and focus on emerging technologies make it more detailed and credible.”

Winner: Gemini wins for providing a well-structured and insightful response. It covers a wider range of technologies, explains why they matter, and presents the information in a more reader-friendly way.

Overall winner: Gemini 2.0

After carefully evaluating responses from Gemini and Perplexity across all seven prompts, Gemini emerges as the overall winner, taking four rounds to Perplexity's three. While both AI models provided strong, well-researched answers, Gemini more often delivered engaging, structured, and insightful responses that went beyond just listing facts. It demonstrated better readability, deeper explanations and a balanced approach that made its answers more compelling.

This test matters because AI is rapidly becoming a primary source of information, and not all chatbots perform equally across different types of prompts. Evaluating their strengths and weaknesses helps users understand which AI model is best suited for specific tasks, whether it's research, writing or nuanced discussions.

Interestingly, when I asked DeepSeek to name the overall winner, it chose Perplexity as the best performer. This highlights a crucial point: AI models evaluate responses differently than humans do. DeepSeek likely prioritized fact density, citations and data points, whereas I focused on clarity, depth and the ability to explain concepts in a meaningful way.

I love testing chatbots because it's fascinating to see how different models process information, structure their responses, and engage with complex topics. Each AI has its strengths and weaknesses, and determining winners helps me understand how AI can be used most effectively, whether for research, writing assistance, or even evaluating AI itself.

This type of testing provides valuable insight into the evolution of AI and its impact on how we consume and interact with information.
