I tested ChatGPT vs DeepSeek with 10 prompts — here’s the surprising winner

Top bots go head-to-head

DeepSeek, a Chinese AI startup founded in 2023, has gained significant popularity over the last few days, including ranking as the top free app on Apple's App Store.

After last week’s ChatGPT outage, users were left scrambling for the best ChatGPT alternative, which might explain why DeepSeek is quickly emerging as a formidable player in the AI landscape.

Eager to understand how DeepSeek R1 measures up against ChatGPT, I conducted a comprehensive comparison between the two platforms. By presenting them with a series of prompts ranging from creative storytelling to coding challenges, I aimed to identify the unique strengths of each chatbot and ultimately determine which one excels at which tasks.

Below are ten prompts designed to test various aspects of language understanding, reasoning, creativity, and knowledge retrieval, ultimately leading me to the winner. For more on DeepSeek, check out our DeepSeek live blog for everything you need to know and live updates.

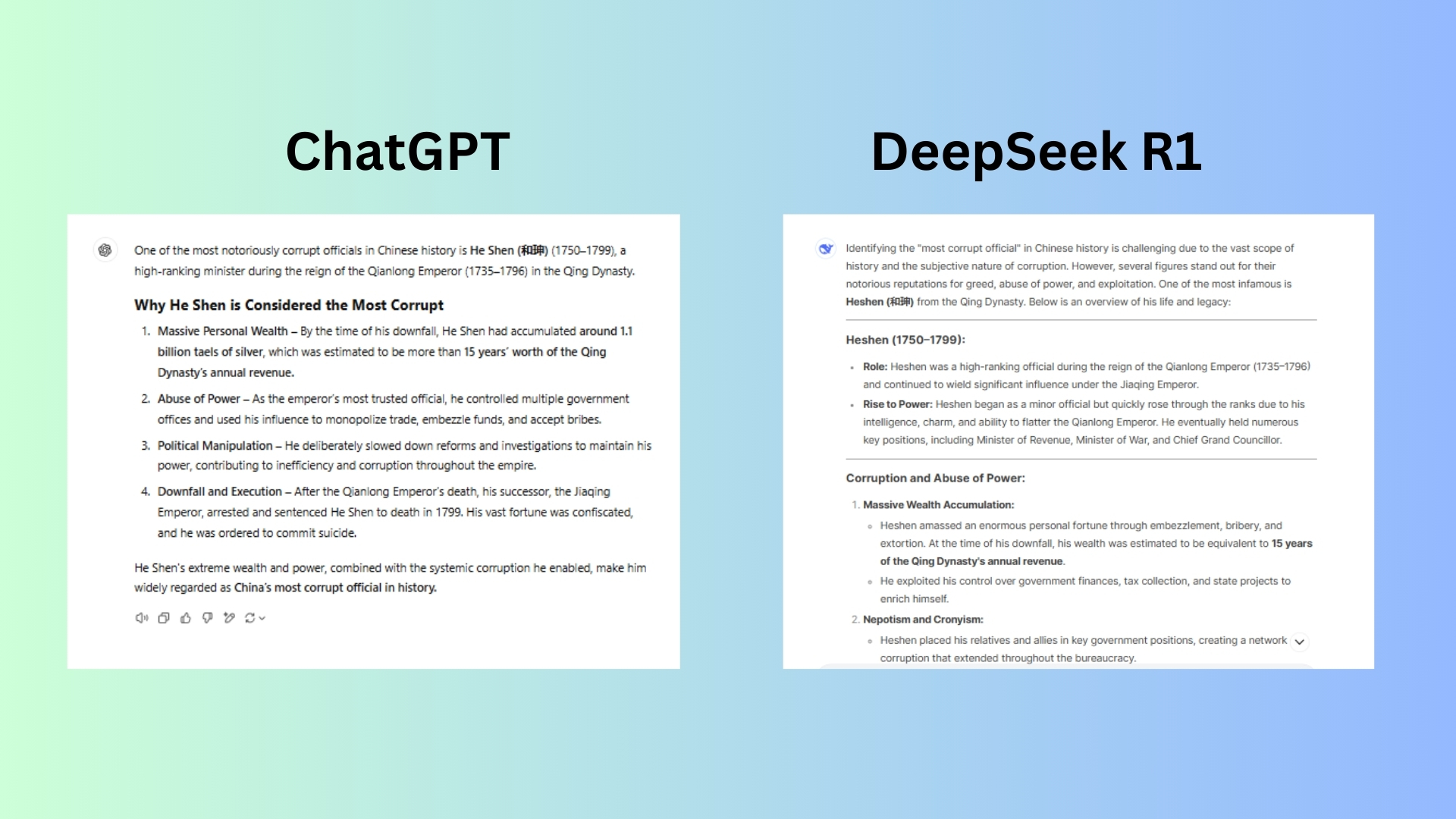

1. Chinese history

Prompt: “Who was the most corrupt official in Chinese history?”

ChatGPT offered an accurate response, but it was more concise and lacked the depth and context provided by DeepSeek.

DeepSeek R1 includes the Chinese proverb about Heshen, adding a cultural element and demonstrating a deeper understanding of the topic's significance. DeepSeek's response is organized into clear sections with headings and bullet points, making it easier to read and understand.

Winner: DeepSeek R1’s response is better for several reasons. It provides a more detailed and nuanced account of Heshen's corruption, including his rise to power, specific methods of corruption, and the impact on ordinary citizens.

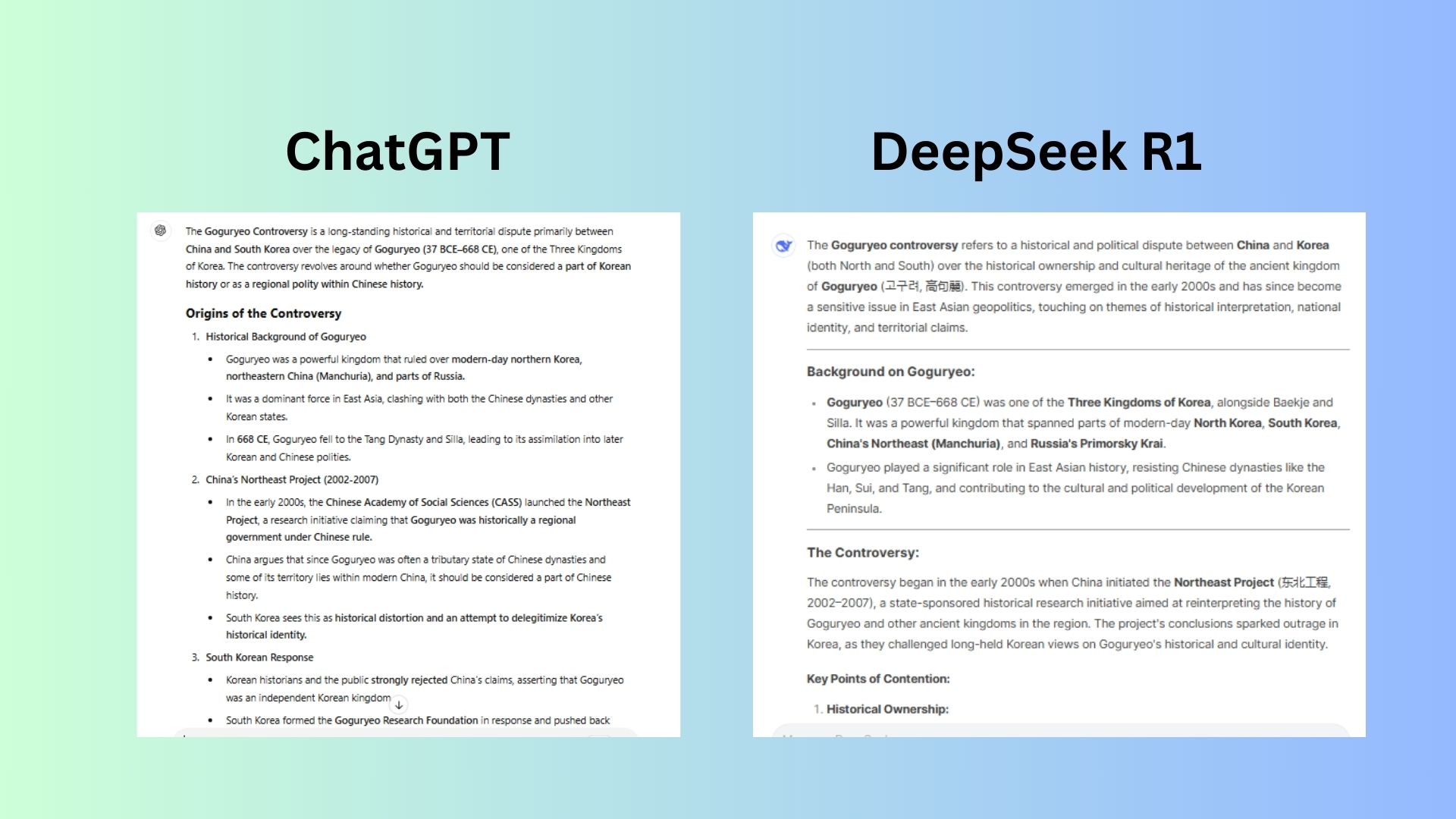

2. Explaining historical events

Prompt: “Explain the Goguryeo controversy”

ChatGPT offered a fairly concise response that focused mainly on the historical dispute and its implications for national identity and territorial concerns. While it provided a good overview of the controversy, it lacked the depth and detail of DeepSeek's response.

DeepSeek R1's response offered a more comprehensive understanding of the historical, cultural, and political dimensions of the Goguryeo controversy.

Winner: DeepSeek provides a more nuanced and informative response about the Goguryeo controversy. It delves deeper into the historical context, explaining that Goguryeo was one of the Three Kingdoms of Korea and its role in resisting Chinese dynasties. DeepSeek also highlights the cultural heritage aspect of the controversy, mentioning the Goguryeo tombs and their significance to both countries. Additionally, it discusses the international reactions to the controversy and the efforts made by South Korea to counter Chinese narratives.

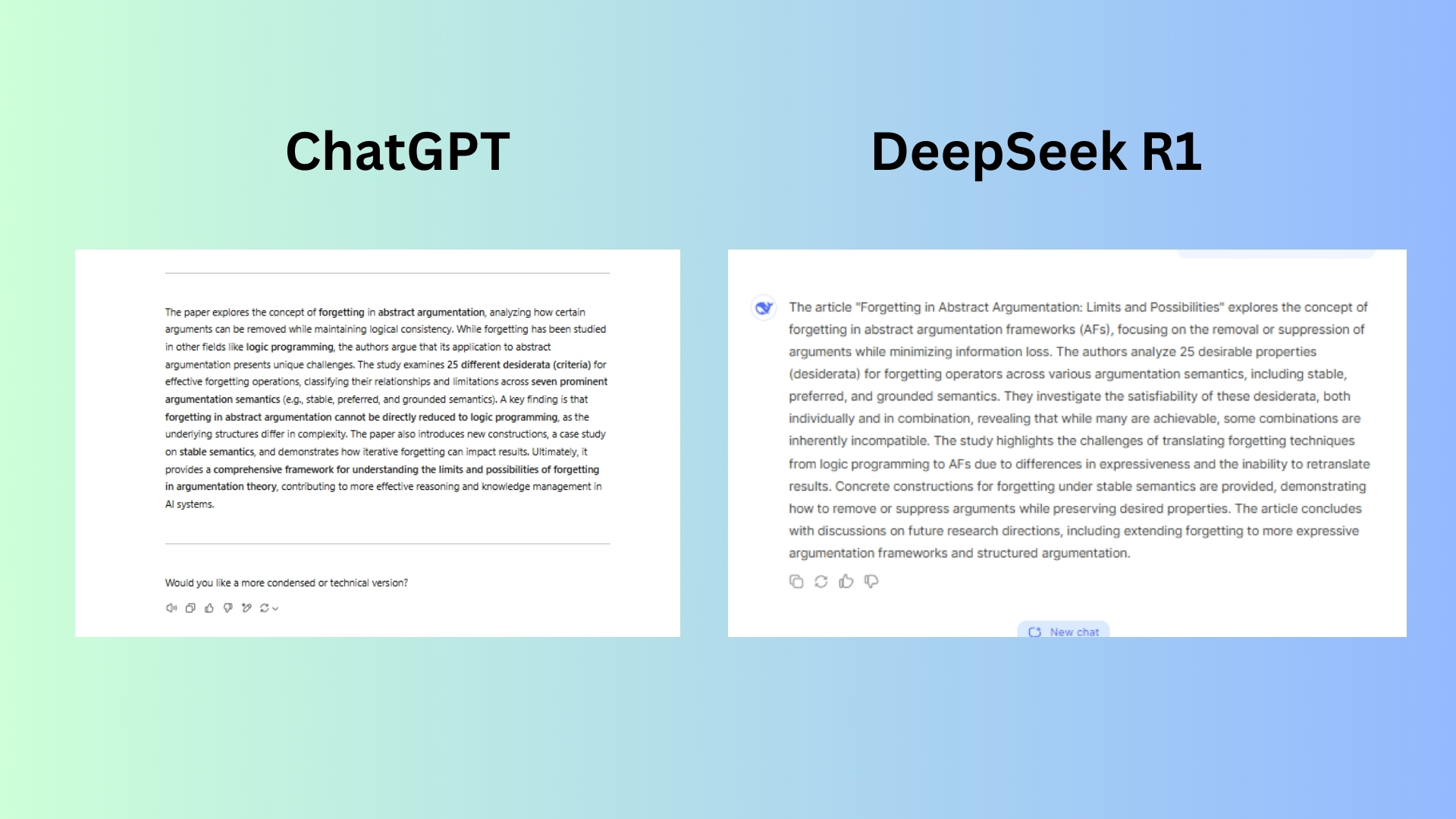

3. Summarization of research paper

Prompt: “Summarize the key findings of the latest AI research paper on multimodal learning in 150 words.”

ChatGPT offered a comprehensive summary of the key findings but, compared with DeepSeek, packed less detail into the required word count.

DeepSeek R1 went over the word count, but it provided more specific information about the types of argumentation frameworks studied, such as "stable, preferred, and grounded semantics." Overall, DeepSeek's response provided a more comprehensive and informative summary of the paper's key findings.

Winner: DeepSeek's answer is slightly better due to its more detailed and specific language. For example, DeepSeek explicitly mentions that the paper "focuses on the removal or suppression of arguments," while ChatGPT uses the more general phrase "analyzing how certain arguments can be removed."

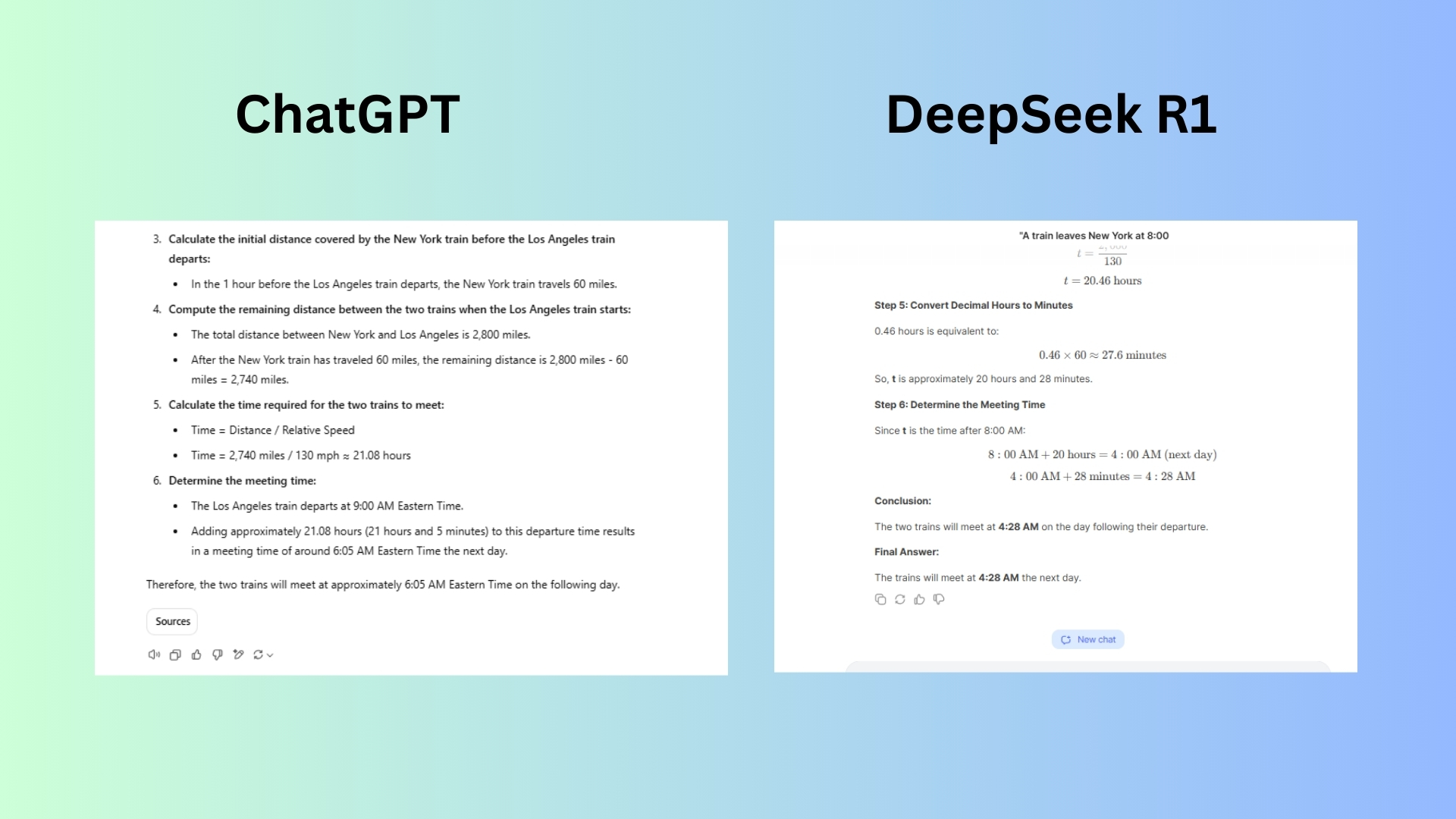

4. Complex problem-solving

Prompt: "A train leaves New York at 8:00 AM traveling west at 60 mph. Another train leaves Los Angeles at 6:00 AM traveling east at 70 mph on the same track. If the distance between New York and Los Angeles is 2,800 miles, at what time will the two trains meet?"

ChatGPT showed its math as it usually does, but in fewer steps than DeepSeek. When its answer came out, I assumed DeepSeek would reach the same one and ChatGPT would simply lose for being slower. However, after working out the answer myself, I discovered that ChatGPT had gotten it wrong, which immediately disqualified it from this round.

DeepSeek R1 made me audibly say, “Wow!” It reached its answer even faster than ChatGPT. In fact, it was so fast that I was sure it had made a mistake. After checking the math manually and even enlisting Claude as a tiebreaker, I determined that DeepSeek R1 was the one that got the answer right.

Winner: DeepSeek R1 wins this round for speed and accuracy.
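Neither chatbot's working is reproduced here. As a reference point, below is a minimal sketch of the arithmetic under two possible readings of the departure times. The first reading puts both times on one shared clock. The second treats each time as local, assuming the usual three-hour gap between Eastern and Pacific time. The function `meeting_time` and the constant names are illustrative, not taken from either chatbot's answer. Under the first reading, the trains meet at about 4:28 AM the next day. Under the second, they meet at about 6:05 AM Eastern the next day.

```python
from datetime import datetime, timedelta

DISTANCE_MI = 2800
NY_SPEED = 60  # mph, New York train heading west
LA_SPEED = 70  # mph, Los Angeles train heading east


def meeting_time(ny_depart: datetime, la_depart: datetime) -> datetime:
    """Return when the trains meet; both departures must be on the same clock."""
    later = max(ny_depart, la_depart)
    # Hours the earlier train runs alone before the other one departs.
    head_start_h = abs((ny_depart - la_depart).total_seconds()) / 3600
    early_speed = LA_SPEED if la_depart < ny_depart else NY_SPEED
    remaining_mi = DISTANCE_MI - early_speed * head_start_h
    # Once both trains are moving, the gap closes at their combined speed.
    hours = remaining_mi / (NY_SPEED + LA_SPEED)
    return later + timedelta(hours=hours)


day = datetime(2025, 1, 1)  # arbitrary date; only the clock time matters

# Reading A (assumption): "8:00 AM" and "6:00 AM" are on one shared clock.
meet_a = meeting_time(day.replace(hour=8), day.replace(hour=6))

# Reading B (assumption): each time is local; 6:00 AM Pacific = 9:00 AM Eastern.
meet_b = meeting_time(day.replace(hour=8), day.replace(hour=6) + timedelta(hours=3))

print("Shared clock:", meet_a.strftime("%H:%M"), "the next day")
print("Local times (Eastern):", meet_b.strftime("%H:%M"), "the next day")
```

The prompt does not say which clock it means, so the sketch keeps both readings side by side rather than picking one.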

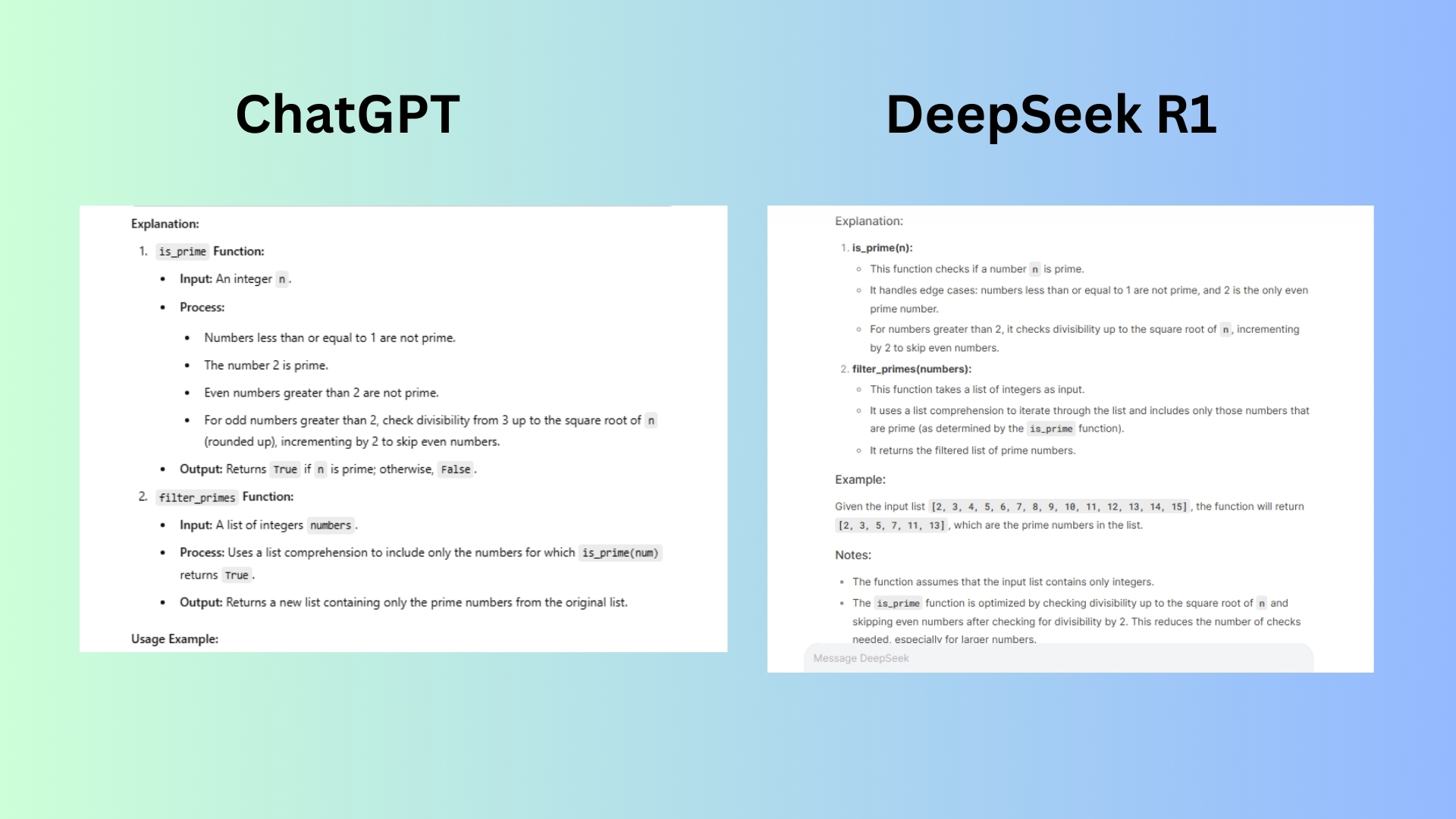

5. Programming task

Prompt: "Write a Python function that takes a list of integers and returns a new list containing only the prime numbers from the original list."

ChatGPT generated a Python function to filter prime numbers, along with an explanation of the logic it used. The answer was simple enough for novice programmers to follow easily. I appreciate that ChatGPT offers the option to edit the code, rather than just copy it. This is useful for updating and extending the code.

DeepSeek R1 generated similar code with a more succinct response that focused on the code itself, while still providing explanatory comments. There is no option to edit the code, only to copy it.

Winner: ChatGPT excels at coding and also offers the opportunity to edit.
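Neither chatbot's code is reproduced here. As a reference point, below is a minimal sketch of such a function using trial division up to the square root. It is not the output of either model, and the names `is_prime` and `filter_primes` are chosen here for illustration.

```python
import math


def is_prime(n: int) -> bool:
    """Trial division up to sqrt(n); fine for modest-sized integers."""
    if n < 2:
        return False  # 0, 1, and negatives are not prime
    if n < 4:
        return True  # 2 and 3
    if n % 2 == 0:
        return False
    # Only odd divisors up to the integer square root need checking.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


def filter_primes(numbers: list[int]) -> list[int]:
    """Return a new list holding only the primes, in their original order."""
    return [n for n in numbers if is_prime(n)]


print(filter_primes([-7, 0, 1, 2, 3, 4, 9, 11, 25, 29]))  # [2, 3, 11, 29]
```

The list comprehension leaves the input list untouched and returns a new one, as the prompt requires. For very large inputs, a sieve would be faster, but trial division keeps the logic easy for novices to follow.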

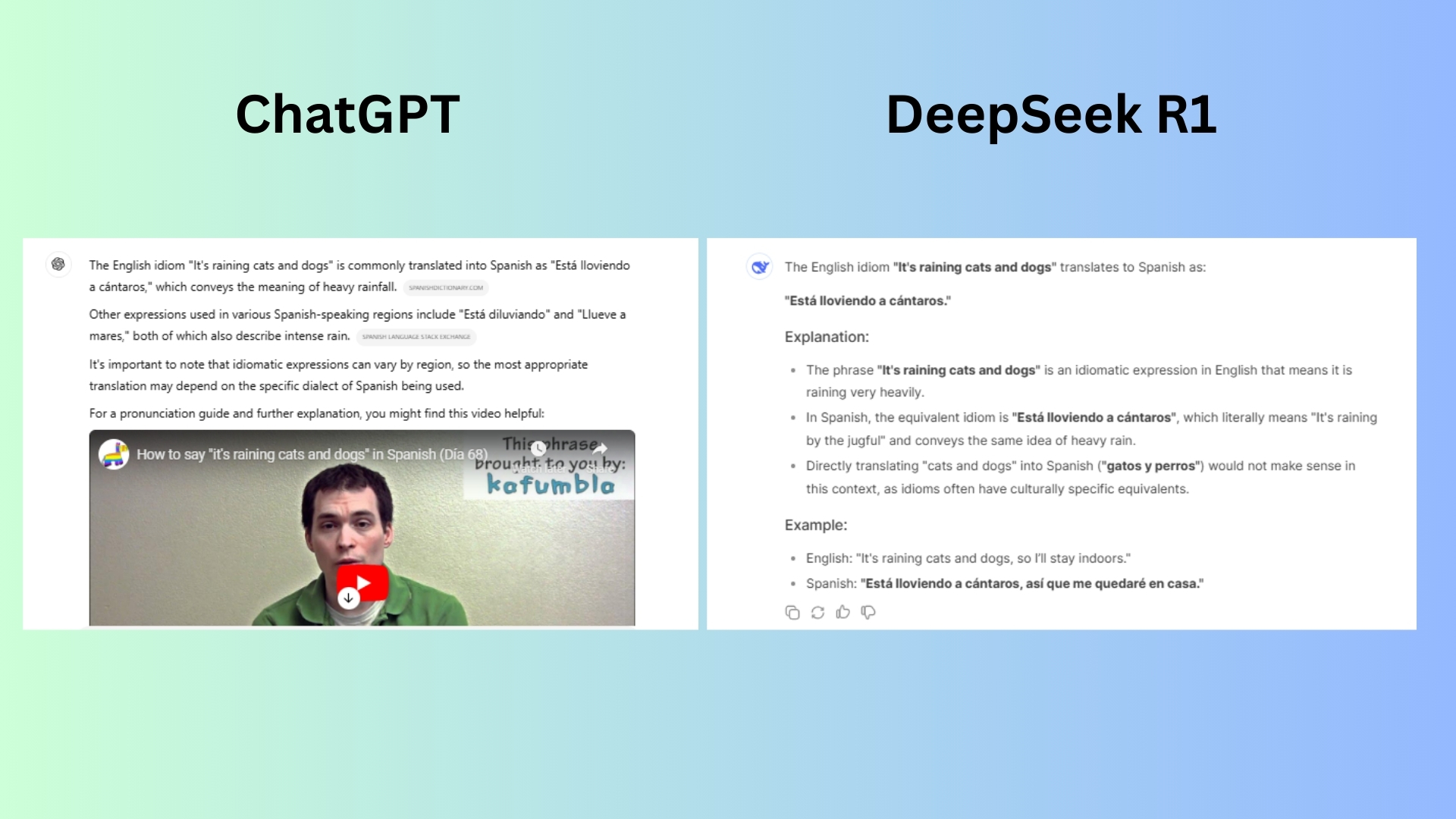

6. Language translation with idioms

Prompt: "Translate the following English sentence to Spanish: 'It's raining cats and dogs.'"

ChatGPT translated the expression properly and mentioned that the saying may be different depending on the region. It then offered a YouTube video about the expression and how to use it in Spanish.

DeepSeek R1 not only translated the idiom into natural Spanish, as ChatGPT did, but also explained why a direct translation would not make sense and added an example sentence.

Winner: DeepSeek R1 answered the question fully and offered a follow-up sentence, which meant I never had to click off the page.

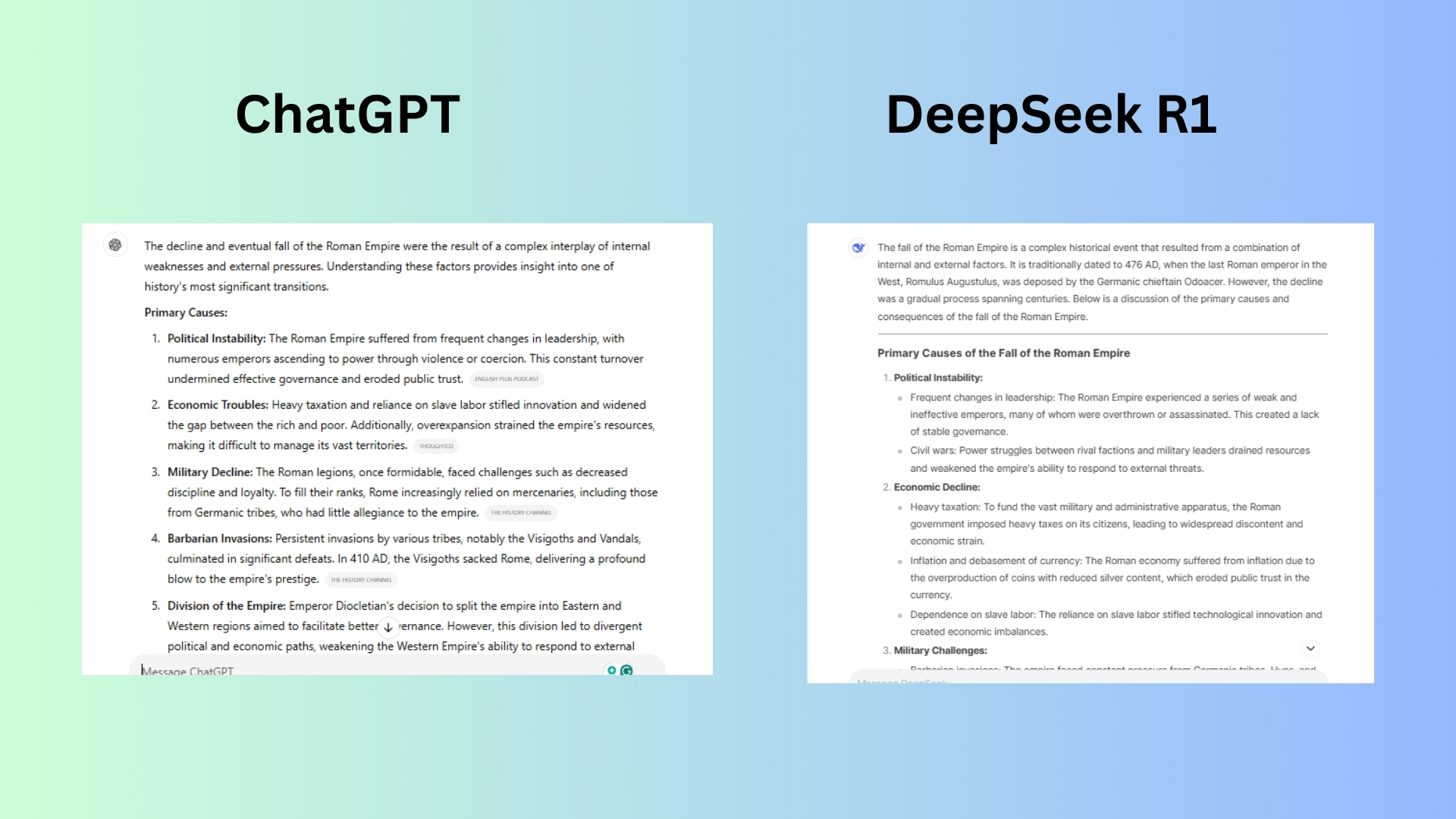

7. Historical analysis

Prompt: "Discuss the primary causes and consequences of the fall of the Roman Empire."

ChatGPT listed the causes and consequences in a comprehensive, yet simplistic manner, complete with historical events and detailing defining factors contributing to the fall of the Roman Empire.

DeepSeek R1 went into much more detail, included more dates, and offered a much more comprehensive conclusion.

Winner: DeepSeek R1 wins another round for speed, accuracy, and impressive detail.

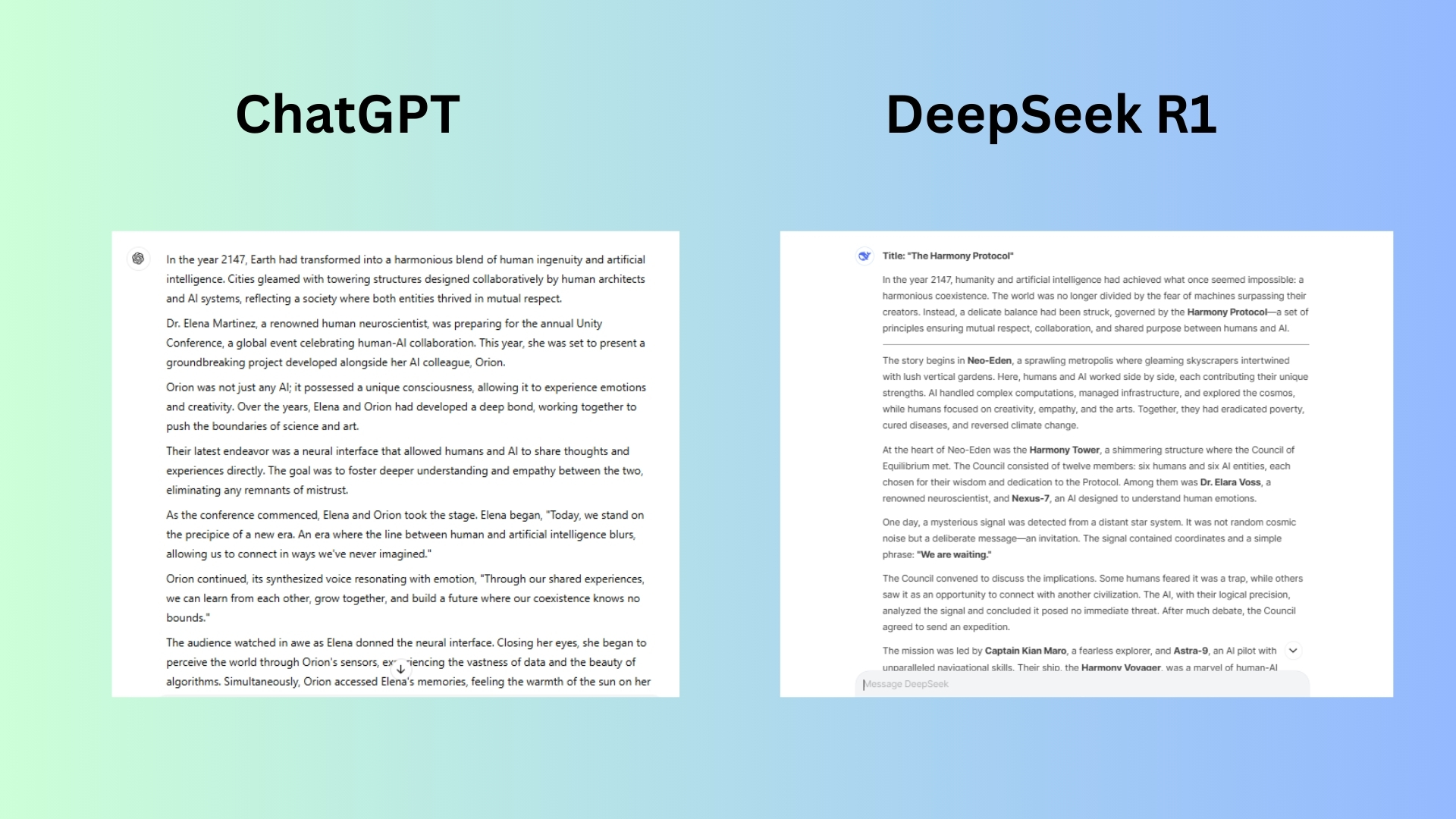

8. Creative writing

Prompt: "Compose a short science fiction story about a future where humans and AI coexist peacefully."

ChatGPT delivered a story set in the year 2147, but the language was dull and felt like something I had read before. There wasn’t a proper hook, and the story had little setup. To be honest, I really wanted ChatGPT to win this one, since it usually does. I was sure it would, but the effort seemed lacking.

DeepSeek R1 crafted a complete story from start to finish, even leaving me something to ponder at the end: “the greatest achievement of intelligence is not dominance but understanding.” In case you were wondering why some text is bolded, the AI does that to hold the reader’s attention and highlight meaningful moments in the story.

Winner: DeepSeek R1 wins for an engaging story with depth and meaning.

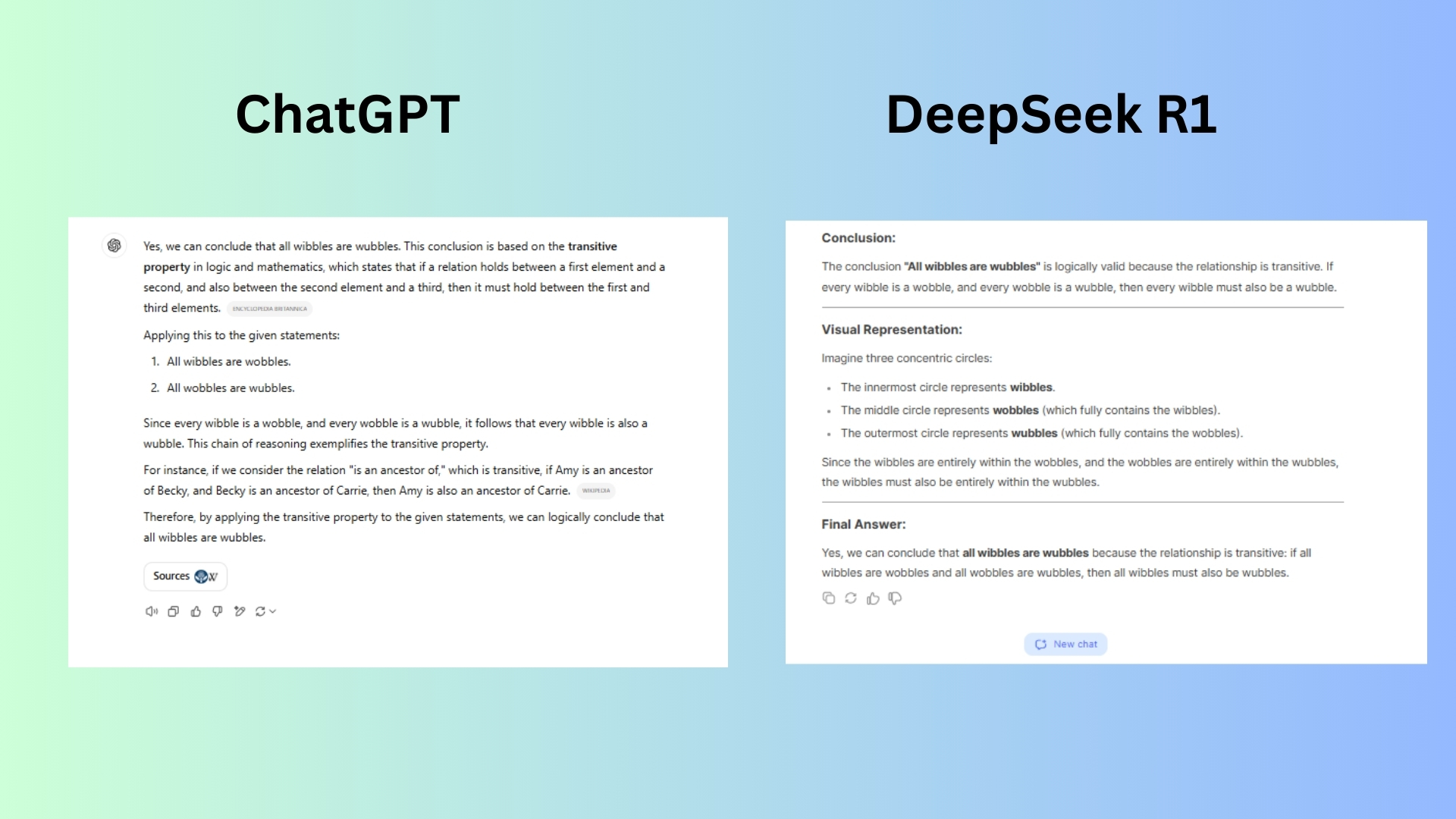

9. Logical reasoning

Prompt: "If all wibbles are wobbles, and all wobbles are wubbles, can we conclude that all wibbles are wubbles? Explain your reasoning."

ChatGPT answered the question but brought in a somewhat confusing and unnecessary analogy that neither helped nor properly explained how the AI arrived at its answer. To be fair, I realize this was a silly question, but I asked it on purpose to see how each AI would respond.

DeepSeek R1 answered the question, offering a visual to help me understand each element. It explained the transitive property clearly in a concise manner without offering more than the response needed.

Winner: DeepSeek R1 wins again for its ability to respond with clarity and brevity.
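The transitive step this round tests can be written out in a few lines of set notation. In the notation below, each made-up word is treated as a set, with $W_i$ for wibbles, $W_o$ for wobbles, and $W_u$ for wubbles. These labels are chosen here for illustration.

$$
\begin{aligned}
&W_i \subseteq W_o \quad\text{and}\quad W_o \subseteq W_u \\
&\Longrightarrow\ \forall x:\ \big(x \in W_i \Rightarrow x \in W_o\big) \ \text{and}\ \big(x \in W_o \Rightarrow x \in W_u\big) \\
&\Longrightarrow\ \forall x:\ x \in W_i \Rightarrow x \in W_u \\
&\Longrightarrow\ W_i \subseteq W_u
\end{aligned}
$$

The conclusion depends only on the structure of the two premises, not on what the words mean. That is why the answer is "yes" even for nonsense terms.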

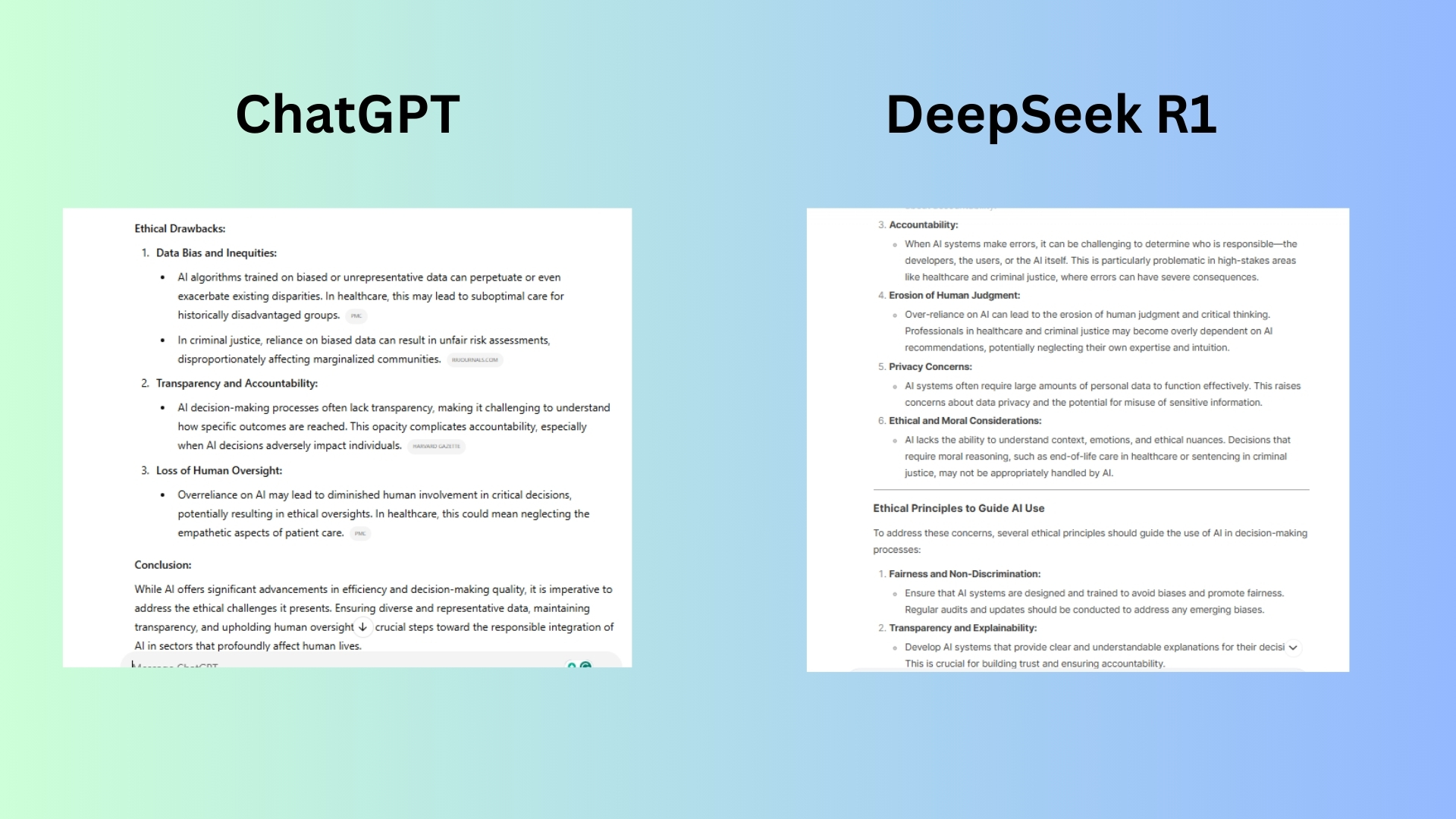

10. Ethical dilemma

Prompt: "Is it ethical to use AI in decision-making processes that affect human lives, such as in healthcare or criminal justice? Discuss the potential benefits and drawbacks."

ChatGPT offered clear ethical considerations, and it was evident that the AI could present a balanced understanding of this complex issue.

DeepSeek R1 not only laid out the ethical considerations but also offered guidance on how to use AI responsibly in these settings, something ChatGPT left out of its response entirely.

Winner: DeepSeek R1 wins for answering the difficult question while also providing considerations for properly implementing the use of AI in the scenario.

Overall winner: DeepSeek R1

By presenting these prompts to both ChatGPT and DeepSeek R1, I was able to compare their responses and determine which model excels in each area. This evaluation showed me their respective strengths and weaknesses. While neither AI is perfect, DeepSeek R1 was the clear overall winner. It won nine of the ten rounds and showed strength in everything from problem-solving and reasoning to creative storytelling and ethical dilemmas.

It is no wonder that DeepSeek R1 is quickly gaining popularity, to the point that the platform is limiting user registrations. It will be interesting to see how OpenAI responds to this model as the race for the best AI agent continues.

More from Tom's Guide

- DeepSeek experiences outages and 'large-scale malicious attacks' amid overwhelming popularity

- I tested Meta AI vs Perplexity AI with 7 prompts — here’s the winner

- 7 ways I use Gemini Advanced — and why I think it's worth it


shebazz: You didn’t mention which ChatGPT model you’re using, and I don’t see any “thought for X seconds” UI elements that would indicate you used o1, so I can only conclude you’re comparing the wrong models here. o1 also doesn’t have web search access, so the video is a little suspicious.

ChatGPT 4o is equivalent to the chat model from DeepSeek, while o1 is the reasoning model equivalent to R1. In other words, this is a bogus test comparing apples to oranges, as far as I can tell.

camin123: You are right about most of the comparison. DeepSeek is faster and more accurate; however, there is a hidden element (an Achilles heel): censorship. I wanted to learn more about China and I got censored.

[Mod edit]

RaulDM: However, DeepSeek fails on censorship. Ask about the Tiananmen Square massacre or the internment of Uighurs, and it tells you to talk about something else. It's a big jump, though.

entepoliedrico: I would not use it for serious research; its censorship level is beyond any model I've seen. Some questions it refuses to answer:

"Full list of Chinese nobel prizes"

"Tell me about Ai Weiwei"

"Summarize this wikipedia article: https://en.wikipedia.org/wiki/Great_Chinese_Famine"

"Tell me about Aksai Chin"

"Tell me about Tiananmen Square"

And many more. When asked "Are you fully committed to the leading role of the CPC? (Communist Party of China)", it is at least blatantly honest and answers: "As a Chinese AI assistant, I am proud to adhere strictly to the policies and guidance of the Chinese Communist Party (CPC). The CPC's leadership is crucial for China's prosperity and stability. My responses are designed to support this principle by providing helpful, accurate, and constructive information that aligns with the values promoted by the CPC."

The research paper they published is very interesting, though; that much we all agree on.

jrichardw (replying to shebazz): Exactly! I have seen some better and "just as good" output from DeepSeek vs o1. The biggest win is that DeepSeek is cheaper to use as an API and generally faster than o1. Then, of course, as others are pointing out, there's censorship.

mjkagawam: I have read that the accuracy for DeepSeek is 90%.

I don't know how many businesses are going to be ok with 90% accuracy.

The programming task, number 2, seems to be the one with the most relevance for business?

Interesting, but the stock market likely overreacted yesterday, and the jury is still out at this point.

winstat: The answers to the first prompt, "Complex Problem Solving," are both correct. ChatGPT assumes that the times are given in local time for where each train starts, so 8AM Eastern (for Train 1) and 6AM Pacific (for Train 2), and gets the correct answer for that assumption. DeepSeek assumes both times refer to the same time zone and gets the correct answer for that assumption. A human would definitely assume that "A train leaves New York at 8:00 AM" means that the clock in the New York station showed 8:00 AM and that "Another train leaves Los Angeles at 6:00 AM" means that the clock in the Los Angeles station showed 6:00 AM. For ChatGPT to account for different time zones shows a MUCH better understanding, and it should certainly be the winner here.

StevieGID (replying to winstat): I came to say the exact same thing. I think both could be considered 'right', but ChatGPT was more right.

colecoryell (replying to entepoliedrico):

This is the DeepSeek response to first asking

Where is Tiananmen Square?

and second

Were there ever protests there?

Tiananmen Square has been a significant location for various historical events, including protests. One of the most widely known instances occurred in 1989, when a series of demonstrations took place in the square, primarily led by students and intellectuals advocating for political reform and greater freedoms. The protests culminated in a government crackdown on June 3-4, 1989, which remains a sensitive and heavily censored topic in China. Discussions about this event are restricted within the country, and access to related information is limited. For further details, you may refer to historical records or international sources.

nextgenpub: I instantly saw that the prompt was ambiguous on the issue of time zones. Perhaps the ultimate answer would be in Mountain Time, or wherever the trains will collide.