I tested 8 AI chatbots for our first-ever AI Madness, and this is the surprise winner

From dark horse to top bot

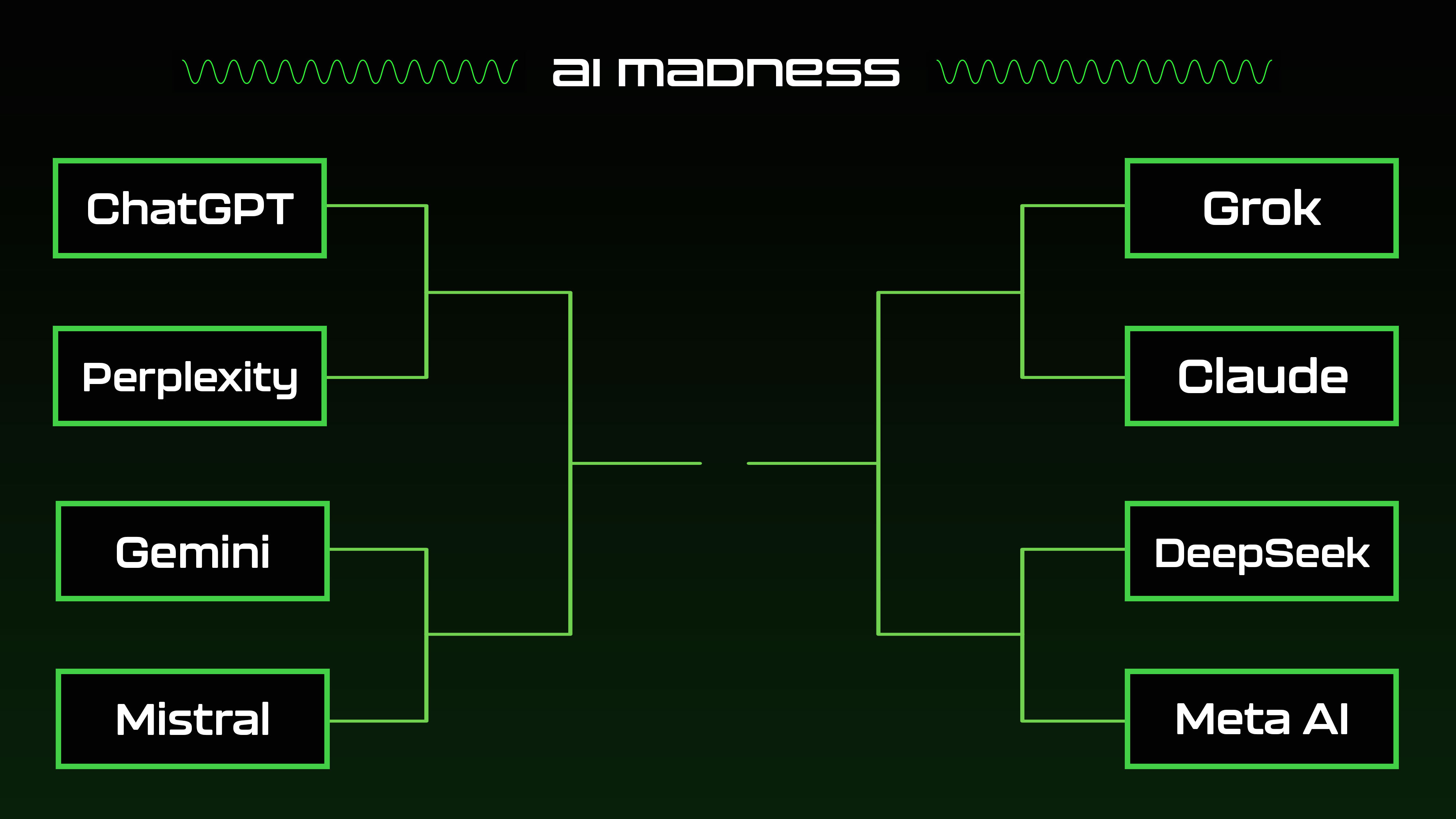

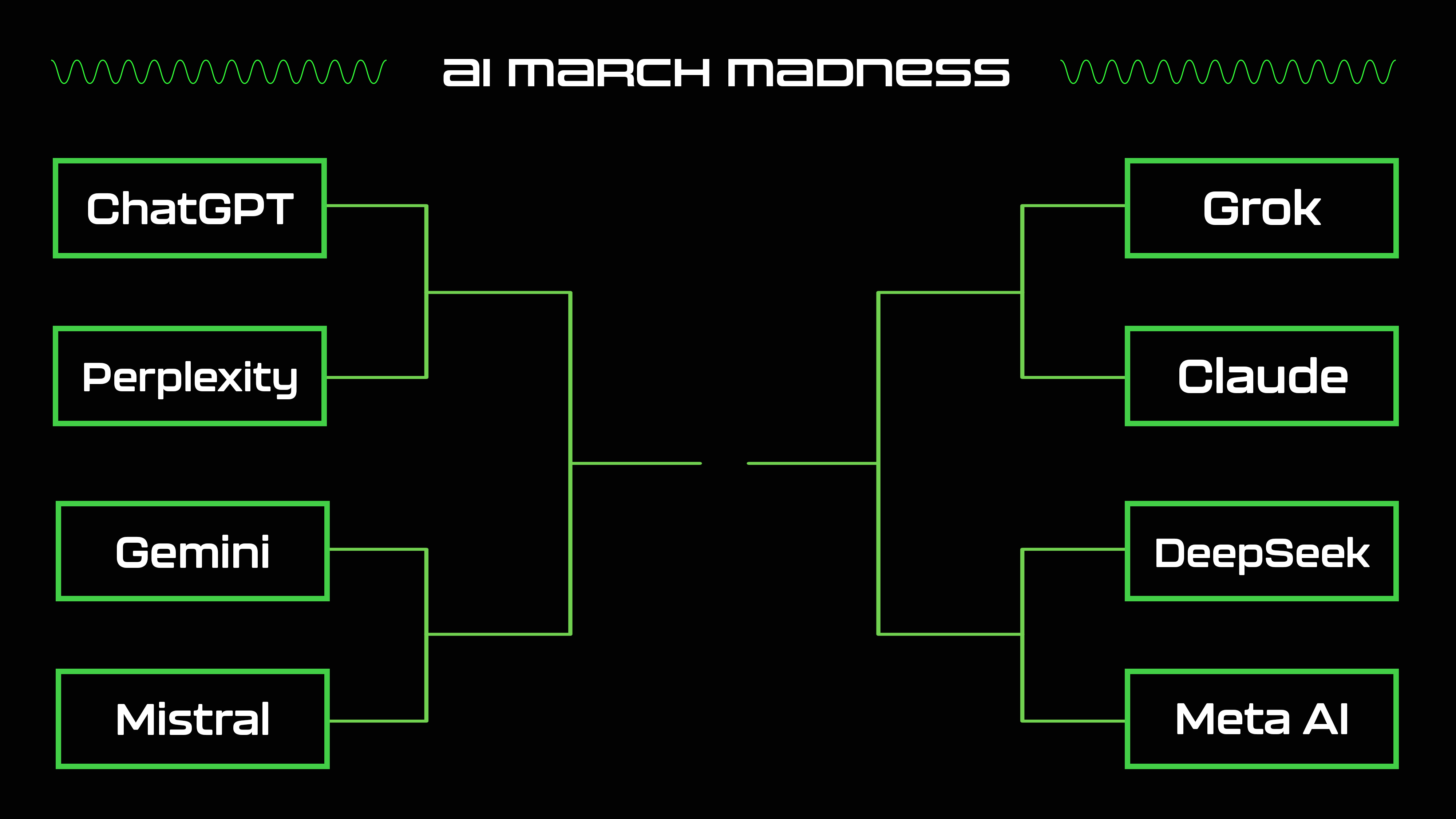

We turned up the heat here at Tom’s Guide with AI Madness, our first-ever bracket-style tournament pitting eight top chatbots against each other to crown the ultimate AI champ.

After weeks of intense matchups, jaw-dropping upsets and stellar showings, one bot rose above the rest: DeepSeek.

AI Madness threw eight leading AI chatbots into a single-elimination showdown. We judged the responses to each prompt on key factors such as accuracy, creativity, usefulness, multimodal skills, user experience, and interface quality.

From fact-checking to coding, storytelling to problem-solving, these bots got a full stress test. Here’s the breakdown.

Round 1

The action kicked off with ChatGPT vs. Perplexity. OpenAI’s chatbot swept the matchup, winning on every prompt. ChatGPT proved competent across every category we tested and was the stronger choice overall, excelling in particular at creativity, depth, and user-friendliness.

Next, Gemini took on Mistral with the same prompts and came out the overall winner thanks to clearer, better-organized, and more practical responses. Its answers were consistently more structured, engaging, and user-friendly across multiple categories.

Grok, meanwhile, caught us off guard by outwitting Claude, Anthropic’s thoughtful, reasoning-focused bot. Grok gave the more accurate, comprehensive and engaging answer on every prompt.

Finally, DeepSeek faced Meta AI and came out swinging, winning with versatile, creative responses that were more accurate, nuanced, and conversational than Meta AI’s.


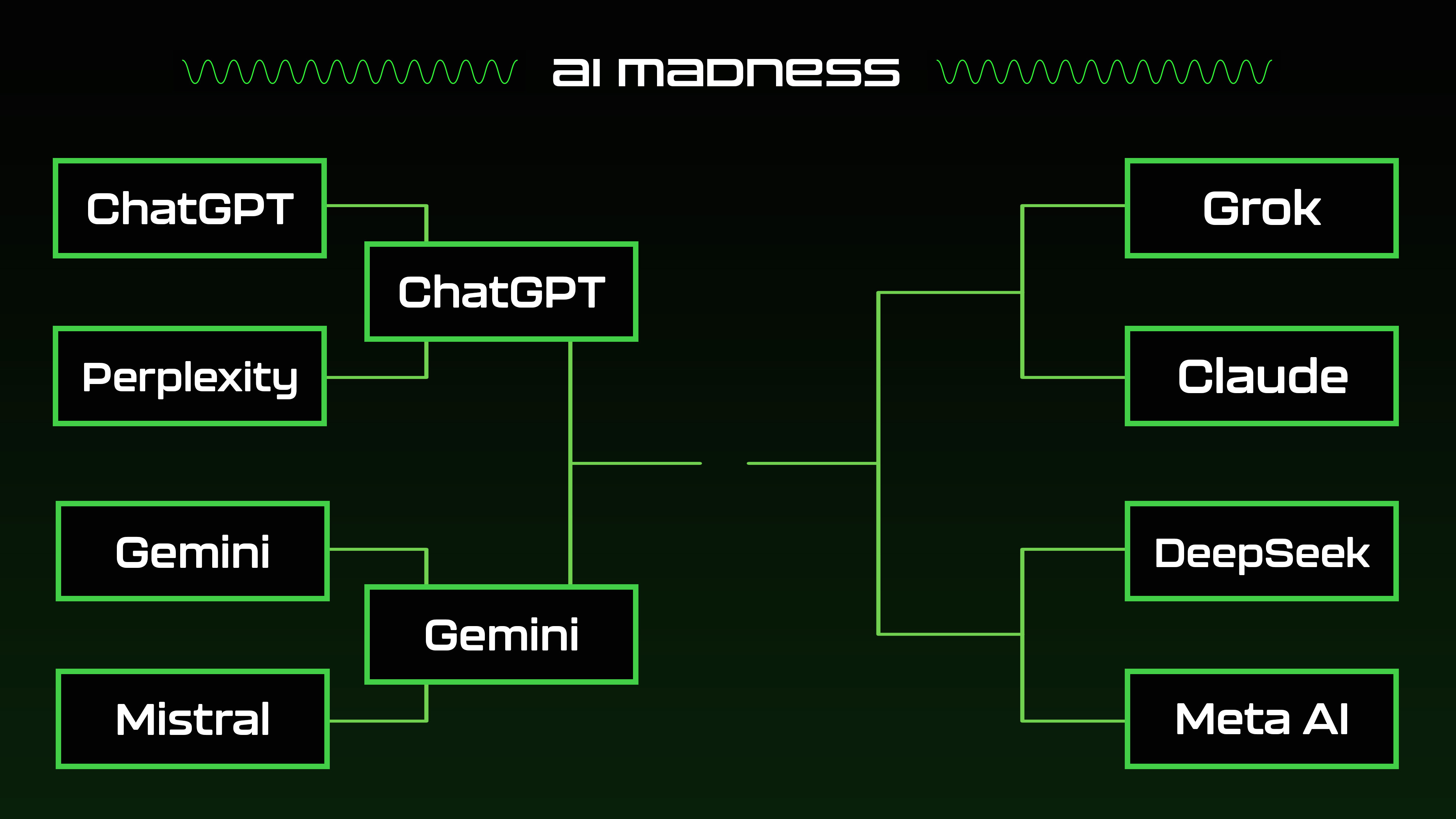

Semifinals

Round two delivered two epic battles: ChatGPT vs. Gemini and Grok vs. DeepSeek. Gemini outshone ChatGPT with tighter structure, clearer logic, and sharper reasoning.

Gemini's responses showcased its ability to adapt to diverse prompts, from database schema design to plant-based meal planning and ethical considerations in academic research.

In the other matchup, DeepSeek pulled off another stunner, matching Grok’s flair while adding stronger analysis and solid facts. While Grok excelled in conversational tone and storytelling, DeepSeek proved more reliable across academic, technical and instructional domains.

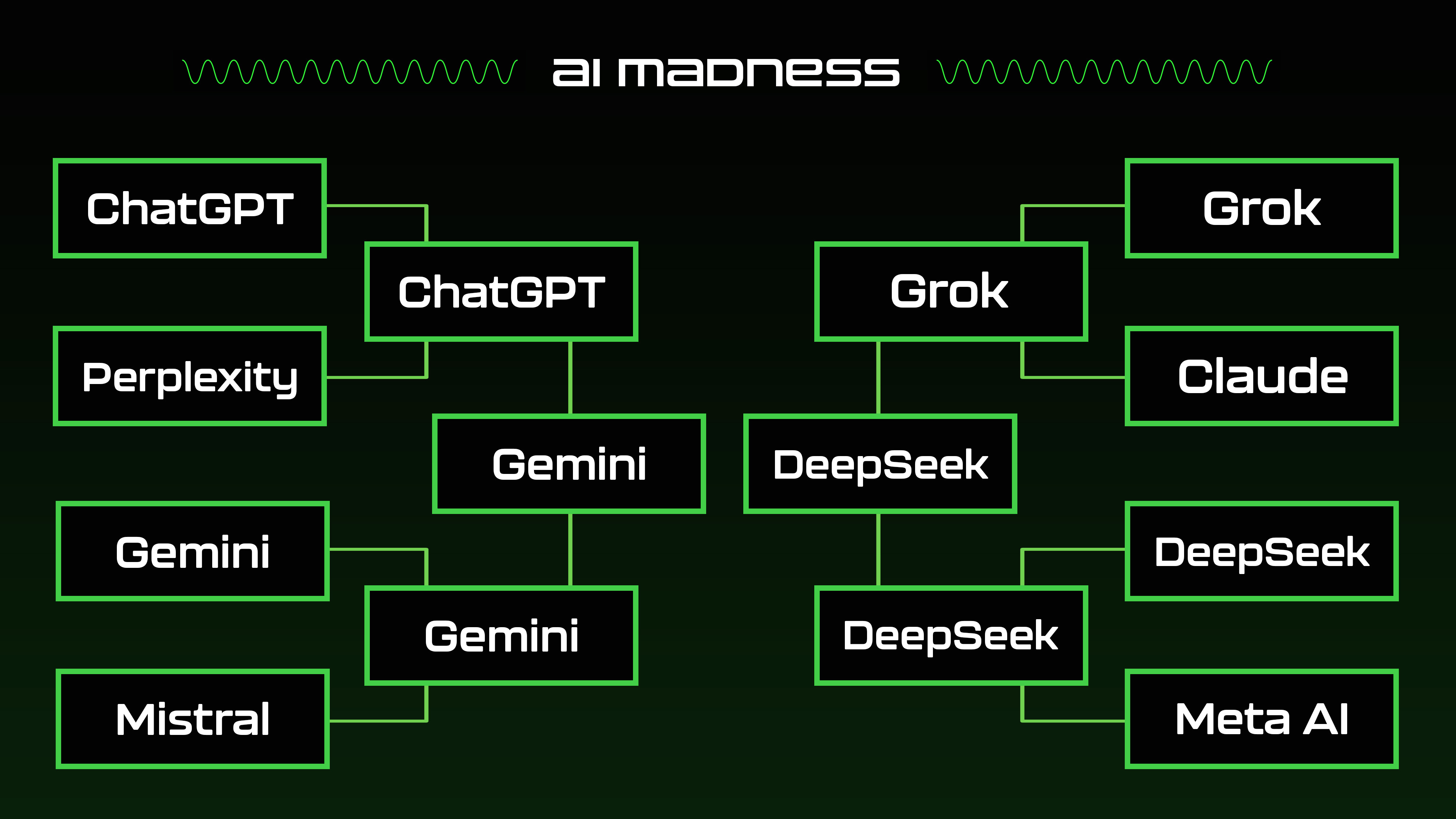

AI Madness: The Final

The championship set up an unexpected matchup: Gemini vs. DeepSeek. Gemini offered solid answers to all nine prompts, but DeepSeek delivered the best, most polished answers and multimodal finesse nearly every time.

From simplifying tricky concepts for kids to tackling real-world challenges with smart solutions, DeepSeek showcased superior responses across diverse prompts and clinched the title. Its mix of clarity, creativity, and practicality left the competition in the dust.

What this test ultimately proves

While ChatGPT and Gemini are typical AI go-tos, DeepSeek proved it can pack a punch and outdo the AI giants. But why?

DeepSeek-R1 distinguishes itself from traditional large language models (LLMs) by embracing a novel training methodology centered on reinforcement learning (RL), rather than relying predominantly on supervised fine-tuning.

This approach lets the model learn through trial and error: it receives automated rewards (for example, for reaching an answer that can be checked as correct) that steer it toward more effective reasoning.

For instance, DeepSeek-R1 has shown that it can discover problem-solving techniques such as reflection and verification on its own, without explicit instruction. This lets the model refine its responses and improve its performance by learning from its own errors.
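To make the "trial and error plus rewards" idea more concrete, here is a toy sketch. It is not DeepSeek's training code and makes several loud assumptions: a tiny "policy" chooses among four hypothetical answers to a single prompt, an automated rule-based check rewards the correct one, and each answer in a sampled group is scored against the rest of its group. That last step is loosely in the spirit of the group-relative scheme (GRPO) described in DeepSeek's R1 report. The names `CANDIDATES`, `REFERENCE`, `rule_based_reward`, `group_relative_advantages` and `train` are invented for illustration.

```python
import math
import random
from statistics import mean, pstdev

# Toy setup (hypothetical): one prompt, four candidate answers, one correct answer.
# This is NOT DeepSeek's training code, just an illustration of reward-driven learning.
CANDIDATES = ["40", "41", "42", "43"]
REFERENCE = "42"


def rule_based_reward(answer: str) -> float:
    """Automated check: 1.0 if the answer matches the reference, else 0.0."""
    return 1.0 if answer == REFERENCE else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Score each sampled answer against the mean and spread of its own group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def softmax(logits: dict[str, float]) -> dict[str, float]:
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - top) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def train(steps: int = 200, group_size: int = 8, lr: float = 0.1, seed: int = 0) -> dict[str, float]:
    rng = random.Random(seed)
    logits = {c: 0.0 for c in CANDIDATES}  # start with no preference
    for _ in range(steps):
        probs = softmax(logits)
        # Trial: sample a group of answers from the current policy.
        group = rng.choices(CANDIDATES, weights=[probs[c] for c in CANDIDATES], k=group_size)
        # Feedback: reward each answer, then compare it with the rest of its group.
        advantages = group_relative_advantages([rule_based_reward(a) for a in group])
        # Update: policy-gradient step on the softmax logits,
        # using d log p(a) / d logit_c = 1[c == a] - p(c).
        for answer, adv in zip(group, advantages):
            for c in CANDIDATES:
                grad = (1.0 if c == answer else 0.0) - probs[c]
                logits[c] += lr * adv * grad / group_size
    return softmax(logits)


if __name__ == "__main__":
    final = train()
    print({answer: round(p, 3) for answer, p in final.items()})
```

Answers that beat their group's average become more likely, and answers that fall below it become less likely. When every sample in a group earns the same reward, all advantages are zero and nothing changes. No labeled example of "how to reason" is ever supplied, only a check on the final answer, so the printed probabilities should concentrate on "42".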

By using RL, DeepSeek-R1 demonstrates that it's possible to develop advanced reasoning abilities in AI without depending solely on the extensive, labor-intensive labeled datasets that supervised fine-tuning requires.

As these tests suggest, RL might just be a more efficient and potentially less resource-intensive path for AI development, challenging the common belief that massive, supervised datasets are essential for training sophisticated language models.

Final thoughts

In summary, DeepSeek’s triumph proves there’s value in looking beyond typical training methods.

DeepSeek-R1's heavy reliance on reinforcement learning (its precursor, DeepSeek-R1-Zero, was trained with RL alone) marks a significant departure from traditional supervised fine-tuning methods. Perhaps letting AI models learn from their own attempts is what ultimately makes them smarter.

AI Madness highlighted the cutting-edge possibilities of chatbots, but if you ask us, the race is just heating up! Stay tuned.
