I test AI for a living and Meta's new MetaAI chatbot might be my new favorite

Hold a conversation and even make images move

Meta has finally released its MetaAI assistant as a standalone chatbot powered by the impressive Llama 3 large language model, and it is already up there as one of my favorite AI tools.

For the past year the Facebook owner has been playing with the idea of a dedicated AI assistant, adding versions of MetaAI to the Ray-Ban smart glasses, WhatsApp and other products but avoiding rolling out a dedicated, ChatGPT-style chatbot.

MetaAI is gradually rolling out to places outside the U.S. including Australia and New Zealand and is available across all Meta platforms. During a recent press event Meta said the aim was to make MetaAI “the best virtual assistant on the market.”

I’ve found it very conversational, easy to interact with and more open to offering an opinion than some of the other chatbots. It has more of a Claude 3 feel to it and once it gets the full Llama 3 upgrade this summer it may challenge Anthropic for the “my favorite AI chatbot” crown.

What is MetaAI?

Meta likes to name things after itself, which is odd given the brand only came about a few years ago, and MetaAI is no different. This is the name for the assistant in all of Meta’s products, including the Quest 3, Ray-Ban smart glasses, Instagram, WhatsApp and now meta.ai.

MetaAI has already proved itself capable, even when running on the earlier, much less powerful Llama 2. Built into the smart glasses it has offered advice on things like clothes and in WhatsApp it has aided in conversational flow. Now you can use it like Google Gemini or Claude 3. It can even code.

The chatbot is powered by the recently released Llama 3 70B, which has already beaten equivalent-sized models, as well as OpenAI's GPT-3.5, on many benchmarks.

From this summer it will be powered by Llama 3 400B, a GPT-4-level AI model that is currently in training at the Meta HQ. This will also be open sourced and power third-party apps.

You do currently need a Facebook account to use MetaAI, although that may expand to other Meta applications in future.

Why is MetaAI worth trying in a crowded market?

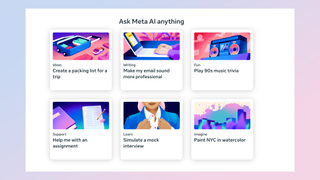

When you open MetaAI you're presented with a UI similar to ChatGPT, Google Gemini and Microsoft Copilot. There's a side menu with a list of chats, a text box at the bottom and a chat area.

What makes it stand out is its ease of use and natural tone. The way it responds reminds me of Pi, the largely overlooked conversational chatbot with the most friendly responses I’ve seen.

One standout feature of MetaAI is the speed at which it responds. It is almost as fast as using Groq, the inference engine with lightning-fast response times. However, MetaAI also has a relatively small context window, so be prepared to repeat yourself or start new chats regularly.

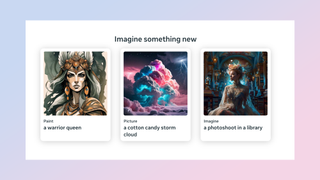

Possibly the best feature is Imagine. This was previously a limited, standalone AI image generator that has now been incorporated into MetaAI, meaning you can use conversational language to craft the perfect image — you can even edit the picture through chat.

What Imagine does that isn’t available through Gemini, Copilot or ChatGPT is animate your images. It creates something similar to a live photo, animating only a few frames, but it is effective and fun to play with.

MetaAI outlook

During a recent AI press event Meta executives talked about the aim of making MetaAI the gold standard of artificial intelligence-based assistants. The goal was to put it into every Meta product with different ways to interact.

This seems to be the start of that vision coming true, and in future we could even see MetaAI offered as an alternative to Alexa, Siri or Gemini on Android.

One area where we will see deeper integration is Facebook products such as Groups, where owners could use MetaAI as a moderation assistant and businesses could use it to boost the reach of ads or manage user queries.

MetaAI currently lacks any multimedia analysis capability. It can only take in text whereas its competitors can work with an increasing number of mediums including voice, images and most recently video. This may change with Llama 3 400B in July, but it is something Meta needs to solve to truly compete with other chatbots.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?