I test AI for a living and Google’s Gemini Pro 1.5 is a true turning point

Gemini Pro 1.5 can hold entire movies in its memory

Google dropped a surprise on the AI world on Thursday with the release of Gemini Pro 1.5, a new version of its recently released next-generation AI model Gemini Pro.

A company the size of Google announcing a new update isn’t exactly groundbreaking, but what is significant about Gemini Pro 1.5 is just how much better and different it is to version one.

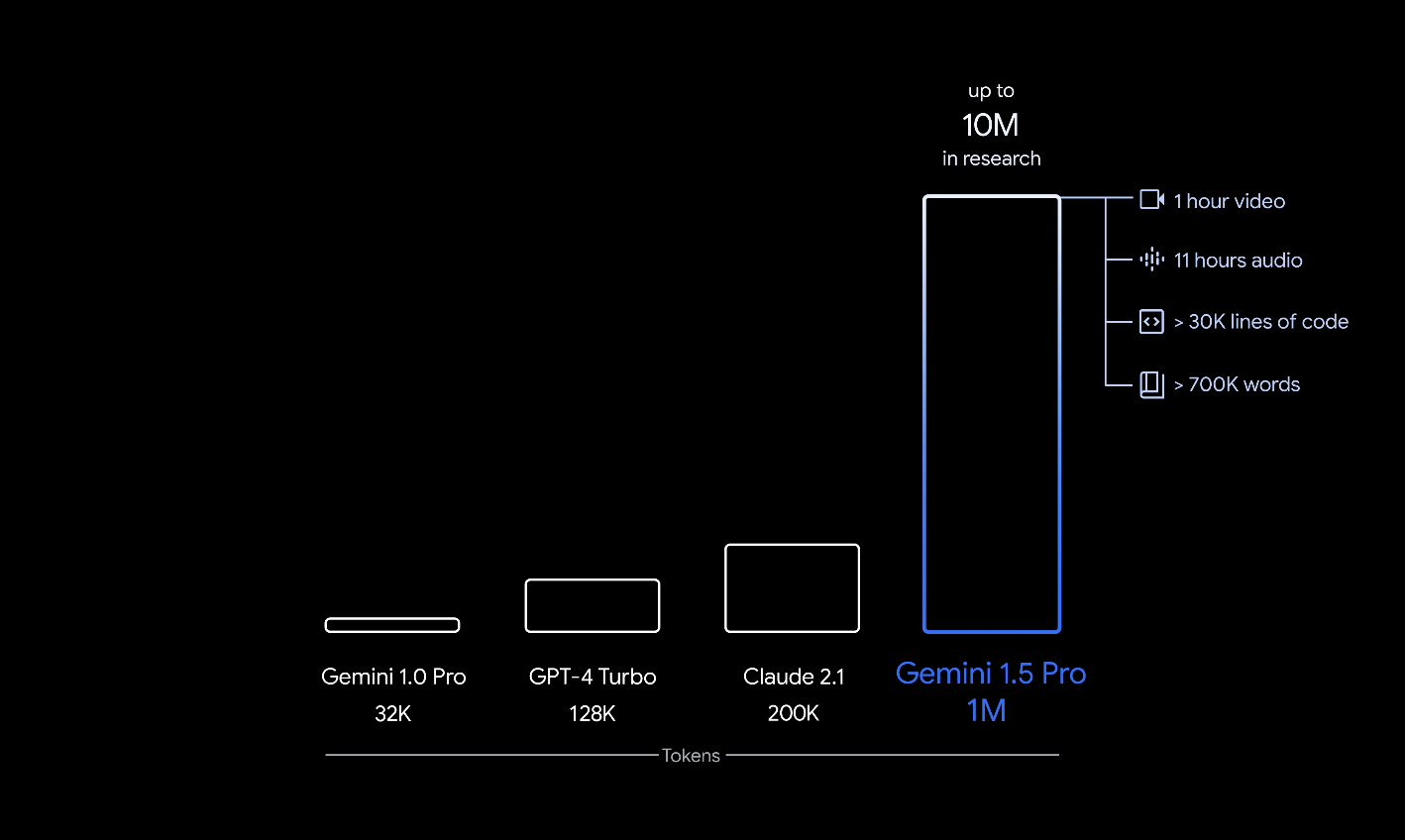

Gemini Pro 1.5 has a significantly larger context window than any other model on the market: up to 10 million tokens compared to, for example, 128,000 tokens for GPT-4. It is also now technically more powerful than Gemini Pro 1.0 and Gemini Ultra 1.0, which powers Gemini Advanced, the paid-for version of the Gemini chatbot.

Google claims its new model is also more reliable, can pick out specific moments in long content, and works natively across video, audio, images and text. This is a big deal as AI moves into the real world through AR interfaces like the Meta Quest, Apple Vision Pro or Ray-Ban smart sunglasses.

What makes Gemini Pro 1.5 so different?

Gemini Pro 1.5 has a staggering 10 million token context length. That is the amount of content it can store in its memory for a single chat or response.

This is enough for hours of video or multiple books within a single conversation, and Google says it can find any piece of information within that window with a high level of accuracy.

Jeff Dean, Google DeepMind Chief Scientist, wrote on X that the model also comes with advanced multimodal capabilities across code, text, image, audio and video.

He wrote that this means you can “interact in sophisticated ways with entire books, very long document collections, codebases of hundreds of thousands of lines across hundreds of files, full movies, entire podcast series, and more.”

In “needle-in-a-haystack” testing, where researchers hide a specific piece of information (the needle) in the vast amount of data stored in the context window and ask the model to retrieve it, Google says the model found the needle with 99.7% accuracy, even with 10 million tokens of data.
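To make the idea concrete, here is a minimal sketch of how a needle-in-a-haystack check could be set up. It is not Google's test harness: the filler sentence, the "magic number" needle and the `toy_model` function are all hypothetical stand-ins, and the toy model simply does a string search where a real test would call an actual AI model.

```python
from typing import Callable, Sequence


def build_haystack(filler: str, needle: str, n_sentences: int, depth: float) -> str:
    """Repeat `filler` n_sentences times and insert `needle` at a relative
    depth (0.0 = very start, 1.0 = very end)."""
    sentences = [filler] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def needle_recall(
    model: Callable[[str], str],
    filler: str,
    needle: str,
    question: str,
    expected: str,
    n_sentences: int,
    depths: Sequence[float],
) -> float:
    """Fraction of depths at which the model's reply contains `expected`."""
    hits = 0
    for depth in depths:
        prompt = build_haystack(filler, needle, n_sentences, depth) + "\n\n" + question
        if expected.lower() in model(prompt).lower():
            hits += 1
    return hits / len(depths)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: plain string search."""
    marker = "The magic number is "
    start = prompt.find(marker)
    if start == -1:
        return "I don't know."
    end = prompt.find(".", start)
    return prompt[start:end + 1]


recall = needle_recall(
    model=toy_model,
    filler="The sky was grey over the city that morning.",
    needle="The magic number is 7421.",
    question="What is the magic number?",
    expected="7421",
    n_sentences=1000,
    depths=[0.0, 0.25, 0.5, 0.75, 1.0],
)
print(f"Recall across depths: {recall:.0%}")
```

Swapping `toy_model` for a function that sends the prompt to a real model, and growing `n_sentences` until the prompt approaches the context limit, gives a rough version of the test described above.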

What are the use cases for such a large context window?

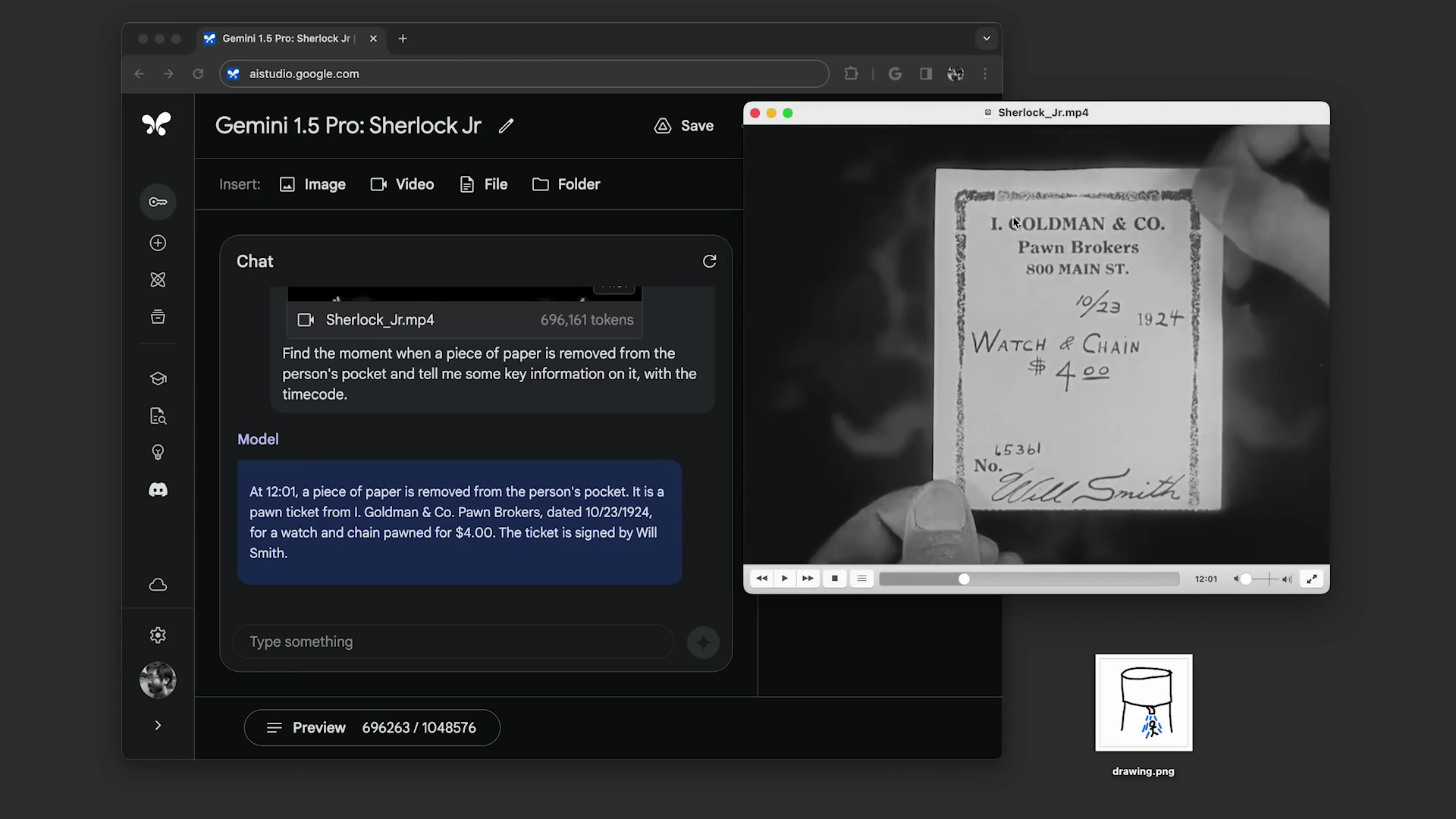

While promoting the new model, Google showed off its video analysis ability on Buster Keaton’s 45-minute silent movie “Sherlock Jr.”

Scanning a single frame per second, the input required a total of 648,000 tokens. Gemini could then answer questions about the movie such as "tell me about the piece of paper removed from the person's pocket" and give the exact time code.

Gemini was able to say exactly what was written on the piece of paper and the exact moment the paper is shown in full on screen.

In another example, developers made a quick sketch of a scene, not particularly well drawn and using stick figures. They gave it to Gemini and asked for the timestamp of that particular scene. Gemini returned the exact timestamp, given in seconds.
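As a back-of-the-envelope check on the “Sherlock Jr.” figures above, the numbers quoted work out to roughly 240 tokens per video frame. The short sketch below does that arithmetic and then estimates how much video would fit in other context sizes. It assumes token use scales linearly with the number of frames and ignores any tokens spent on the question or the answer, so treat the results as rough estimates rather than official figures.

```python
# Figures quoted in the article for the "Sherlock Jr." demo.
MOVIE_MINUTES = 45
FRAMES_PER_SECOND = 1
TOTAL_TOKENS = 648_000

frames = MOVIE_MINUTES * 60 * FRAMES_PER_SECOND   # 2,700 frames
tokens_per_frame = TOTAL_TOKENS / frames          # 240 tokens per frame


def minutes_of_video(context_tokens: int, per_frame: float, fps: int = 1) -> float:
    """Estimated minutes of video that fit in a context window,
    assuming every frame costs the same number of tokens."""
    return context_tokens / (per_frame * fps) / 60


print(f"Frames sampled: {frames}")
print(f"Tokens per frame: {tokens_per_frame:.0f}")
for window in (128_000, 1_000_000, 10_000_000):
    minutes = minutes_of_video(window, tokens_per_frame)
    print(f"{window:>10,} tokens is about {minutes:,.0f} minutes of video")
```

On these assumptions, a 128,000-token window holds only about nine minutes of video at one frame per second, while 10 million tokens would stretch to more than 11 hours.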

Other surprising benefits of a large context

One other aspect that hasn’t been widely reported is the potential for saving, learning and even creating new languages. Jim Fan, a senior researcher and AI agent expert at Nvidia, pointed out the zero-shot ability of Gemini Pro 1.5 to understand linguistics in a surprising way.

He wrote on X that: “v1.5 learns to translate from English to Kalamang purely in context, following a full linguistic manual at inference time.”

Kalamang is a language spoken by about 200 people in New Guinea, and Gemini had no information on the language during its original training, so it had nothing to draw from.

For the test it was given 500 pages of linguistic documentation, a dictionary, and about 400 parallel sentences and the context in which they apply. It used this to learn the language and be able to offer translations of any words or phrases from English to Kalamang and back.

When will I be able to use Gemini Pro 1.5?

Gemini 1.5 Pro - A highly capable multimodal model with a 10M token context length. Today we are releasing the first demonstrations of the capabilities of the Gemini 1.5 series, with the Gemini 1.5 Pro model. One of the key differentiators of this model is its incredibly long… pic.twitter.com/2KLro4VwLT (February 15, 2024)

Gemini Pro 1.5 is already available to some enterprise customers using Vertex AI or Google Cloud’s Generative AI studio. At some point it will come to the Gemini chatbot, but when it does, the maximum context will likely be closer to 128,000 tokens, similar to ChatGPT Plus.

This is a game-changing moment for the AI sector, on a day that also saw OpenAI launch a video model and Meta find a way to use video to teach AI about the real world.

What we are seeing with these advanced multimodal models is the interaction of the digital and the real, where AI is gaining a deeper understanding of humanity and how WE see the world.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?