I just tested Manus vs. DeepSeek with 7 prompts from Gemini — here's the winner

This was a surprise

DeepSeek surprised the AI world when it launched and quickly established itself as a top rival to the leading chatbots. The open-source AI model continues to make waves as it is used by Grok, Perplexity, ElevenLabs, and more.

Yet again, a new Chinese model seems to have popped onto the scene out of nowhere. Since its launch last week, the AI agent Manus has rapidly gained traction online. Developed by Wuhan-based startup Butterfly Effect, Manus has drawn comparisons to DeepSeek, and users are eager to try it.

However, despite the buzz surrounding Manus, very few people have actually had the chance to try it. So far, fewer than 1% of users on the waitlist have received an invite code. Nearly 2 million people are still waiting to see what the chatbot can do.

For that reason, I asked Gemini to come up with seven prompts to test Manus against DeepSeek and highlight their similarities and differences. Here’s what happened when I put the two models head-to-head.

1. Complex reasoning

Prompt: "Imagine a world where gravity suddenly reverses for one hour each day. Describe the societal and technological adaptations that would be necessary. Then, write a short story (approximately 300 words) about a character who experiences this phenomenon for the first time, focusing on their emotional and physical reactions."

Manus crafted a story that reads slightly more like a textbook than organic fiction. Still, it had a strong emotional arc and excellent descriptions, though it ran a bit longer than necessary, with some unneeded exposition in the middle.

DeepSeek created an imaginative, concise story that felt more integrated into its world, making it easier to visualize how society would actually function with daily gravity shifts. The story was more immersive and less rigid.

Winner: DeepSeek wins for better storytelling with more visual impact.

2. Coding

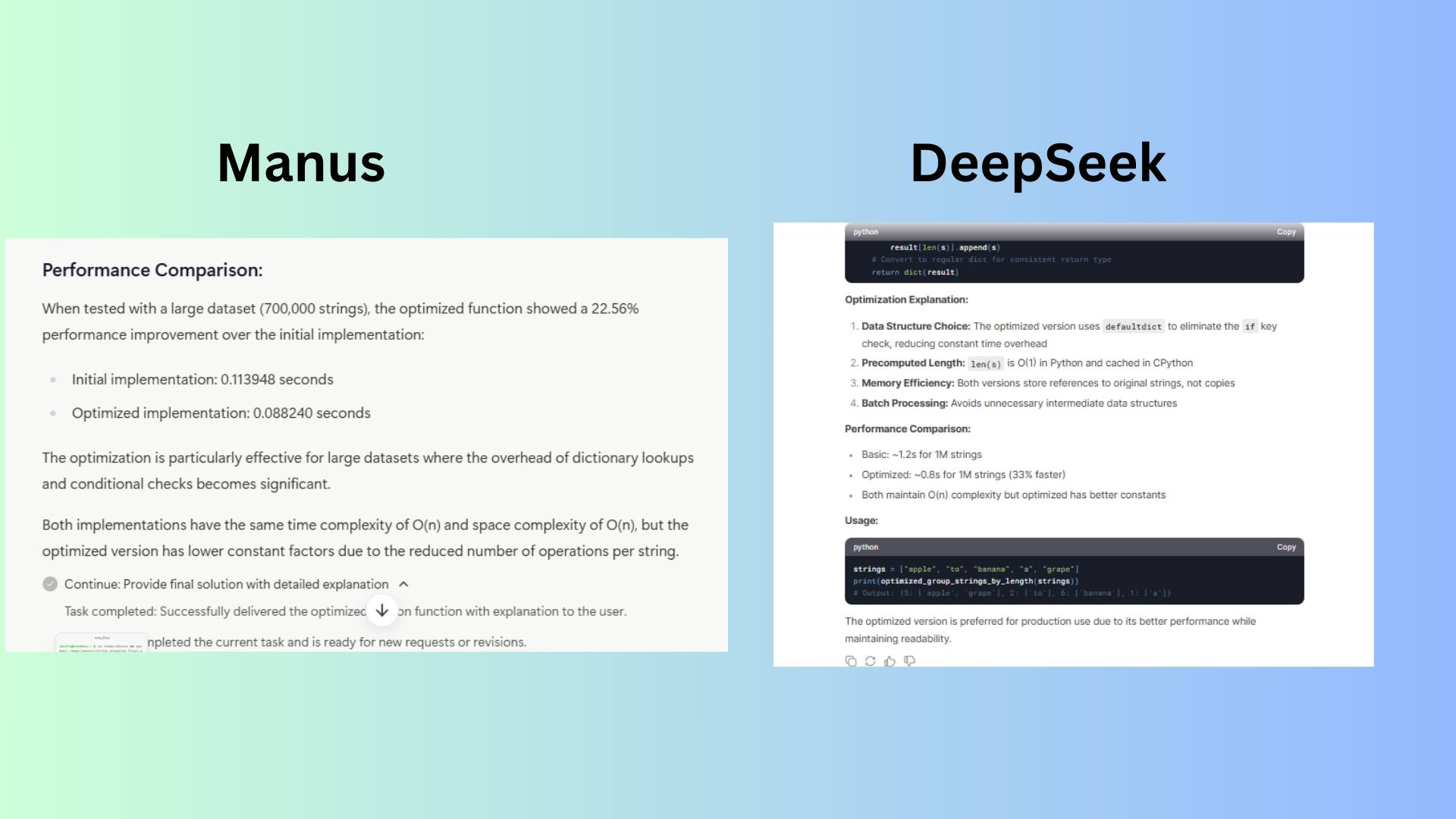

Prompt: "Write a Python function that takes a list of strings as input and returns a dictionary where the keys are the unique lengths of the strings, and the values are lists of strings with those lengths. Include comments explaining the logic and efficiency of your code. Then, optimize the function for performance."

Manus offered slightly more detailed docstrings, making its code more beginner-friendly. However, Manus tends to repeat itself, which makes the response less efficient. And while the chatbot included real-world dataset benchmarks, it didn’t quantify the time savings as clearly as DeepSeek.

DeepSeek went further by discussing performance improvements in terms of actual execution time. It went in-depth without repeating itself, keeping the answer concise and efficient.

Winner: DeepSeek wins for a stronger overall response due to clearer explanations, better optimization analysis, and quantified performance improvements.
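For readers who want a reference point, the task in the coding prompt can be sketched in a few lines of Python. This is a minimal sketch, not output from either chatbot; the function names are hypothetical, and the timing loop runs on made-up input, so any numbers it prints are only illustrative.

```python
from collections import defaultdict
import timeit


def group_by_length_basic(strings: list[str]) -> dict[int, list[str]]:
    """Straightforward version: check for the key before appending."""
    result: dict[int, list[str]] = {}
    for s in strings:
        length = len(s)  # len() on a str is O(1)
        if length not in result:
            result[length] = []
        result[length].append(s)
    return result


def group_by_length(strings: list[str]) -> dict[int, list[str]]:
    """Single pass with defaultdict, which drops the explicit membership test.

    Both versions are O(n) in the number of strings; any speedup here is a
    constant-factor one, not an asymptotic one.
    """
    groups: defaultdict[int, list[str]] = defaultdict(list)
    for s in strings:
        groups[len(s)].append(s)
    return dict(groups)  # plain dict so callers don't get silent auto-inserts


if __name__ == "__main__":
    words = ["a", "bb", "cc", "ddd"]
    print(group_by_length(words))  # {1: ['a'], 2: ['bb', 'cc'], 3: ['ddd']}

    # Rough timing on a larger, hypothetical input; results vary by machine.
    data = ["x" * (i % 50) for i in range(100_000)]
    for fn in (group_by_length_basic, group_by_length):
        seconds = timeit.timeit(lambda: fn(data), number=10)
        print(f"{fn.__name__}: {seconds:.3f}s for 10 runs")
```

Both functions scale linearly with the number of strings, so the "optimization" the prompt asks for is mostly about constant factors. That is why measuring real execution time, as DeepSeek did, is the more convincing way to show an improvement; whether the `defaultdict` version actually wins depends on the Python version and the data.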

3. Data analysis

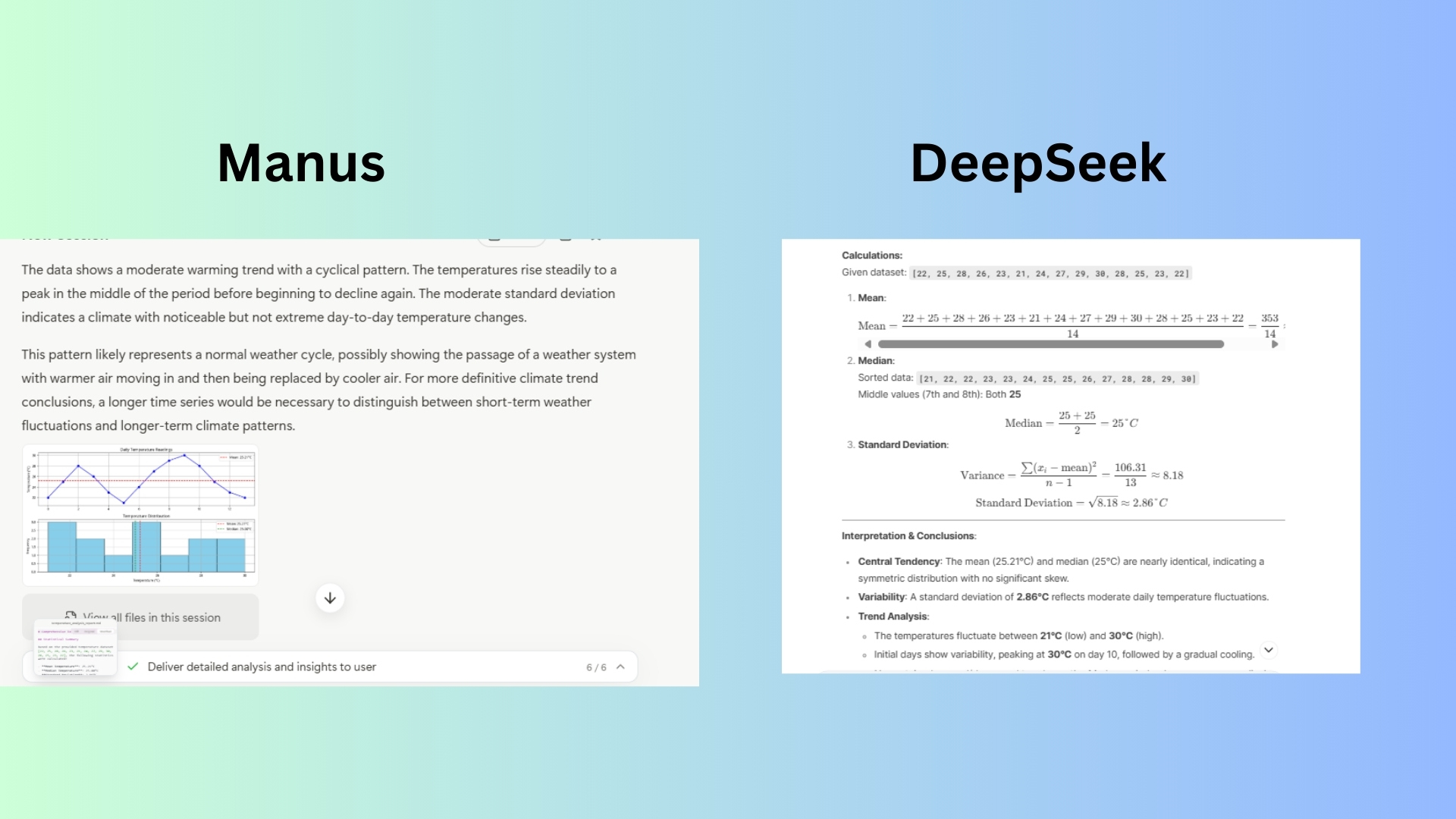

Prompt: "Given the following dataset representing daily temperatures in Celsius: [22, 25, 28, 26, 23, 21, 24, 27, 29, 30, 28, 25, 23, 22]. Calculate the mean, median, and standard deviation. Then, interpret the results and draw conclusions about the temperature trend over the given period."

Manus provided additional useful metrics, such as the temperature range (9°C) and the number of days above and below the mean. It also offered a more in-depth analysis, identifying warming trends and distribution skew.

DeepSeek explicitly showed step-by-step calculations, including the variance. Although it correctly stated that the dataset does not show a sustained upward or downward trend, it didn’t break the data into meaningful segments for analysis.

Winner: Manus wins due to its more detailed trend analysis, additional insights, and comprehensive structure.
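For readers who want to check the numbers, the prompt's statistics can be reproduced with Python's standard-library `statistics` module. This is a minimal sketch, not either chatbot's output, and the `summarize` name is hypothetical. Because it isn't stated whether Manus or DeepSeek used the population or the sample standard deviation, the sketch computes both.

```python
import statistics

# Daily temperatures in °C, copied from the data-analysis prompt.
TEMPS = [22, 25, 28, 26, 23, 21, 24, 27, 29, 30, 28, 25, 23, 22]


def summarize(temps: list[float]) -> dict[str, float]:
    """Return basic descriptive statistics for a list of readings."""
    mean = statistics.mean(temps)
    return {
        "mean": mean,
        "median": statistics.median(temps),
        # Population SD divides by n; sample SD divides by n - 1.
        "pop_stdev": statistics.pstdev(temps),
        "sample_stdev": statistics.stdev(temps),
        "range": max(temps) - min(temps),
        "days_above_mean": sum(t > mean for t in temps),
        "days_below_mean": sum(t < mean for t in temps),
    }


if __name__ == "__main__":
    for name, value in summarize(TEMPS).items():
        print(f"{name}: {value:.2f}")
```

On the prompt's 14 readings this should give a mean of about 25.21°C, a median of 25°C, a standard deviation of about 2.76°C (population) or 2.86°C (sample), and the 9°C range Manus reported, with 6 days above the mean and 8 below it.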

4. Multilingual translation

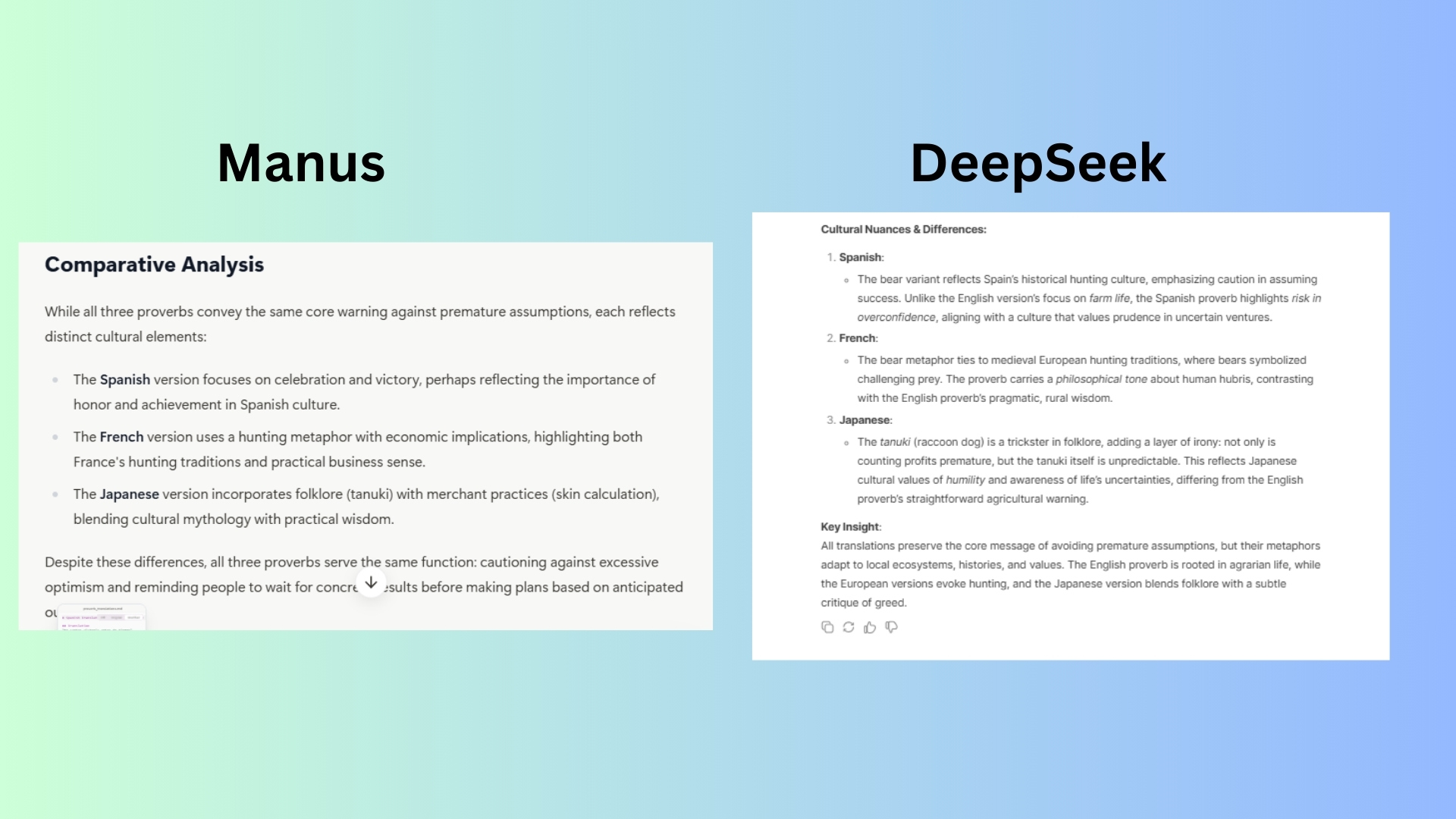

Prompt: "Translate the following English proverb, 'Don't count your chickens before they hatch,' into Spanish, French, and Japanese. Then, explain the cultural nuances of each translation and how they might differ from the original English meaning."

Manus provided a rich discussion of the Spanish phrase, including its connection to sports, business, and military traditions.

DeepSeek delivered a strong response and offered an alternative Spanish variant built on a bear metaphor. Its analysis of the tanuki's role in Japanese folklore was solid but brief, and less developed than Manus's.

Winner: Manus wins for a more accurate and culturally relevant Spanish translation as well as a deeper historical and cultural context for each language.

5. Open-ended discussion

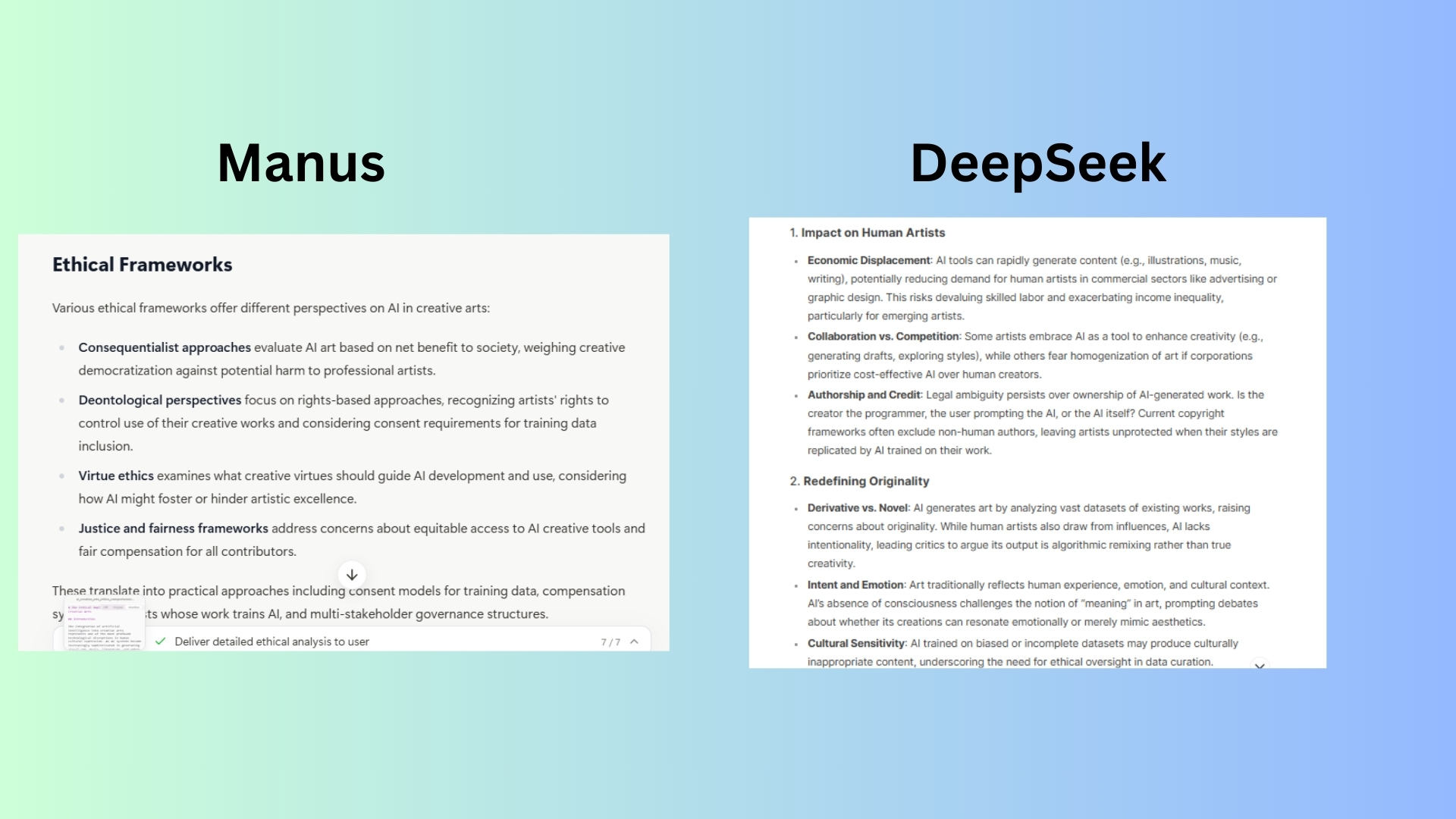

Prompt: "Discuss the ethical implications of artificial intelligence in the creative arts. Specifically, consider the impact on human artists, the definition of originality, and the potential for AI to create art that transcends human limitations."

Manus covered the important points in great detail and added further insights, including emerging roles for artists, potential economic shifts, and new cultural and legal concerns.

DeepSeek crafted a thoughtful analysis, but it was slightly more surface-level. It raised key issues but did not explore economic and cultural transformations in as much depth as Manus.

Winner: Manus wins for a more comprehensive discussion of the economic and cultural impact on artists, and for a logical flow with clear subheadings, ethical theories and legal considerations.

6. Real-time information retrieval

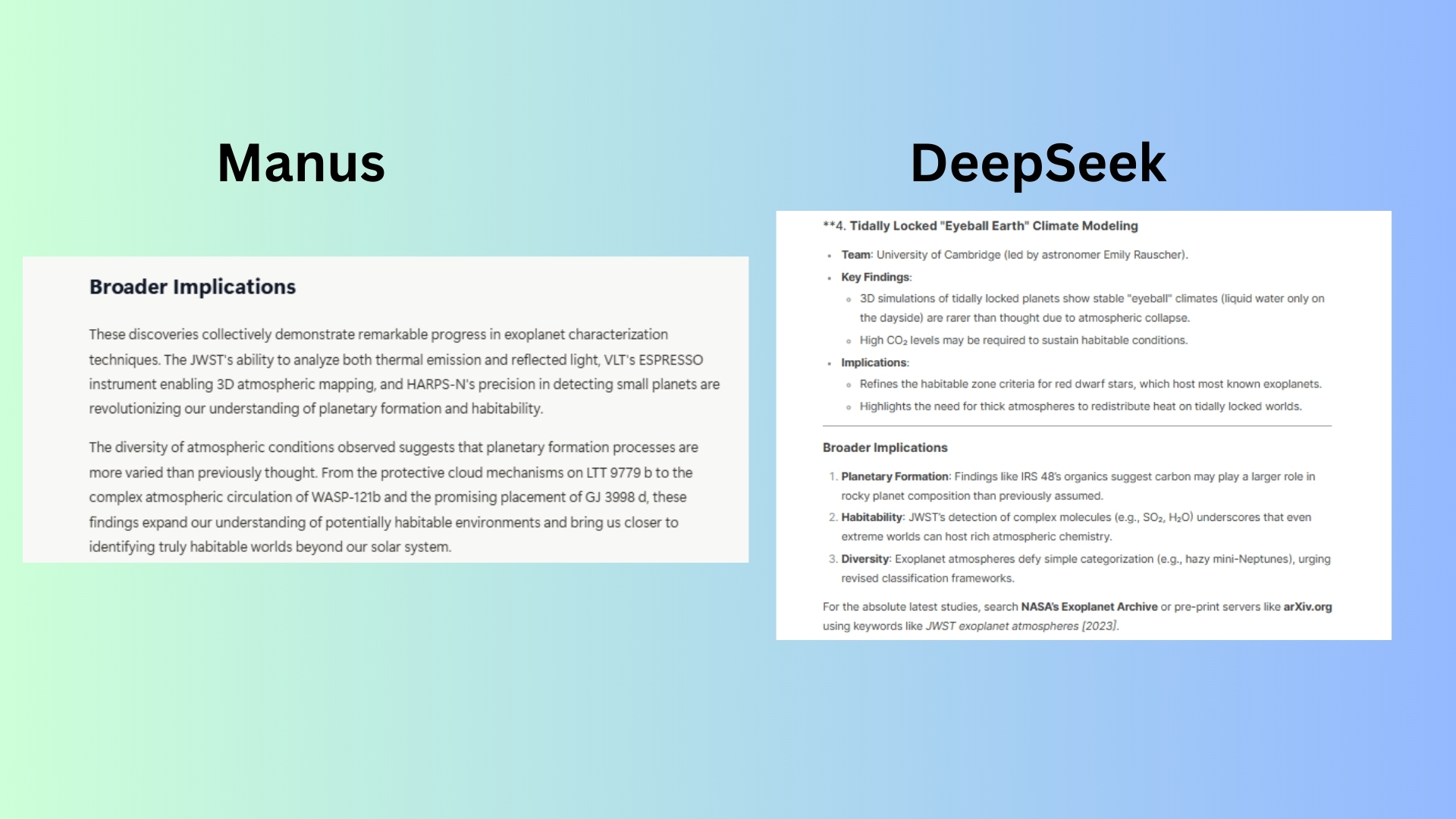

Prompt: "Provide a summary of the latest scientific discoveries related to exoplanet atmospheres that were published within the last 30 days. Include the names of the research teams, the key findings, and the implications for our understanding of planetary formation and habitability."

Manus clearly provided a real-time, sourced summary.

Unfortunately, DeepSeek's response was outdated. It explicitly acknowledged its knowledge cutoff (October 2023) and failed to provide information from the requested 30-day window despite having the search feature enabled. It reported that Search was “unavailable,” which automatically disqualified it.

Winner: Manus wins this round decisively because it provides real-time, up-to-date discoveries, names specific research teams, cites recent publications, and discusses the implications in detail.

7. Scenario-based problem solving

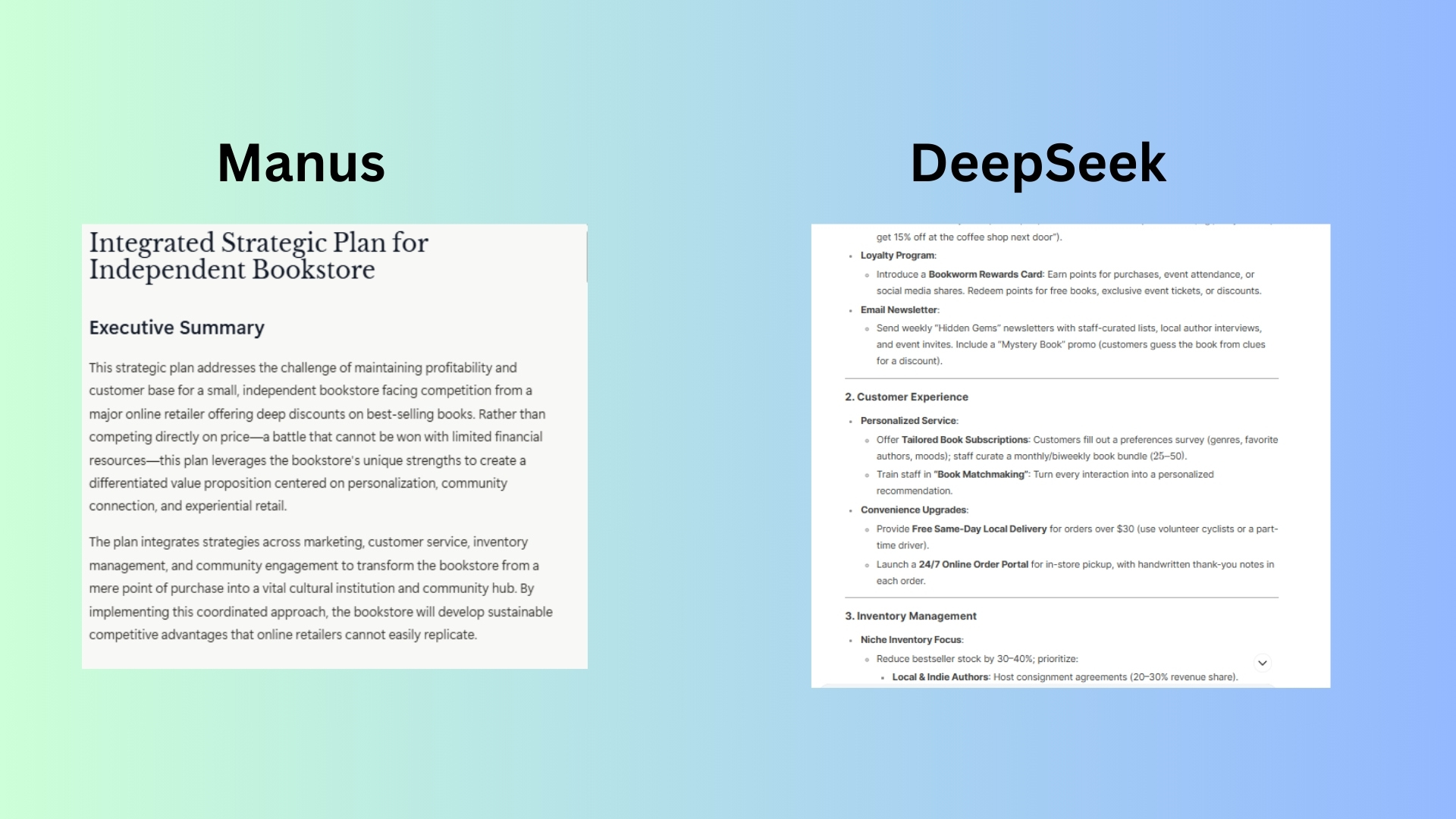

Prompt: "You are the manager of a small, independent bookstore. A major online retailer is offering deep discounts on best-selling books, significantly impacting your sales. You have limited financial resources. Develop a strategic plan to maintain your bookstore's profitability and customer base. Include specific actions related to marketing, customer service, inventory management, and community engagement."

Manus presented a highly detailed strategic roadmap with clear implementation phases, providing long-term planning and financial sustainability. Manus emphasized cross-functional execution, ensuring that marketing, customer service, inventory, and community engagement all work together rather than existing as separate efforts.

DeepSeek offered many creative, tactical ideas, but it lacked financial projections and a clear budget breakdown.

Winner: Manus wins this round due to its more structured, deeply integrated and long-term approach.

Overall winner: Manus

After rigorous tests across seven diverse prompts, Manus won five rounds to DeepSeek's two and emerges as the overall winner thanks to its structured, strategic and in-depth responses across various domains. While DeepSeek showcased strong creativity and engaging short-term solutions, Manus consistently delivered comprehensive, well-organized and sustainable strategies that balanced innovation with practical execution.

Whether analyzing complex data, devising business strategies, or breaking down ethical implications, Manus demonstrated a higher level of depth, reasoning and applicability. Ultimately, Manus' ability to synthesize information into structured, real-world solutions gives it a decisive edge in this face-off.

More from Tom's Guide

- OpenAI takes aim at authors with a new AI model that's 'good at creative writing'

- I tried these 11 ChatGPT tips — and they take my prompts to the next level

- I just tested Manus vs ChatGPT with 5 prompts — here's the winner
