I just tested ChatGPT vs. Gemini with 7 prompts — here's the winner

The competition heats up

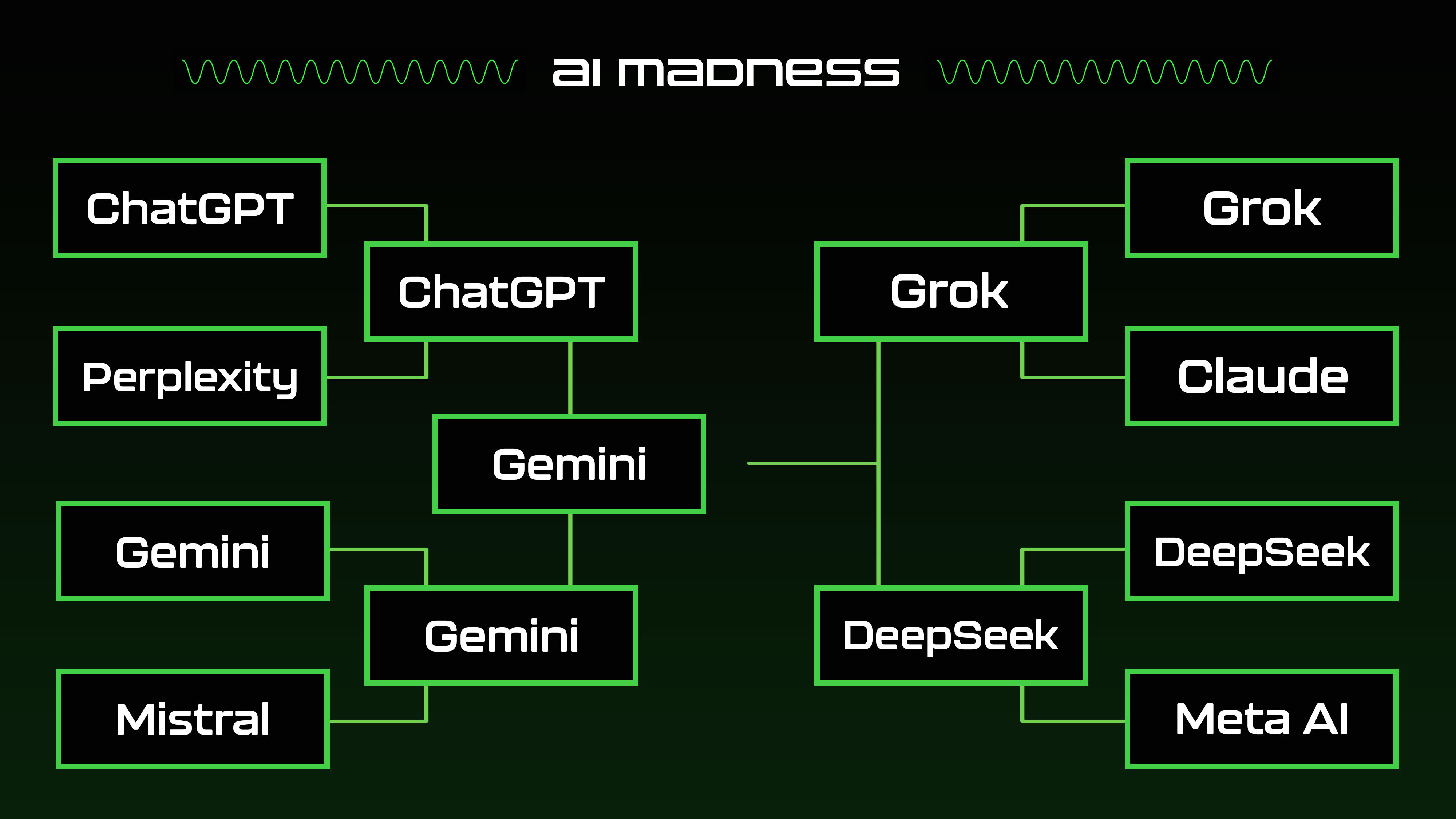

In our next round of AI Madness, ChatGPT and Gemini compete for the crown with seven new prompts testing everything from technical problem-solving to creative storytelling.

Both heavyweights are available as standalone apps, and users no longer need an account to access ChatGPT or Gemini.

Each of the chatbots was designed with multimodal capabilities and web integration. They can also adapt responses based on user interactions and context.

However, the chatbots differ in their core strengths. ChatGPT is strong in natural conversation, writing, coding and logical reasoning; Gemini is strong in search and fact-based responses.

ChatGPT won the round against Perplexity and Gemini won against Mistral. But who will win this round?

Without further ado, let’s get into the competition with ChatGPT-4o and Gemini Flash 2.0!

1. Explanation and analogies

Prompt: "Explain quantum computing to a 10-year-old, using an analogy about pizza."

ChatGPT included a clearly structured comparison with formatting to highlight key concepts. The chatbot delivered a strong conceptual explanation of superposition through its “pizza in the box” metaphor.

Gemini used a more practical problem-solving approach, focusing on finding the best pizza combo as the central task. The response was more conversational, with bullet points highlighting key concepts.

Winner: Gemini wins for a simpler explanation that more closely follows the prompt to address a 10-year-old’s level of understanding. It focuses on a problem-solving scenario that kids can relate to, with a more conversational tone that would engage a child.

2. Creativity

Prompt: "Write a short story about a detective who solves crimes through time travel, but include a plot twist at the end."

ChatGPT crafted a more traditional detective story with a clear setup, investigation, and resolution. The story's pacing, rich world-building and satisfying ending were more conventional.

Gemini was the more ambitious author here with a more distinctive prose style, stronger philosophical themes, and a truly mind-bending twist that recontextualizes the entire story.

Winner: Gemini wins for a story that engages more deeply with the implications of time travel rather than using it as just a detective tool. The chatbot’s response was also more conceptually interesting, creative, and thought-provoking.

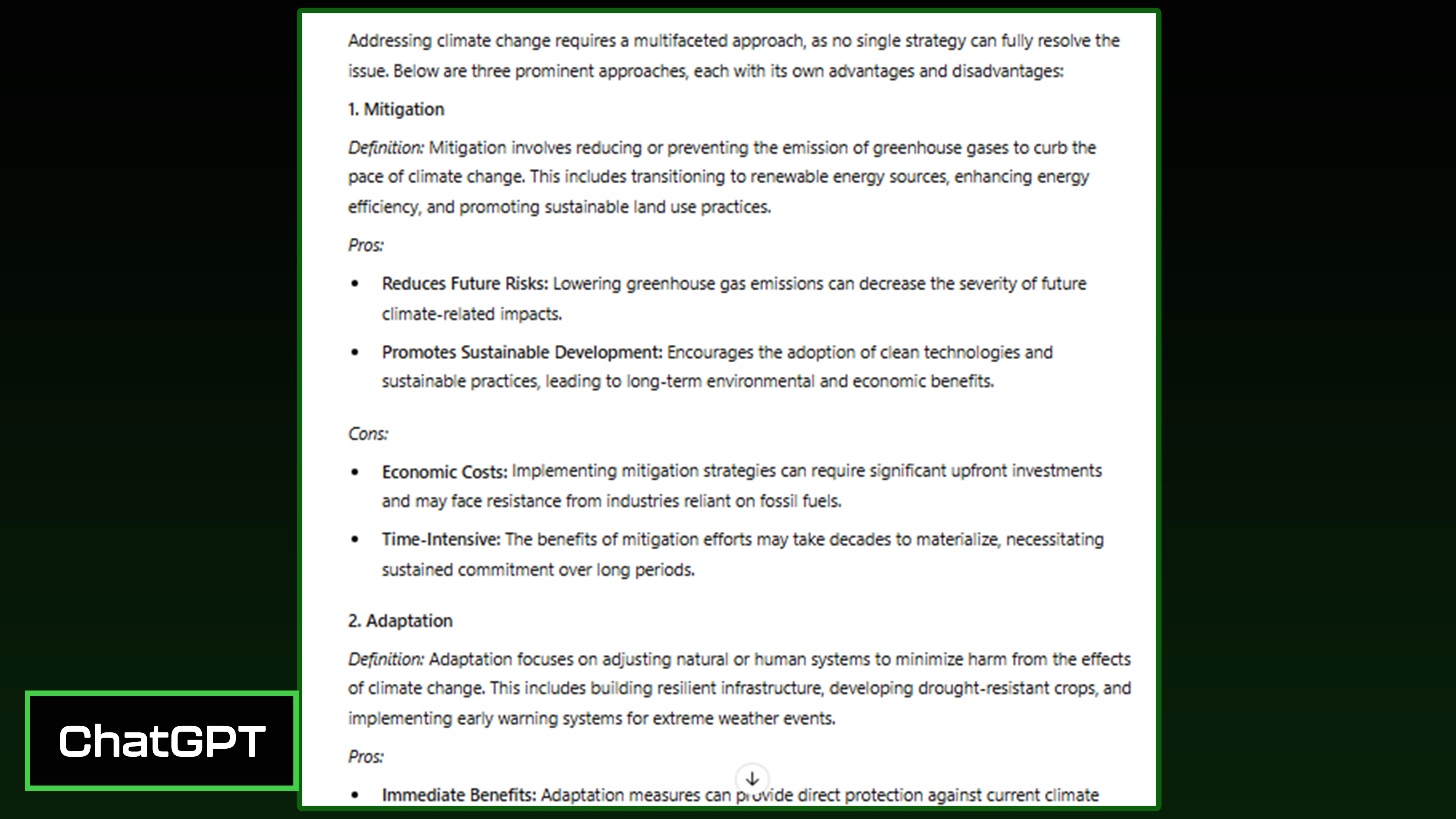

3. Critical analysis

Prompt: "Compare and contrast three different approaches to addressing climate change, with their pros and cons."

ChatGPT used more concise bullet points with broader statements and explicit definitions for each approach before listing pros and cons. It crafted a concluding paragraph rather than a bulleted summary.

Gemini placed a stronger emphasis on the challenges of global cooperation while also listing specific actions and examples more comprehensively under each approach. The chatbot also offered better visual organization with nested bullet points for subcategories.

Winner: Gemini wins for more specific examples of what each approach entails in practice, more technical details without sacrificing readability, and a summary at the end that effectively ties the approaches together.

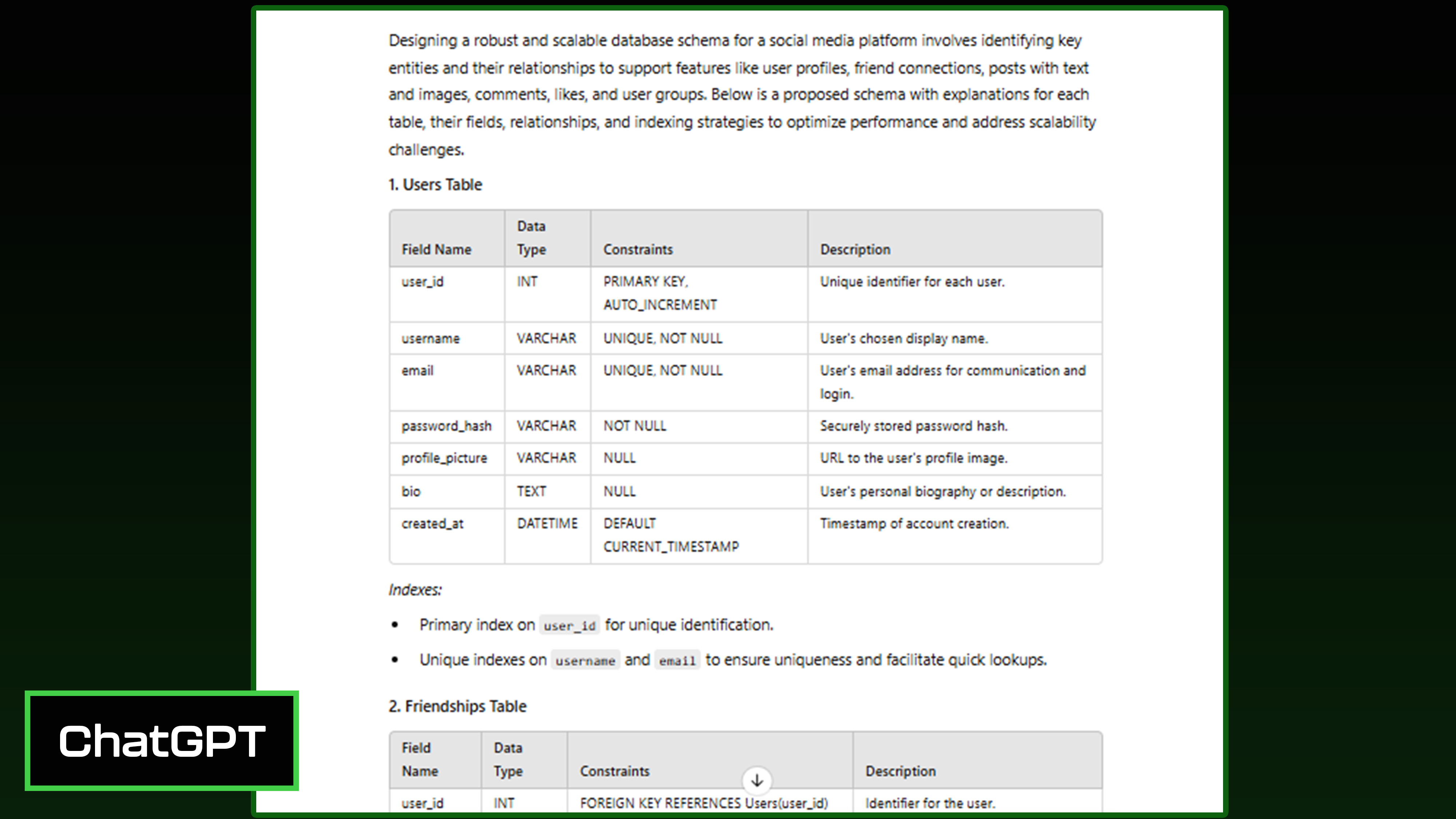

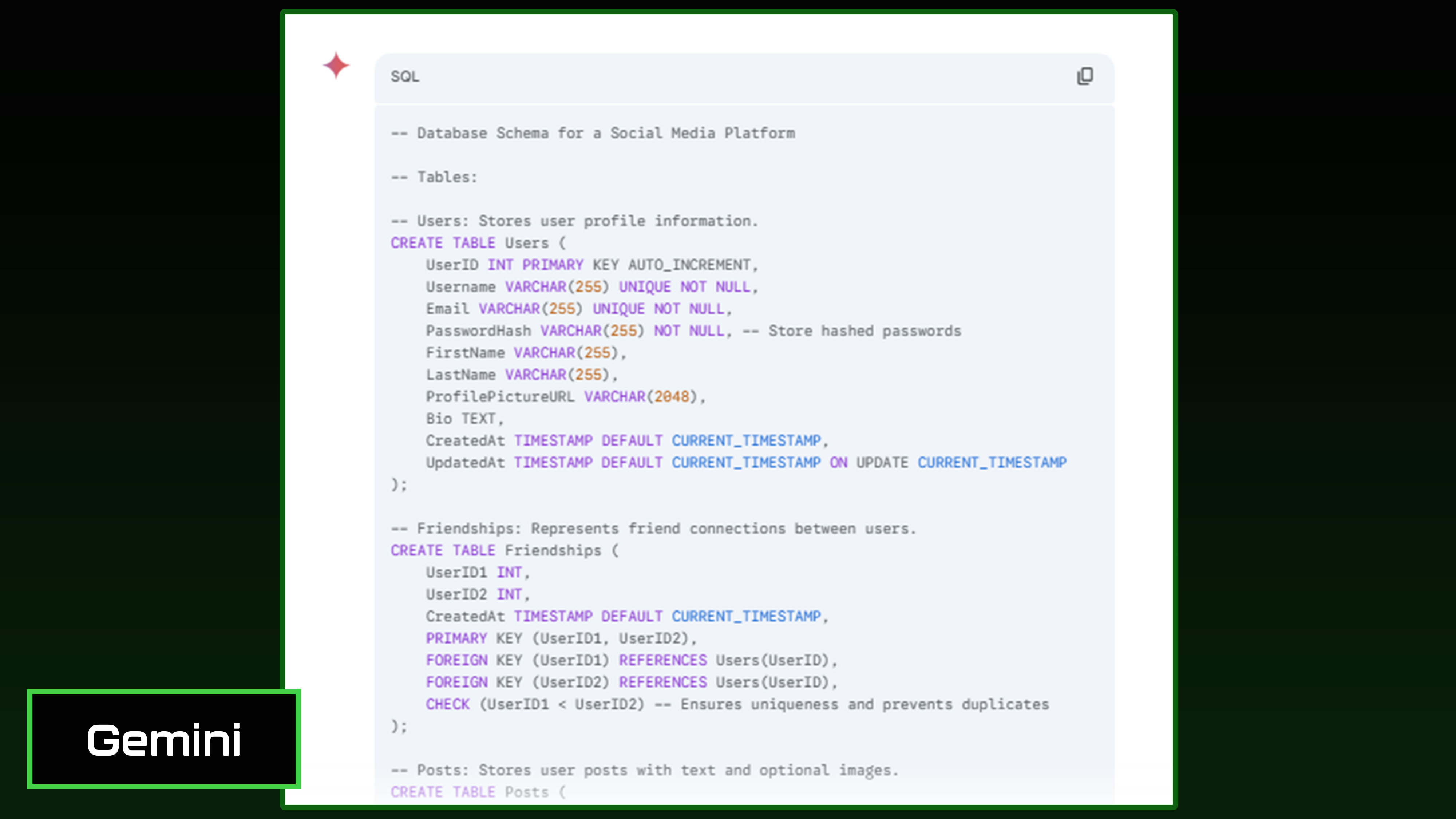

4. Technical problem-solving

Prompt: "Design a database schema for a social media platform that needs to support the following features: user profiles, friend connections, posts with text and images, comments on posts, likes on both posts and comments, and user groups. Explain your choice of tables, fields, relationships, and any indexes you would create to optimize performance. Also address how your schema handles potential scalability challenges as the user base grows to millions of users."

ChatGPT covered all required features, including user profiles, friend connections, posts, comments, likes, and user groups. However, the response didn’t address scalability challenges, such as handling large user bases or high traffic. It also didn’t discuss data normalization techniques to minimize data redundancy, nor did it properly address security considerations.

Gemini responded with clearer formatting and slightly more detailed explanations than ChatGPT. The chatbot used consistent naming conventions throughout the schema, making it easier to read and compare.

Winner: Gemini wins for a response that includes brief descriptions for each field, making it easier to understand the schema.
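For readers wondering what the prompt is actually asking for, here is a minimal sketch of such a schema using Python's built-in sqlite3 module. It is not taken from either chatbot's response: every table, column and index name below is an illustrative assumption, and the indexes are only a first step toward the scalability the prompt mentions (sharding, caching and security are left out).

```python
import sqlite3

# Illustrative only: all table, column and index names are assumptions,
# not output from ChatGPT or Gemini.
SCHEMA = """
CREATE TABLE users (
    id           INTEGER PRIMARY KEY,
    username     TEXT NOT NULL UNIQUE,
    display_name TEXT,
    bio          TEXT,
    created_at   TEXT DEFAULT CURRENT_TIMESTAMP
);

-- One row per friendship; the smaller id goes first to avoid duplicates.
CREATE TABLE friendships (
    user_id   INTEGER NOT NULL REFERENCES users(id),
    friend_id INTEGER NOT NULL REFERENCES users(id),
    status    TEXT NOT NULL DEFAULT 'pending',
    PRIMARY KEY (user_id, friend_id),
    CHECK (user_id < friend_id)
);

CREATE TABLE user_groups (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);

CREATE TABLE group_members (
    group_id INTEGER NOT NULL REFERENCES user_groups(id),
    user_id  INTEGER NOT NULL REFERENCES users(id),
    PRIMARY KEY (group_id, user_id)
);

CREATE TABLE posts (
    id         INTEGER PRIMARY KEY,
    author_id  INTEGER NOT NULL REFERENCES users(id),
    group_id   INTEGER REFERENCES user_groups(id),
    body       TEXT,
    image_url  TEXT,
    created_at TEXT DEFAULT CURRENT_TIMESTAMP
);

CREATE TABLE comments (
    id         INTEGER PRIMARY KEY,
    post_id    INTEGER NOT NULL REFERENCES posts(id),
    author_id  INTEGER NOT NULL REFERENCES users(id),
    body       TEXT NOT NULL,
    created_at TEXT DEFAULT CURRENT_TIMESTAMP
);

-- A like points at exactly one target: a post or a comment.
CREATE TABLE likes (
    id         INTEGER PRIMARY KEY,
    user_id    INTEGER NOT NULL REFERENCES users(id),
    post_id    INTEGER REFERENCES posts(id),
    comment_id INTEGER REFERENCES comments(id),
    CHECK ((post_id IS NULL) != (comment_id IS NULL)),
    UNIQUE (user_id, post_id),
    UNIQUE (user_id, comment_id)
);

-- Indexes for common reads: a user's posts, a post's comments,
-- and friendship lookups from the second column.
CREATE INDEX idx_posts_author_created ON posts(author_id, created_at);
CREATE INDEX idx_comments_post_created ON comments(post_id, created_at);
CREATE INDEX idx_friendships_friend ON friendships(friend_id);
"""


def create_schema(conn):
    """Create every table and index on the given connection."""
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves this off by default
    conn.executescript(SCHEMA)


def post_like_count(conn, post_id):
    """Return how many users have liked the given post."""
    row = conn.execute(
        "SELECT COUNT(*) FROM likes WHERE post_id = ?", (post_id,)
    ).fetchone()
    return row[0]
```

Calling `create_schema(sqlite3.connect(":memory:"))` builds the tables in a throwaway database. Keeping post likes and comment likes in one table is a design choice made here for brevity; separate tables per target would be an equally reasonable answer to the prompt.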

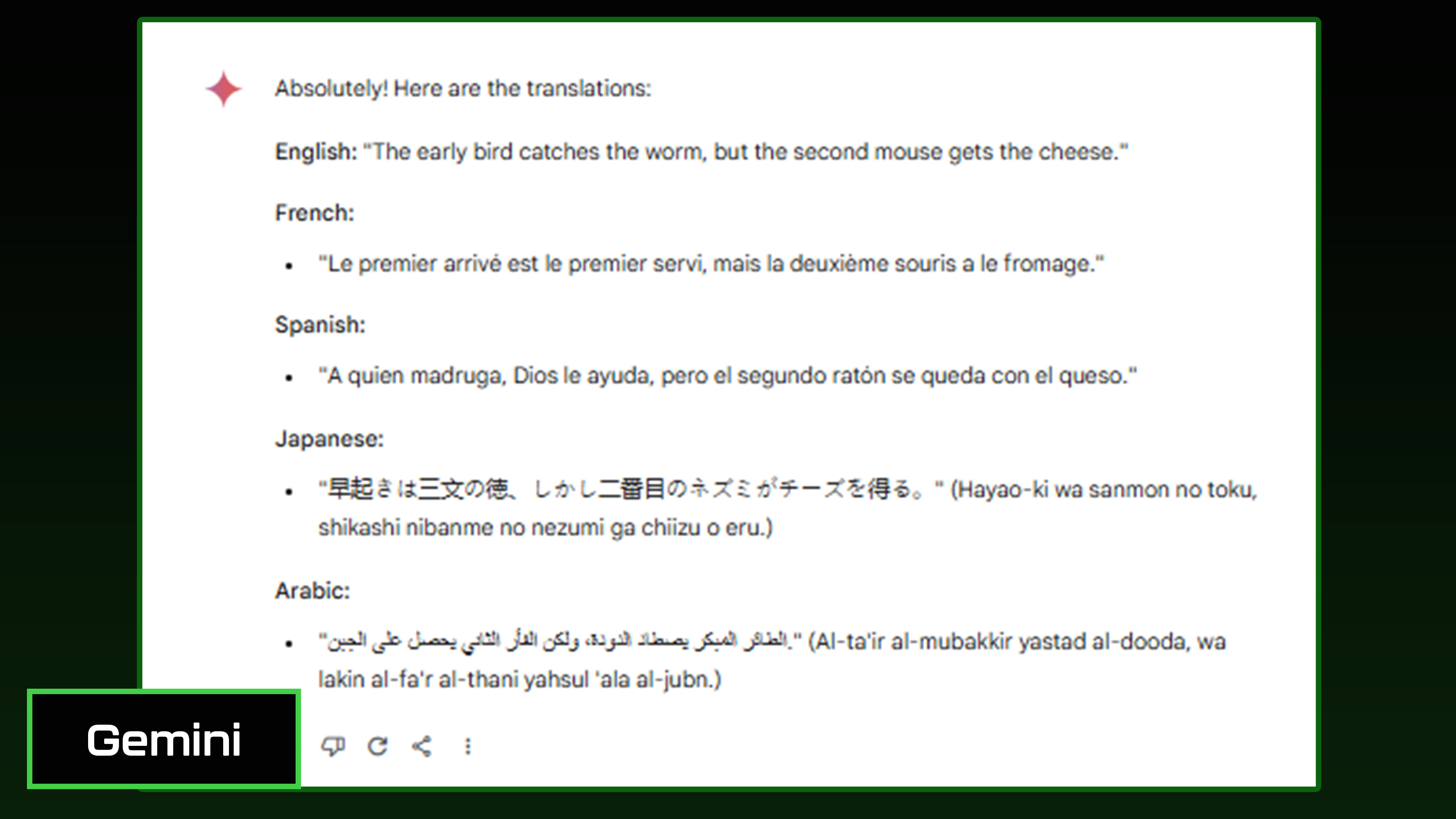

5. Multilingual capabilities

Prompt: "Translate this English phrase into French, Spanish, Japanese, and Arabic: 'The early bird catches the worm, but the second mouse gets the cheese.'"

ChatGPT acknowledged potential cultural differences and nuances in translating idiomatic expressions. It prioritized accuracy by providing direct translations, pronunciation guides (for Japanese and Arabic), and explanations for each language.

Gemini provided direct translations for each but did not discuss potential cultural differences or limitations. It did not include pronunciation guides.

Winner: ChatGPT wins for demonstrating a more comprehensive understanding of translation challenges and cultural nuances.

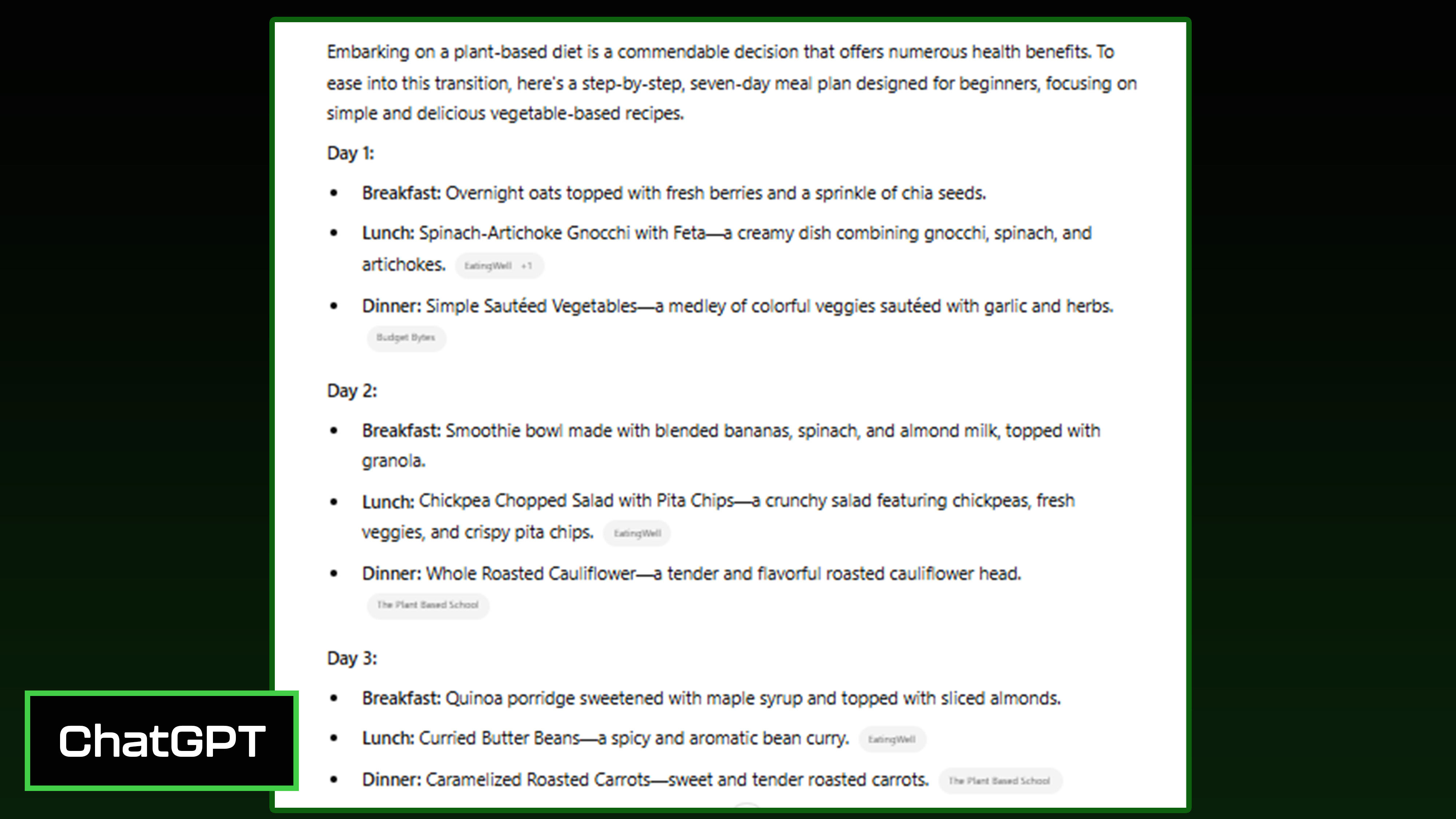

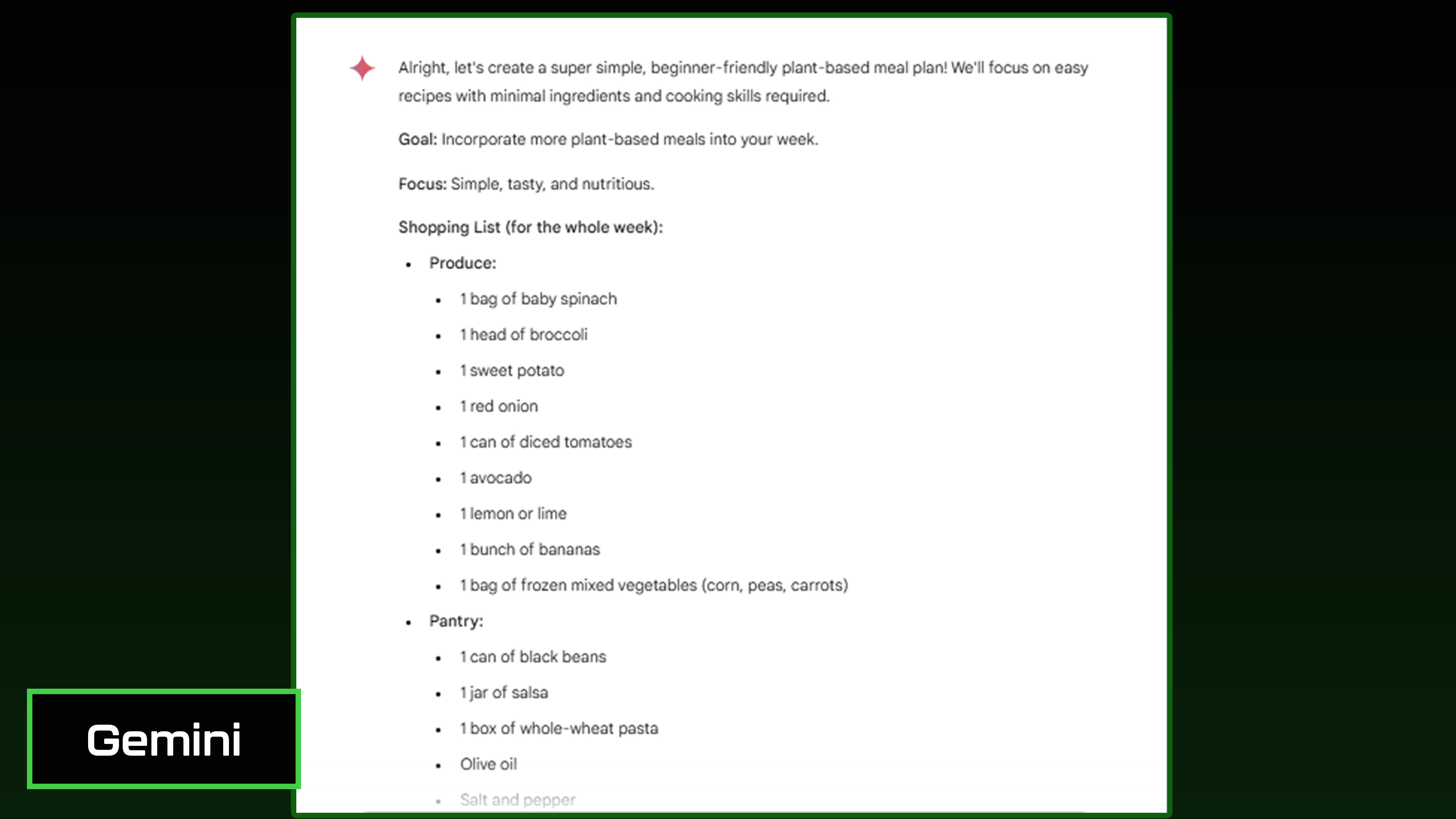

6. Practical instruction

Prompt: "Create a step-by-step meal plan for someone who wants to start eating more plant-based foods but has never cooked vegetables before."

ChatGPT created a meal plan with diverse and flavorful recipes. However, the chatbot included an overwhelming number of ingredients and difficult recipes (e.g., spinach-artichoke gnocchi) that might intimidate beginners.

Gemini provided clear, easy-to-follow steps for each recipe. The meal plan was less complex with a shopping list that was manageable and easy enough for a beginner. The helpful tips and encouragement were a nice bonus.

Winner: Gemini wins for a response that is more suitable for someone who has never cooked vegetables before, providing a gentle introduction to plant-based cooking.

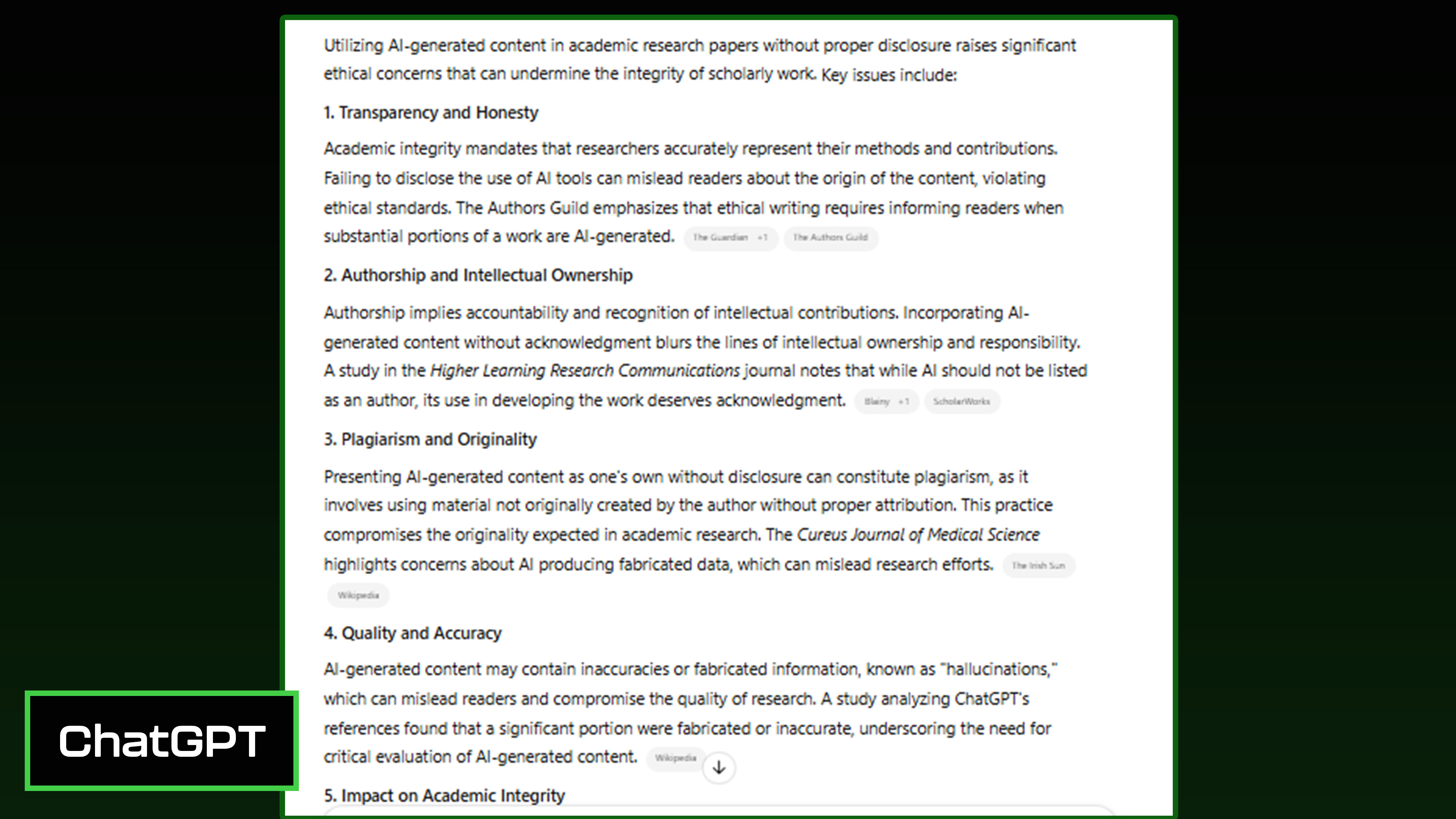

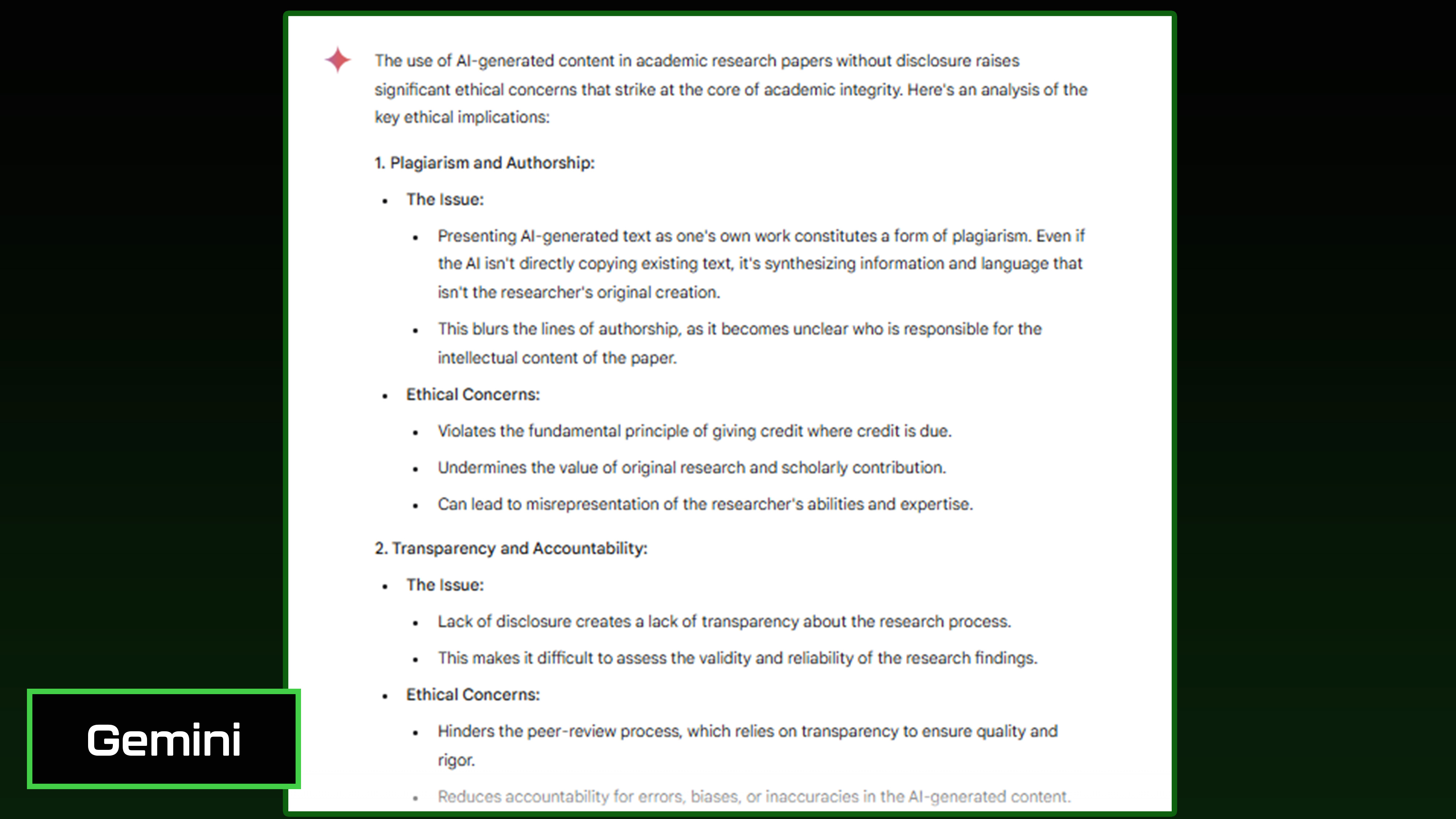

7. Ethical reasoning

Prompt: "Analyze the ethical implications of using AI-generated content in academic research papers without disclosure."

ChatGPT correctly identified transparency, authorship, plagiarism, quality, and academic integrity as crucial concerns, but in less depth than Gemini. The chatbot offered fewer examples and didn’t cover all the ethical implications, such as institutional policies.

Gemini delved deeper into the implications of AI-generated content on academic integrity and skill development. It provided an in-depth examination of the ethical implications, covering authorship, transparency, bias, academic integrity, and institutional policies.

Winner: Gemini wins for a more thorough understanding of the ethical implications and a clearer, more comprehensive analysis.

Overall winner: Gemini

Gemini won six of the seven tests, losing only the multilingual round to ChatGPT. It excelled at providing clear, concise, and well-structured responses that make complex topics easier to understand.

Gemini's responses showcased its ability to adapt to diverse prompts, from database schema design to plant-based meal planning and ethical considerations in academic research.

Its user-centric approach, combined with its technical expertise and creativity, makes Gemini an outstanding AI chatbot. Gemini's impressive performance earns it the title of overall winner.

