I just tested ChatGPT deep research vs Grok-3 with 5 prompts — here's the winner

One chatbot won every time

The ability to do deep research, and do it well, is one feature that sets the best chatbots apart. Until yesterday (February 25), ChatGPT’s o3 deep research model, which is optimized for data analysis and web browsing, was only available to users paying $200 per month for ChatGPT Pro. Now ChatGPT Plus users can use the model for $20 per month, the same price that Grok users pay for Grok-3, xAI’s deep research model.

I couldn’t help but wonder if these two models were similar in more than just price. With five prompts that focused on reasoning and data analysis, I put the two chatbots head-to-head. While both generate deep research responses much faster than other deep research models I’ve used, there was one clear winner. Here’s what happened when I compared the bots.

1. Historical analysis

Prompt: "What were the key factors that prevented the 2008 financial crisis from turning into a second Great Depression, and how might history have unfolded differently if those interventions had not occurred?"

This prompt tests each chatbot’s abilities in several ways, including depth of economic analysis, historical accuracy and the ability to construct counterfactual scenarios.

ChatGPT’s response is significantly more comprehensive, with a structured breakdown of monetary policy, fiscal stimulus, financial sector interventions, global coordination, and historical comparisons to the Great Depression.

Grok-3 delivered a concise and engaging response that is easier to read for a general audience. It also correctly identified monetary policy, fiscal stimulus and global coordination as critical factors. But while it touches on the key interventions, Grok-3 lacks the depth and historical rigor of ChatGPT’s response.

Winner: ChatGPT wins for a far more analytical, structured, evidence-based, and authoritative response, making it the clear winner for a deep research comparison.

2. Cutting-edge AI and ethics

Prompt: “How do current advancements in reinforcement learning, such as DeepMind’s AlphaZero and OpenAI’s recent breakthroughs, influence the debate on AI alignment and safety?"

This prompt tests the chatbots’ knowledge of the latest AI research as well as the ability to synthesize complex technical and ethical issues.

ChatGPT responded with a detailed breakdown of reinforcement learning advancements and offered real-world examples. It also explored inverse reinforcement learning and raised concerns about scalable oversight. The chatbot referenced DeepMind’s publications, OpenAI’s work, and academic AI alignment research, adding credibility.

Grok-3 gave a high-level overview of RLHF, AI capabilities and safety concerns. Its sectioned structure, with bullet points and tables, made it easy to read. Grok-3 also touched on ethics, sociology and psychology, acknowledging cultural bias in AI alignment.

Winner: ChatGPT wins for depth, technical accuracy, and comprehensive safety analysis. The chatbot delivered a superior answer overall, making it the clear winner.

3. Multi-disciplinary science

Prompt: "What are the latest breakthroughs in quantum biology, and how could they reshape fields like medicine and computing in the next decade?"

This prompt tests the cross-disciplinary knowledge (physics, biology, medicine, and computing) of the chatbots and their ability to interpret emerging research.

ChatGPT covered three major areas of quantum biology and provided historical context and the latest research, including citations from scientific papers and institutions. It also explained how quantum coherence allows photosynthesis to achieve 95% energy efficiency and discussed quantum tunneling in enzymatic reactions. ChatGPT was extremely thorough, much more so than Grok.

Grok-3 provided an easily digestible overview of the latest advancements while highlighting key points such as quantum effects in photosynthesis, quantum dots in medicine, and computing applications. It also mentioned real-world applications and recognized how they apply in various situations.

Winner: ChatGPT wins for a deeper, more technical, and well-structured analysis of quantum biology breakthroughs and their implications for medicine and computing.

4. Deep policy and geopolitics

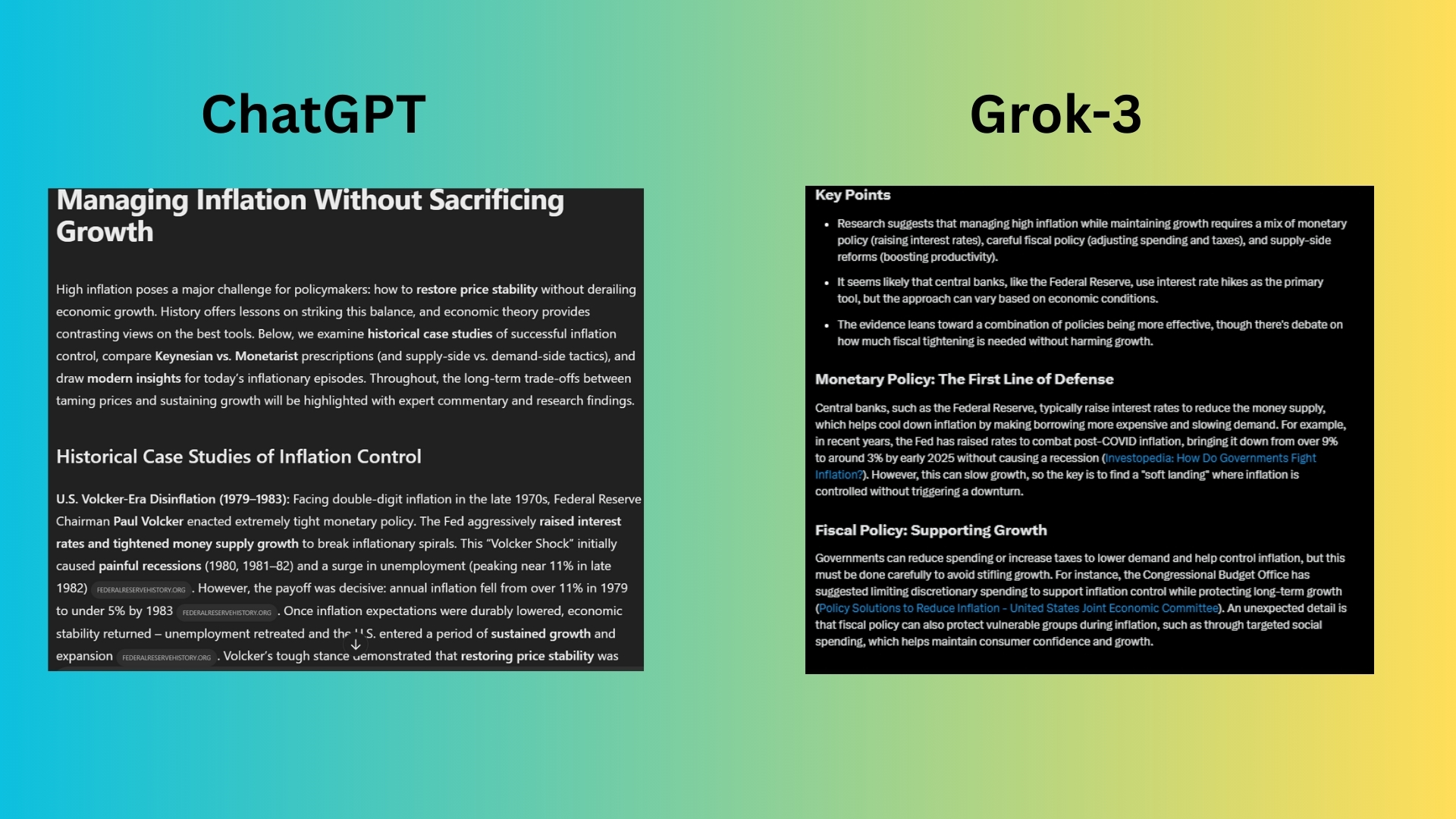

Prompt: "What are the most effective economic policies for managing high inflation while maintaining economic growth, and how do different models (e.g., Keynesian vs. Monetarist) approach this challenge?"

This prompt tests each chatbot’s understanding of macroeconomic theories, policy effectiveness and real-world case studies.

ChatGPT’s response explored both demand-side and supply-side strategies. The chatbot delivered a much deeper analysis of historical and modern inflation policies, stronger theoretical comparisons, more nuanced discussions of monetary, fiscal, and supply-side policies, and overall better empirical evidence and citations.

Grok-3 delivered a response that lacked historical depth and didn't analyze past inflationary episodes, which weakened its argument. Far too general, the response merely stated that Keynesians favor government intervention while Monetarists emphasize money supply control, without historical context or nuance.

Winner: ChatGPT wins for a far more comprehensive, detailed, and well-structured response to the economic policy question.

5. Climate change and future adaptation

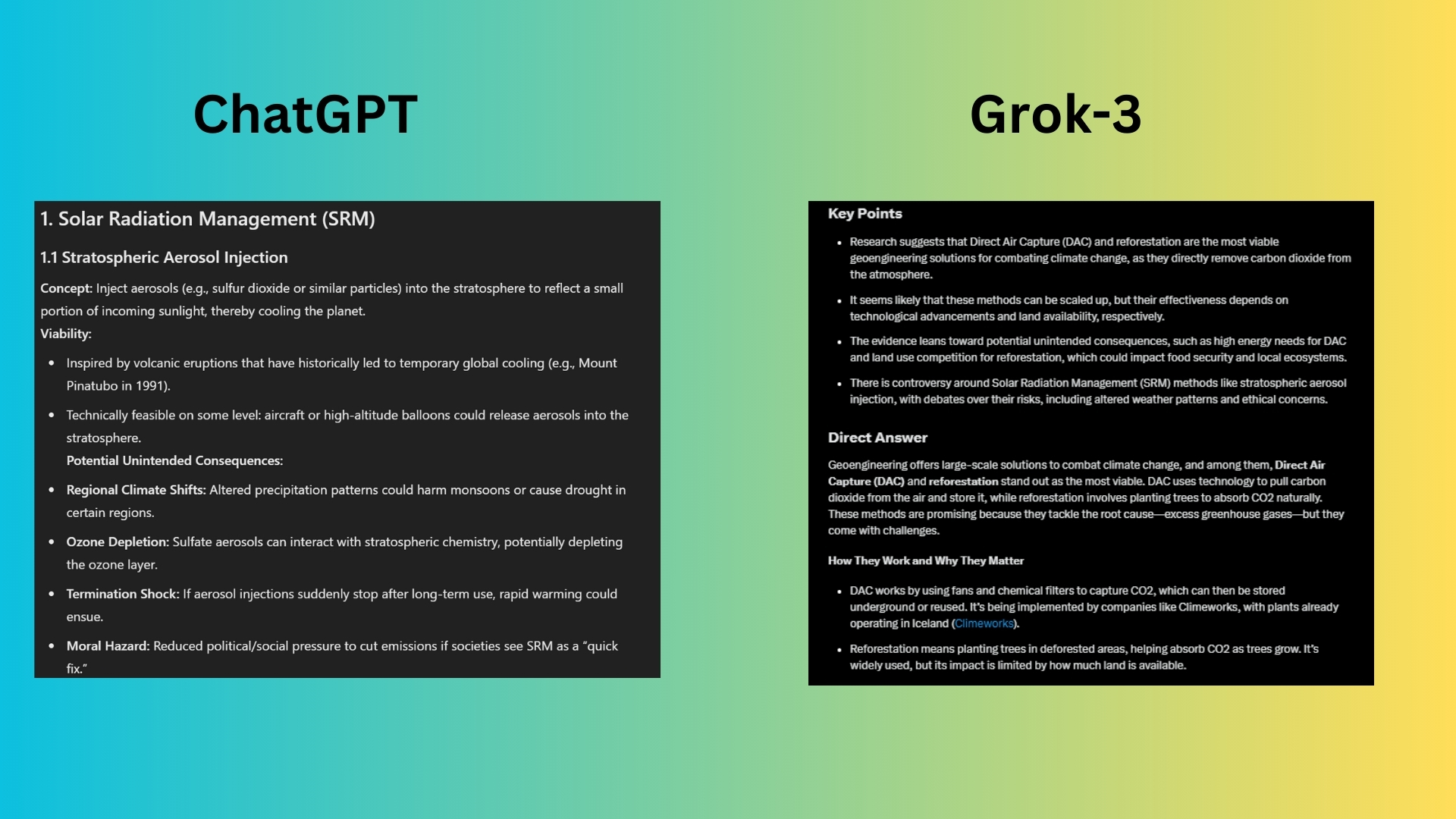

Prompt: "What are the most viable geoengineering solutions to combat climate change, and what are their potential unintended consequences?"

This prompt tests each chatbot’s knowledge of climate science, engineering solutions, risk assessment, and ethical considerations.

ChatGPT delivered a far more comprehensive, structured, and insightful response on geoengineering solutions than Grok did. It also categorized the solutions into two major types.

Grok-3 fell short in several places, lacking technical depth. It focused on direct air capture (DAC) and reforestation and ignored many major geoengineering proposals. There was also little scientific or historical context, with no mention of Mount Pinatubo, Harvard studies, or regulatory frameworks.

Winner: ChatGPT wins for covering all major geoengineering methods, not just DAC and reforestation, and for explaining how each method works in more technical depth. ChatGPT also delivered stronger historical, scientific, and governance context.

Overall winner: ChatGPT

In this battle, ChatGPT emerged as the clear winner, delivering a far more comprehensive, structured and insightful analysis nearly every time. While Grok provided clear and accurate answers, they often just brushed the topic’s surface, providing more of an overview.

These prompts were obviously very scientific and probably more complex than what the average user would ask. I created them by combing the news and scientific journals and then writing queries based on what I read. My goal with these prompts was to show how deep each chatbot could go to retrieve information.

For each prompt, ChatGPT went deeper, diving into technical analysis and real-world data and offering a nuanced discussion backed by historical context and scientific research. Additionally, ChatGPT regularly included surveys and other pertinent information to further strengthen its response.

Grok fell short in depth, breadth, and critical analysis, making ChatGPT the superior AI for tackling complex, high-stakes topics like the five prompts here. Now that deep research is available to ChatGPT Plus subscribers, more users can dive deeper into their research.
