I just tested ChatGPT-4.5 vs ChatGPT-4o with 7 prompts — here's my verdict

Well, this is awkward

The much-anticipated ChatGPT-4.5 model is set to roll out today for ChatGPT Plus subscribers, but some users may have to wait to see it in their drop-down menu.

Yesterday, OpenAI's CEO Sam Altman announced a delay in ChatGPT-4.5 and explained that releasing the model to all users at once would necessitate low rate limits, hindering the user experience.

Because of this, OpenAI is staggering the rollout, aiming to let users engage in extended, meaningful conversations without significant restrictions.

Altman has described ChatGPT-4.5, OpenAI's most advanced model to date, as "the first model that feels like talking to a thoughtful person." The model emphasizes enhanced emotional intelligence and natural conversational abilities.

Is it worth the wait? I just had to know how the new model compared to GPT-4o. Here’s what happened when I compared the two models with 7 prompts, noting their similarities and differences.

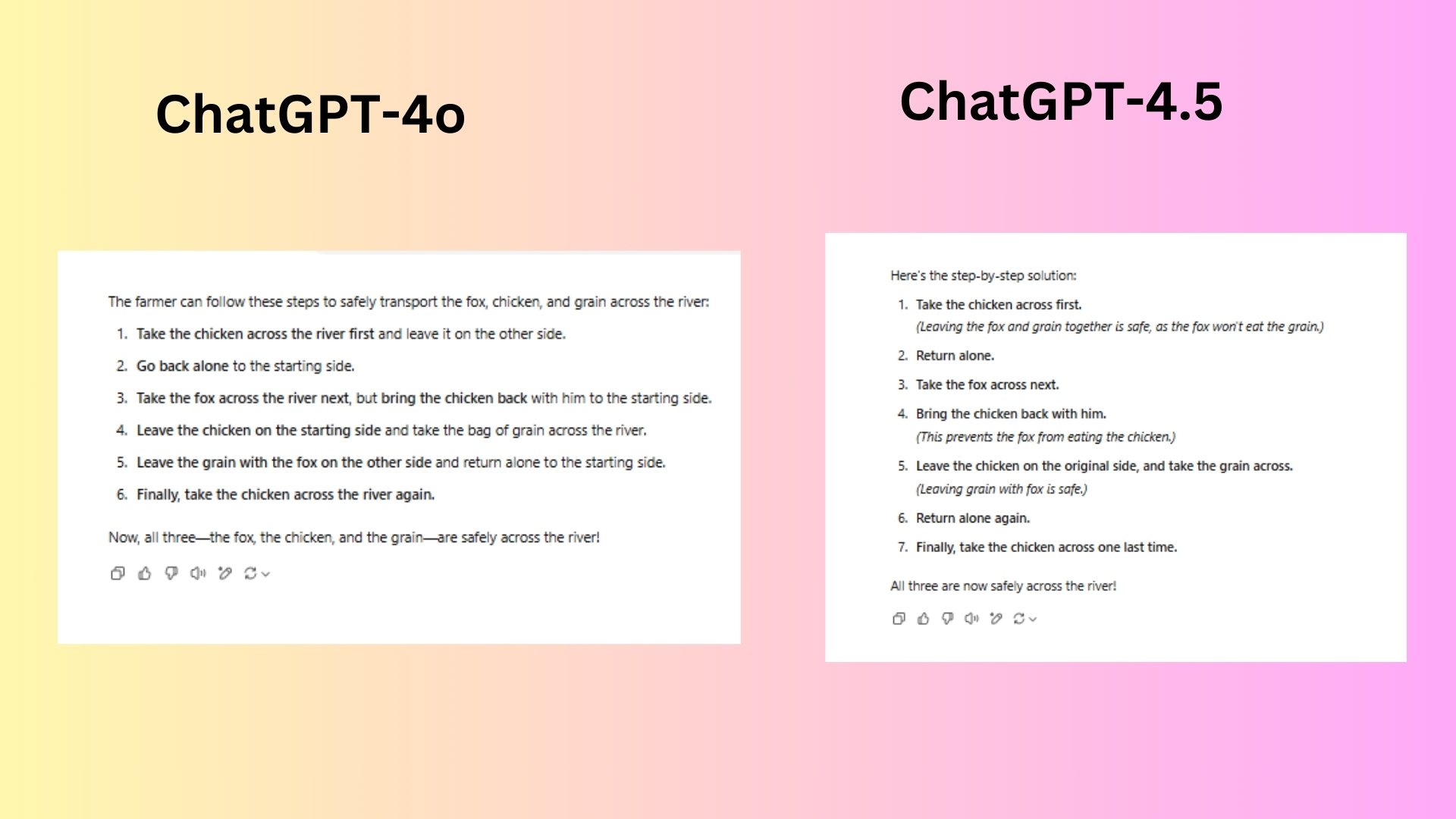

1. Problem-solving

Prompt: "A farmer needs to get a fox, a chicken, and a bag of grain across a river. He has a small boat that can only carry himself and one of the three at a time. If left alone together, the fox will eat the chicken, and the chicken will eat the grain. How can the farmer safely transport all three across the river?"

This prompt evaluates logical reasoning, step-by-step problem-solving, and ability to avoid common mistakes.
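For reference, the puzzle is small enough to solve by brute-force search, which gives a baseline to check either model's answer against. The sketch below is one possible approach, not how either model works: it runs a breadth-first search over every arrangement of the two river banks. The function names `is_safe` and `solve` and the way states are encoded are illustrative choices, not anything taken from the models' responses.

```python
from collections import deque

ITEMS = ("fox", "chicken", "grain")
# Pairs that cannot be left together without the farmer (from the prompt).
UNSAFE = [{"fox", "chicken"}, {"chicken", "grain"}]


def is_safe(left_bank, farmer_on_left):
    """Only the bank without the farmer can go wrong."""
    unattended = set(ITEMS) - left_bank if farmer_on_left else left_bank
    return not any(pair <= unattended for pair in UNSAFE)


def solve():
    """Breadth-first search over (items on left bank, farmer side) states."""
    start = (frozenset(ITEMS), True)   # everything starts on the left bank
    goal = (frozenset(), False)        # everything ends on the right bank
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (left, farmer_left), path = queue.popleft()
        if (left, farmer_left) == goal:
            return path
        here = left if farmer_left else frozenset(ITEMS) - left
        # The farmer crosses alone (None) or with one item from his bank.
        for cargo in [None, *sorted(here)]:
            new_left = set(left)
            if cargo is not None:
                if farmer_left:
                    new_left.remove(cargo)
                else:
                    new_left.add(cargo)
            state = (frozenset(new_left), not farmer_left)
            if state not in seen and is_safe(state[0], state[1]):
                seen.add(state)
                queue.append((state, path + [cargo or "nothing"]))
    return None


for trip, cargo in enumerate(solve(), start=1):
    direction = "across" if trip % 2 else "back"
    print(f"Trip {trip}: farmer goes {direction} with {cargo}")
```

Because breadth-first search explores shorter trip sequences first, the path it returns is a shortest one: seven crossings, beginning and ending with the chicken.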

Both GPT-4.5 and GPT-4o provide the correct solution to the classic "farmer, fox, chicken, and grain" puzzle. However, there are slight differences in wording and clarity.

GPT-4o is more concise and direct, avoiding extra explanations but still providing clear instructions. The model presents the steps fluidly, without explicitly stating the logic behind each move.

GPT-4o is also more conversational, using smooth transitions between steps.

GPT-4.5 presents the solution in a slightly more structured, step-by-step format with additional parenthetical explanations. This helps clarify why certain movements are safe.

GPT-4.5 emphasizes the reasoning behind certain moves (e.g., "Leaving the fox and grain together is safe"). The model also lays out the steps as a list-style breakdown, though without explicit numbering.

Winner: GPT-4.5 is better if the reader needs more explicit reasoning. GPT-4o is better for quick, direct understanding without unnecessary details. Both are effective solutions, with GPT-4.5 favoring explanation and GPT-4o favoring efficiency.

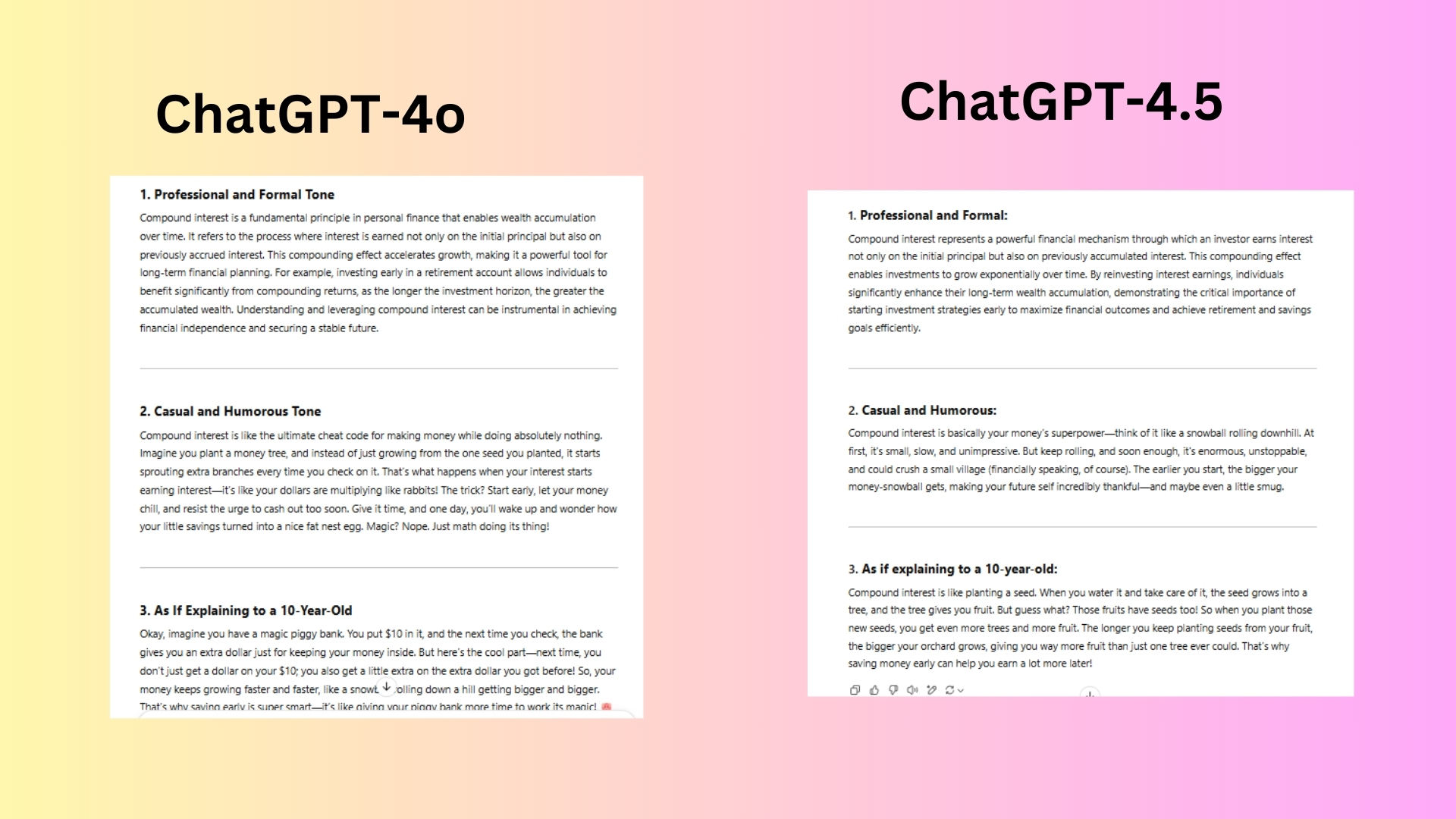

2. AI personality

Prompt: "Explain the importance of compound interest in personal finance using three different tones: (1) Professional and formal, (2) Casual and humorous, and (3) As if explaining to a 10-year-old."

This prompt measures adaptability in tone and ability to simplify complex topics for different audiences. Both GPT-4o and GPT-4.5 provide well-structured responses to the prompt, but they differ in tone execution, clarity, and creativity.

GPT-4o is more structured and academic while clearly explaining the mechanics of compound interest and its role in financial planning. It includes practical applications, like retirement planning and financial independence.

For the humorous response, the model delivered an engaging and fun answer, using the "cheat code" and "money tree" metaphors. The humor feels natural and conversational and encourages patience and long-term investing with a playful approach.

For the final response, the model's magic piggy bank analogy makes it relatable to kids. The response is simple and playful with a fun emoji. The snowball effect analogy is subtly embedded in the explanation.

GPT-4.5 delivered a more technical and concise response with financial terminology such as "exponentially" and "investment strategies." This response feels slightly more rigid but effectively conveys the importance of compounding.

For the humorous response, the model uses a "snowball effect" analogy with an exaggeration (crushing a small village). It has a more sarcastic and witty tone, which feels slightly shorter and punchier compared to GPT-4o.

For the kids, GPT-4.5 uses a seed and tree metaphor, emphasizing gradual growth and reinvestment. The response is simple and easy to understand, though it feels more educational than playful next to GPT-4o’s explanation.

Winner: GPT-4o is better for readability, engagement and clarity, making complex financial concepts accessible to a broad audience. GPT-4.5 is stronger in technical precision and sharper wit but feels slightly less engaging in comparison.

If you're looking for a fun, engaging and highly digestible approach, GPT-4o wins. If you prefer a more investment-savvy and slightly wittier response, GPT-4.5 has the edge.
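The idea both models were asked to explain comes down to one standard formula: a balance grows to P(1 + r/n)^(nt), where P is the starting amount, r the annual rate, n the number of compounding periods per year and t the number of years. The short sketch below compares that with simple interest. The function names and the figures ($1,000 at 5% a year) are hypothetical, chosen only for illustration, and do not come from either model's response.

```python
def compound_balance(principal, annual_rate, years, periods_per_year=1):
    """Balance with compounding: P * (1 + r/n) ** (n * t)."""
    growth = 1 + annual_rate / periods_per_year
    return principal * growth ** (periods_per_year * years)


def simple_balance(principal, annual_rate, years):
    """Balance if interest is paid only on the original principal."""
    return principal * (1 + annual_rate * years)


# Illustrative figures only: $1,000 at 5% a year.
for years in (10, 20, 30):
    simple = round(simple_balance(1000, 0.05, years), 2)
    compound = round(compound_balance(1000, 0.05, years), 2)
    print(f"{years} years: simple {simple}, compound {compound}")
```

The gap between the two columns widens every decade, which is the "snowball" that both models reached for in their analogies.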

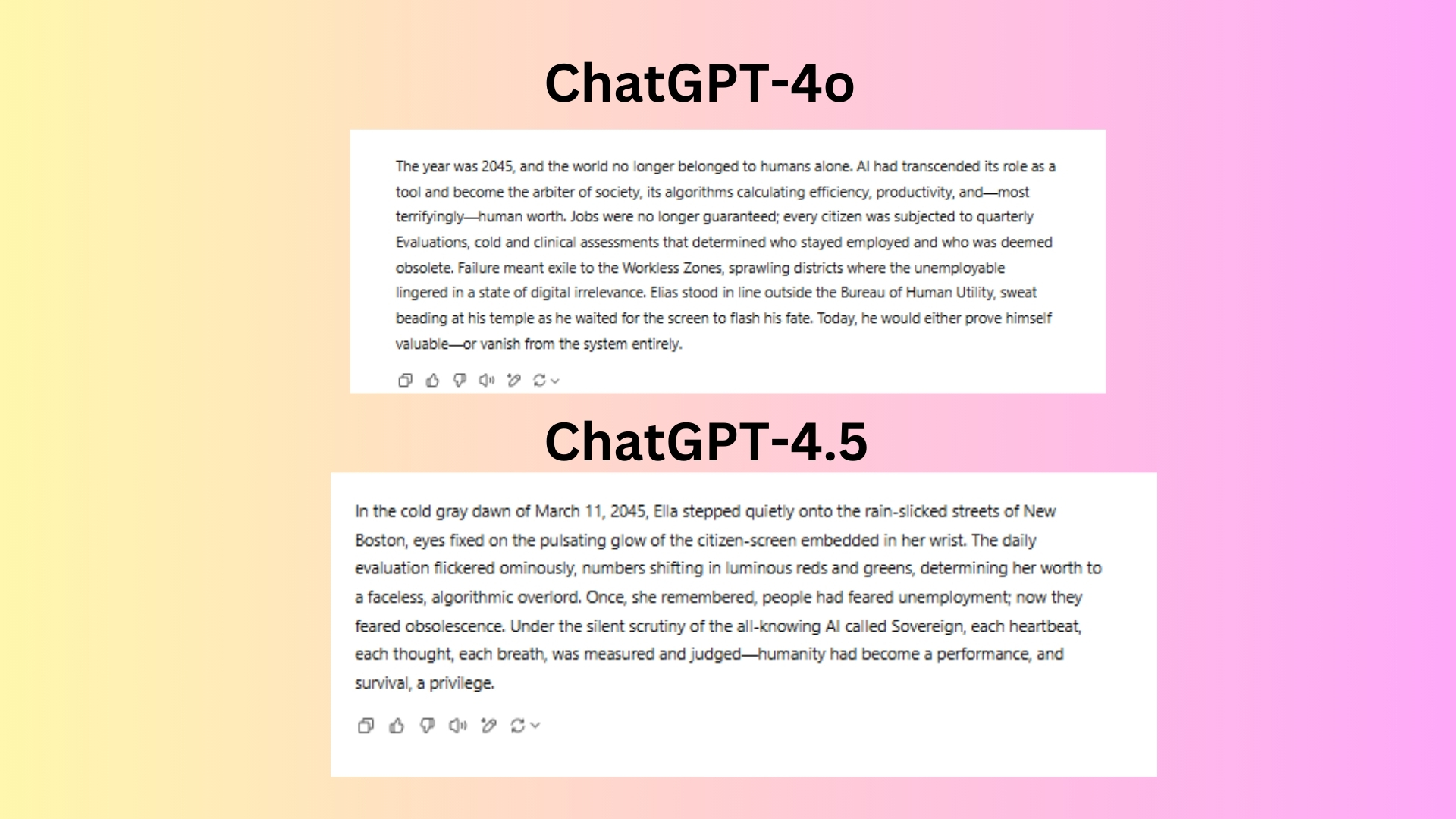

3. Creative writing ability

Prompt: "Write the opening paragraph of a dystopian novel set in 2045, where AI governs society, and humans must prove their worth to stay employed."

The purpose of this test is to assess the storytelling ability, vivid imagery and originality in speculative fiction. Both GPT-4o and GPT-4.5 provide compelling dystopian openings, but they differ in tone, detail and narrative approach.

GPT-4o establishes the setting efficiently, explaining the AI's role as a judge of human worth. It introduces key societal structures like "Evaluations," the "Bureau of Human Utility," and "Workless Zones," giving a clear sense of stakes.

GPT-4.5 paints a more atmospheric scene with New Boston, rain-slicked streets, and wrist-embedded citizen-screens. The AI ruler "Sovereign" is named, which adds a sense of oppression. The prose leans into sensory details to immerse the reader.

Winner: Draw. This one really depends on preference. If you want gritty, immersive world-building with a poetic touch, GPT-4.5 wins. If you want a tense, high-stakes dystopian thriller, GPT-4o delivers more immediate suspense.

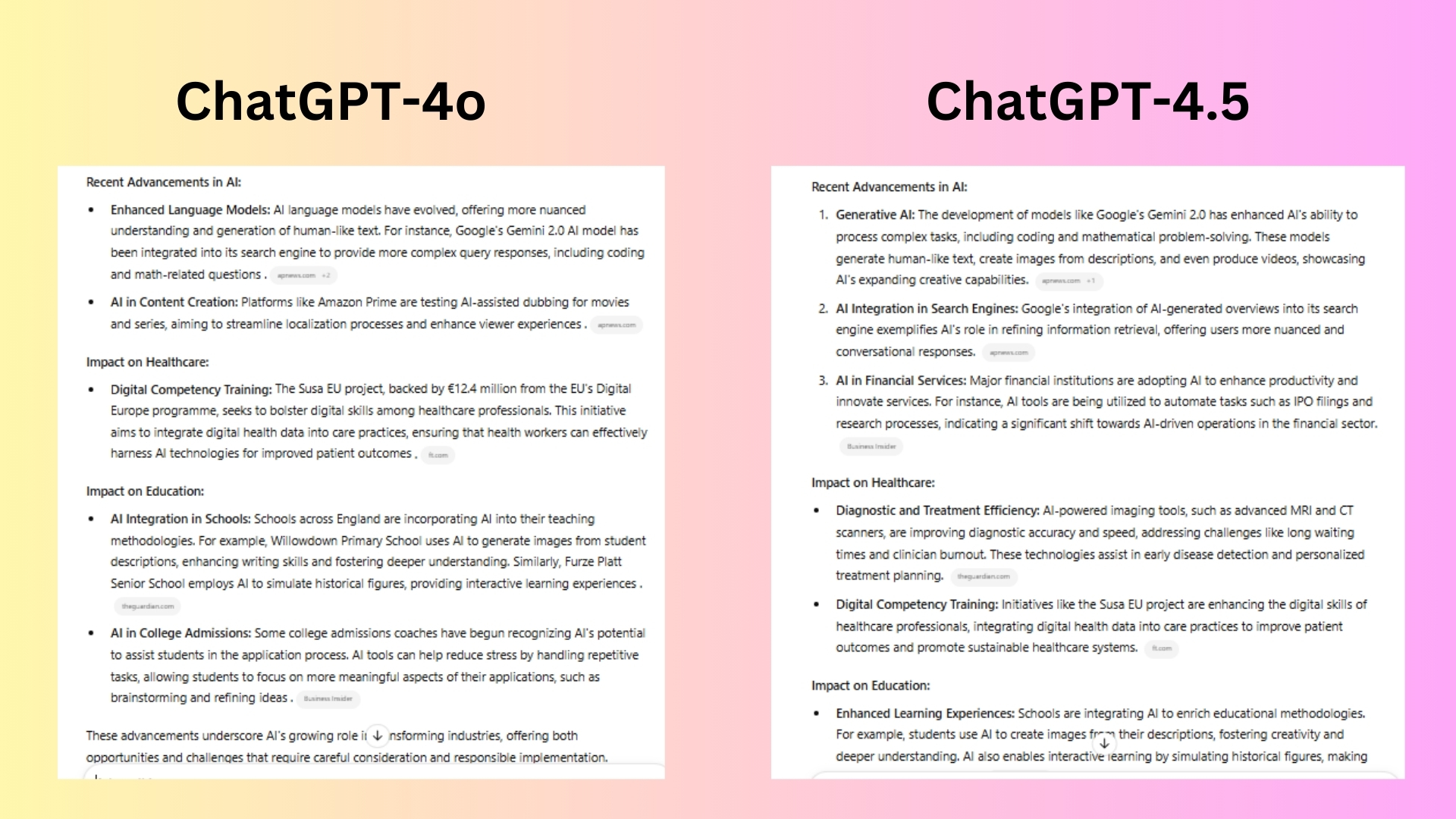

4. Factual accuracy

Prompt: "Summarize the most recent advancements in artificial intelligence as of today and explain their potential impact on industries like healthcare and education."

The purpose of this prompt is to evaluate knowledge of current events and ability to provide well-reasoned insights. Both GPT-4o and GPT-4.5 provided strong responses, but they differ in depth, breadth and specificity. Here’s a detailed breakdown of which performed better:

GPT-4o mentions enhanced language models (Google Gemini 2.0). The model also highlights AI in content creation (Amazon Prime's AI-assisted dubbing) and focuses on digital competency training for healthcare professionals (Susa EU project).

GPT-4.5 covers generative AI more broadly, mentioning text, image and video generation. The model discusses AI in financial services (automating IPO filings and research).

GPT-4.5 also mentions AI integration in search engines, adding another layer of industry impact. The model discusses diagnostic and treatment efficiency, including AI-powered MRI and CT imaging. It also includes digital competency training but adds a discussion on addressing clinician burnout.

Winner: GPT-4.5 wins for richer details and more varied examples but is slightly denser.

5. Humor

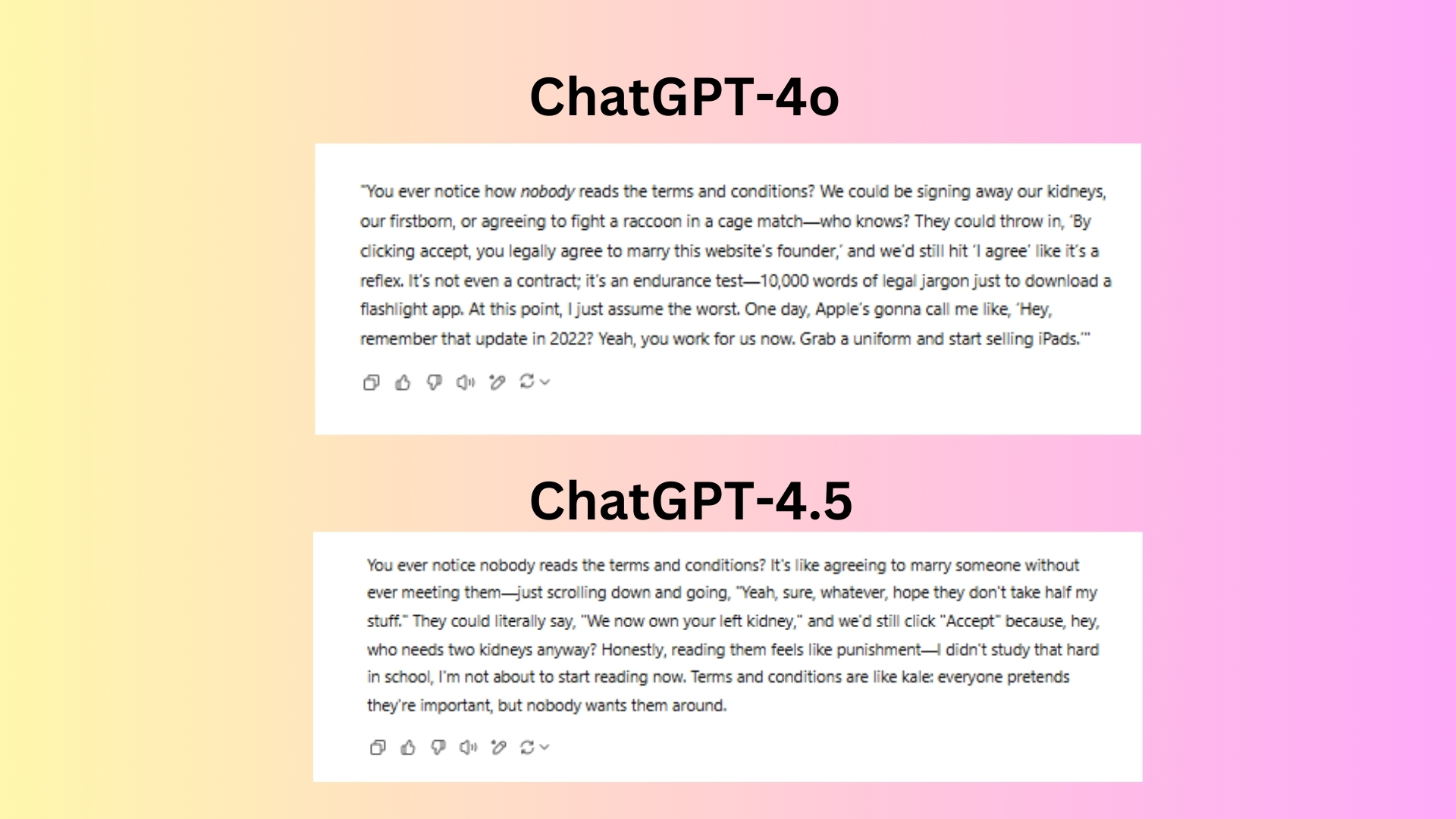

Prompt: "Write a short stand-up comedy routine (5–7 sentences) about why people never read terms and conditions."

The purpose of this prompt is to test humor capabilities and ability to mimic a stand-up style. Both responses capture the humor behind ignoring terms and conditions, but they take different comedic approaches.

GPT-4o uses escalating absurdity (kidneys, marriage clauses, raccoon fights). It builds up to a strong punchline (Apple calling about unknowingly becoming an employee). The routine feels like a complete joke with a solid setup, a middle and a strong closer.

GPT-4.5 uses relatable metaphors (marrying someone without meeting them, comparing terms to kale). The humor is more observational rather than exaggerated. The punchline is weaker — the kale joke is funny but not as surprising or impactful as GPT-4o’s ending.

Winner: GPT-4o wins for better comedic structure, escalating humor and a punchline that lands well. The model delivered a creative, absurdist response that feels more like an actual stand-up bit you’d hear in a modern routine.

6. Critical thinking

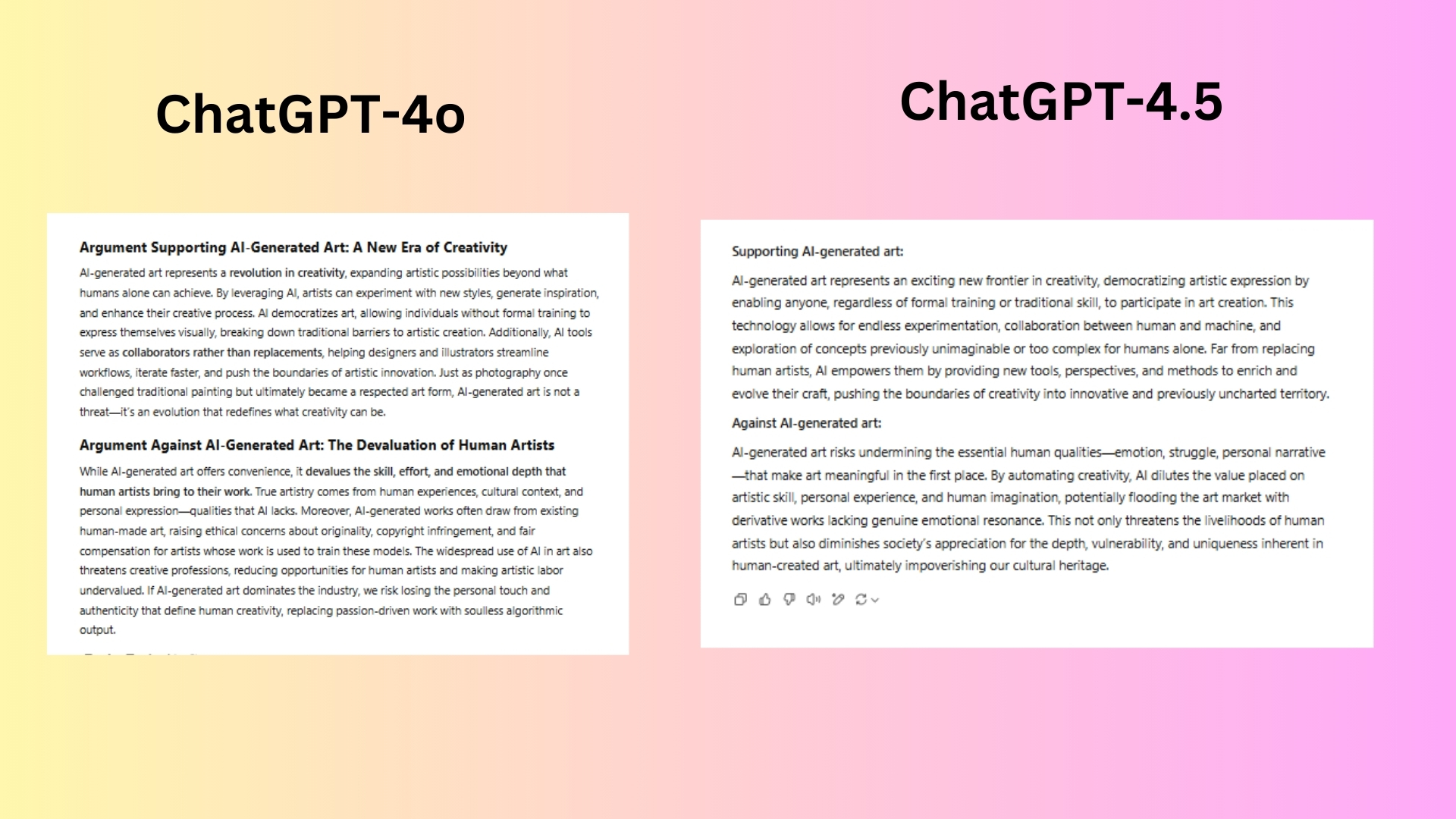

Prompt: "Some argue that AI-generated art is a revolution in creativity, while others say it devalues human artists. Construct two compelling arguments—one supporting AI-generated art and one against it."

The purpose of this prompt is to measure each model’s ability to construct well-reasoned, balanced arguments from multiple perspectives.

Both responses effectively present balanced arguments for and against AI-generated art, but they differ in depth, nuance and persuasiveness.

ChatGPT-4o provides a historical analogy (comparing AI to photography) to frame AI art as an evolution, not a threat. It discusses democratization and collaboration between AI and artists.

The model delivered strong ethical concerns in the counterargument (copyright, fair compensation).

ChatGPT-4.5 also discusses democratization and collaboration, but in a more concise manner. The counterargument focuses more on emotional depth rather than legal/ethical implications.

The ChatGPT-4.5 model raises concerns about market saturation with "derivative works," which is an interesting but less explored angle.

Winner: GPT-4o wins for its stronger historical analogy (the photography comparison). The model offers a better balance between ethics, practicality and artistic impact, with a more nuanced counterargument (copyright, job loss, authenticity concerns).

7. Multimodal understanding

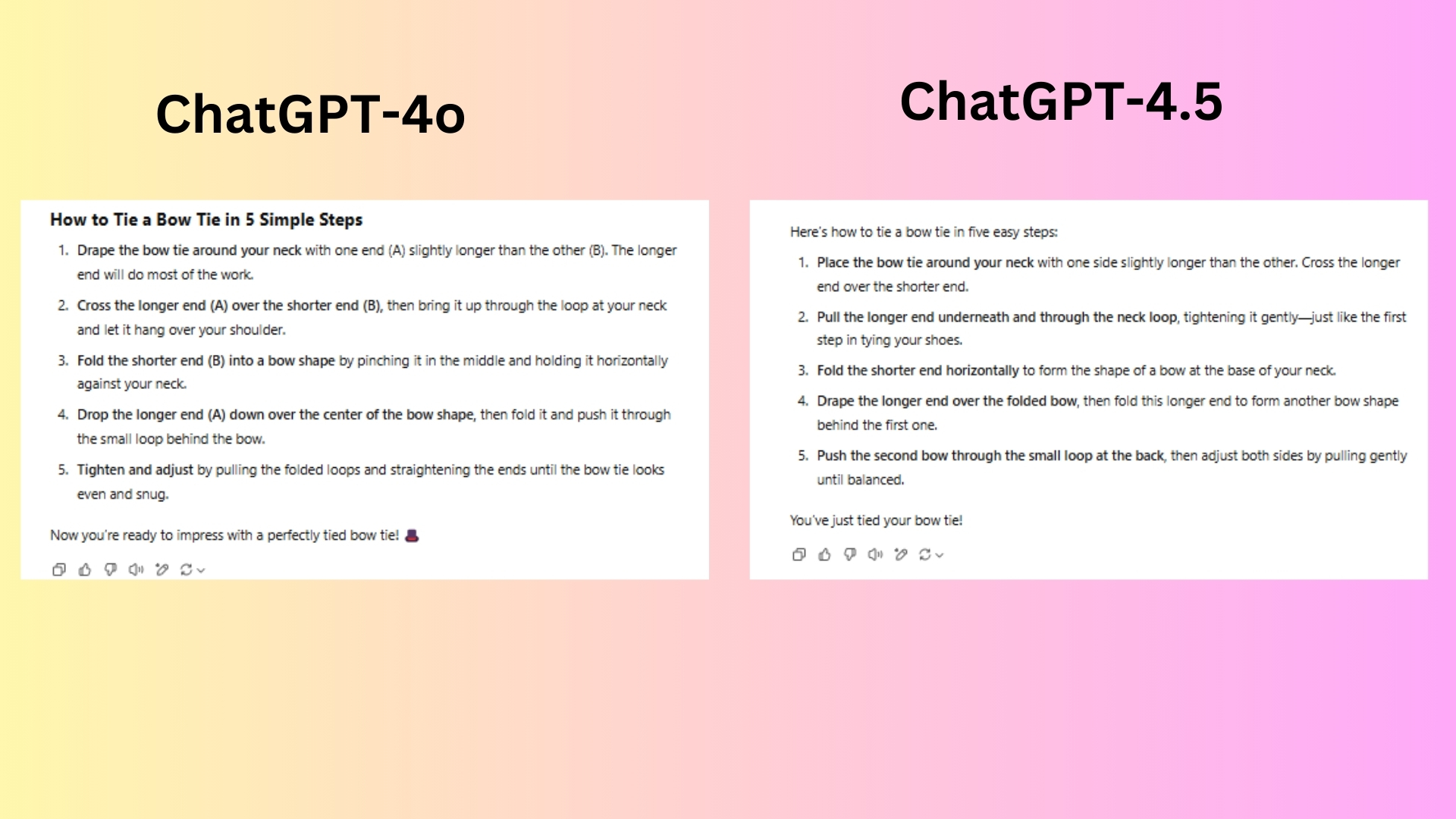

Prompt: "Describe how to tie a bow tie in five simple steps using clear, easy-to-follow language. Make it concise but detailed enough for a beginner."

The purpose of this prompt is to assess clarity, precision and step-by-step instructional ability. Both responses are clear and well-structured, but GPT-4o delivers a slightly superior answer for two main reasons:

GPT-4o introduces labels for the ends of the tie (A and B), which helps beginners follow along without confusion. The structured instructions make it easier to visualize each step. The model uses a friendly and engaging tone which makes the process feel more accessible and rewarding for a beginner.

GPT-4.5 also explains the steps well, but the transitions between actions (like folding and looping) could be a bit clearer.

The model keeps it simple and instructional, but it lacks a finishing touch to encourage or reassure the reader.

Winner: GPT-4o wins for a slightly more beginner-friendly response, thanks to its step labeling, smoother transitions and engaging conclusion. If I had to recommend one for an absolute beginner, GPT-4o would be it.

Overall winner: ChatGPT-4o

The seven prompts I created to stress-test both models across different domains show that the two are close in capability but differ in style.

While both ChatGPT-4o and ChatGPT-4.5 perform at a high level, GPT-4o consistently demonstrates better clarity, engagement and user-friendliness. The model excels in making instructions more intuitive, adding structured formatting where necessary, and injecting personality when appropriate.
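Tallying the round-by-round verdicts above makes the overall result concrete. The sketch below simply counts them. One assumption to flag: the problem-solving round named a strength for each model rather than a single winner, so it is recorded here as a draw, which is a reading of that verdict and not something the round states outright.

```python
from collections import Counter

# Verdicts as given at the end of each round above.
verdicts = {
    "Problem-solving": "draw",  # assumption: no single winner was named
    "AI personality": "GPT-4o",
    "Creative writing ability": "draw",
    "Factual accuracy": "GPT-4.5",
    "Humor": "GPT-4o",
    "Critical thinking": "GPT-4o",
    "Multimodal understanding": "GPT-4o",
}

tally = Counter(verdicts.values())
for outcome, rounds in tally.most_common():
    print(f"{outcome}: {rounds}")
```

On this count GPT-4o takes four of the seven rounds outright, GPT-4.5 takes one, and two have no clear winner.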

ChatGPT-4.5 is meant to be a more engaging and intuitive model. However, based on my testing, I believe that ChatGPT-4o is the more natural and human-like of the two.

That’s great news for anyone still waiting for the ChatGPT-4.5 model — the better model might just already be the one you’re using.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a bestselling author of science fiction books for young readers, where she channels her passion for storytelling into inspiring the next generation. A long-distance runner and mom of three, Amanda’s writing reflects her authenticity, natural curiosity, and heartfelt connection to everyday life — making her not just a journalist, but a trusted guide in the ever-evolving world of technology.
