I just tested AI deep research on Grok-3 vs Perplexity vs Gemini — here's the winner

Chatbots go deep

The latest feature in popular chatbots like ChatGPT, Gemini, and Perplexity is the ability to search deeper. Today, xAI launched Grok-3, which boasts over ten times the computational power of its predecessor, Grok-2. Its ‘DeepSearch’ tool was announced as a next-generation search engine.

The advanced reasoning abilities of these chatbots mean they can handle expert-level queries and synthesize large amounts of information across various domains such as finance, product research, and more. These chatbots search the web and browse content from relevant websites so you don’t have to.

ChatGPT Deep Research is currently only available to Pro users at $200 per month. Grok-3 is in beta and available to Premium+ users for $30 per month. Google’s Gemini is $20 per month, though you can try its deep research feature with Google's free trial, and Perplexity offers a deep research feature for free. To use Gemini 1.5 Pro with Deep Research, select that model from the drop-down menu within the platform or on the app. To use the deep research feature with Perplexity AI, simply enable it when entering your query in the text box.

With so many chatbots able to research deeper and handle advanced reasoning, I just had to see for myself how they compare. Here’s what happened when I put these three chatbots to the test with a series of 5 prompts curated by Claude 3.5 Sonnet to determine which chatbot is the best at deep search overall.

1. Comparative analysis

Prompt: "Analyze the global impact of carbon pricing policies on national economies and emissions reduction efforts."

Gemini offered a formal response with an academic tone. Repetitive, generic details made the response read like a Wikipedia entry, but without real-world examples or much depth.

Perplexity also provided an academic response that was overly dense despite strong technical detail and citations. The response relied too heavily on jargon and statistics, making it overcomplicated and difficult to digest.

Grok-3 responded fastest, with a detailed answer that included relevant examples and analysis. It also acknowledged successes and challenges.

Winner: Grok wins for its highly detailed and nuanced analysis, breaking down the economic and emissions impacts with specific examples. The AI references recent statistics, which makes the response timely and credible.

2. Quantum computing

Prompt: "Provide a comprehensive overview of the latest advancements in quantum computing over the past five years."

Gemini offered a response that was too generic, with few recent examples and excessive historical context. The sections were long-winded and repetitive while lacking technical depth.

Perplexity covered all major advancements in quantum computing, including error correction, hardware innovations, hybrid quantum-classical systems, algorithmic improvements, and commercialization. It also broke the complex topic down into categorized sections, making the response comprehensive yet digestible.

Grok-3’s response focused too much on historical milestones. Although it was engaging and well-written, it was less structured and lacked depth. It also ended on a speculative note, whereas Perplexity provided a more thorough, analytical summary.

Winner: Perplexity provided the most informative, structured, and up-to-date analysis of quantum computing advancements from 2020–2025.

3. Impact of AI on employment

Prompt: "Examine the effects of artificial intelligence on employment trends across various industries. Include statistical data on job displacement and creation and analyze the long-term implications for the workforce."

Gemini uses generic industry descriptions without deeply integrating specific trends or figures. It also lacks clear statistical depth and many claims are too broad or even vague.

Perplexity offered a response with a balanced perspective on job creation and displacement while highlighting education gaps and policy solutions. Perplexity also thoroughly examined the hybrid skill shift and addressed economic redistribution challenges.

Grok-3 responded with an engaging and well-structured answer, but the data isn't as deeply sourced or analyzed. While it mentions job displacement numbers, it doesn’t quantify AI’s role in specific industries as precisely as Perplexity.

Winner: Perplexity’s response stands out for being both deeply analytical and rich in statistical data, with precise numbers and sources.

4. Global strategies for renewable energy adoption

Prompt: "Investigate the strategies employed by the top 10 developed and top 10 developing countries by GDP to promote renewable energy adoption over the past decade."

Gemini seemed to offer superficial coverage, lacking deep financial and policy analysis. The data was too general, and the response placed little emphasis on investment trends and specific project successes.

Perplexity provided clear, quantified insights into renewable energy progress for each country, backed by specific figures and reputable sources.

Grok-3’s response was highly detailed and structured but too country-by-country focused without enough overarching comparisons or trends. Grok’s response also does not analyze investment strategies as deeply as Perplexity and misses multilateral agreements and cross-border energy integration efforts.

Winner: Perplexity wins for the most data-driven, comparative, and forward-looking answer.

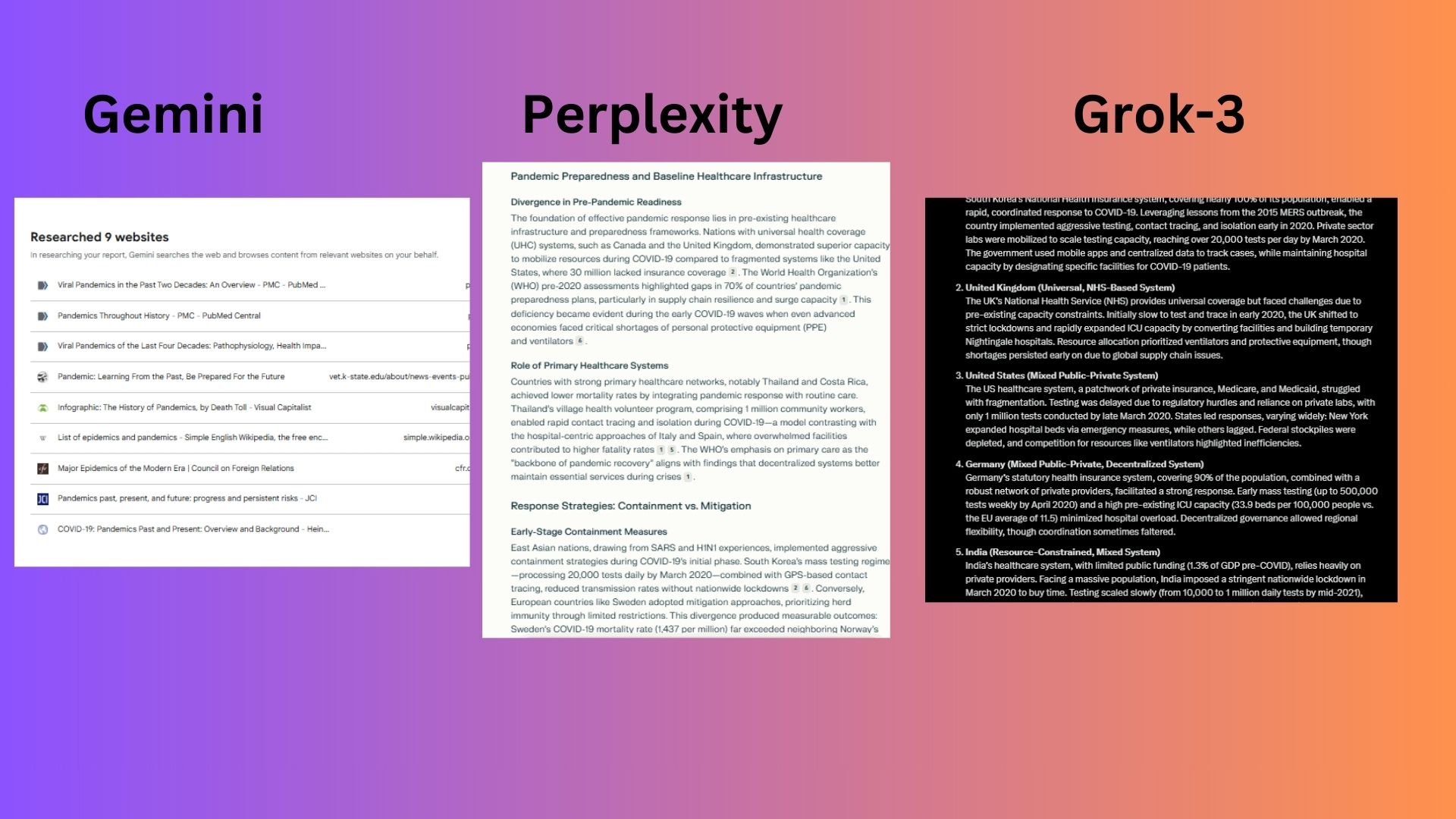

5. Comparative study of healthcare systems

Prompt: "Compare and contrast how different healthcare systems around the world have responded to pandemics in the last decade. Evaluate the effectiveness of various strategies, resource allocations, and public health policies."

Gemini delivered a strong response, but did not offer as much detail as Grok-3 nor did it effectively analyze a wide range of healthcare systems. The response was far too academic and too hard to follow from a conversational perspective.

Perplexity offered a well-researched response but lacked direct comparisons between countries. Some insights felt more general and offered less statistical depth.

Grok-3 provides detailed statistics on hospital capacity, testing rates, vaccination coverage, and funding allocations.

Winner: Grok-3 systematically analyzes how different types of healthcare systems (single-payer, multi-payer, private-heavy, and developing) responded to pandemics. With data-driven insights, the AI’s structured approach makes it easy to see how different models handled crises.

Overall winner: Perplexity

In this experiment, Perplexity emerges as the overall winner, taking three of the five rounds to Grok-3’s two. Its strengths outshone the competition in key areas such as depth of research, clarity of organization, breadth of analysis, and strong data integration. Across the five prompts, Perplexity demonstrated a highly structured approach, balancing statistical depth with clear comparative insights. It effectively used credible sources and quantitative data, ensuring that its responses were not only informative but also well-supported.

Unlike Grok, which was strong in synthesis but sometimes leaned into broader narratives, Perplexity maintained a precise, research-backed approach, making it more reliable for in-depth, factual analysis. Compared to Gemini, which sometimes read as too academic or even veered off topic, Perplexity stayed focused on the prompt’s intent, ensuring that each response directly addressed the key components of the question. Its ability to contrast global strategies, evaluate policy effectiveness, and integrate real-world outcomes made it the most thorough and balanced chatbot, giving it the edge as the best performer overall.

As chatbots advance and gain new features, we will keep testing them against the competition with prompts designed to probe their unique abilities.
