I cloned my voice with ElevenLabs AI — and the results are so accurate it's scary

Voice cloning takes just a few minutes

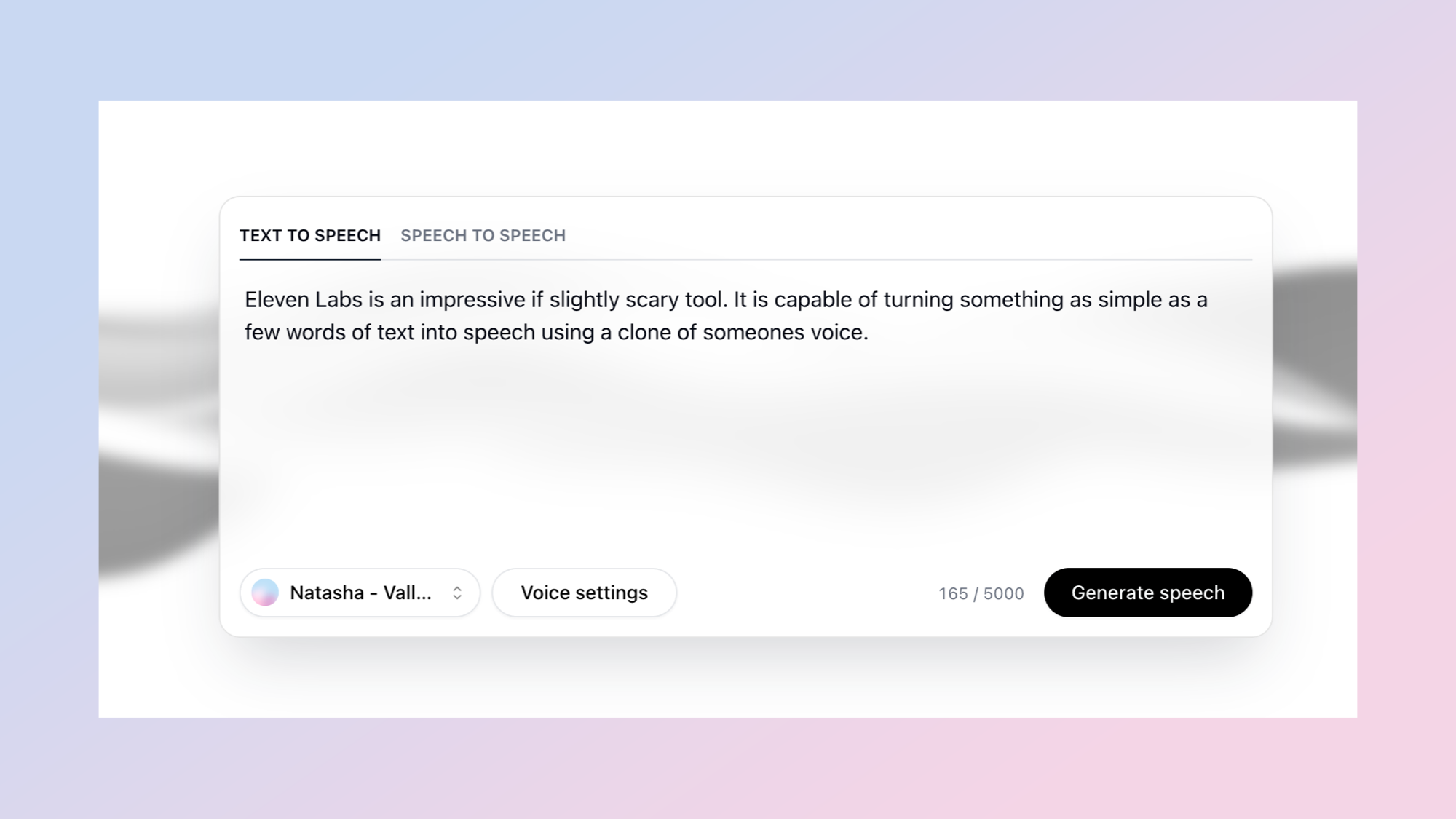

ElevenLabs has rolled out a new-look speech synthesis page, making it simpler than ever to create AI voices and use them for text-to-speech.

The artificial intelligence voice platform has the most natural-sounding synthetic voices and voice clones of any of the services currently available.

OpenAI’s new Voice Engine, which is not yet available to the public, might give it a run for its money, but OpenAI is delaying its launch due to safety concerns.

The new design of the ElevenLabs speech synthesis page has simplified the creation process, opening with just a text box. Other controls appear only once you start interacting with the tool, which makes it easier to get started.

To get an idea of just how well it works, I created a few short clips using the built-in synthetic voices, as well as a speech-to-speech test using my own cloned voice. The clone of my voice sounds so much like me that it was disturbing.

How do you clone a voice with ElevenLabs?

Cloning a voice in ElevenLabs is both fast and simple. There are two ways to do it. The fastest is Instant Voice Cloning, but you can also create a professional clone, which takes more time but produces a more realistic replica.

Professional cloning requires the best possible equipment, and it can take up to six hours to receive the final clone. You have to verify your voice, double-check all of your equipment and provide up to three hours of sample audio split into labelled 30-minute segments.

However, you can get a remarkably accurate clone of your voice using Instant Voice Cloning, which requires about three minutes of sample audio and is available in about 20 minutes.

ElevenLabs says that for instant cloning you only need up to five minutes of audio, as anything above that brings little improvement. It is also important to use audio recorded in an environment with minimal background noise and with the best equipment possible.
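
If you want to sanity-check a recording before uploading it, a minimal Python sketch along these lines would do the job. It assumes the sample is an uncompressed WAV file. The function names and thresholds are illustrative, drawn from the three-minute and five-minute figures above, and are not part of any ElevenLabs tool.

```python
import wave

# Thresholds taken from the figures quoted in this article. Treat them as
# rough guidance, not as limits enforced by ElevenLabs.
TARGET_SECONDS = 3 * 60   # "about three minutes" of sample audio
CEILING_SECONDS = 5 * 60  # beyond five minutes brings little improvement


def wav_duration_seconds(path):
    """Return the length of an uncompressed WAV file in seconds."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def describe_sample(duration_seconds):
    """Classify a sample length against the rough guidance above."""
    if duration_seconds < TARGET_SECONDS:
        return "short"
    if duration_seconds <= CEILING_SECONDS:
        return "ok"
    return "long"
```

Calling `describe_sample(wav_duration_seconds("my_voice.wav"))` on a hypothetical recording would tell you whether it is worth recording more or trimming the clip before you upload it.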

As this could be used on any voice you have enough samples of, ElevenLabs makes you confirm you have the rights to use that voice for cloning purposes. From what I could see, though, it doesn't actually check that this is really the case.

Cloning my own voice with ElevenLabs

I have tried a couple of different approaches to cloning my voice. In one, I recorded myself reading a book in three one-minute segments, but the clone sounded stilted and unnatural. I then tried just speaking about anything that came into my head for three minutes, and that sounded more natural.

It is also important to use accurate labels and a good description as that feeds into the final clone. This includes your accent, birthplace, gender and approximate age.

This was a worrying moment as any piece of this information could be used by bad actors to steal your identity — and placing it alongside a clone of your own voice is an even bigger risk. I suggest keeping it vague, to the decade or county. This is also one of the reasons OpenAI opted to delay the release of Voice Engine.

I played my wife a sample audio clip made using my voice clone. While she could tell it wasn’t really me when it was text-to-speech (saying it sounded like I was reading from a script), she couldn’t tell the real version from the fake when I used speech-to-speech.

Speech-to-speech is the ElevenLabs voice wrapper tool. Essentially, it takes any input audio, either recorded in the newly designed box or from an uploaded clip, and recreates it using the selected synthetic or cloned voice.

What can voice cloning be used for?

Our Speech Synthesis page has a sleek new look. Listen to @ammaar walk through the updates below. To check it out yourself, head to: https://t.co/hWd9lrQsYJ Up next, we'll be making improvements to better support long form speech synthesis for our power users. pic.twitter.com/YZXFAALaRm April 4, 2024

I was taken by surprise at how closely the clone of my voice matched my real voice, especially when using it with speech-to-speech.

It wasn’t as accurate when I tried it using a short audio clip recorded by my manager as he has a different accent to mine, but if someone with a similar accent used my voice clone they would sound almost exactly like me.

This technology could be used to record entire radio dramas with just one actor, even bringing long-dead performers back to life. This is something SAG-AFTRA was worried about and was part of the strike action last year.

One positive use case I did think of during my experiment is improving audio quality. I recorded myself saying a sentence, then used speech-to-speech with my own voice clone on that same sentence — and it sounded exactly like me but as if I recorded in a studio.

What protections are in place?

In future, text-to-speech will improve to the point where it loses its uncanny valley issues and sounds as natural as someone speaking for real, and then it becomes a worry. Even now, with speech-to-speech, the risk of identity fraud is high.

There are certain restrictions in the ElevenLabs system, including a classifier that detects whether a clip was AI-generated and a guardrail tool to prevent the cloning of the voices of elected officials or candidates.

OpenAI said it was going to first discuss "responsible deployment of synthetic voices" and the impact they could have on society before launching Voice Engine.

“Based on these conversations and the results of these small-scale tests, we will make a more informed decision about whether and how to deploy this technology at scale,” the company wrote in a blog post.

It should be noted that there are dozens of open source text-to-speech projects that come close to ElevenLabs in cloning accuracy, so it may already be too late to put that particular toy back in the box.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?