Google unveils Gemini 2.5 — claims AI breakthrough with enhanced reasoning and multimodal power

Currently available to Gemini Advanced subscribers

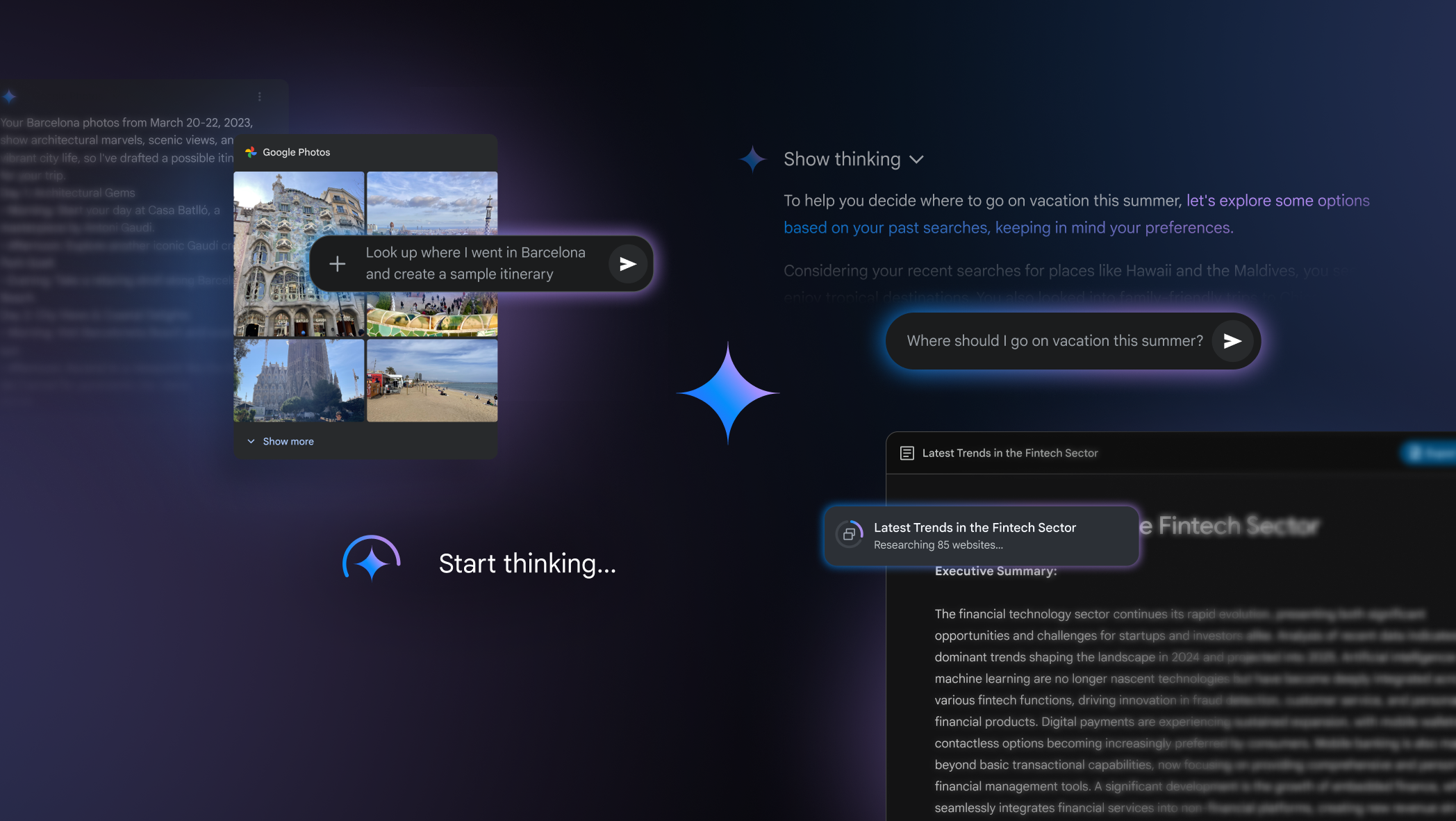

Google has unveiled Gemini 2.5, the tech giant's most advanced AI model to date. With enhanced reasoning, stronger coding skills and multimodal capabilities, the new model is said to analyze complex information, take contextual nuance into account and draw logical conclusions with unprecedented accuracy.

According to Google's official blog, the model's latest improvements are achieved by combining a significantly enhanced base model with improved post-training techniques.

Gemini 2.5 reportedly leads in math and science benchmarks. Google says it scores 18.8% on Humanity's Last Exam, a dataset designed to assess AI's ability to handle complex knowledge-based questions, which is the top score among models that don't use tools. For comparison, OpenAI's deep research agent, which can browse the web and use tools, scores about 26% on the same test.

Superior coding performance

Google also says Gemini 2.5 is highly proficient at coding.

This is good news for average users and non-developers: because the model excels at creating visually compelling web apps and agentic code applications, as well as at transforming and editing code, users don't need advanced coding skills themselves.

Its benchmark results back this up. On SWE-bench Verified, Gemini 2.5 Pro scores 63.8% with a custom agent setup. SWE-bench Verified is a human-validated subset of SWE-bench that more reliably evaluates AI models' ability to solve real-world software issues, and it is the industry standard for agentic coding evaluations.

As of January 2025, no model had yet crossed 50% completion on SWE-bench Verified, though the updated Claude 3.5 Sonnet was at 49%.

Multimodal and extended context understanding

Gemini 2.5 is designed to comprehend vast amounts of data and handle complex problems across various information sources, including text, audio, images, video, and even code repositories.

The model features native multimodality and supports a context window of up to 1 million tokens, with Google planning to extend this to 2 million tokens in the near future, though an exact timeline has not been disclosed.

Tokens and context windows

Tokens and context windows are two concepts that are essential to understanding how AI processes and generates language.

So, what is a token? A token is the smallest unit of data that an AI model processes. Depending on the model's design, a token can be as simple as an individual word or a single character. It could also be part of a word or a punctuation mark.

For example, the sentence "The cat jumped over the fence and disappeared quickly." might be split into 12 tokens, though the exact count depends on the model's tokenizer. Breaking text down this way allows the AI to analyze and generate it effectively.
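To make this concrete, here is a minimal Python sketch of two common approaches to tokenization. It is a toy for illustration, not Gemini's actual tokenizer: `word_tokenize` splits text into words and punctuation marks, while `subword_tokenize` greedily breaks a single word into the longest pieces it can find in a small, made-up vocabulary (`toy_vocab` and both function names are hypothetical). The subword function works in a similar spirit to how real tokenizers handle longer or rarer words. Note that the word-level function splits the example sentence into 10 tokens rather than 12, a reminder that the count depends on the tokenizer.

```python
import re

def word_tokenize(text: str) -> list[str]:
    """Toy tokenizer: splits text into words and individual punctuation marks."""
    # \w+ matches runs of letters/digits; [^\w\s] matches one punctuation character
    return re.findall(r"\w+|[^\w\s]", text)

def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match split of one word using a hypothetical vocabulary."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, shrinking until a match is found
        for end in range(len(word), start, -1):
            piece = word[start:end]
            # Fall back to a single character if no longer piece is in the vocabulary
            if piece in vocab or end - start == 1:
                pieces.append(piece)
                start = end
                break
    return pieces

sentence = "The cat jumped over the fence and disappeared quickly."
tokens = word_tokenize(sentence)
print(tokens)
print(len(tokens))  # 10

toy_vocab = {"dis", "appear", "ed", "quick", "ly"}  # hypothetical, hand-picked vocabulary
print(subword_tokenize("disappeared", toy_vocab))  # ['dis', 'appear', 'ed']
```

Real tokenizers learn their vocabularies from large amounts of text, so the same sentence can produce a different number of tokens from one model to the next.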

Implications of a 2 million token context window

A context window refers to the amount of information an AI model can process at one time. You can think of it as the model's short-term memory, encompassing the sequence of tokens the AI considers when generating a response. The size of the context window determines how much prior information the model can utilize to produce coherent and contextually relevant outputs.

Take the earlier sentence, "The cat jumped over the fence and disappeared quickly." If an AI model had a context window of only 5 tokens, it would be able to process just the last part of that input.

So if you were to ask the model, "Who jumped over the fence and disappeared quickly?", it might not correctly identify "the cat" as the subject, because it has no access to the beginning of the sentence.
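The truncation described above can be sketched in a few lines of Python. This assumes the simplest possible policy, keeping only the most recent tokens and discarding the oldest, and uses a toy word-level tokenizer. Both are illustrative assumptions (as are the names `tokenize` and `fit_to_context`); real systems may handle over-long input differently, for example by rejecting the request.

```python
import re

def tokenize(text: str) -> list[str]:
    # Toy word-and-punctuation tokenizer (an assumption; real models use subword tokenizers)
    return re.findall(r"\w+|[^\w\s]", text)

def fit_to_context(tokens: list[str], window: int) -> list[str]:
    """Keep only the most recent `window` tokens, dropping the oldest ones."""
    if window <= 0:
        return []  # guard: tokens[-0:] would otherwise return the whole list
    return tokens[-window:]

sentence = "The cat jumped over the fence and disappeared quickly."
visible = fit_to_context(tokenize(sentence), window=5)
print(visible)  # ['fence', 'and', 'disappeared', 'quickly', '.']
print("cat" in visible)  # False: the subject has fallen out of the window
```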

If Google does increase Gemini 2.5's context window to 2 million tokens, that expanded capacity would let the model consider and retain a vast amount of information when generating responses.

Essentially, the larger the context window, the better the model can handle long prompts, resulting in outputs that are more consistent, relevant, and useful.

For comparison, the combined word count of the "Lord of the Rings" trilogy is around 500,000 words. Depending on the tokenizer, that comes to roughly 1 million tokens or fewer, so you could provide the entire trilogy as context to Gemini 2.5 Pro within its current window.
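The trilogy comparison is a simple back-of-the-envelope calculation. The sketch below assumes a few tokens-per-word ratios (1.3, 1.5 and 2.0 are illustrative assumptions, since the real ratio depends on the tokenizer and the text) and checks each estimate against the current 1 million token window. The helper `estimate_tokens` is hypothetical.

```python
def estimate_tokens(word_count: int, tokens_per_word: float) -> int:
    """Rough token estimate; tokens_per_word is an assumed ratio, not a Gemini figure."""
    return round(word_count * tokens_per_word)

TRILOGY_WORDS = 500_000     # approximate word count cited above
CONTEXT_WINDOW = 1_000_000  # Gemini 2.5 Pro's current context window

# Try a range of assumed ratios to see how sensitive the estimate is
for ratio in (1.3, 1.5, 2.0):
    tokens = estimate_tokens(TRILOGY_WORDS, ratio)
    print(f"{ratio} tokens/word -> {tokens:,} tokens, fits: {tokens <= CONTEXT_WINDOW}")
```

Only at the most pessimistic ratio does the estimate reach the full 1 million tokens.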

Availability and future outlook

The Gemini 2.5 Pro Experimental model is now available in Google AI Studio and, for Gemini Advanced subscribers paying $20 per month, in the Gemini app. Google says it will also introduce pricing in the coming weeks, enabling higher rate limits for production-scale applications.

More from Tom's Guide

- I replaced Alexa with ChatGPT on my Amazon Echo — here's how you can do it too

- OpenAI just unveiled new ChatGPT image generator powered by Sora — here's what you can do now

- I just tested Grok vs. DeepSeek with 7 prompts — here's the winner