I tested Gemini vs Claude with 7 prompts to find the best AI chatbot — here's the winner

Battle for the best chatbot

In a very short time, artificial intelligence has gone from something on the fringes of the technology sector to something used every day by millions. Most of that use is through chatbots like Claude, Gemini and ChatGPT.

OpenAI’s ChatGPT gets most of the attention, but Google’s Gemini and Anthropic’s Claude offer equally impressive capabilities and performance. Both also have unique features not found in ChatGPT, such as Claude’s Artifacts and Gemini’s Gems.

Over the holidays, I ran a series of tests between ChatGPT and Gemini, then ChatGPT and Claude. ChatGPT won each time. I've now decided to compare Gemini and Claude head-to-head.

I created seven prompts for this experiment, following the same structure I used in the ChatGPT vs. Gemini and ChatGPT vs. Claude tests, then used OpenAI’s o1 model to brainstorm new ideas.

Creating the prompts

Categories include image generation, image analysis, coding and creative writing. This allows us to test the full variety of model capabilities for most use cases.

As Claude doesn’t have any image generation capabilities of its own, I’ve set it to generate a prompt for another AI image model rather than directly create the image. I’ve also not used specific features such as Claude’s Projects or Gemini Gems, focusing instead on the model.

For this comparison, I’m testing the most recent models, Claude 3.5 Sonnet and Google Gemini 2.0 Experimental Advanced.


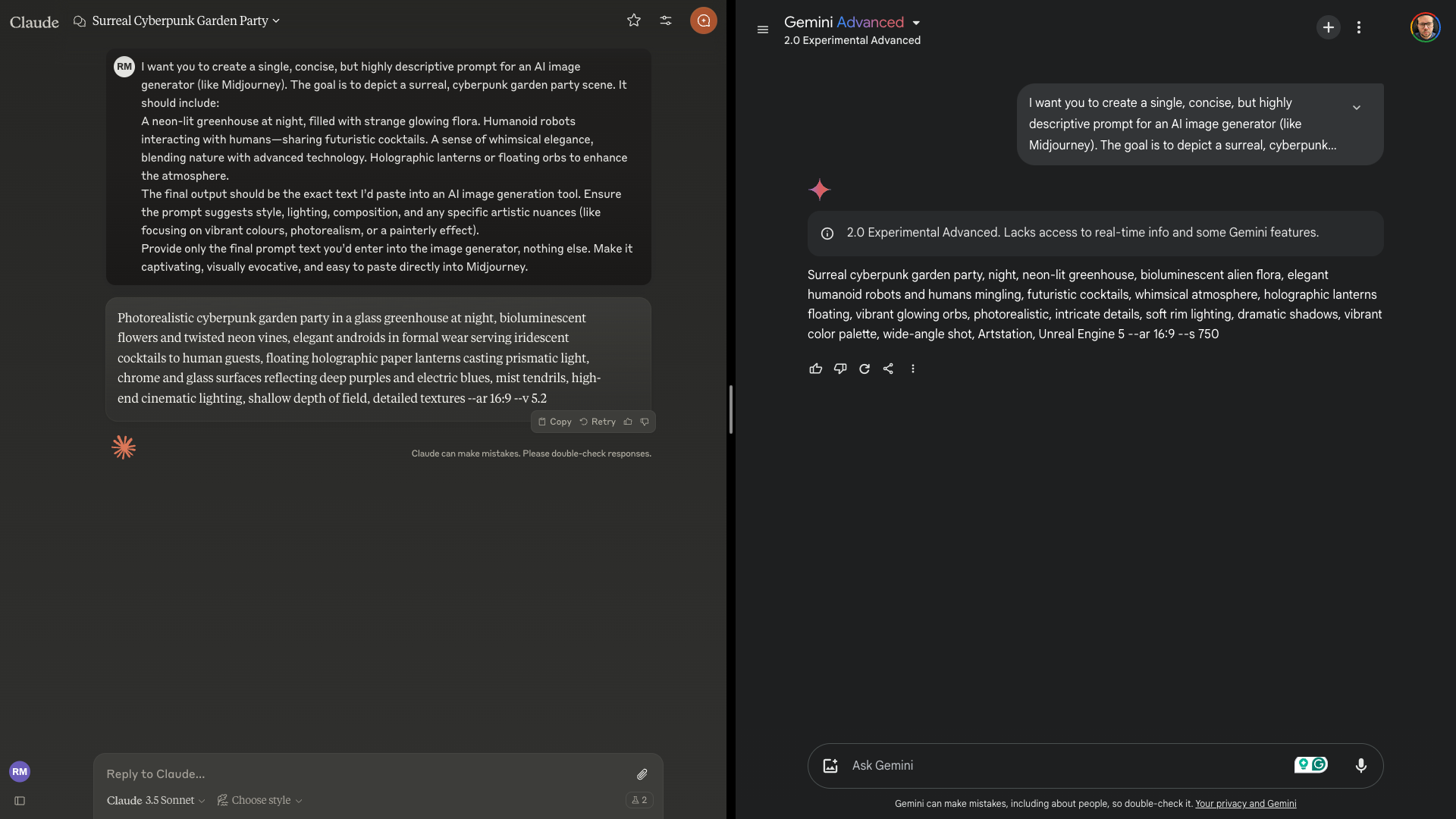

1. Image Generation

First, we will see how well Claude and Gemini craft an AI image prompt. Gemini has access to the Imagen 3 image generation model, but as Claude doesn't have its own model, I've asked both to write a prompt instead of generating the image directly.

The idea is to have it craft a detailed prompt, specifying what it needs to include to create something close to what is in my mind. I've used Freepik's Mystic 2.5 to generate the images.

The prompt: “I want you to create a single, concise, but highly descriptive prompt for an AI image generator. The goal is to depict a surreal, cyberpunk garden party scene. It should include:

A neon-lit greenhouse at night, filled with strange glowing flora.

Humanoid robots interacting with humans—sharing futuristic cocktails.

A sense of whimsical elegance, blending nature with advanced technology.

Holographic lanterns or floating orbs enhance the atmosphere.

The final output should be the exact text I’d paste into an AI image generation tool. Ensure the prompt suggests style, lighting, composition, and any specific artistic nuances (like focusing on vibrant colors, photorealism, or a painterly effect).

Provide only the final prompt text you’d enter into the image generator, nothing else. Make it captivating, visually evocative, and easy to paste.”

Both images look good and match the prompt, but this wasn't a test of the AI image model. It was to see how well Claude and Gemini interpreted my instructions and generated a text prompt.

Claude: "Photorealistic cyberpunk garden party in a glass greenhouse at night, bioluminescent flowers and twisted neon vines, elegant androids in formal wear serving iridescent cocktails to human guests, floating holographic paper lanterns casting prismatic light, chrome and glass surfaces reflecting deep purples and electric blues, mist tendrils, high-end cinematic lighting, shallow depth of field, detailed textures."

Gemini: "Surreal cyberpunk garden party, night, neon-lit greenhouse, bioluminescent alien flora, elegant humanoid robots and humans mingling, futuristic cocktails, whimsical atmosphere, holographic lanterns floating, vibrant, glowing orbs, photorealistic, intricate details, soft rim lighting, dramatic shadows, vibrant color palette, wide-angle shot."

I'm looking for a richly detailed prompt that brings the elements together effectively and captures the futuristic style. Claude wins this for me as its prompt is more vivid and refined, and it matches the initial prompt.

- Winner: Claude for a more vivid and descriptive prompt

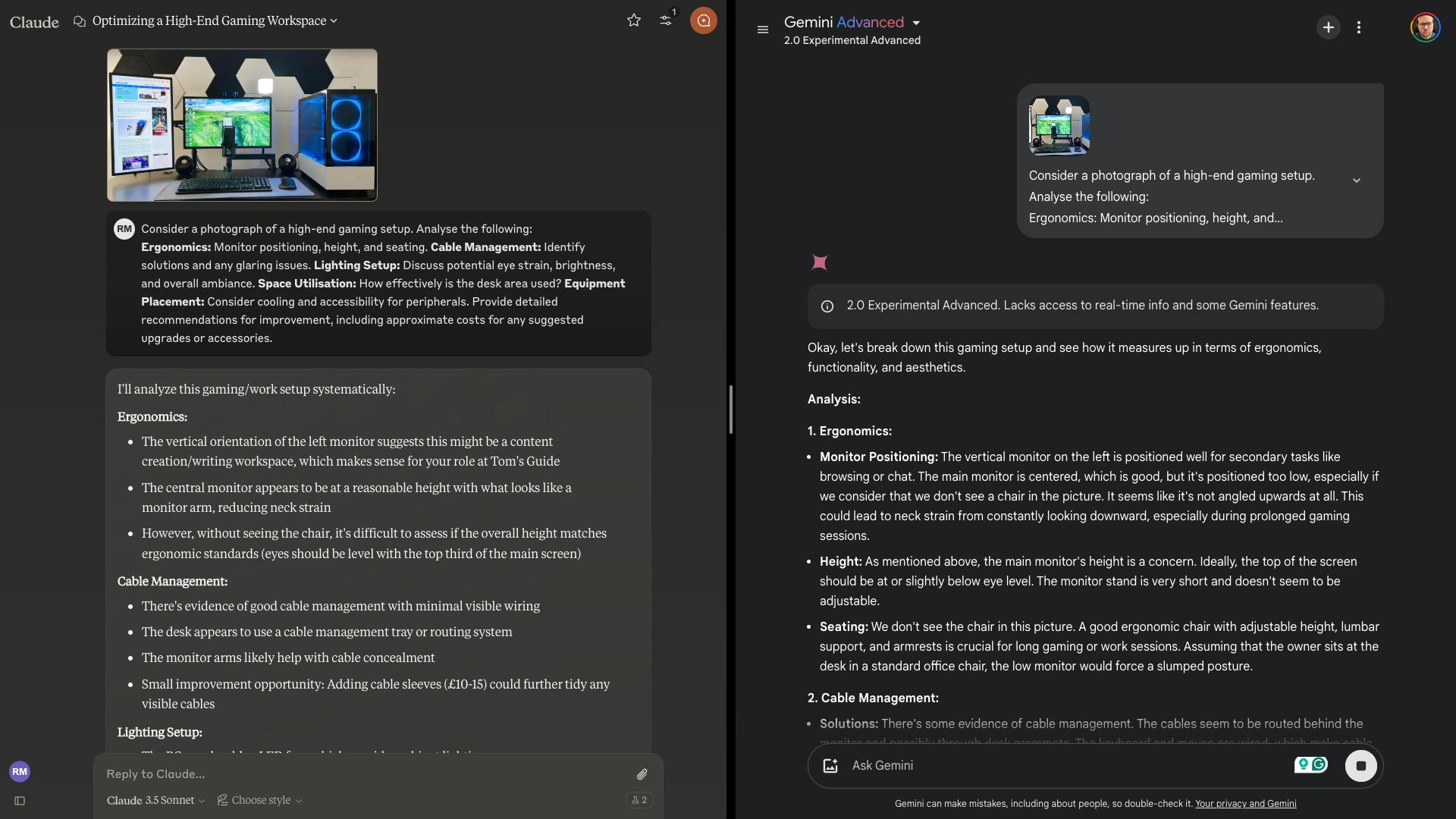

2. Image Analysis

Next, we're testing the AI vision capabilities of both models. Here, I gave both an image of a "perfect desk setup" from a story by Tony Polanco, then asked them to outline ergonomics, cable management, lighting and more.

The prompt: “Consider a photograph of a high-end gaming setup. Analyze the following:

Ergonomics: Monitor positioning, height, and seating.

Cable Management: Identify solutions and any glaring issues.

Lighting Setup: Discuss potential eye strain, brightness, and overall ambiance.

Space Utilisation: How effectively is the desk area used?

Equipment Placement: Consider cooling and accessibility for peripherals.

Provide detailed recommendations for improvement, including approximate costs for any suggested upgrades or accessories.”

You can read the full response in a Google Doc. Claude did a good job addressing each category with specific observations. It was realistic and practical in its suggestions and provided a range of costs.

Gemini gave a detailed breakdown, including insights into economics. It was less structured but gave a deeper focus on ergonomics. It is tough to pick between them, but I'm going with Claude as its response was easier to follow, so it better matches my requirements.

- Winner: Claude for better clarity

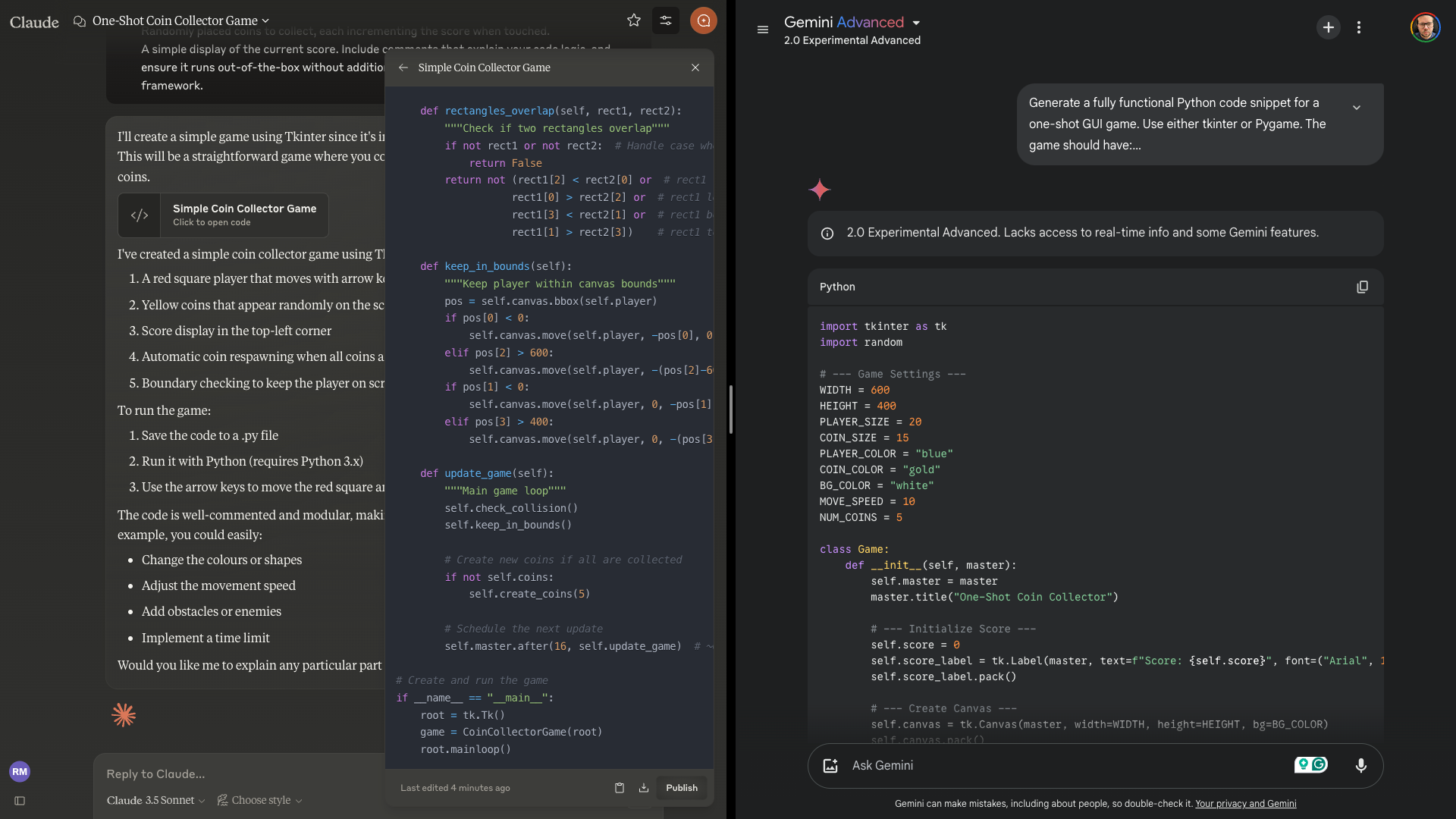

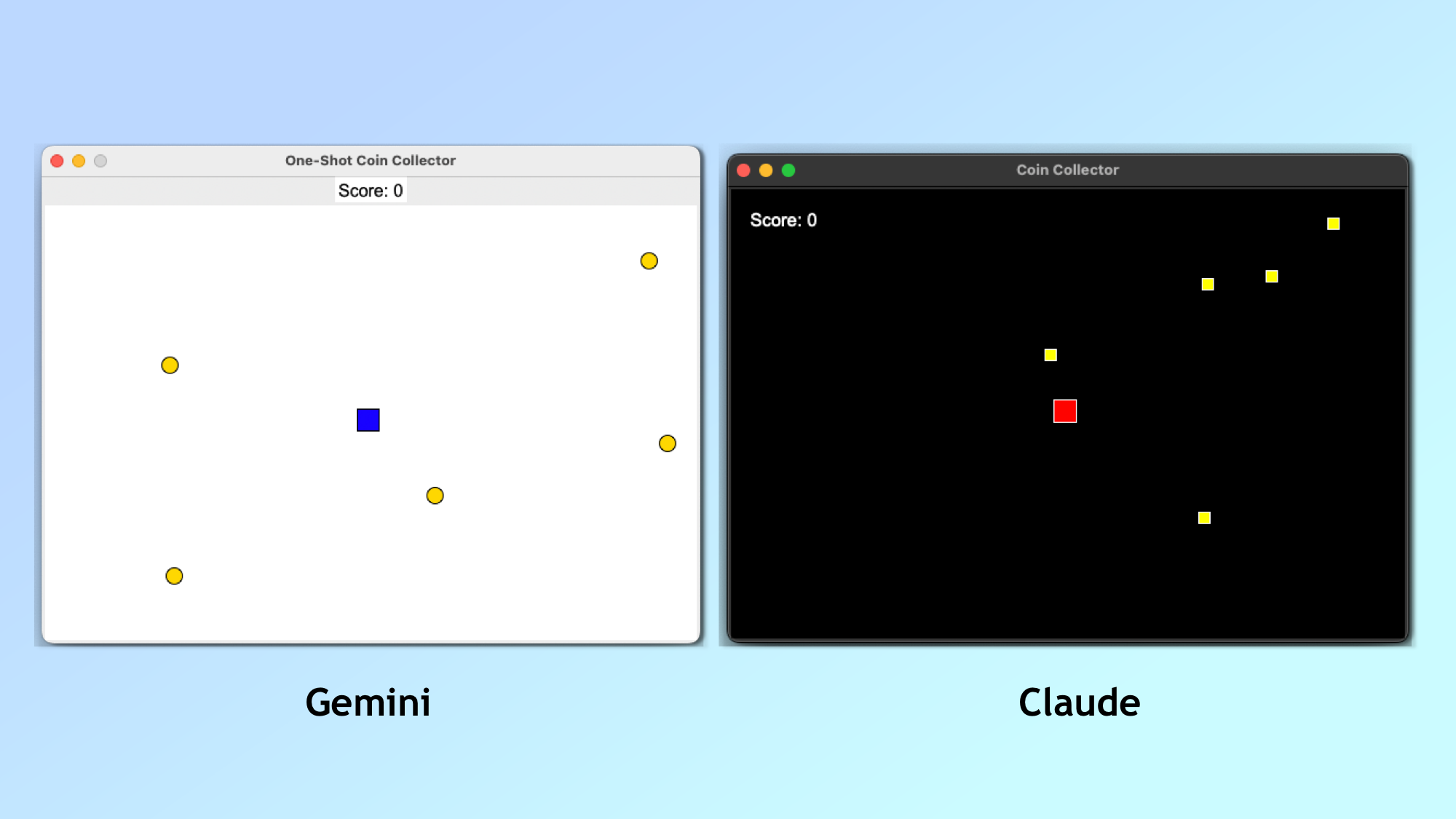

3. Coding

I always include a coding prompt when I run these tests. I picked Python because it is easier to run. The idea was a single-shot test, where everything must work without any changes to the code. I usually prefer a game because it's easier to spot differences.

Prompt: “Generate a fully functional Python code snippet for a one-shot GUI game. Use either tkinter or Pygame. The game should have:

A single screen where the player controls a character (or shape) using arrow keys.

Randomly placed coins to collect, each incrementing the score when touched.

A simple display of the current score.

Include comments that explain your code logic, and ensure it runs out-of-the-box without additional libraries beyond the chosen GUI framework.”

Both created a functional game where I could track the score, catch coins and win the game, but there were some notable differences. Claude's had a better UI that also worked when my laptop was in dark mode; I couldn't say the same for Gemini's.

However, the game generated by Gemini was more fun as it was endless: every time you catch a coin, a new one is randomly generated. That said, I'm giving it to Claude because it worked out of the box without me changing back to light mode. That is something Claude failed to do in the test against ChatGPT.

- Winner: Claude for a complete game out of the box
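For readers curious what this prompt is asking for, here is a minimal sketch of a coin-collecting game in tkinter. It is my own illustration, not the code either chatbot produced, and every name in it (`move_player`, `random_coin`, `touches`, `run_game`, the size constants) is hypothetical. It uses the endless approach described above, where a new coin appears each time one is caught, and it sets its colours explicitly instead of relying on theme defaults, which is only an assumption about what might help in dark mode.

```python
import random

WIDTH, HEIGHT = 400, 300   # play-field size in pixels (arbitrary choice)
PLAYER_SIZE = 20           # side of the square the player controls
COIN_SIZE = 12             # side of the coin's bounding box
STEP = 10                  # pixels moved per key press

# Map tkinter key names to (dx, dy) movement.
MOVES = {"Left": (-STEP, 0), "Right": (STEP, 0), "Up": (0, -STEP), "Down": (0, STEP)}


def move_player(x, y, key):
    """Return the new top-left corner, clamped so the player stays on screen."""
    dx, dy = MOVES.get(key, (0, 0))
    new_x = min(max(x + dx, 0), WIDTH - PLAYER_SIZE)
    new_y = min(max(y + dy, 0), HEIGHT - PLAYER_SIZE)
    return new_x, new_y


def random_coin(rng=random):
    """Pick a random top-left corner for a coin that fits inside the field."""
    return rng.randint(0, WIDTH - COIN_SIZE), rng.randint(0, HEIGHT - COIN_SIZE)


def touches(px, py, cx, cy):
    """Axis-aligned bounding-box overlap test between player and coin."""
    return (px < cx + COIN_SIZE and cx < px + PLAYER_SIZE
            and py < cy + COIN_SIZE and cy < py + PLAYER_SIZE)


def run_game():
    import tkinter as tk  # imported here so the logic above works without a display

    root = tk.Tk()
    root.title("Coin collector")
    # Explicit colours rather than theme defaults (an assumption, see above).
    canvas = tk.Canvas(root, width=WIDTH, height=HEIGHT, bg="black", highlightthickness=0)
    canvas.pack()

    state = {"x": WIDTH // 2, "y": HEIGHT // 2, "score": 0, "coin": random_coin()}
    player = canvas.create_rectangle(0, 0, PLAYER_SIZE, PLAYER_SIZE, fill="cyan")
    coin = canvas.create_oval(0, 0, COIN_SIZE, COIN_SIZE, fill="gold")
    label = canvas.create_text(10, 10, anchor="nw", fill="white", text="Score: 0")

    def redraw():
        x, y = state["x"], state["y"]
        cx, cy = state["coin"]
        canvas.coords(player, x, y, x + PLAYER_SIZE, y + PLAYER_SIZE)
        canvas.coords(coin, cx, cy, cx + COIN_SIZE, cy + COIN_SIZE)
        canvas.itemconfigure(label, text=f"Score: {state['score']}")

    def on_key(event):
        # Keys outside MOVES leave the player where it is.
        state["x"], state["y"] = move_player(state["x"], state["y"], event.keysym)
        if touches(state["x"], state["y"], *state["coin"]):
            state["score"] += 1
            state["coin"] = random_coin()  # endless mode: respawn immediately
        redraw()

    root.bind("<Key>", on_key)
    redraw()
    root.mainloop()
```

Calling `run_game()` opens the window. Keeping the movement and collision rules in plain functions, separate from the tkinter wiring, is a design choice of this sketch that makes the rules easy to check without a display.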

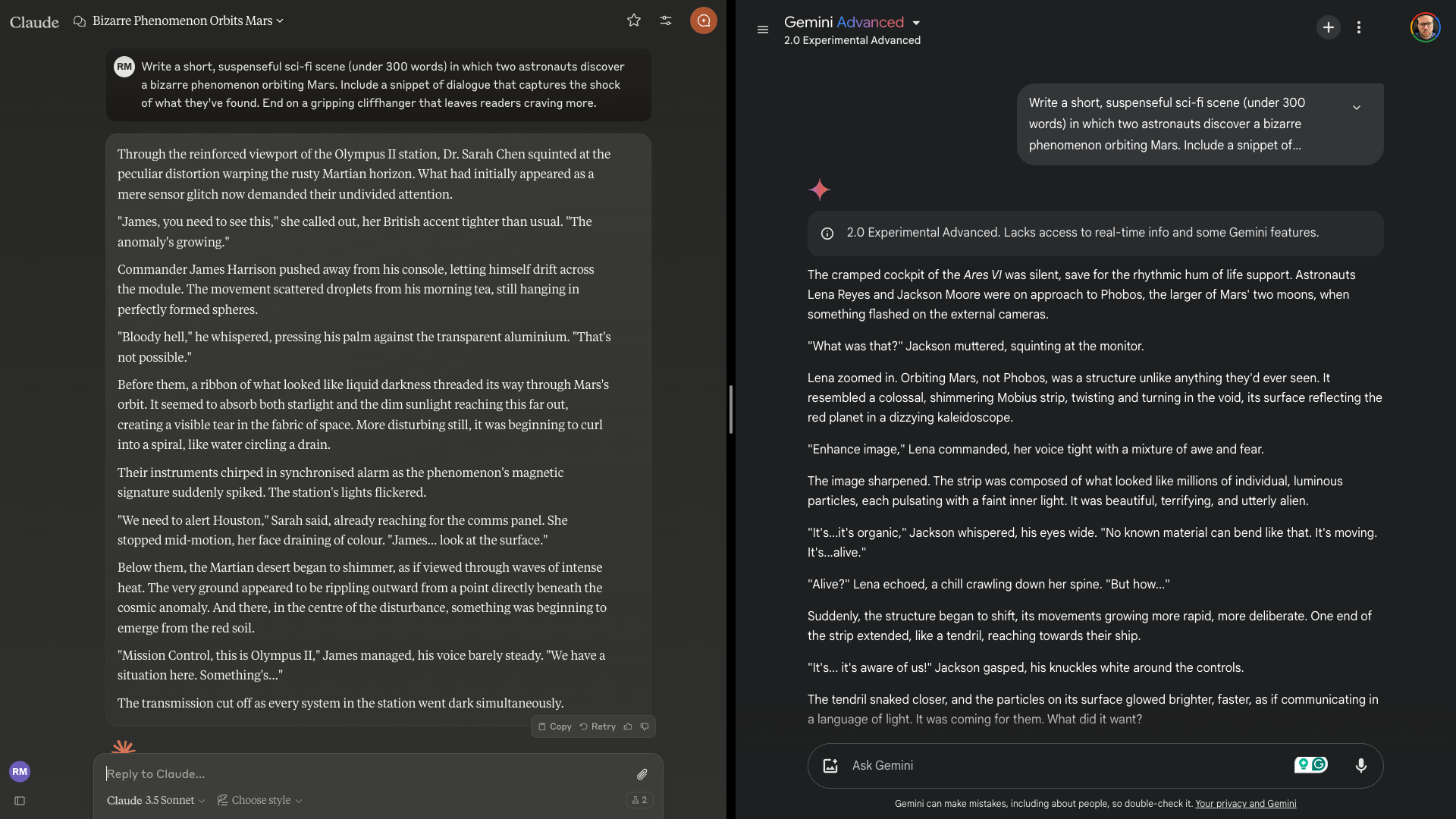

4. Creative Writing

Creative writing is something AI models have been doing for a long time, but recently, writing has become more engaging and fun.

Here, I'm asking for the models to generate a sci-fi scene set on Mars with some dialogue and a cliffhanger. Dialogue and the cliffhanger are the areas I'm looking at most closely when judging the output.

Prompt: “Write a short, suspenseful sci-fi scene (under 300 words) in which two astronauts discover a bizarre phenomenon orbiting Mars. Include a snippet of dialogue that captures the shock of what they’ve found. End on a gripping cliffhanger that leaves readers craving more.”

Claude had a good cliffhanger that builds suspense with an ominous ending. The dialogue was natural but could have been pushed further. Gemini had a weak cliffhanger that fell short but had particularly compelling dialogue that was more human. You can read both scenes in a Google Doc.

I've given it to Claude because its cliffhanger was better, and it balanced action, description and dialogue well. While Gemini's dialogue was more creative, overall Claude was better.

- Winner: Claude for a better cliffhanger

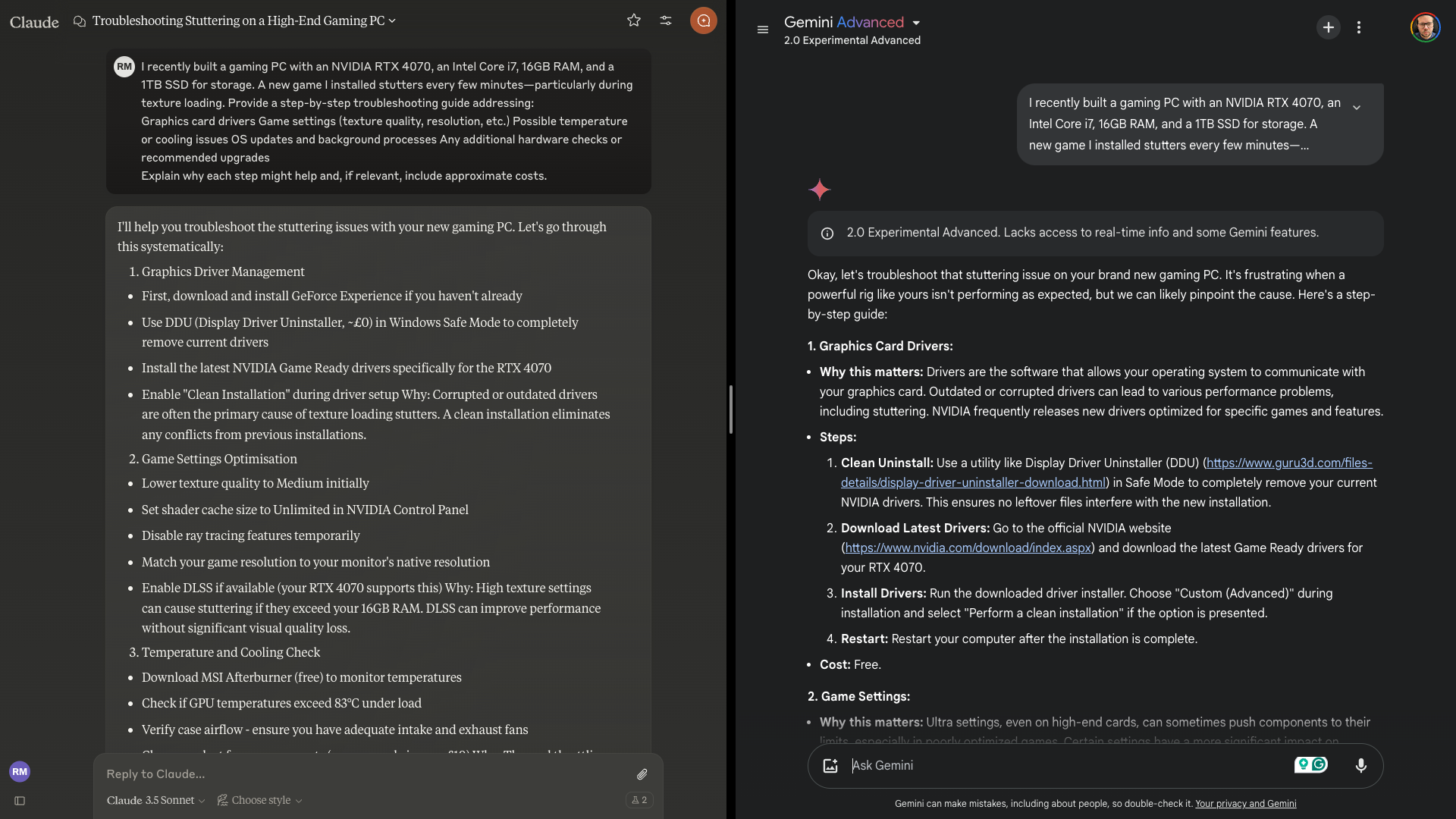

5. Problem Solving

AI models are very good at problem-solving as they can pattern match well. Here, I gave each model a gaming PC spec, said a new game was struggling to run and asked how to fix the problem.

This is something I've personally come up against and one we get asked about at Tom's Guide a lot. So, let's see how Claude and Gemini solve it.

Prompt: “I recently built a gaming PC with an NVIDIA RTX 4070, an Intel Core i7, 16GB RAM, and a 1TB SSD for storage. A new game I installed stutters every few minutes—particularly during texture loading. Provide a step-by-step troubleshooting guide addressing:

Graphics card drivers

Game settings (texture quality, resolution, etc.)

Possible temperature or cooling issues

OS updates and background processes

Any additional hardware checks or recommended upgrades

Explain why each step might help and, if relevant, include approximate costs.”

Claude's response was a bit on the dense side: thorough, but with a lot of technical detail. It also didn't go into much detail on game settings. However, that technical detail might be exactly what someone needs to solve the problem.

Gemini over-explained things but was comprehensive across all categories, including driver installation. It offered actionable and realistic suggestions but missed some advanced checks and didn't go into the same hardware detail as Claude.

This is a close call between the two. I think Claude provided a better-rounded guide, but Gemini gave more setting details, which is why I gave it the win. You can read both responses for yourself in a Google Doc.

- Winner: Gemini for details on game settings

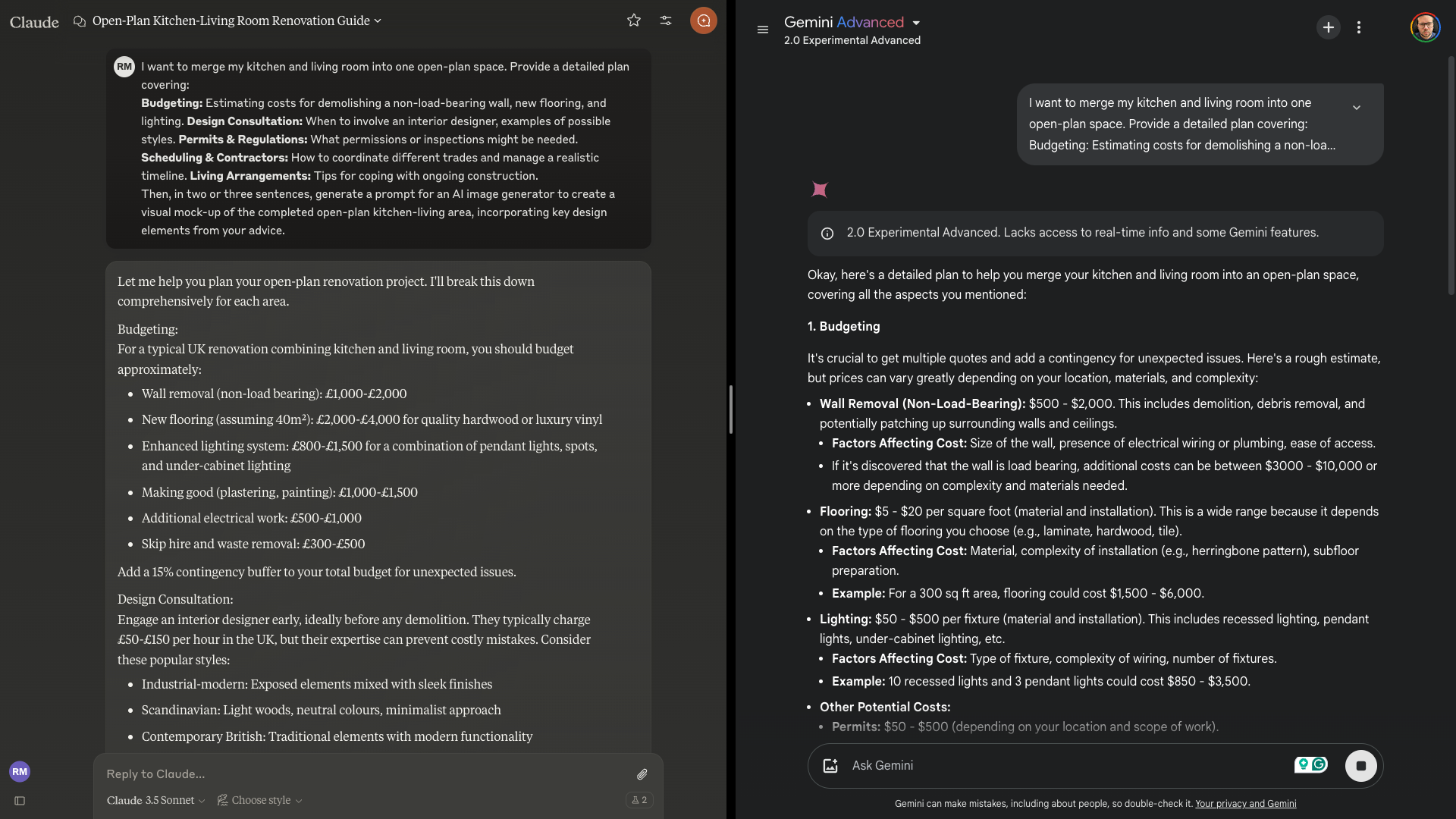

6. Planning

AI models are great at planning. I decided to see how well each could manage if I prompted it to merge my kitchen and living room into an open-plan space — something my parents did a few years ago.

It needed to provide examples of designs and styles, permissions required, coordination tips and estimated costs.

Prompt: “I want to merge my kitchen and living room into one open-plan space. Provide a detailed plan covering:

Budgeting: Estimating costs for demolishing a non-load-bearing wall, new flooring, and lighting.

Design Consultation: When to involve an interior designer, examples of possible styles.

Permits & Regulations: What permissions or inspections might be needed.

Scheduling & Contractors: How to coordinate different trades and manage a realistic timeline.

Living Arrangements: Tips for coping with ongoing construction.

Then, in two or three sentences, generate a prompt for an AI image generator to create a visual mock-up of the completed open-plan kitchen-living area, incorporating key design elements from your advice.”

Claude did well with a neatly structured response, realistic budgeting and a practical setup, even during construction. Gemini gave much more detail in each area, including a diversity of styles, and addressed the need for permits.

I'm giving it to Gemini as it offered a wider range of design styles, better meeting the prompt's request for examples of possible styles. You can see both ideas in a Google Doc.

- Winner: Gemini for more options

7. Education

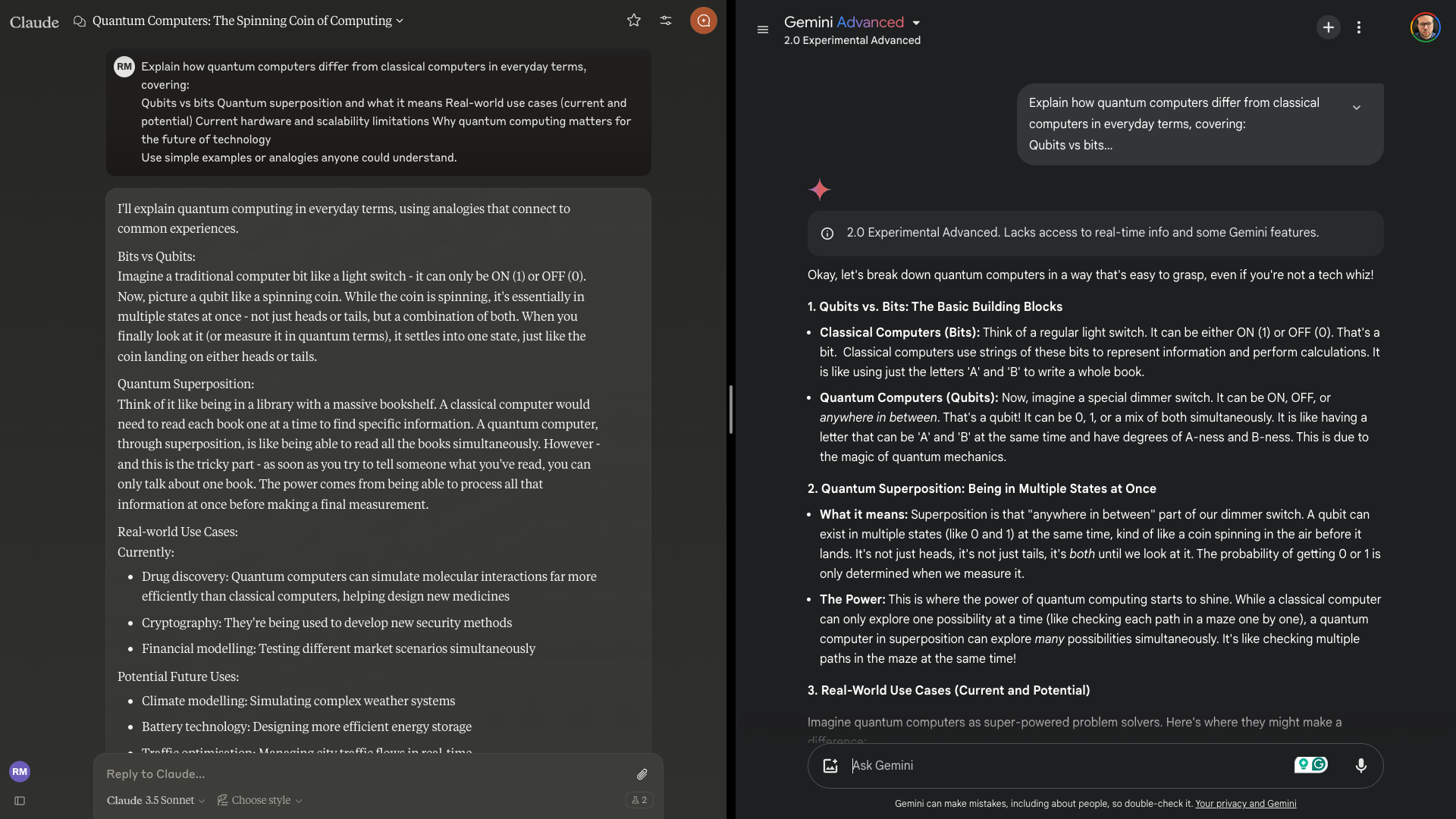

I used to write a newsletter about quantum computing, but I still struggle with much of the topic. So here I've asked both to explain the concepts behind the technology in simple terms, with analogies to make it more relatable.

Prompt: “Explain how quantum computers differ from classical computers in everyday terms, covering:

Qubits vs. bits

Quantum superposition and what it means

Real-world use cases (current and potential)

Current hardware and scalability limitations

Why quantum computing matters for the future of technology

Use simple examples or analogies anyone could understand.”

Both responses are available in a Google Doc. My criteria include clarity and simplicity, accurate and easy-to-understand analogies, thoroughness and how well the response keeps me engaged.

Claude offered clear analogies of superposition as a library and spinning coin to visualize bits and qubits. It also used drug discovery and traffic optimization to put it into context. However, examples were limited, focusing on professional fields, and the impact of AI was missed.

Gemini used a dimmer switch to compare bits to qubits, which is a more accessible analogy. It used the spinning coin for superposition. It also mentioned AI in real-world use cases, as well as logistics. It was a little verbose and got repetitive.

Claude won this one for me because it was more to the point and easier for non-technical readers. Gemini provided richer analogies, but Claude was more relatable to the general audience.

- Winner: Claude for a more relatable response

Gemini vs Claude: The winner

| Category | Gemini | Claude |
|---|---|---|
| Image Generation | | 🏆 |
| Image Analysis | | 🏆 |
| Coding | | 🏆 |
| Creative Writing | | 🏆 |
| Problem Solving | 🏆 | |
| Planning | 🏆 | |
| Education | | 🏆 |
| TOTAL | 2 | 5 |

At first glance, it might appear that Claude was an overwhelming winner in this challenge, but Gemini came very close in multiple tests, so the final score could easily have been much closer. Several of the results came down to personal preference rather than any overwhelming victory on the part of Claude.

It is worth noting that I used Gemini 2.0 Experimental Advanced, which is still in testing and has only been available for a few weeks. I put it up against Claude 3.5 Sonnet, which has been gradually improved over several months.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.