Google’s Gemini Pro 1.5 can now hear as well as see — what it means for you

Available through VertexAI

Google has updated its incredibly powerful Gemini Pro 1.5 artificial intelligence model to give it the ability to hear the contents of an audio or video file for the first time.

The update was announced at Google Next, with the search giant confirming the model can listen to an uploaded clip and provide information without the need for a written transcript.

What this means is you could give it a documentary or video presentation and ask it questions about any moment, both audio and video, within the clip.

This is part of a wider push from Google to create more multimodal models that can understand a variety of input types beyond just text. The move is possible due to the Gemini family of models being trained on audio, video, text and code at the same time.

What is new in Gemini Pro 1.5?

Google launched Gemini Pro 1.5 in February with a 1 million token context window. This, combined with the multimodal training data, means it can process videos.

The tech giant has now added sound to the options for input. This means you can give it a podcast and have it listen through for key moments or specific mentions. It can do the same for audio attached to a video file, while also analysing the video content.

The update also means Gemini can now generate transcripts for video clips regardless of how long they might run and find a specific moment within the audio or video file.

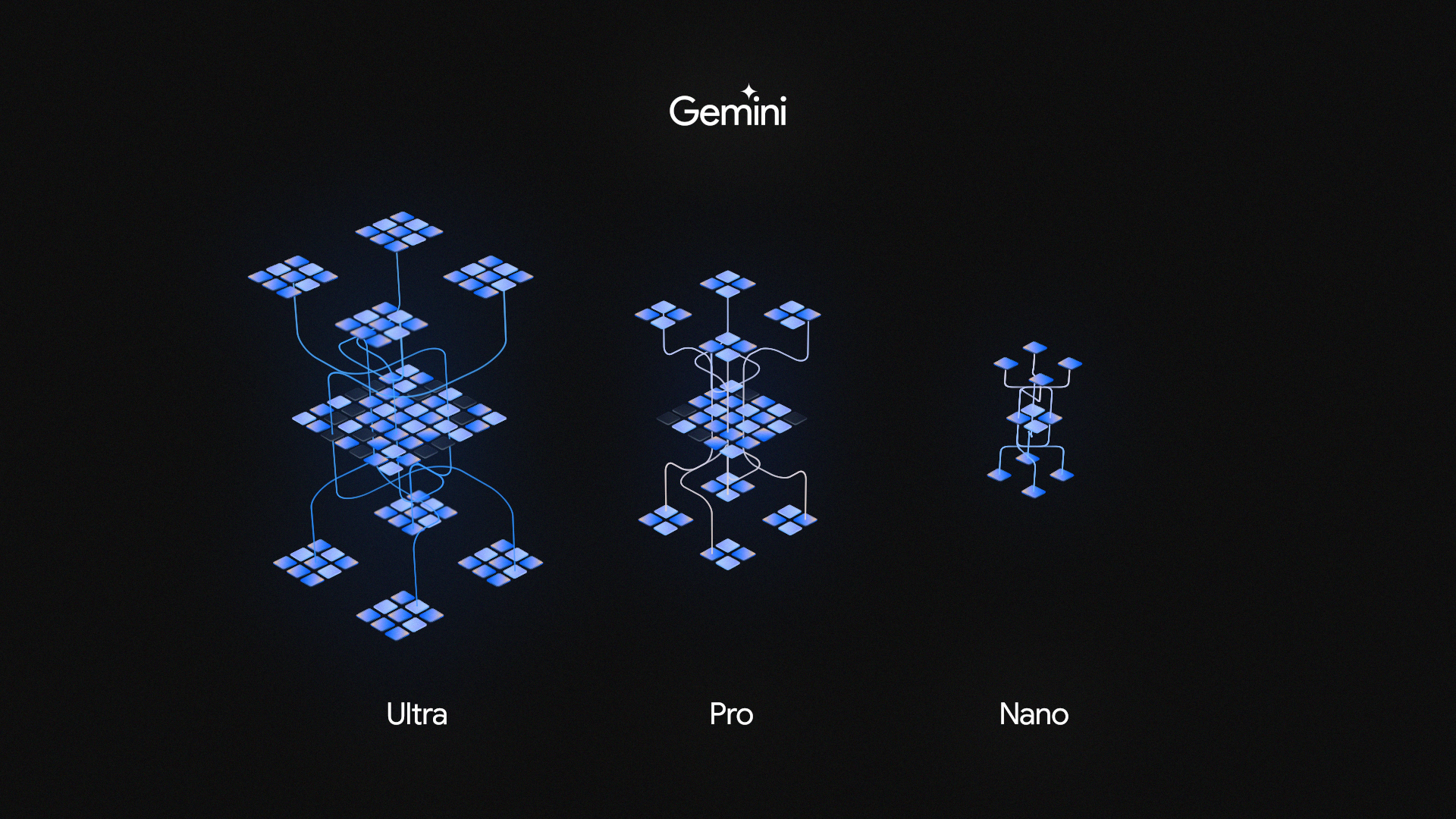

The new update is part of the middle-tier of the Gemini family, which comes in three form factors — the tiny Nano for on-device, Pro powering the free version of the Gemini chatbot and Ultra powering Gemini Advanced.

For some reason Google only released the 1.5 update to Gemini Pro rather than Ultra, meaning its middle-tier model now outperforms the more advanced version. It isn’t clear if there will be a Gemini Ultra 1.5 or when it will be accessible if it launches.

The massive context window, which starts at 250,000 tokens (similar to Claude 3 Opus) and rises to over a million for certain approved users, means you also don’t need to fine-tune a model on specific data. You can load that data in at the start of a chat and just ask questions.

How to access Gemini Pro 1.5?

I imagine at some point Google will update its Gemini chatbot to use the 1.5 models, possibly after the Google I/O developer conference next month. For now it is only available through the Google Cloud developer dashboard VertexAI.

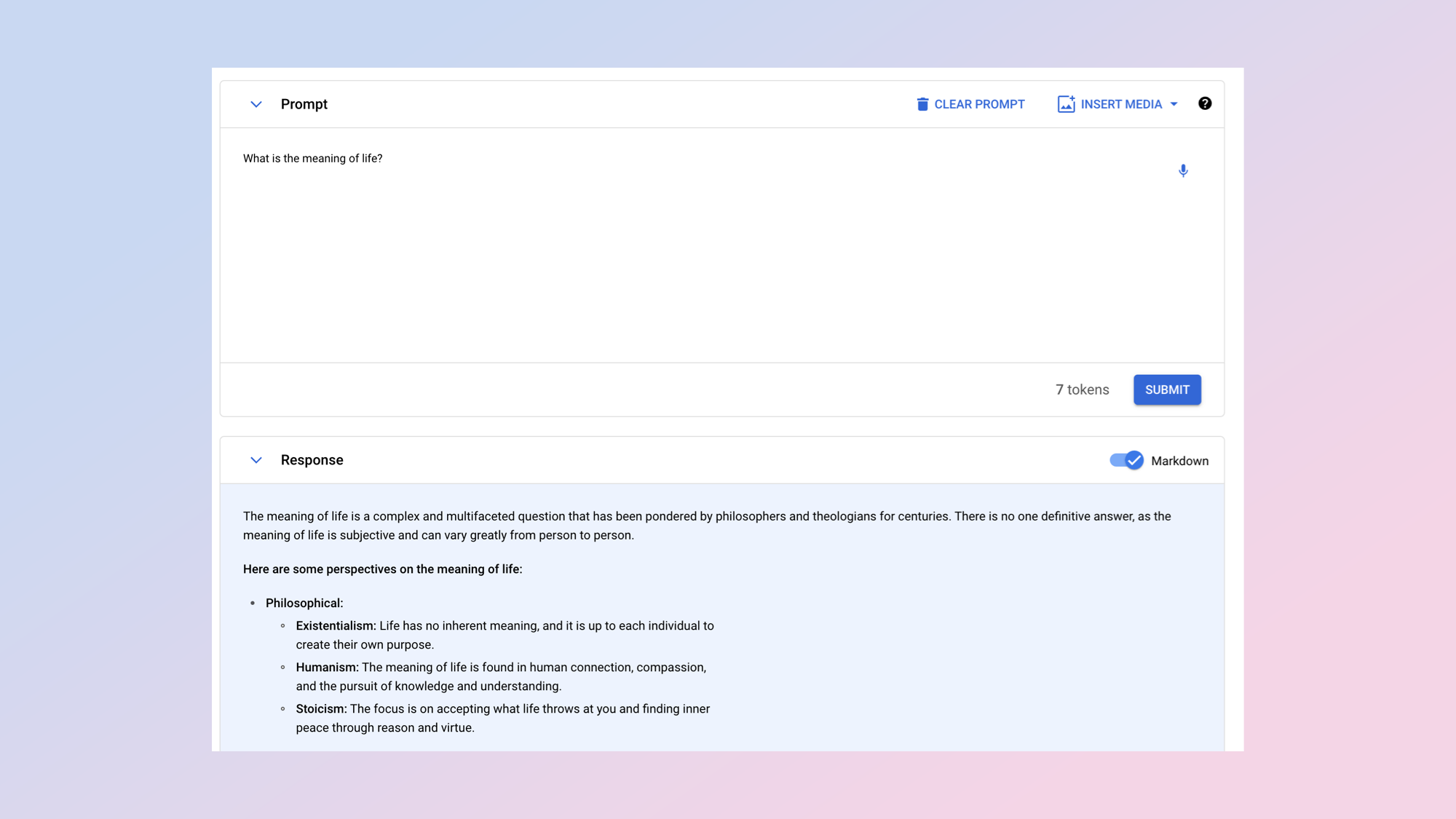

VertexAI is a powerful tool for interacting with a range of models, building out AI applications and testing what is possible, but it isn't widely accessible and is mainly targeted at developers, enterprises and researchers rather than consumers.

Using VertexAI you can insert any form of visual or audio media such as a short film or someone giving a talk and add a text prompt. This could be "give me five bullet points summing up the speech" or "how many times did they say Gemini".
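For developers curious what that pairing of media and text looks like under the hood, here is a minimal sketch in Python. It only assembles the request body and sends nothing. The field names (`contents`, `parts`, `fileData`, `mimeType`, `fileUri`) follow my understanding of Google's publicly documented request format and should be treated as an assumption to check against the current docs. The helper name `build_media_prompt` and the `gs://example-bucket/talk.mp4` path are hypothetical, made up for illustration.

```python
import json


def build_media_prompt(file_uri: str, mime_type: str, prompt: str) -> dict:
    """Pair one media file with a text prompt in a single user turn.

    Hypothetical helper: the dictionary shape is an assumption based on
    Google's documented request format, not something confirmed here.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    # The media file, referenced by location rather than inlined.
                    {"fileData": {"mimeType": mime_type, "fileUri": file_uri}},
                    # The question or instruction about that file.
                    {"text": prompt},
                ],
            }
        ]
    }


# Placeholder file path; swap in a real uploaded clip.
body = build_media_prompt(
    "gs://example-bucket/talk.mp4",
    "video/mp4",
    "give me five bullet points summing up the speech",
)
print(json.dumps(body, indent=2))
```

Swapping the prompt for "how many times did they say Gemini" changes only the text part, while the media part stays the same.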

Google's main audience for Gemini Pro 1.5 is enterprise, with partnerships already in the works with TBS, Replit and others who are using it for metadata tagging and creating code.

Google has also started using Gemini Pro 1.5 in its own products, including the generative AI coding assistant Code Assist, to track changes across large-scale codebases.

What else did Google announce?

New @Google developer launch today:

- Gemini 1.5 Pro is now available in 180+ countries via the Gemini API in public preview

- Supports audio (speech) understanding capability, and a new File API to make it easy to handle files

- New embedding model!

https://t.co/wJk1e1BG1E

April 9, 2024

The changes to Gemini Pro 1.5 were announced at Google Next along with a big update to the DeepMind AI image model Imagen 2 that powers the Gemini image-generation capabilities.

Imagen 2 is getting inpainting and outpainting, which let users add or remove elements in a generated image. This is similar to updates OpenAI made to its DALL-E model recently.

Google is also going to start grounding its AI responses across Gemini and other platforms with Google Search so they contain up-to-date information.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?