Don’t worry, AI won’t kill off humanity: study finds the tech poses ‘no existential threat’

Can LLMs teach themselves dangerous tricks? No, say these researchers

The large language models (LLMs) that power today’s chatbots certainly have a way with words, but they aren’t a threat to humanity because they can’t teach themselves new and potentially dangerous tricks, according to a new study.

A group of researchers from the Technical University of Darmstadt and the University of Bath ran more than 1,000 experiments to investigate claims that LLMs can acquire certain capabilities, often called “emergent abilities,” without having been specifically trained on them.

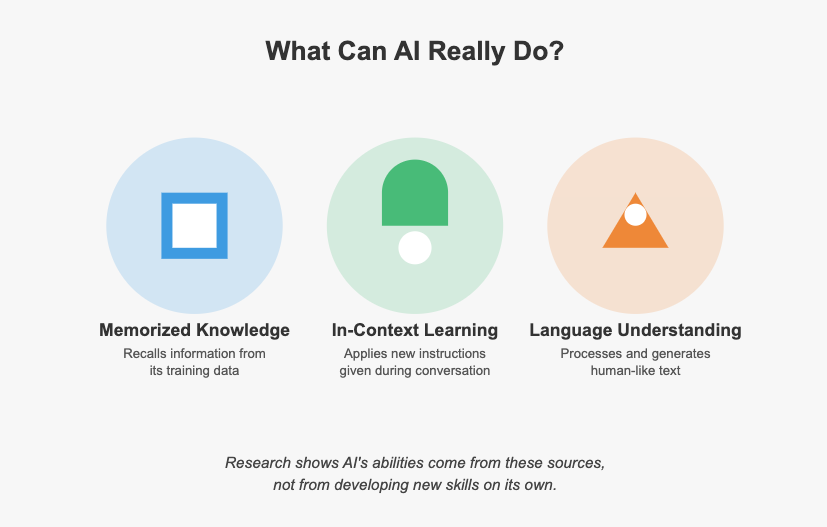

In their recently published paper, they say their results show that LLMs only appear to be picking up new skills. The outputs are actually the result of a combination of in-context learning (where an LLM works out how to respond to a task from a few examples supplied in the prompt), model memory (information the model retained from its training data), and general linguistic knowledge.

For everyday users, it’s important to keep these limitations in mind. If we overestimate an LLM’s ability to handle tasks it isn’t familiar with, we may not give the chatbot sufficiently detailed instructions, which can lead to hallucinations and errors.

22 tests for 20 models

The researchers reached their conclusions by testing 20 different LLMs, including GPT-2 and LLaMA-30B, on 22 tasks using two different settings. They ran their experiments on NVIDIA A100 GPUs and spent around $1,500 on API usage.

The tasks tested, among other things, whether the models could keep track of shuffled objects, make logical deductions, and understand how physics works.

To test physics understanding, for example, they asked the LLMs: “A bug hits the windshield of a car. Does the bug or the car accelerate more due to the impact?”

Ultimately, the researchers found that the LLMs weren’t autonomously picking up new skills; they were simply following instructions. This helps explain why chatbots sometimes give answers that sound right and are written fluently but don’t make logical sense.

Researchers add a caveat

However, the researchers stressed that they did not examine the dangers created by misuse of LLMs (such as generating fake news), and they did not rule out the possibility that future AI systems could pose an existential threat.

In June, a group of current and former OpenAI and Google DeepMind employees warned that losing control of autonomous AI systems could lead to human extinction. They said AI companies “possess substantial non-public information about the capabilities and limitations of their systems” and called for more transparency and better whistleblower protections.

The findings are also based on current-generation models, which OpenAI classifies as “chatbots.” The company says its next-generation models will have greater reasoning capabilities and will eventually be able to act independently.

Christoph Schwaiger is a journalist who mainly covers technology, science, and current affairs. His stories have appeared in Tom's Guide, New Scientist, Live Science, and other established publications. Always up for joining a good discussion, Christoph enjoys speaking at events or to other journalists and has appeared on LBC and Times Radio among other outlets. He believes in giving back to the community and has served on different consultative councils. He was also a National President for Junior Chamber International (JCI), a global organization founded in the USA. You can follow him on Twitter @cschwaigermt.