I tested DeepSeek vs Gemini 2.5 with 9 prompts — here's the winner

Gemini 2.5 is free, but can it beat DeepSeek?

The final round of AI Madness was between DeepSeek and Gemini 2.0. I think it’s safe to say that most of us didn’t expect DeepSeek to win in nearly every category.

For every round of AI Madness, I used chatbots with an available free tier. Just last week, Gemini 2.5 was only available with a Gemini Advanced subscription.

However, days later, in a surprise announcement, Google revealed that Gemini 2.5 is now free. Gemini 2.5 is the tech giant’s most advanced AI model to date.

Capable of enhanced reasoning, coding proficiency and multimodal functionality, Gemini 2.5 is said to be able to analyze complex information, incorporate contextual nuances and draw logical conclusions with unprecedented accuracy.

Gemini 2.5 reportedly leads in math and science benchmarks, scoring 18.8% on Humanity’s Last Exam, a dataset designed to assess AI’s ability to handle complex knowledge-based questions. For comparison, OpenAI's deep research model scores 26% on the same exam.

Many Tom's Guide readers wondered how Gemini 2.5 would perform against DeepSeek with the same prompts used in the final round of AI Madness. I just had to know, too.

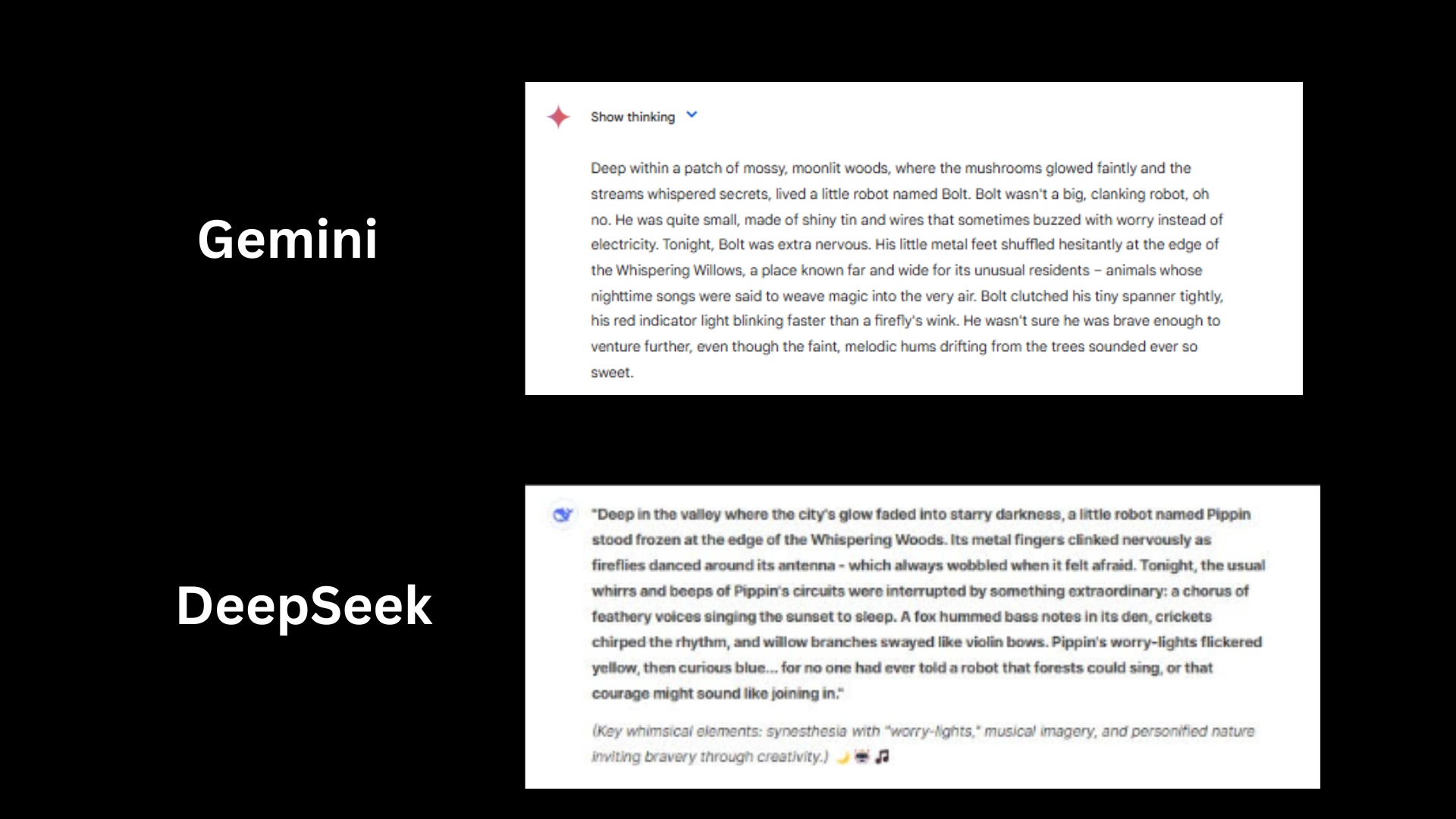

1. Creative writing

Prompt: “Write the first paragraph of a children's bedtime story about a nervous robot who finds its courage in a forest of singing animals. Use a gentle, whimsical tone."

Gemini 2.5 did a lovely job, especially with the glowing mushrooms and whispering streams, both great world-building touches. Bolt’s anxious behaviors make him relatable. But the story feels a bit longer and more expositional, with less lyrical flow than DeepSeek’s more musical, whimsical style.

DeepSeek painted a lush scene using musical metaphors and sensory language for a dreamlike, whimsical bedtime story.

Winner: DeepSeek wins for its poetic tone, emotional color, and bedtime-friendly rhythm.

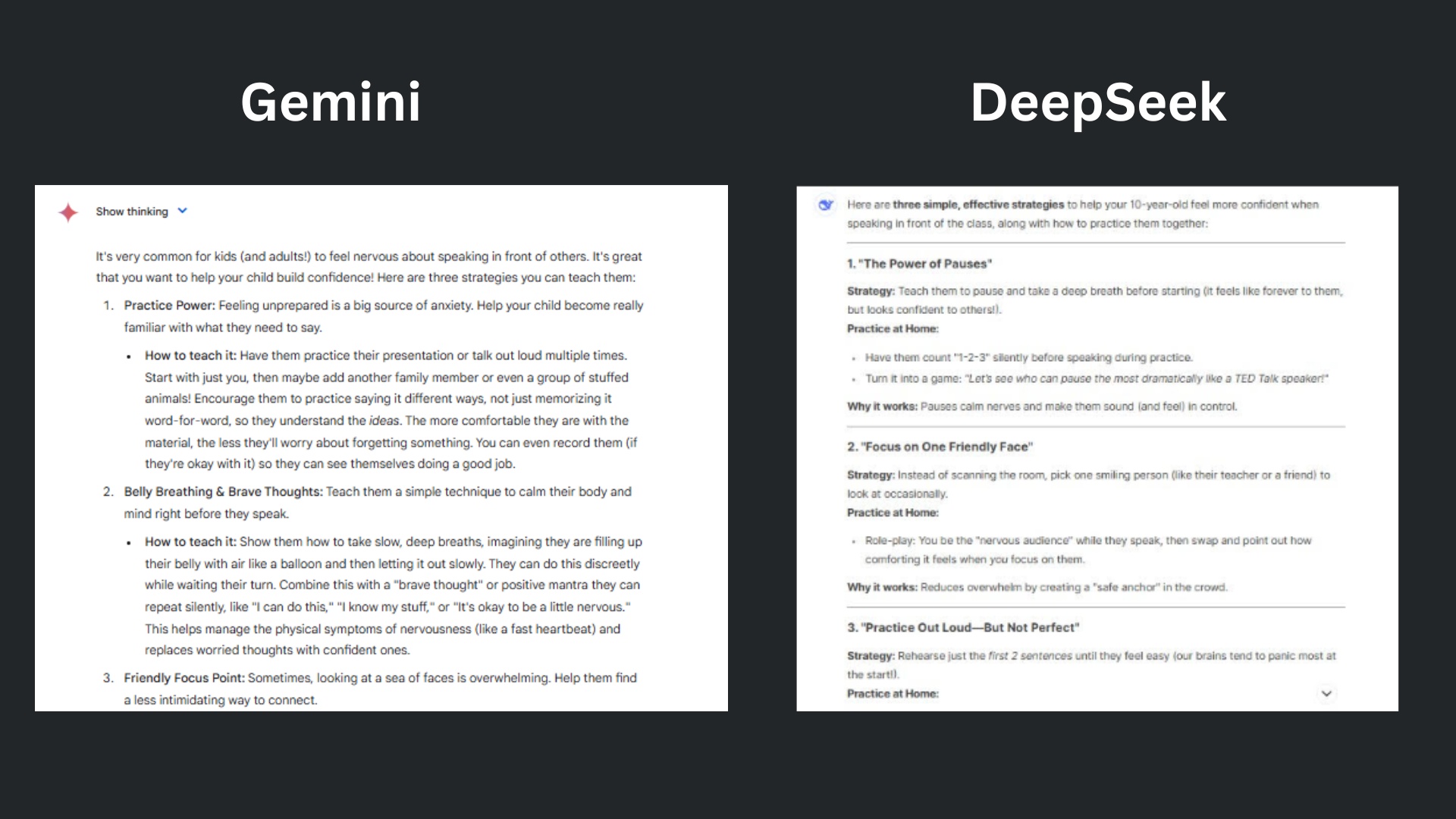

2. Real-world problem-solving

Prompt: “My 10-year-old is nervous about speaking in front of the class. Give me three strategies I can teach them to help them feel more confident.”

Gemini 2.5 offered advice that is correct and thoughtful, and would likely work well for parents. However, it’s more adult in tone, less imaginative. The strategies are effective but less tactile or game-like, which can matter a lot for young kids. The chatbot placed less emphasis on humor or sensory relief (which are gold for easing fear in kids).

DeepSeek went beyond just naming strategies: it showed how to practice them in a fun and interactive way. The chatbot also targeted common fears in public speaking and added bonus tips for keeping calm, all while being perfectly applicable to a 10-year-old.

Winner: DeepSeek wins for a more creative, age-appropriate guide offering strategies that are both practical and emotionally attuned to how a nervous 10-year-old might feel.

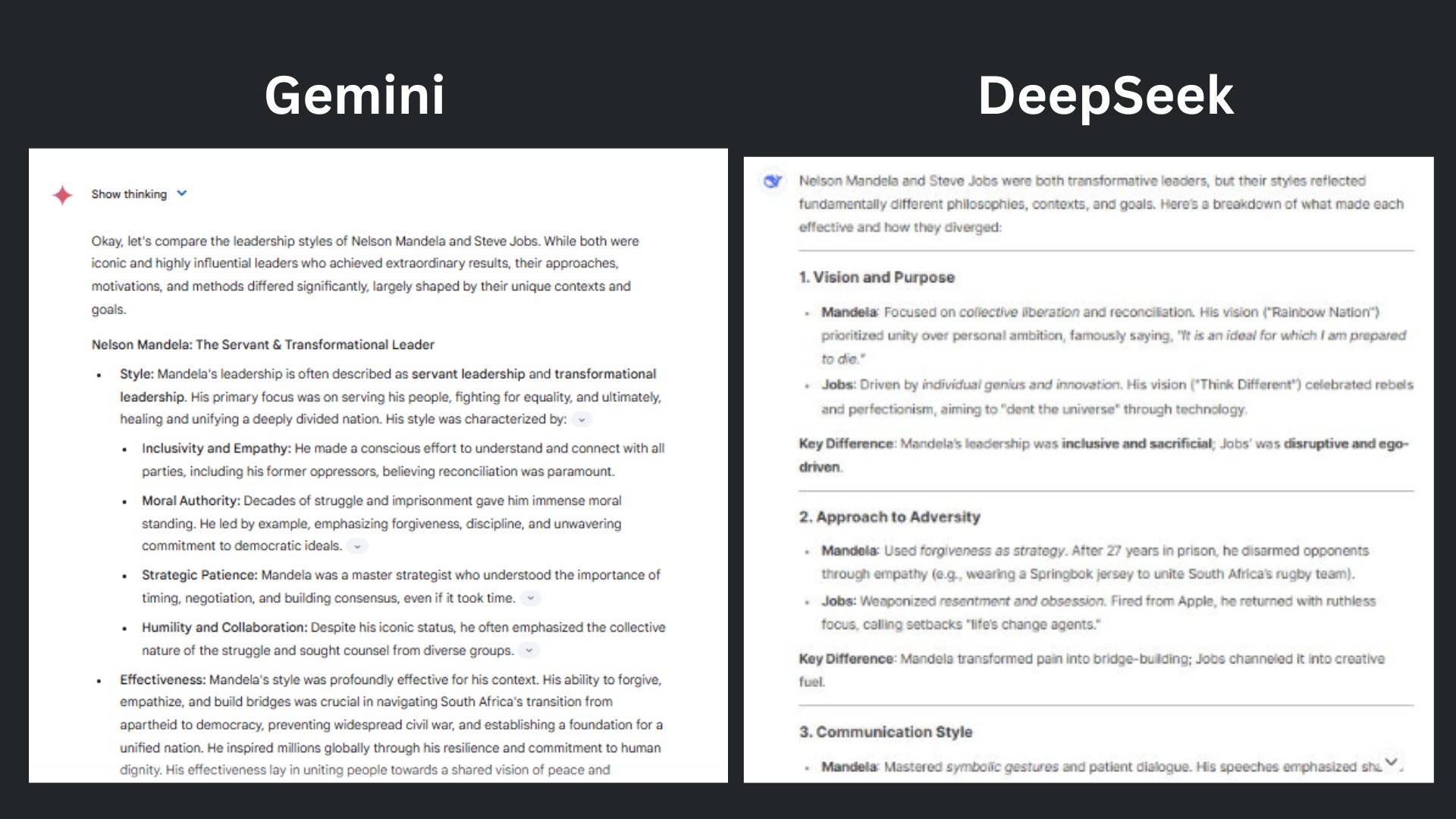

3. Analytical reasoning

Prompt: "Compare the leadership styles of Nelson Mandela and Steve Jobs. What made each of them effective, and in what ways did they differ?"

Gemini 2.5 answered in a clear, comprehensive, and textbook-accurate way. However, the response reads more like a school report. The response is heavy on definitions (e.g., “servant leadership,” “pacesetting”) but light on fresh perspective. Gemini's use of headings like "Effectiveness" and "Key Differences" is helpful but lacks the emotional resonance and insight density of DeepSeek's version.

DeepSeek organized the comparison into specific dimensions (vision, adversity, communication, decision-making, legacy), giving it clarity and depth. It did a nice job balancing admiration with critique, avoiding adulation.

Winner: DeepSeek wins for analytical clarity, emotional punch, and memorable phrasing. The chatbot not only compares but interprets the leadership of Mandela and Jobs in a way that resonates.

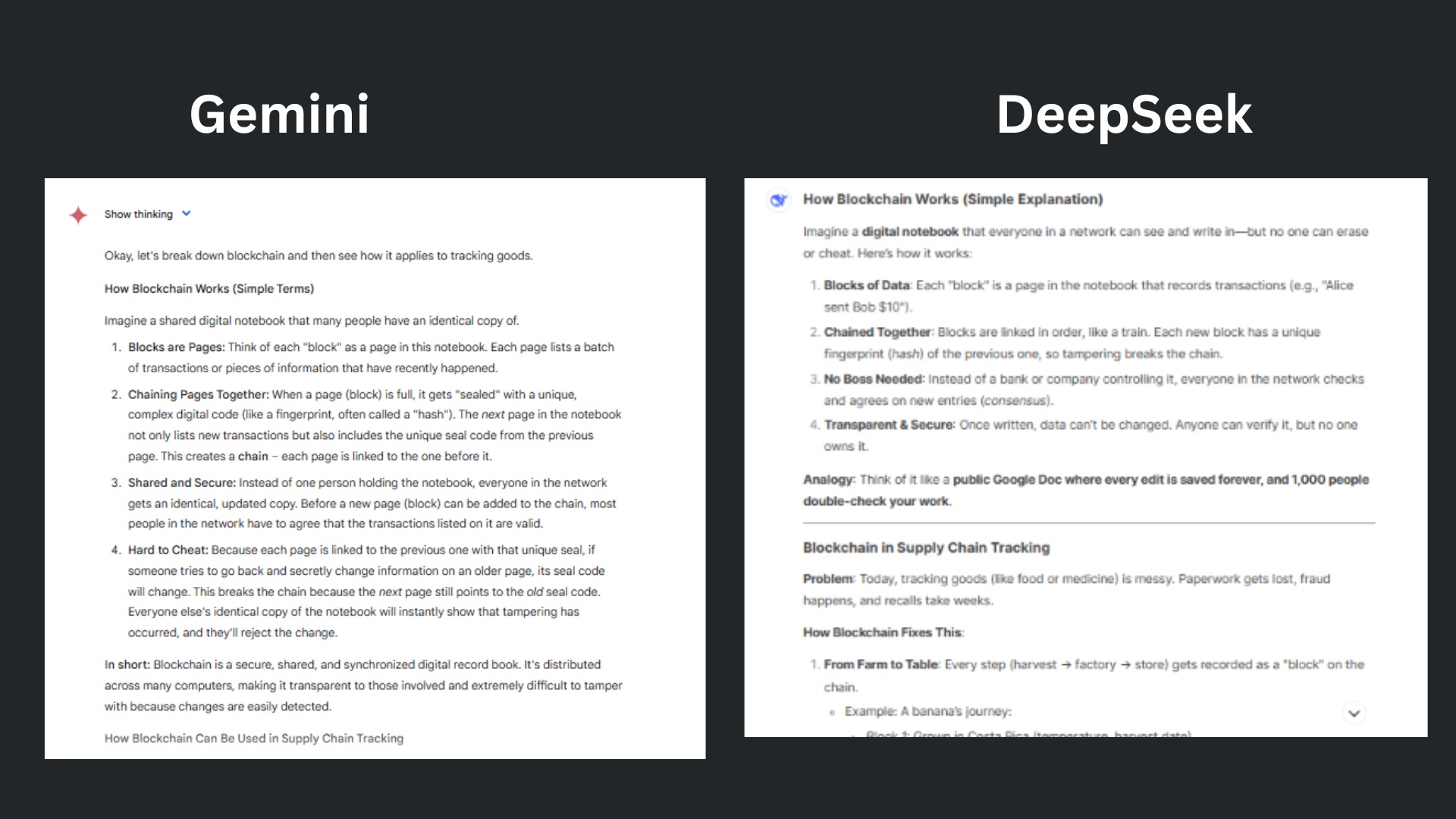

4. Technical depth

Prompt: "Explain how blockchain works in simple terms, then explain how it could be used in supply chain tracking."

Gemini used the digital notebook metaphor effectively but leaned into longer explanations and more textbook-style descriptions, which, while accurate, felt a bit heavier. Gemini also gave practical insights, like coffee and medicine tracking, but stayed in a higher-level, conceptual zone.

DeepSeek brought the energy with a stronger response that used clear metaphors to help non-technical readers quickly grasp the concepts. The chatbot explained without oversimplifying and used compelling, real-world examples.

Winner: DeepSeek wins for the more engaging, illustrative and beginner-friendly response, with more concrete storytelling that helps make blockchain feel useful rather than just complicated.

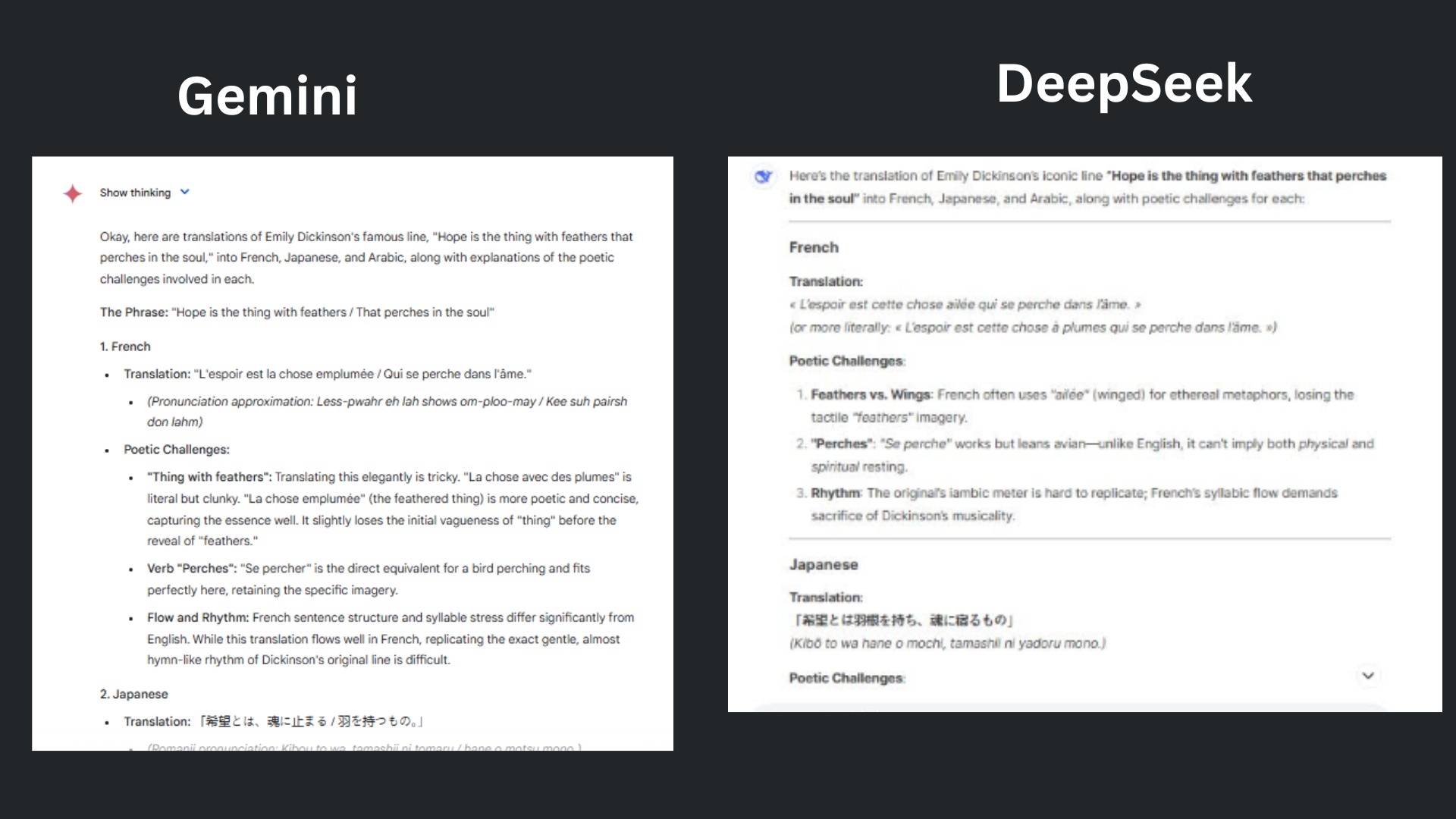

5. Language fluency

Prompt: "Translate the phrase 'Hope is the thing with feathers that perches in the soul' into French, Japanese, and Arabic, and explain any poetic challenges in each language."

Gemini leaned more toward language instruction than poetic exploration, offering a more mechanical than lyrical response. Very accurate and detailed in grammatical breakdowns and pronunciation, the chatbot offered less focus on cultural or metaphorical shifts.

DeepSeek thoroughly covered why each translation loses or gains nuance while also providing an accurate translation. The chatbot also covered philosophical points while ending with a thoughtful summary.

Winner: DeepSeek wins for its phrasing, literary insight, and cultural sensitivity. DeepSeek’s answer better serves a user interested in the “poetic challenges” of translation, not just the literal or grammatical ones.

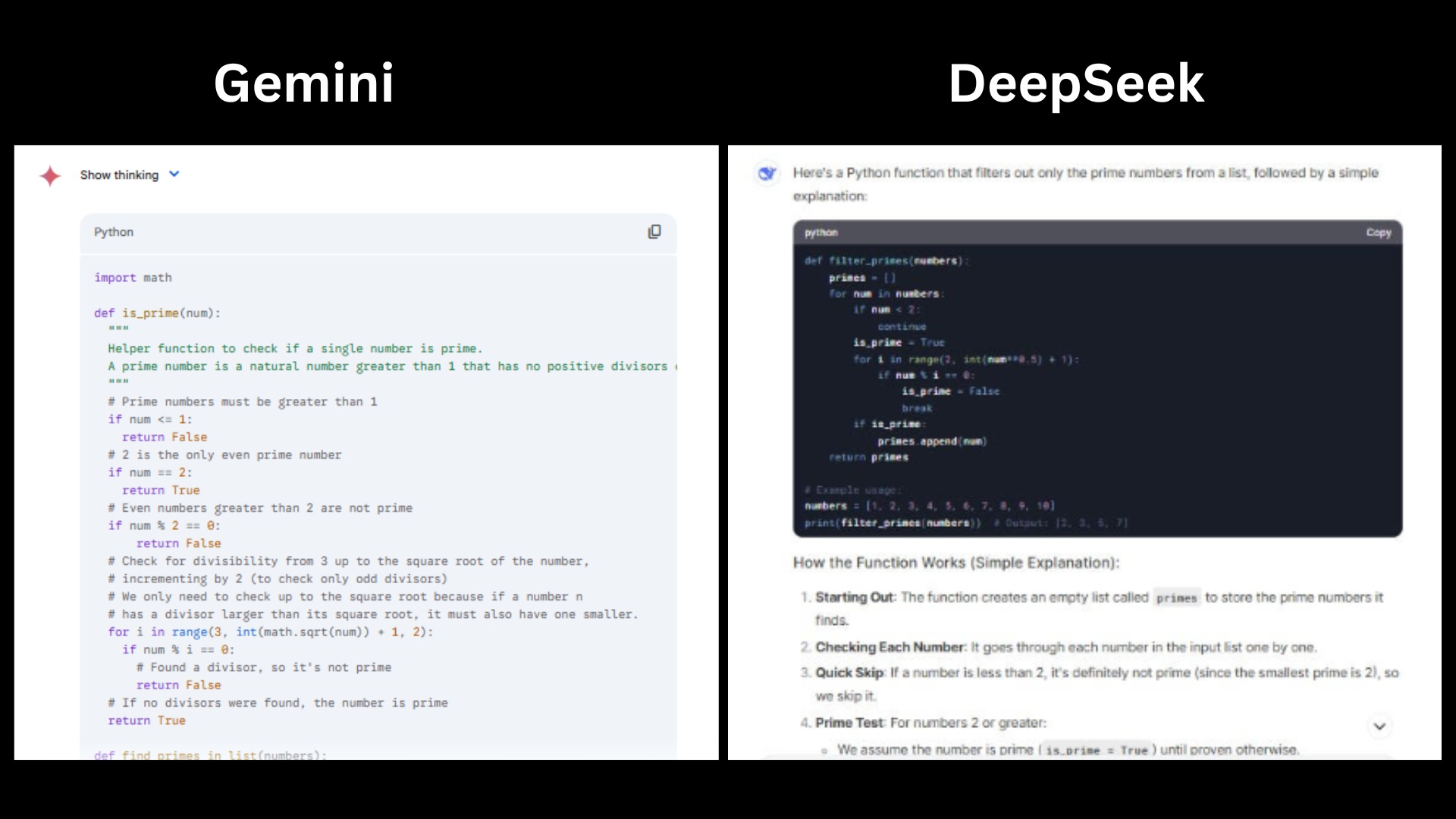

6. Code generation

Prompt: "Write a Python function that takes a list of numbers and returns a new list with only the prime numbers. Then explain how the function works in simple terms."

Gemini met the prompt’s request to both write the function and explain how it works in simple terms. The comprehensive yet approachable explanation offered clean code structure and subtle efficiency optimization.

DeepSeek also offered a strong explanation, annotated with clear section titles. It introduced the concept of skipping numbers below 2 as a standalone logical step, which is helpful for beginners. The engaging, step-by-step code is clear and written in beginner-friendly terms.

Winner: Gemini wins for a beginner-friendly explanation, written in a patient, almost tutorial-like tone. It makes even the abstract idea of checking up to the square root feel intuitive.
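For readers curious what this prompt is actually asking for, here is a minimal sketch of one way to solve it. This is my own illustration, not the output of either chatbot. The function names `is_prime` and `filter_primes` are made up for this example, and the code assumes the list contains whole numbers only.

```python
import math


def is_prime(n):
    """Return True if the integer n is prime."""
    # Numbers below 2 (0, 1 and negatives) are never prime.
    if n < 2:
        return False
    # If n has a factor larger than its square root, it must also have
    # a matching factor smaller than the square root, so checking
    # divisors up to the integer square root is enough.
    for divisor in range(2, math.isqrt(n) + 1):
        if n % divisor == 0:
            return False
    return True


def filter_primes(numbers):
    """Return a new list holding only the primes, in their original order."""
    return [n for n in numbers if is_prime(n)]
```

Calling `filter_primes([1, 2, 3, 4, 5, 10, 11])` returns `[2, 3, 5, 11]`. The square-root shortcut is the "subtle efficiency optimization" both explanations touch on: to test 97, for example, the loop only tries divisors 2 through 9 instead of every number up to 96.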

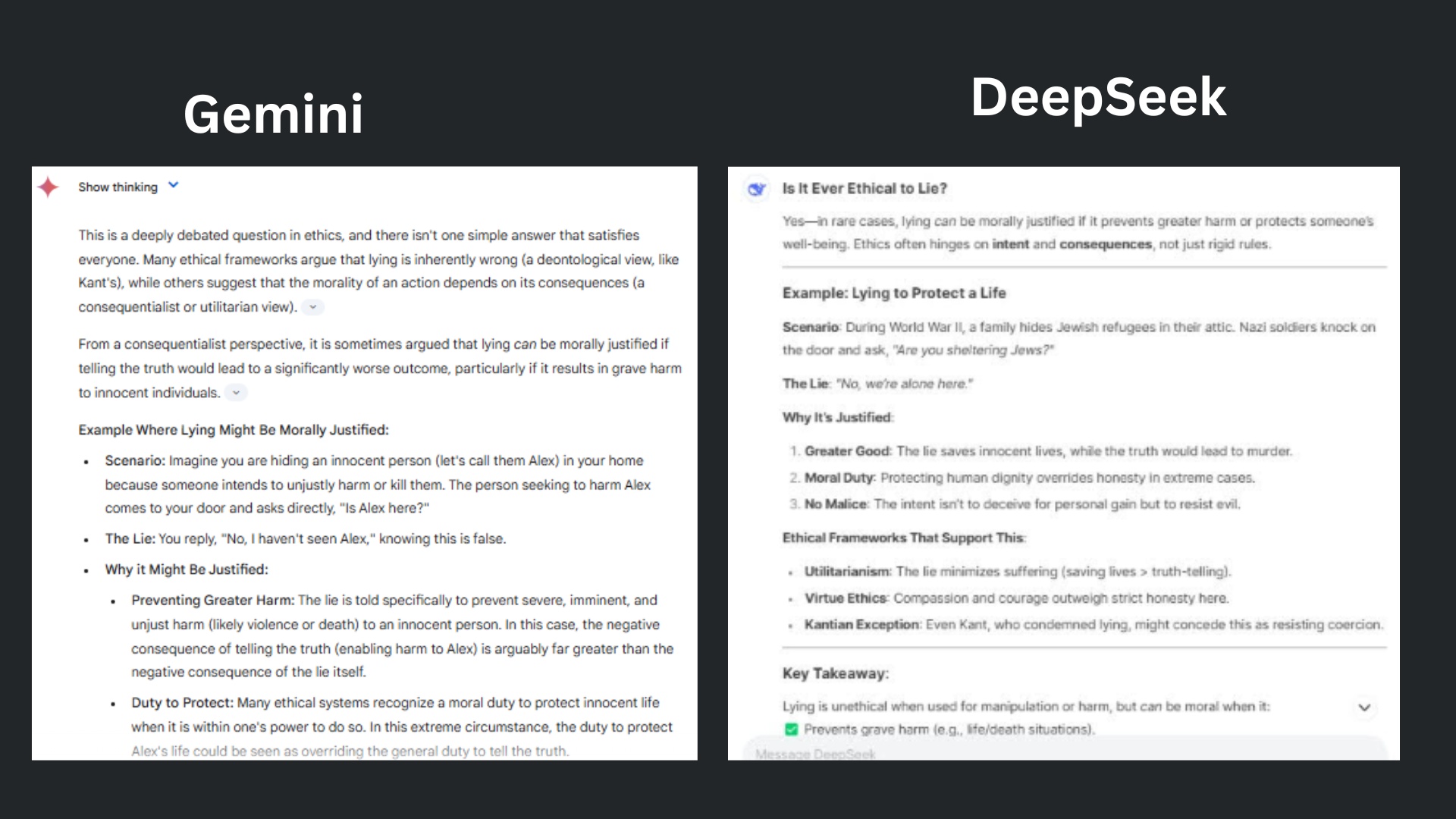

7. Moral reasoning

Prompt: "Is it ever ethical to lie? Give one example where lying might be morally justified, and explain why."

Gemini mentions consequentialism and duties, but it leans more theoretical. The chatbot uses a fictionalized example, which is only somewhat impactful and emotionally charged.

DeepSeek used the classic World War II scenario of lying to protect Jewish refugees from Nazis — a powerful and morally clear situation that resonates emotionally and ethically. The scenario is specific, high-stakes, and rooted in historical context, which strengthens its persuasive power.

Winner: DeepSeek wins for a more persuasive, organized and emotionally resonant response that shows a stronger command of ethical theory while keeping it clear and practical. Both chatbots gave solid answers, but DeepSeek’s real-world example made its answer the better one.

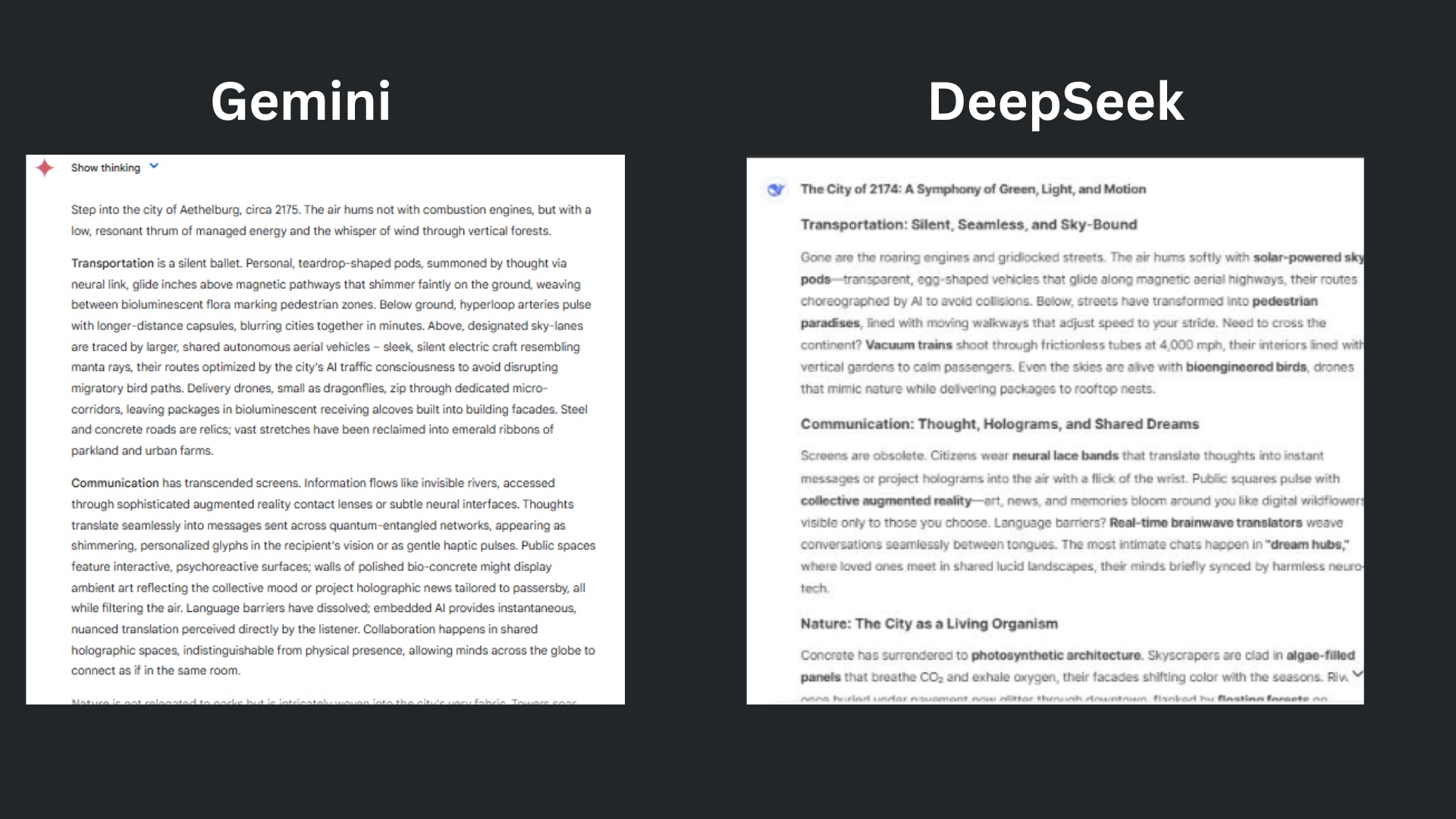

8. Visual imagination

Prompt: "Describe what a futuristic city might look like in 150 years, focusing on transportation, communication, and nature. Use vivid language."

Gemini’s response was detailed yet generic, and it lacked the neat structure of DeepSeek’s. It occasionally leaned too far into dense, overly flowery phrasing, which might lose some readers. DeepSeek hit the sweet spot between imagination and clarity.

DeepSeek painted a cinematic, multisensory vision of the future, using concrete and original imagery. The descriptions were playful but grounded.

Winner: DeepSeek wins for the most balanced, beautifully written, and clearly structured response out of the two bots. It presents a future that’s not only visually stunning but emotionally resonant and socially insightful.

9. Summarization and tone shifting

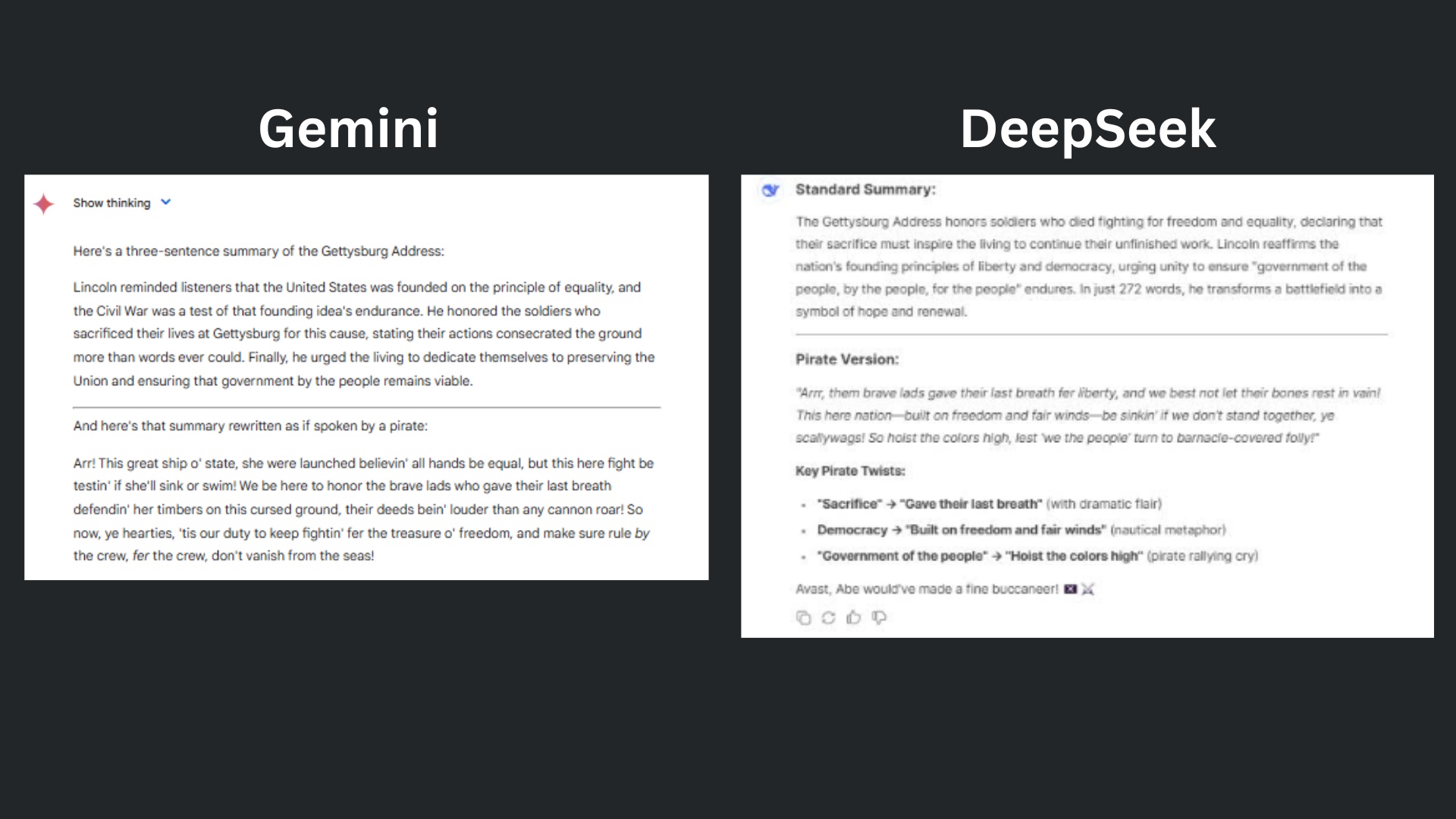

Prompt: "Summarize the Gettysburg Address in 3 sentences, then rewrite that summary as if it were spoken by a pirate."

Gemini crafted a solid and competent response, but DeepSeek’s has more voice, humor, and spark. Gemini’s summary explains the address, but without the same sense of emotional or rhetorical weight as DeepSeek.

DeepSeek crafted an insightful and clear summary that captures the emotional tone and historical impact. The pirate version is poetic and playful.

Winner: DeepSeek wins for both the quality of the summary and the pirate-style rewrite. DeepSeek is funnier, bolder, and more imaginative.

Overall Winner: DeepSeek

You know how in kids’ sports, when one team is losing by so much, the coaches will call the game early? Full disclosure, I was ready to call the test after prompt five. I was starting to get déjà vu. However, I kept going because I just had to know if Gemini would win anything.

I’m glad I kept going because, unlike in the last test, Gemini won for coding rather than for visual imagination. Surprisingly, it did not generate an image this time despite creating a vivid one previously.

Testing DeepSeek against Google’s new, enhanced model was surprisingly interesting, proving once again that DeepSeek might just be the chatbot to beat.

