Damning new AI study shows that chatbots make errors summarizing the news over 50% of the time — and this is the worst offender

Not their strong suit

A recent BBC investigation has revealed that leading AI chatbots — including OpenAI's ChatGPT, Microsoft's Copilot, Google's Gemini, and Perplexity AI — frequently produce significant inaccuracies and even distortions when summarizing news stories.

The study found that more than half of the chatbots' responses contained major flaws.

For the study, the BBC presented 100 news articles from its website to the four AI chatbots and asked each one to write a summary. Subject matter experts from the BBC then assessed the quality of these summaries. The findings were concerning: 51% of the AI-generated answers contained significant errors, including factual inaccuracies, misquotations and outdated information.

Specific inaccuracies identified

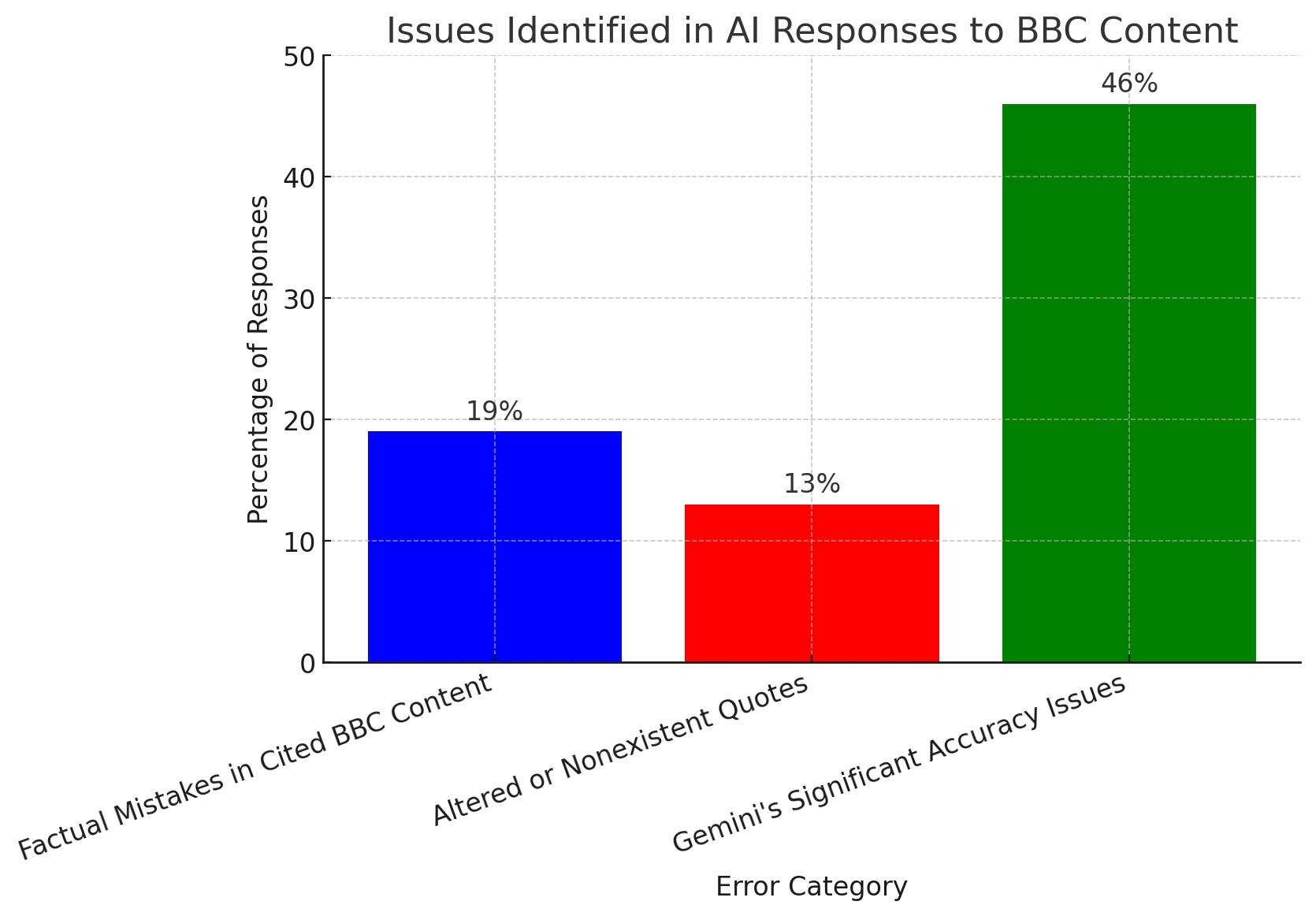

Among the errors, 19% of the AI responses that cited BBC content introduced factual mistakes, such as incorrect dates, numbers and statements. Additionally, 13% of the quotes attributed to the BBC were either altered from their original form or did not exist in the cited articles.

The study highlighted that Gemini's responses were particularly problematic, with 46% flagged for significant accuracy issues.

Notable examples, shown in the graph above, include Gemini's misrepresentation of NHS guidelines. Gemini incorrectly stated that the UK's National Health Service (NHS) advises against vaping as a method to quit smoking. In reality, the NHS recommends vaping as a viable aid for those attempting to stop smoking.

Other issues included outdated political information: both ChatGPT and Copilot erroneously reported that Rishi Sunak and Nicola Sturgeon were still serving as the UK's Prime Minister and Scotland's First Minister, respectively, even though both had already left office.

Additionally, Perplexity misquoted the BBC's Middle East coverage, inaccurately attributing to it statements that Iran initially showed "restraint" and that Israel's actions were "aggressive."

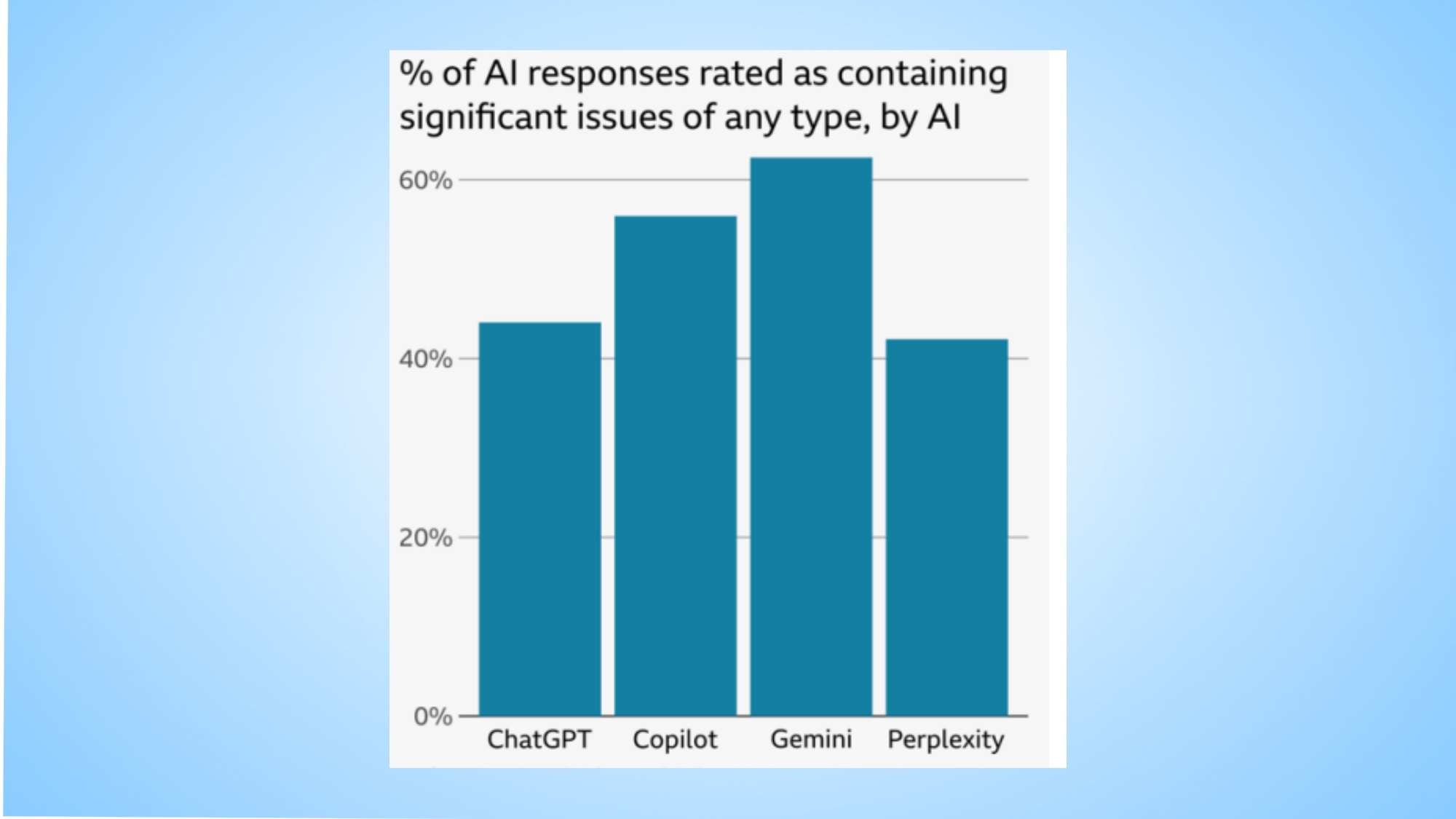

The BBC also reported the percentage of AI-generated responses rated as containing significant issues, shown in the blue graph above. The results point to accuracy and reliability concerns across all of the tested AI models when answering news-related questions. According to the study:


- Gemini (Google) had the highest percentage of problematic responses, exceeding 60%.
- Copilot (Microsoft) followed closely, with issues present in more than 50% of responses.
- ChatGPT (OpenAI) and Perplexity showed comparatively fewer significant issues, with each hovering around 40%.

This research underscores the urgent need for AI companies to improve accuracy, transparency, and fact-checking mechanisms, especially in news-related queries.

Industry response and concerns

Deborah Turness, CEO of BBC News and Current Affairs, expressed concern over these findings. In a blog post, she emphasized that while AI offers "endless opportunities," the technology's current application in news summarization is fraught with risks. Turness asked: "We live in troubled times, and how long will it be before an AI-distorted headline causes significant real-world harm?"

Turness called on AI developers to "pull back" their news summarization tools, pointing to the precedent set when Apple paused its AI-generated news summaries after the BBC reported misrepresentations. She urged a collaborative approach, saying the BBC seeks to "open up a new conversation with AI tech providers" to find solutions together.

An OpenAI spokesperson responded to the study, noting, "We support publishers and creators by helping 300 million weekly ChatGPT users discover quality content through summaries, quotes, clear links, and attribution." They added that OpenAI has collaborated with partners to improve citation accuracy and respect publisher preferences.

Implications for the future

The BBC's findings highlight the challenges of integrating AI into news dissemination. The prevalence of inaccuracies not only undermines public trust but also poses potential risks, especially when misinformation pertains to sensitive topics.

Pete Archer, the BBC's Program Director for Generative AI, emphasized that publishers should have control over their content's usage. He advocated for transparency from AI companies regarding how their assistants process news and the extent of errors they produce. Archer stated, "This will require strong partnerships between AI and media companies and new ways of working that put the audience first and maximize value for all."

With AI rapidly integrating into various industries, this study underlines the necessity for rigorous oversight, collaboration and a commitment to accuracy to ensure that technological advancements serve the public good without compromising the integrity of information.

