Intel says Copilot will run on your laptop — but only Snapdragon chips can handle it

Maybe speak to Qualcomm

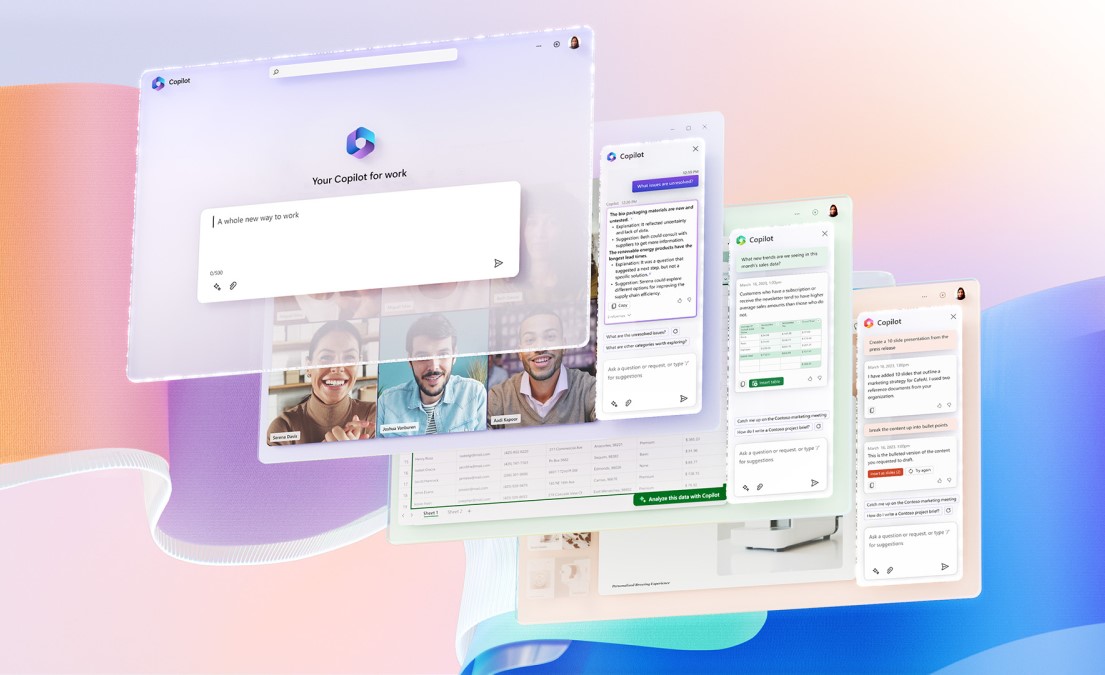

Copilot has become so ingrained in the Microsoft ecosystem that there is even a new keyboard key dedicated to the AI chatbot. Now it looks like much of its functionality could happen offline, with the processing happening on your laptop — but not if you’ve got a new Intel AI PC.

At the recent Intel AI Summit in Taipei, executives for the chip manufacturer told our sister publication Tom's Hardware that none of the current generation Intel Core Ultra chips meet the minimum requirements for running Copilot offline and on your device.

It all comes down to trillions of operations per second (TOPS), a measure of how fast the NPU (neural processing unit) can run AI tasks, with higher numbers meaning better performance. Running Copilot locally requires an NPU rated at 40 TOPS or more, and at the moment Intel Core Ultra chips manage only 10 TOPS.

Currently, the only processor commonly used to run Windows that meets this requirement is the Qualcomm Snapdragon X Elite, with 45 TOPS. Intel says its next generation of Core Ultra chips will meet the minimum requirements.
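To make the comparison concrete, here is a minimal Python sketch that checks each chip's NPU rating against the 40 TOPS minimum. The figures are the ones quoted in this article (the AMD number comes from the table further down). The names `MIN_NPU_TOPS`, `meets_requirement` and `shortfall` are illustrative only and not part of any Microsoft or Intel tool.

```python
# Minimum NPU rating the article cites for running Copilot locally.
MIN_NPU_TOPS = 40

# NPU figures as quoted in this article (not independently measured).
npu_tops = {
    "Intel Core Ultra": 10,
    "AMD 8040 series": 16,
    "Qualcomm Snapdragon X Elite": 45,
}


def meets_requirement(tops, minimum=MIN_NPU_TOPS):
    """Return True when an NPU's TOPS rating reaches the minimum."""
    return tops >= minimum


def shortfall(tops, minimum=MIN_NPU_TOPS):
    """Return how many TOPS a chip is missing (0 if it meets the minimum)."""
    return max(0, minimum - tops)


for chip, tops in npu_tops.items():
    if meets_requirement(tops):
        status = "meets the minimum"
    else:
        status = f"falls short by {shortfall(tops)} TOPS"
    print(f"{chip}: {tops} TOPS, {status}")
```

By these numbers only the Snapdragon X Elite clears the bar, while the Intel chip would need four times its current NPU rating.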

Why run Copilot locally?

At the moment, the processing for Copilot's impressive generative AI abilities happens in the cloud, with data sent out to Microsoft servers and responses returned. But long term, the aim has been to bring at least some of that onto the device itself.


There are multiple reasons for running an AI tool like Copilot locally, including privacy protection, security, offline access and cost: your machine, not the cloud, does the expensive processing.

The challenge is having enough compute power to run elements of the Copilot experience without the user noticing the machine slowing down or the battery draining quickly.


Battery woes are largely solved by pushing much of the inference off to the NPU rather than relying on the GPU. According to Todd Lewellen, Vice President of Intel's Client Computing Group, Microsoft insists that Copilot run on the NPU because of the impact on battery life.

I can install a large language model like Meta's Llama 2 on my M2 MacBook Air today, but I certainly notice when it's thinking or responding, as my entire machine freezes until it's done. That won't be acceptable for a mainstream application like Copilot, or a future version of Siri.

What are TOPS and why do they matter?

| Company | Chip | NPU TOPS |
|---|---|---|
| Intel | Core Ultra | 10 |
| AMD | 8040 series | 16 |
| Qualcomm | Snapdragon X Elite | 45 |

TOPS is the number of trillions of operations per second that a chip can handle. Specifically, it is a measure of the arithmetic operations the processor can perform in a second, and it is particularly useful as a measure of performance in AI and machine learning tasks.
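As a rough illustration of where such a figure comes from, a peak TOPS rating is commonly estimated by multiplying the number of multiply-accumulate (MAC) units by two operations per MAC (one multiply, one add) and by the clock speed. The Python sketch below assumes that convention. The hardware numbers are hypothetical and do not describe any chip named in this article, and `peak_tops` is an illustrative name.

```python
def peak_tops(mac_units, clock_hz, ops_per_mac=2):
    """Estimate theoretical peak TOPS.

    Assumes every MAC unit completes one multiply and one add per cycle,
    which is a best case that real workloads rarely sustain.
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


# Hypothetical NPU: 4,096 MAC units clocked at 1.4 GHz.
estimate = peak_tops(mac_units=4096, clock_hz=1.4e9)
print(f"{estimate:.2f} TOPS")  # prints 11.47 TOPS
```

This is a theoretical ceiling rather than a measured result, so a headline TOPS number says more about what a chip could do than about how a given model will actually run on it.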

A TOPS figure is often given for the chip as a whole, CPU and NPU combined, but according to Intel a higher NPU value is better for overall performance on LLM tasks.

Lewellen told Tom's Hardware that reaching the magical 40 TOPS for an NPU would “enable us to run more things locally.”

Don’t expect to run all of Copilot on your machine, though, just most of the key capabilities. Extra features such as some image generation and editing will likely require an internet connection and access to cloud services for some time.

“The general trend is to offload as much of it [the AI processes] to endpoint [on-device] as possible,” David Feng, VP of Client Computing Group at Intel, told Tom’s Guide at MWC.

When can I run AI on my laptop?

There are already a number of AI apps that run services locally, including image and video editors, but they’re mainly using the GPU. Intel is actively working with developers to encourage better use of the NPU, but that might not happen until we get the next-gen chips.

In the meantime, Qualcomm is running away with the Windows AI space. Its often-delayed Snapdragon X Elite chips run Windows and are rated at 45 TOPS for the onboard NPU.

Qualcomm CFO Dom McGuire predicted the low TOPS count in the Intel chips would be an issue when they were first unveiled. Speaking at MWC in Barcelona in February he told Tom’s Guide there isn’t much you can do with just a handful of TOPS.


“We can support 70+ TOPS of AI performance across all three of our different processor units,” he explained.

He added that the Intel AI PCs could only handle about 10 TOPS. “There’s not much you can do from an AI user experience with 10 TOPS.”

Even Apple only tops out at 34 TOPS on its highest-end A17 Pro mobile chips and the M2 Ultra desktop chip, but with its tighter integration between operating system and hardware, it is able to make better use of the available processing capability and spread loads across the entire system.

The answer is that you can run AI on your laptop today. Most of the time it will use the GPU, and you’ll see an impact on battery life or overall system performance. However, in the next year or two, as second-generation AI PCs and new software come out, local AI will become the norm.

What is certain is that you're going to hear a lot more about TOPS in the next few years as companies seek to capitalise on the capabilities of the NPU for running complex calculations and AI processes without a major battery life impact.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?


mortiz29772: As always, misleading marketing communications. Customers have to educate themselves before buying all the lies from marketing. Just as Arm architecture can't be compared fairly to x86, you can't compare TOPS from Arm SoCs to x86 SoCs. People need to educate themselves properly before falling for marketing strategies: https://towardsdatascience.com/when-tops-are-misleading-70b53e280c39

https://www.embedded.com/tops-vs-real-world-performance-benchmarking-performance-for-ai-accelerators/