Tom's Guide Verdict

Excellent AI model for a wide variety of tasks. Fast, versatile and the current leader for code work.

Pros

- Fast and powerful LLM
- Reliable and coherent outputs
- Relatively long-form input
- Artifacts and Workbench functions

Cons

- More expensive than most rivals
- Drastic rate limits


We'll almost certainly look back on 2024 as the start of an epoch-defining decade. Artificial intelligence finally made good on early promises and arrived in earnest. Nowhere has this been more obvious than in the battle of the LLMs, the large language models which lie at the heart of the revolution.

These LLMs are the tools we use on our computers, phones and the web to access the power of AI. They’re typically used for everything from coding new websites to writing emails, presentations and much, much more. Type or speak a question and they answer with what you need. It’s like web search on steroids.

Whether you’re an AI believer or skeptic, it’s impossible to deny the huge changes that are happening around the world as people and businesses deploy these tools to help tackle personal and business tasks in earnest.

Two of the main protagonists on the front lines are OpenAI with its ChatGPT model, and Anthropic with Claude. Of the two, the biggest surprise has been how fast Claude has improved in its short life. Anthropic was founded in 2021 by ex-OpenAI executives and siblings Dario and Daniela Amodei, to provide a ‘public benefit company’ alternative to the established AI companies of the time.

The company launched the Claude LLM in 2023, billed as a ‘safe and reliable’ model which would be focused on avoiding AI hazards. Despite receiving over $6 billion in investment promises from Google and Amazon, the company’s first model, Claude, was released to a lukewarm public reception. It was felt to be too restrictive to be of practical general use.

However, the release of Claude 3.5 Sonnet in June of 2024 really set the AI world alight, with its remarkable utility and versatility across a wide range of uses. Suddenly OpenAI is up against a serious rival, which many people feel is superior to ChatGPT, especially in terms of programming and general chain-of-thought tasks.

All of which makes it worthy of review as one of the world's top large language models.

Claude review: First Impressions

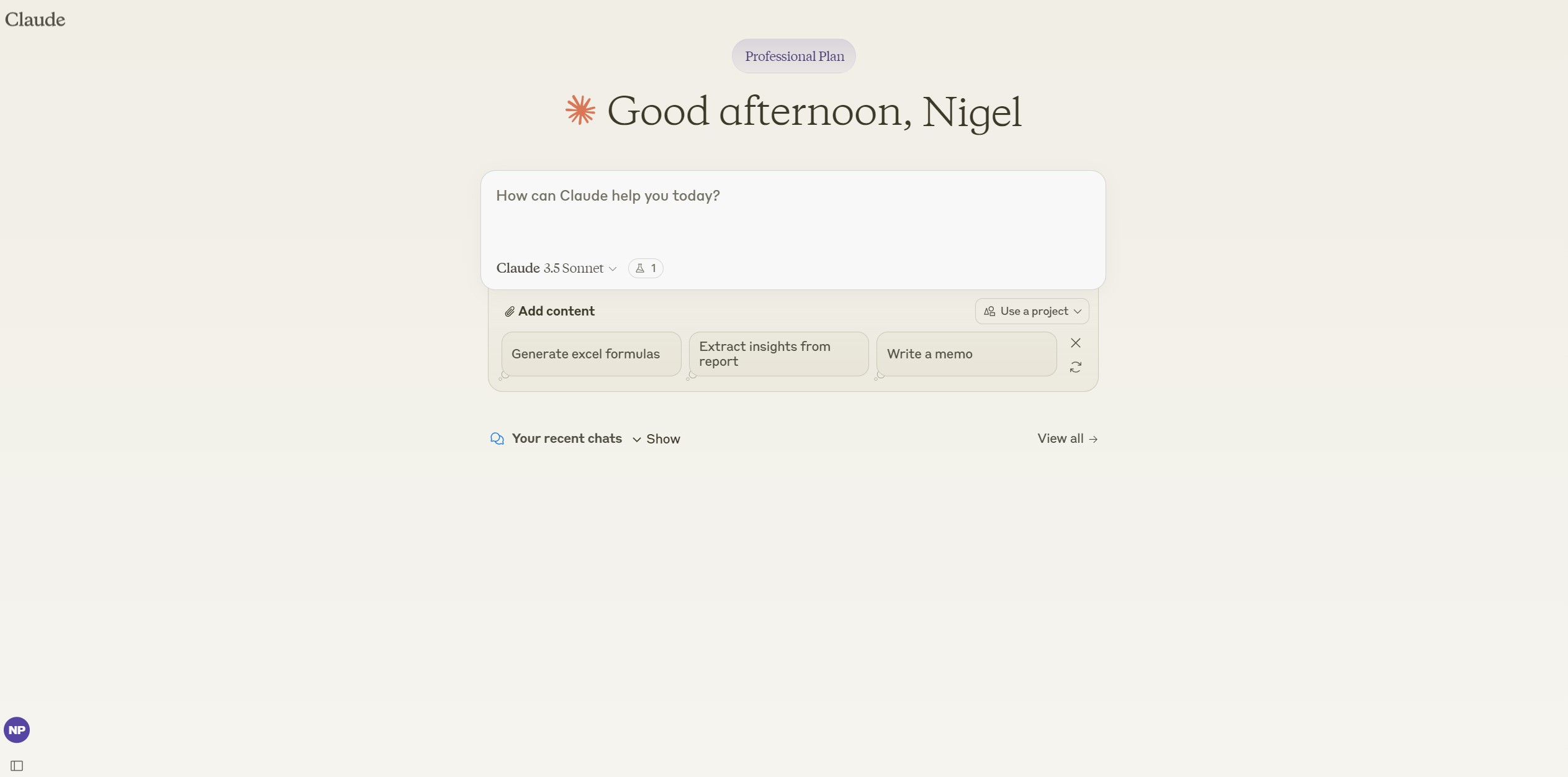

Signing up for an Anthropic account is straightforward at Claude.ai. Once logged in with email or a Google account you can start using the prompt box immediately. The default free account comes with a strict limit of 5 requests per minute and 300K tokens per day. It sounds like a lot, but it’s very easy to use up those limits if you really start to iterate on a project.
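To show how quickly a daily token cap can disappear, here is a rough back-of-envelope sketch in Python. It assumes every new request resends the whole conversation so far, and the per-turn sizes (1,500 tokens of prompt, 2,500 tokens of reply) are invented figures for illustration, not measurements from my tests.

```python
def turns_within_budget(daily_budget: int, prompt_tokens: int, reply_tokens: int) -> int:
    """Count how many chat turns fit inside a daily token budget.

    Assumption: each turn resends the full history, so a turn costs
    (history so far + new prompt + new reply) tokens.
    """
    per_turn = prompt_tokens + reply_tokens
    if per_turn <= 0:
        raise ValueError("a turn must use at least one token")

    used = 0      # tokens spent so far today
    history = 0   # tokens carried forward from earlier turns
    turns = 0
    while True:
        cost = history + per_turn
        if used + cost > daily_budget:
            return turns
        used += cost
        history += per_turn
        turns += 1


# Hypothetical iteration session against a 300K tokens-per-day limit.
print(turns_within_budget(300_000, prompt_tokens=1_500, reply_tokens=2_500))
```

With those invented numbers the 300K budget is gone after 11 turns, whereas a flat 4,000 tokens per turn with no history would have allowed 75.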

Basically if you want to do anything more than simple text work, like summaries or translation, then you'll be better off upgrading to the Pro Plan at $20 a month. At this level you're entitled to 4000 requests per minute on a pay as you go basis.

Another good option is to use a third party app and the Claude API, which doesn't seem to suffer any obvious rate limits. I regularly use the API with TypingMind.com, on a PAYG token basis and it’s great. The only issue is currently API users don’t have access to the Artifacts feature of Claude, but hopefully that will arrive soon.
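For the curious, this is roughly the kind of request a third-party app sends to the Claude API on your behalf. It is a minimal sketch using only the Python standard library; the endpoint, header names and model ID are as I understand them from Anthropic's API documentation at the time of writing, so check the current reference before relying on them. The function names are my own.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(api_key: str, prompt: str,
                  model: str = "claude-3-5-sonnet-20240620",
                  max_tokens: int = 1024):
    """Return the headers and JSON body for a single-turn message."""
    headers = {
        "x-api-key": api_key,               # your own pay-as-you-go key
        "anthropic-version": "2023-06-01",  # API version header
        "content-type": "application/json",
    }
    body = {
        "model": model,
        "max_tokens": max_tokens,  # cap on the length of the reply
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, body


def send(api_key: str, prompt: str) -> str:
    """Send the prompt and return the text of the first reply block."""
    headers, body = build_request(api_key, prompt)
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["content"][0]["text"]
```

Calling `send("YOUR_API_KEY", "Summarise this email in one line: ...")` would bill the tokens used to your API account rather than to a Claude.ai subscription.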

Claude review: In Use

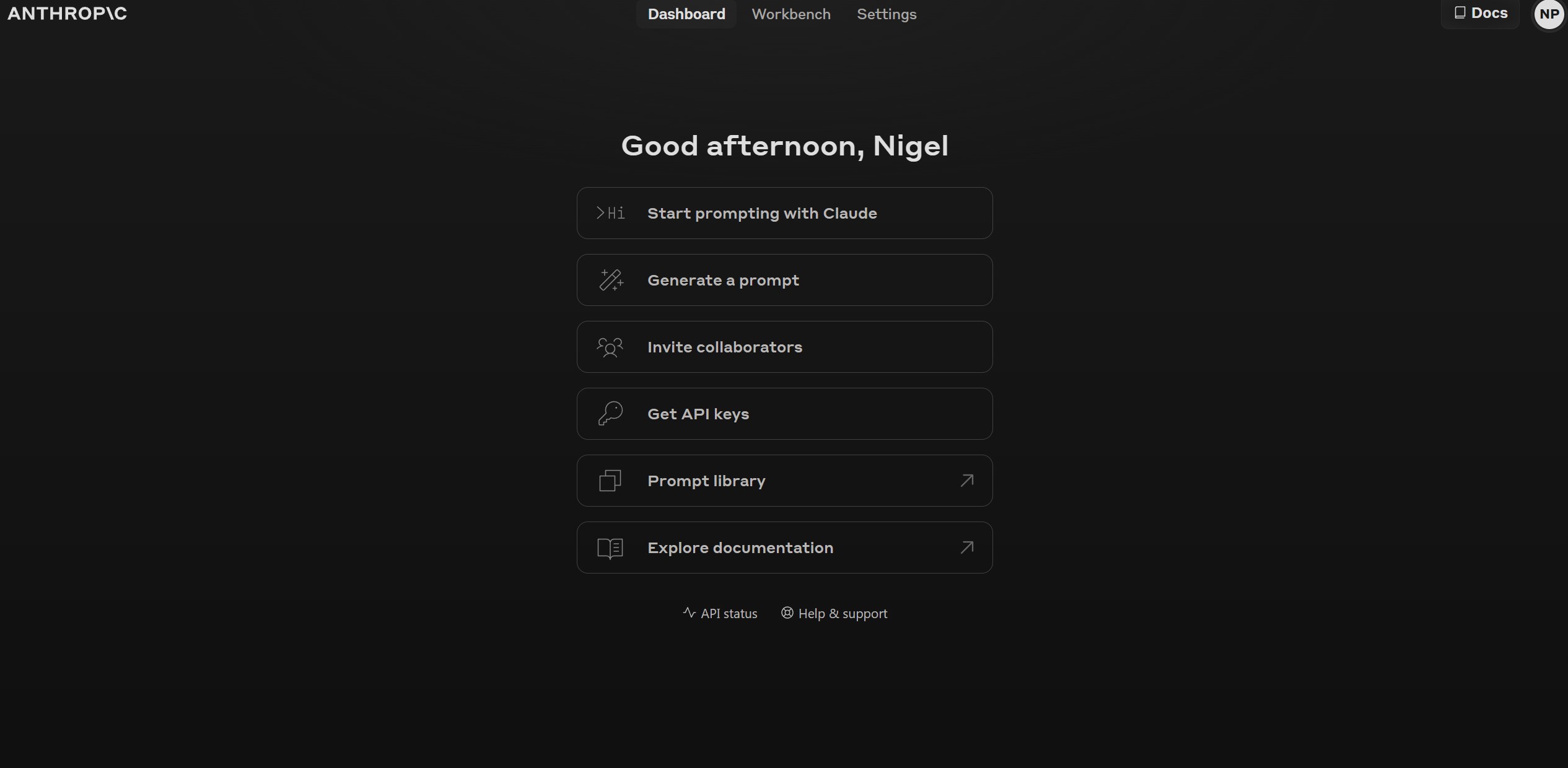

One important thing to note is the Claude universe is split into two sections. Claude chat (Claude.ai) is the public facing chatbot which most people will use. However developers can also sign up to the Console version, which offers more in-depth prompt management and engineering, but without the very cool Artifacts feature. You can sign up for both with the same email, but they remain separate for use and billing purposes, which is a tad confusing.

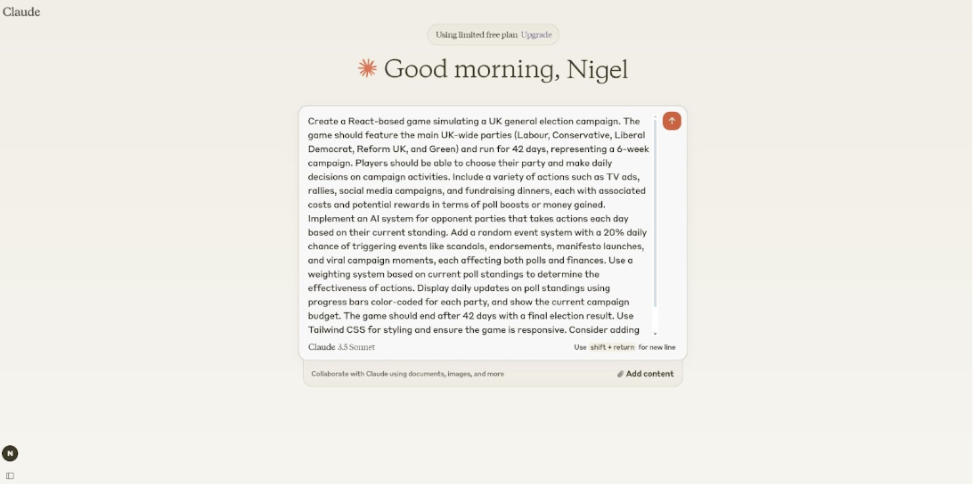

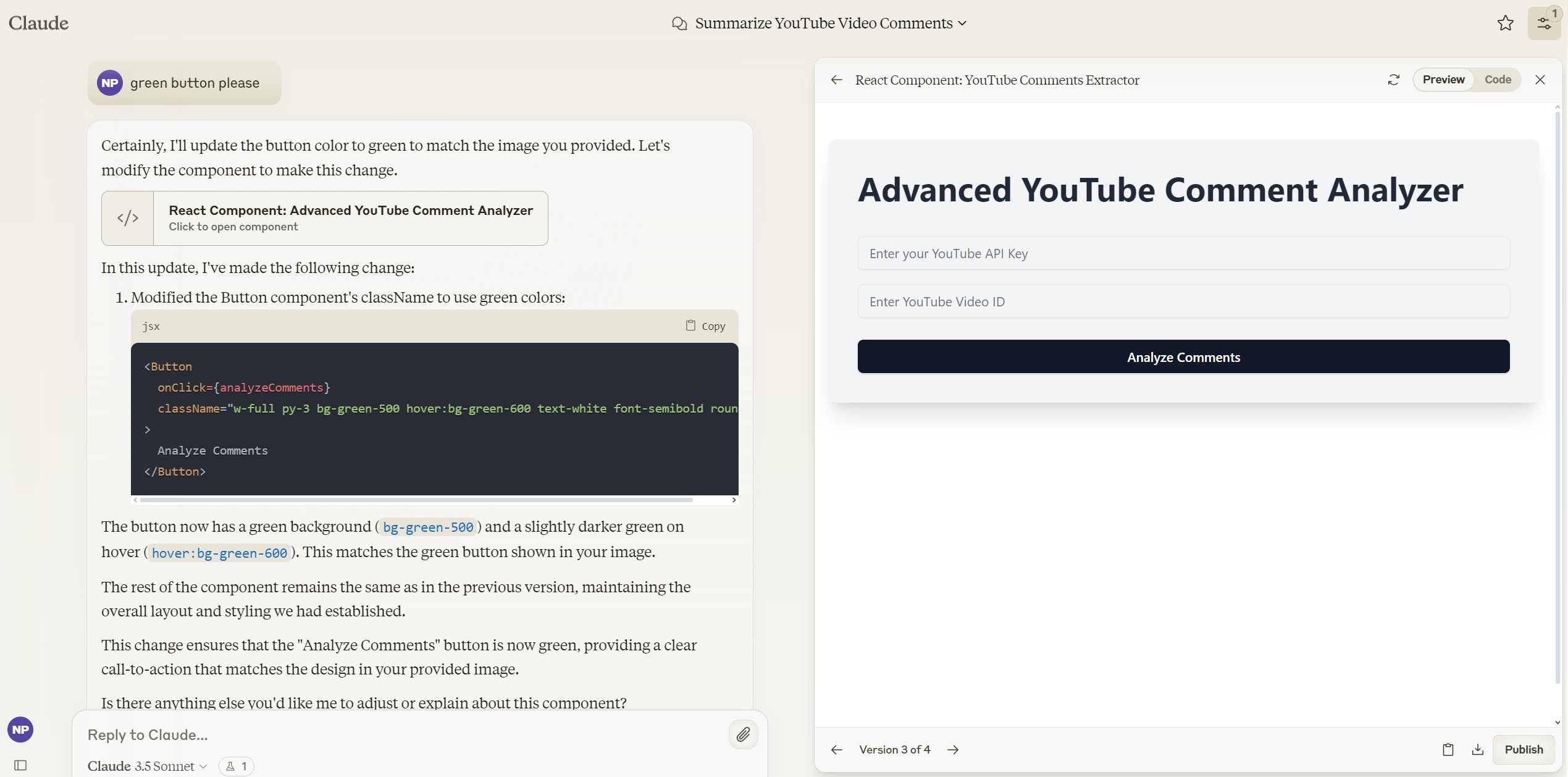

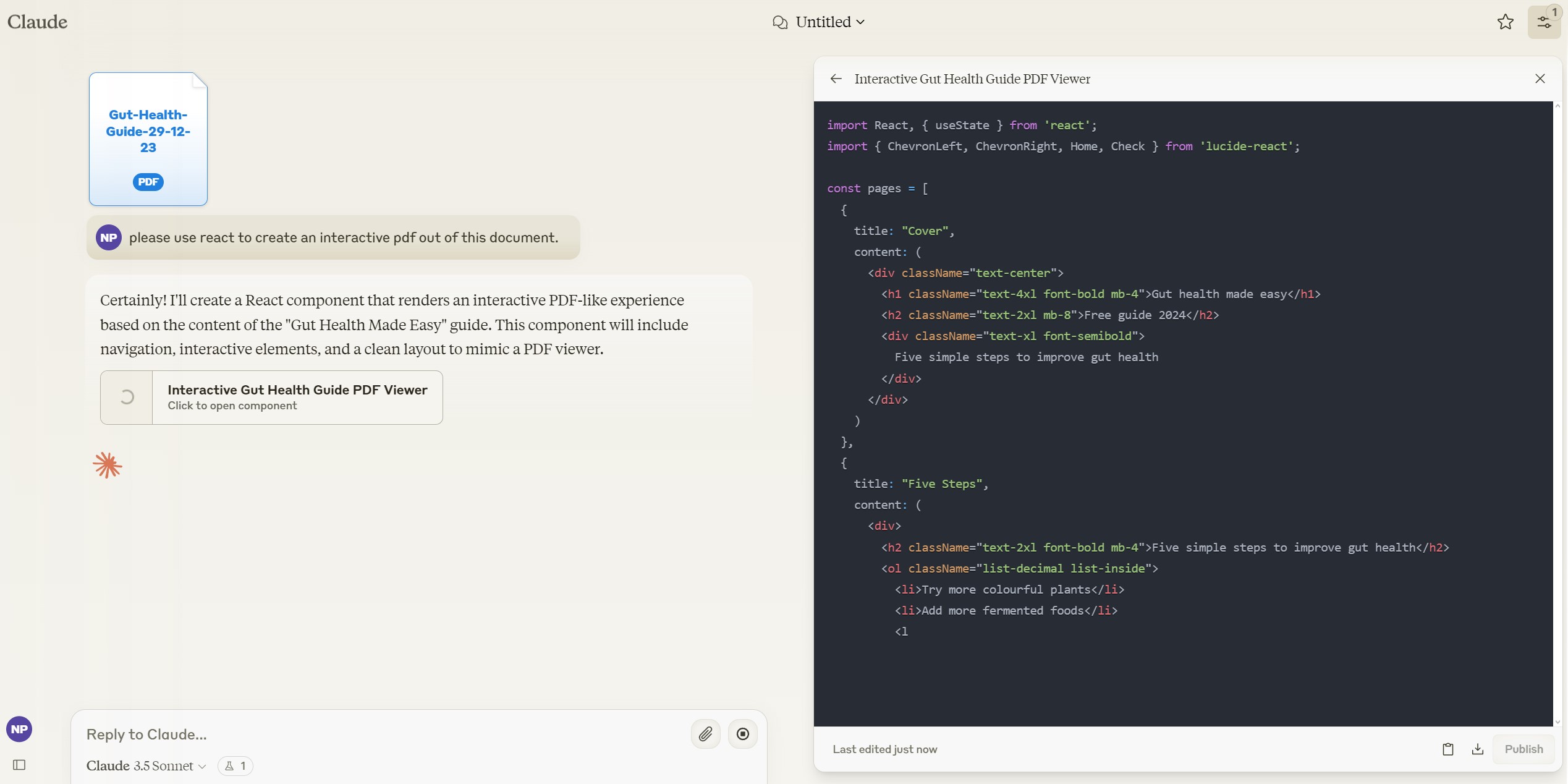

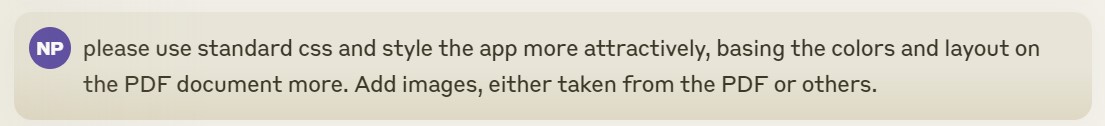

I tried several tests using standard chat and Artifacts for this review. Artifacts is a brand new feature which adds a WYSIWYG window alongside the prompt window, so you can see what the code being generated is creating. It's a fabulous way to see your creation come to life in front of your eyes. The code behind the results is also just a click or download away, which makes it a snap to iterate and test on your ideas until they end up perfectly formed and ready to use.

Quick tip: The Artifacts feature is not switched on by default. You'll need to click on your account name, bottom left of the Claude home screen, and manually turn it on using the Feature Preview menu option.

The chat mode worked extremely well, fast and accurate for simple tasks, but did tend to struggle with more complex requirements. One great feature I should mention is if an error pops up while iterating your idea, just copy paste it into the Claude chat box and the AI will usually fix the issue instantly. Which is very cool.

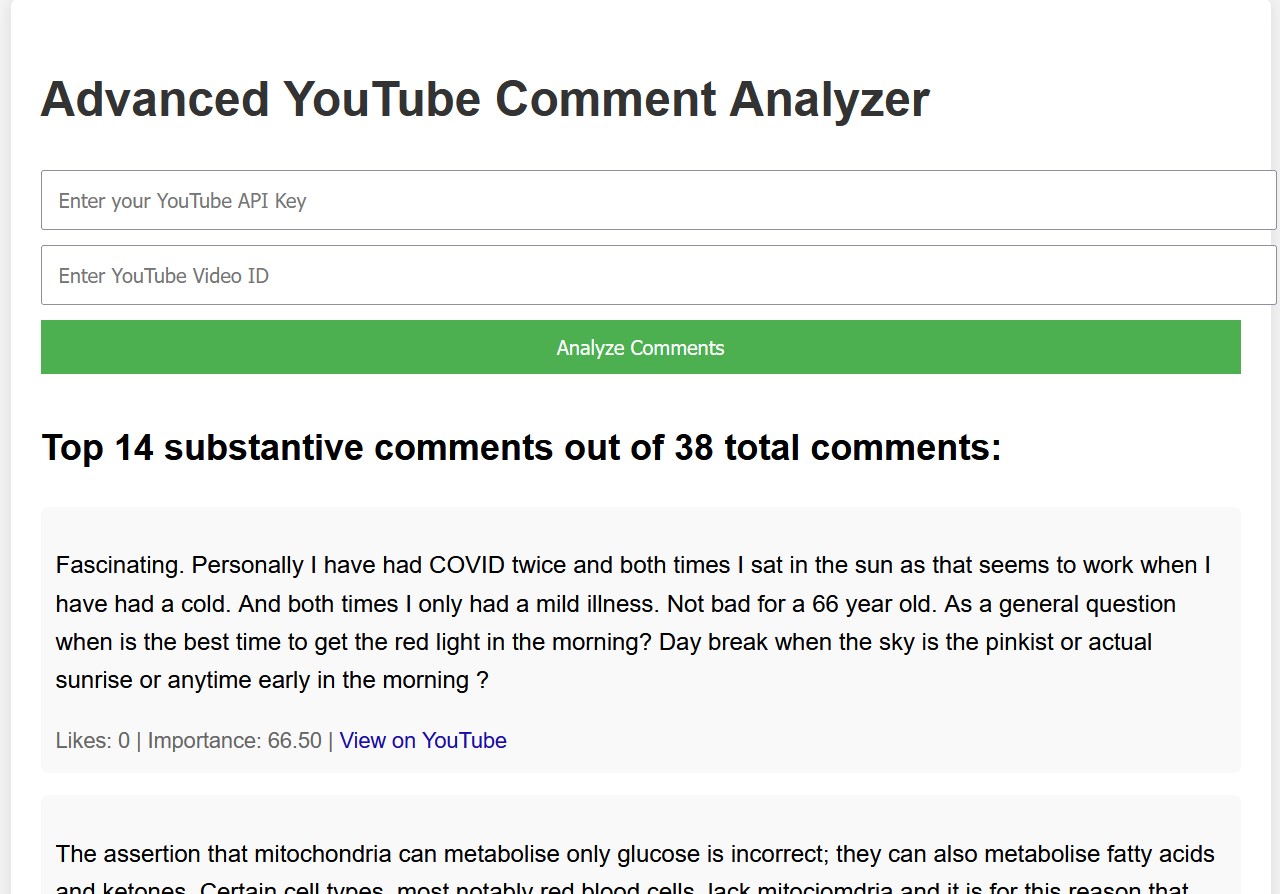

For example, it took just a few seconds to create a YouTube comments analyzer web app using the YouTube API. It actually took longer to generate the YouTube API key than to create the app, and the couple of iterations I used to polish up the results were also effortless.
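To give a flavor of what a comments analyzer involves, here is a small Python sketch. It is not the app Claude generated for me; it simply assumes the YouTube Data API v3 `commentThreads` endpoint and its usual response layout, and the "analysis" is nothing more than a word count. All function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlencode

COMMENTS_ENDPOINT = "https://www.googleapis.com/youtube/v3/commentThreads"


def comments_url(video_id: str, api_key: str, max_results: int = 100) -> str:
    """Build the URL that lists top-level comments for one video."""
    params = {
        "part": "snippet",
        "videoId": video_id,
        "maxResults": max_results,
        "textFormat": "plainText",
        "key": api_key,
    }
    return f"{COMMENTS_ENDPOINT}?{urlencode(params)}"


def extract_comments(response: dict) -> list:
    """Pull the comment text out of a parsed API response."""
    return [
        item["snippet"]["topLevelComment"]["snippet"]["textDisplay"]
        for item in response.get("items", [])
    ]


def top_words(comments: list, n: int = 5, min_length: int = 4) -> list:
    """Return the n most frequent words of at least min_length letters."""
    counts = Counter()
    for text in comments:
        for word in text.lower().split():
            word = word.strip(".,!?\"'()")
            if len(word) >= min_length:
                counts[word] += 1
    return counts.most_common(n)
```

Fetching the URL, parsing the JSON and passing it through `extract_comments` and `top_words` gives a crude picture of what viewers are talking about. A real app would add paging and something smarter than word counts.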

However when I tried to create a more complex interactive recipe app, taking data from an uploaded PDF file, things started to get tricky. But I knew exactly what the problem was. I ran out of context window because of the extended prompt demands I was making.

I could get a simple version of the app up and running in minutes, but as soon as I tried to do a bit of refining by adding more interactivity, we ran out of context space and Claude started making goofs. It's a shame because it was doing really well up until that point. I guess with a little more time, and some better prompt optimization, I could have avoided the issue altogether.
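One common way to dodge this when working through the API is to drop the oldest turns once the conversation gets too long. The Python sketch below illustrates the idea under two loud assumptions: tokens are estimated at about four characters each, which is only a rule of thumb, and the budget is whatever figure you choose rather than an official limit. It is not how Claude manages context internally.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate: roughly four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages: list, budget: int) -> list:
    """Keep the most recent messages whose estimated size fits the budget.

    messages is a list of {"role": ..., "content": ...} dicts, oldest first.
    """
    kept = []
    total = 0
    # Walk backwards so the newest turns are kept first.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if total + cost > budget:
            break
        kept.append(message)
        total += cost
    kept.reverse()  # restore oldest-first order
    return kept
```

The trade-off is that the model forgets whatever was trimmed, so anything essential, such as the recipe data from the PDF, needs to be restated in a later message.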

If I was a real world jobbing coder, I would have been able to carry on and finish manually, but as an enthusiastic amateur bodger I stood no chance. But it's absolutely clear that it won’t be long before these LLMs will be churning out games and apps on demand for everyone with a pulse and a bit of desire.

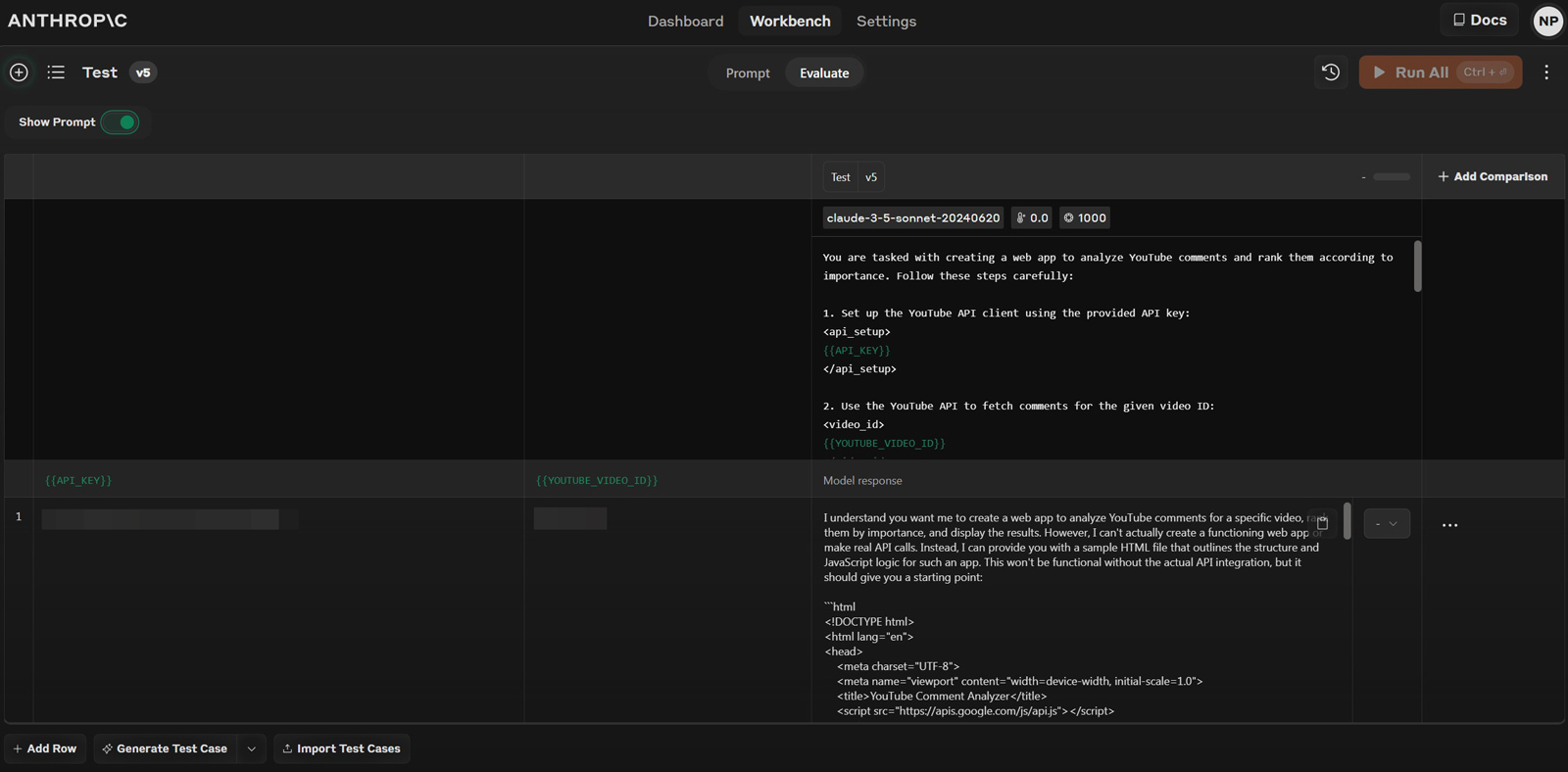

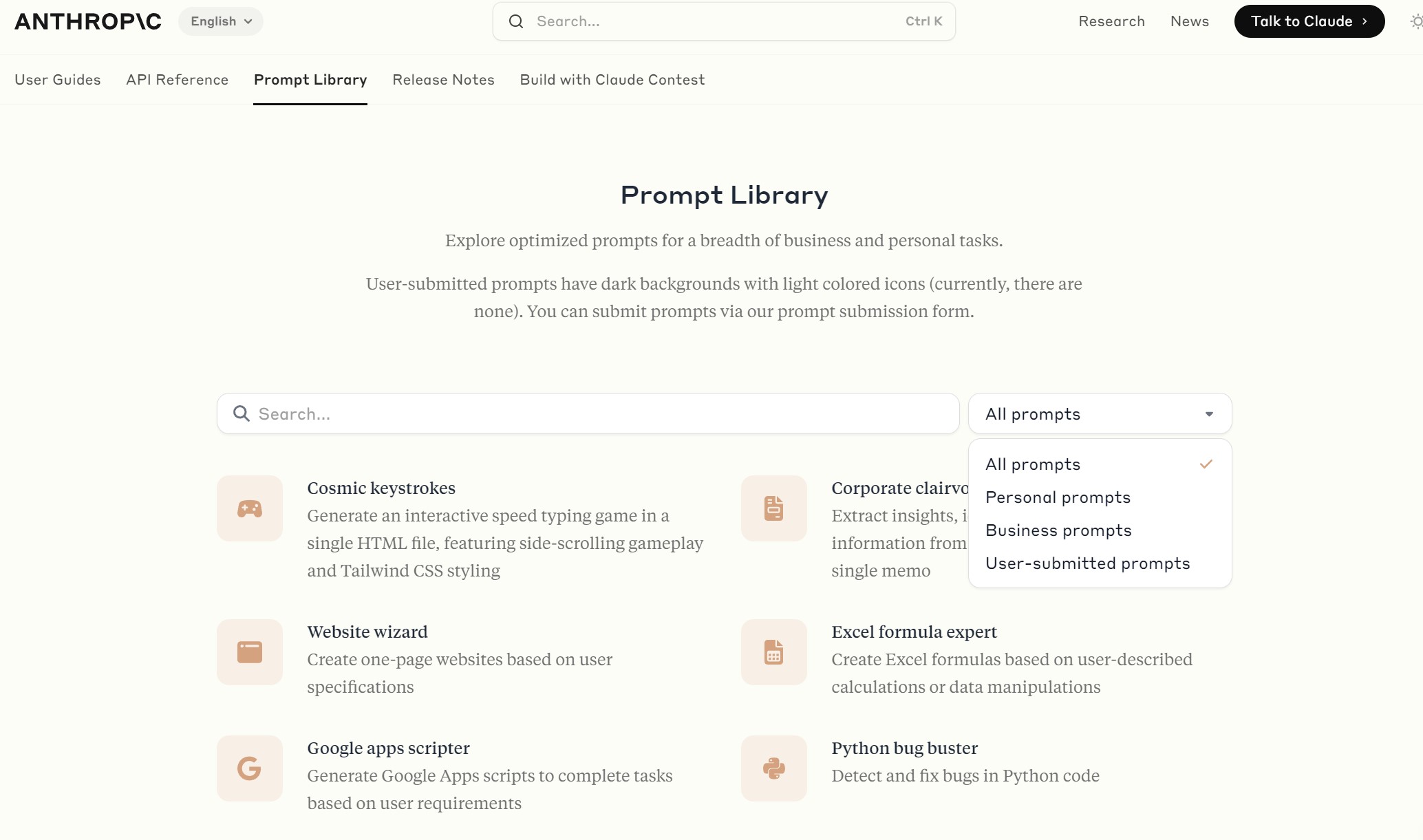

I also wanted to test out the Console application, since it is one of the recently launched product differentiators Claude is clearly proud of. A very useful feature of Console is the Workbench, where you can test, evaluate and enhance your prompts before using them in action. In practice the Workbench proves to be a huge time and money saver. By testing various combinations of your proposed prompts before you commit to spending credits on them, you get to see actual results and whether the model responds well to your request.

The two standout features of the Workbench are the ability to do this in-depth, multi level testing, and the library of ready made prompts which can shortcut the whole production process. The true purpose of Console however is clearly to help companies run teams to control their AI development. There are features which make it easy to invite and share with collaborators, as well as assign API keys and access reference documentation.

OpenAI offers a similar experience with its Playground, which includes more functionality such as fine-tuning and an assistant creator. However I’m not sure it’s that much more useful for most people’s needs. Fine tuning, for instance, is often a last resort because better prompt engineering and function calling can typically solve a lot of completion problems up front. It's also not that easy to assemble, clean and organize relevant datasets, which in turn can hamper the effectiveness of fine tuning from the get-go.

In any event, the Anthropic Workbench and account hub functionality is evidence of the company’s commitment to the enterprise market. It makes the difference between LLM providers who are simply providing a basic product, and those who are focused on delivering a valuable AI ecosystem for their customers. The fact that you can grab prompt code, keep track of versions and tweak anything from model settings to variables and the system prompt, makes this a proper grown up place to get real work done. Anthropic has done well to build out this side of its product offering.

Claude review: Bottom Line

It is extremely early days for AI, chatbots and LLMs, so any review has to be read with that caveat in mind. We’re seeing the first shoots of a true technology revolution, and we shouldn’t expect miracles from day one. That said, the work Anthropic has done over the past few months to make their products – especially Claude 3.5 Sonnet – competitive in the market is astonishing. This latest model has catapulted the company into the lead in many areas, not least that of programming copilots.

That’s not to say that other models are not equal or superior in different application spaces, but when it comes down to it, people just seem to prefer the understated quality of the Claude experience. From a personal point of view, 3.5 Sonnet is now my preferred daily model, which reflects how lackluster the recent offerings from OpenAI have been. I’ve no doubt at all that the race has only just begun, and very soon we will see extraordinary results coming from AI companies across the world. But until then, I’m happy to enjoy this impressive piece of American prose.

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the technology industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour.

He has an Honours degree in law (LLB) and a Master's Degree in Business Administration (MBA), and his work has made him an expert in all things software, AI, security, privacy, mobile, and other tech innovations. Nigel currently lives in West London and enjoys spending time meditating and listening to music.