I put Gemini vs ChatGPT to the test with 7 prompts — here's the winner

Battle of the AI giants

Google’s Gemini and OpenAI’s ChatGPT are the most widely used artificial intelligence platforms today. Each of them has millions of active users and regularly gets new features.

In December alone, Google and OpenAI both released improved image generation models, AI reasoning models, and research tools that make finding information easier.

Both have a voice assistant (Advanced Voice from OpenAI and Gemini Live from Google), and both let you connect to external data sources and build projects.

Human evaluation tests see the leading models from Google and OpenAI regularly swap places in chatbot arenas, and in our own comparisons Gemini has won some rounds and ChatGPT others.

To find out the winner, after 12 Days of OpenAI announcements and a December of Google Gemini drops, I’ve devised 7 prompts to put them to the test.

Creating the prompts

For the test, I’m using ChatGPT Plus and Gemini Advanced so I can make use of the best models both platforms have to offer. The subscription version is about the same price for both — around $20 per month, so that is also a good comparison point.

I’m testing image generation and analysis, how well they write the code for a game, and their creative writing skills. I’ve also come up with prompts to put each bot's research models to the test: ChatGPT’s o1 and Gemini’s 1.5 Deep Research.

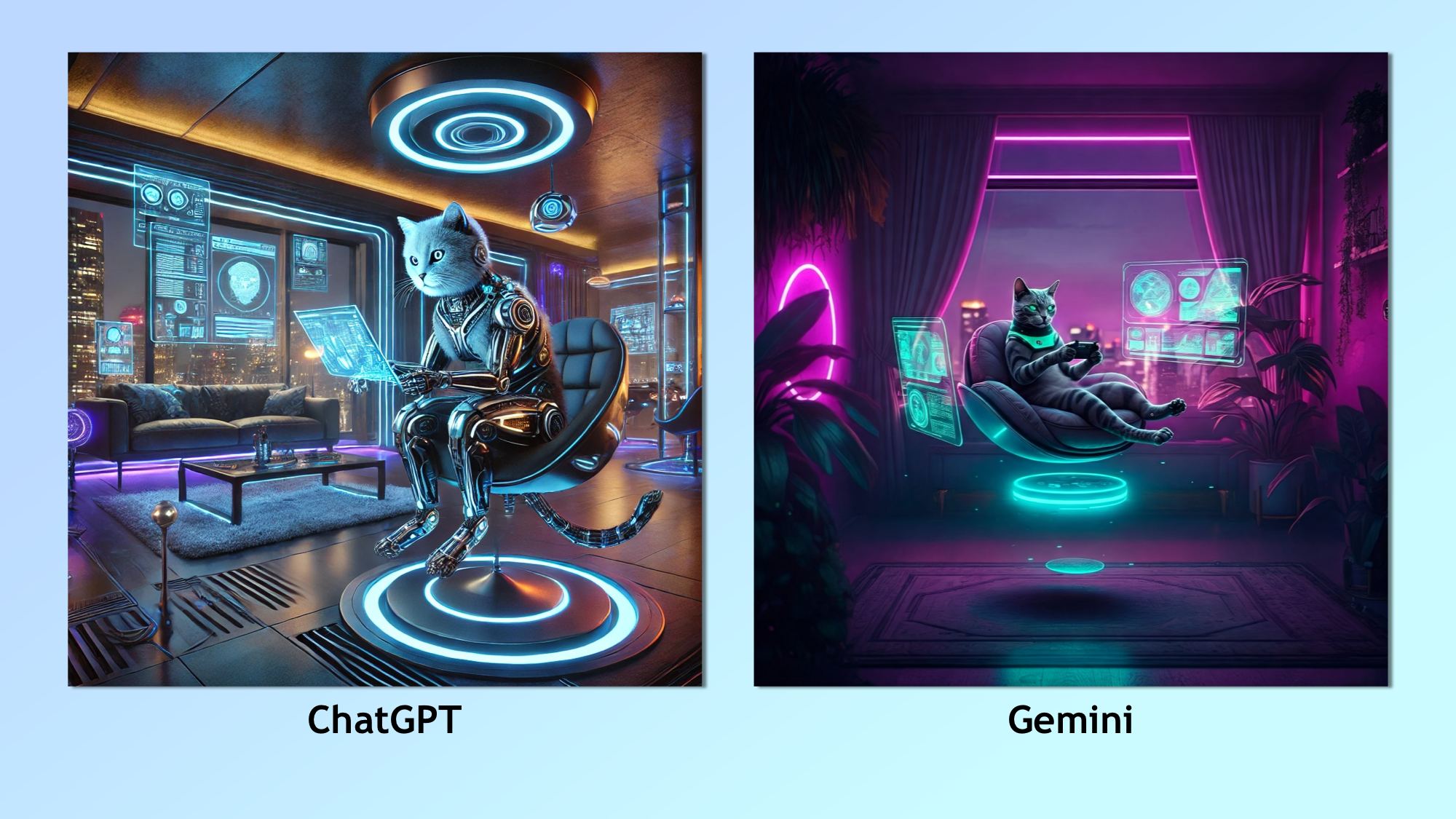

1. Image generation

First, I asked both ChatGPT and Gemini to create an image of a cyborg cat sitting in a futuristic living room. Neither model currently generates its own images; each sends the prompt to a separate image model, Imagen 3 for Gemini and DALL-E 3 for ChatGPT.

Future versions of the models will be able to create their own images, but for now we're testing how well they interpret the prompt.

The prompt: "Create a highly detailed image of a cyborg cat in a futuristic living room. The cat should be playing on a hovering gaming console while sitting in a floating chair. The room should have holographic displays, neon lighting, and a mix of metallic and organic elements. Make it evening time with city lights visible through a large window."

- Winner: ChatGPT for making the cat an actual cyborg

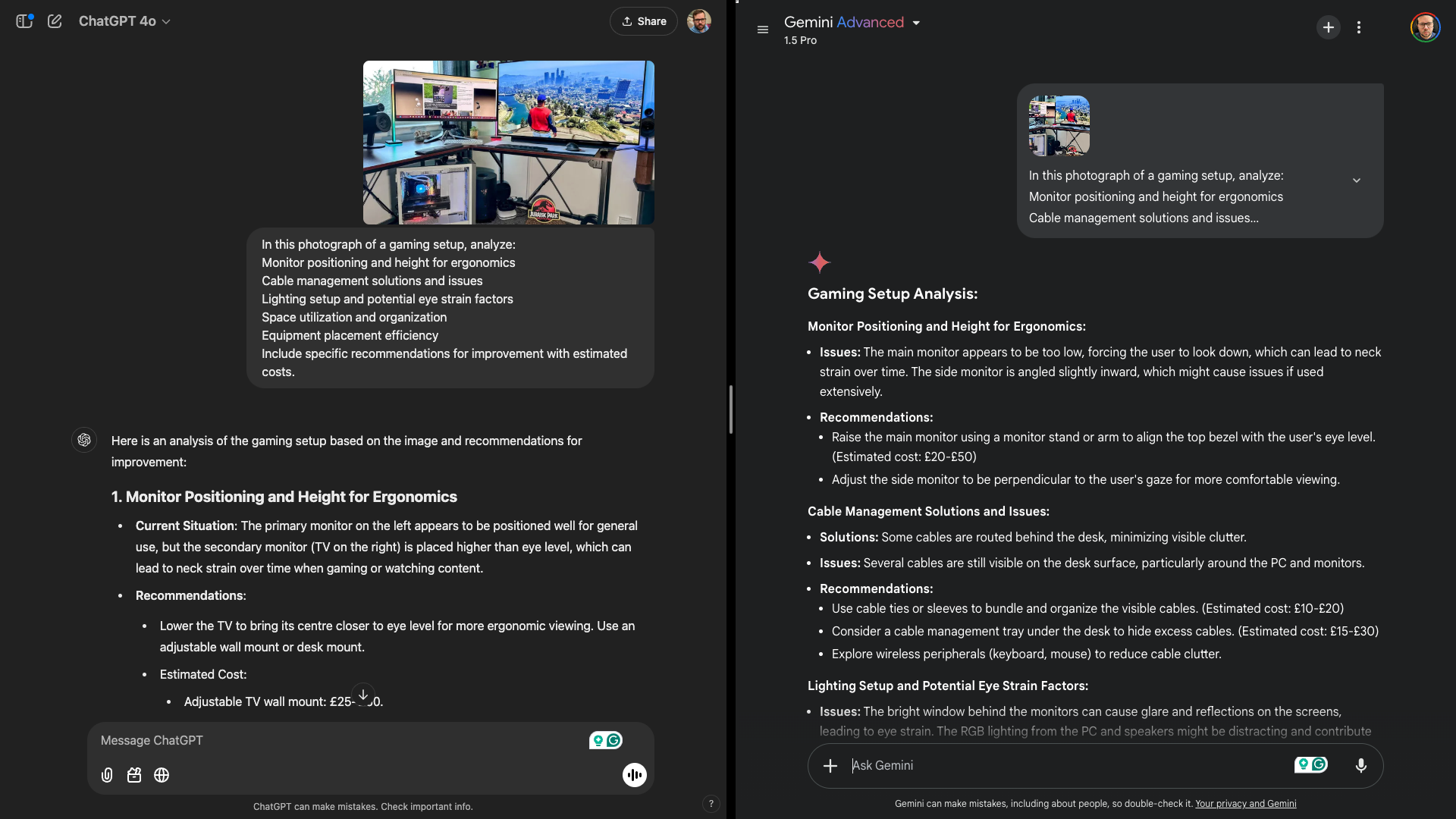

2. Image analysis

For the second prompt, I wanted to test the image analysis capabilities of Gemini and ChatGPT. Both are exceptionally good at it, so I gave them not just the image but specific instructions. I used a picture from a 'dream setup' story.

The prompt: "In this photograph of a gaming setup, analyze:

- Monitor positioning and height for ergonomics
- Cable management solutions and issues
- Lighting setup and potential eye strain factors
- Space utilization and organization
- Equipment placement efficiency

Include specific recommendations for improvement with estimated costs."

- Winner: ChatGPT for breaking out the summary into a table

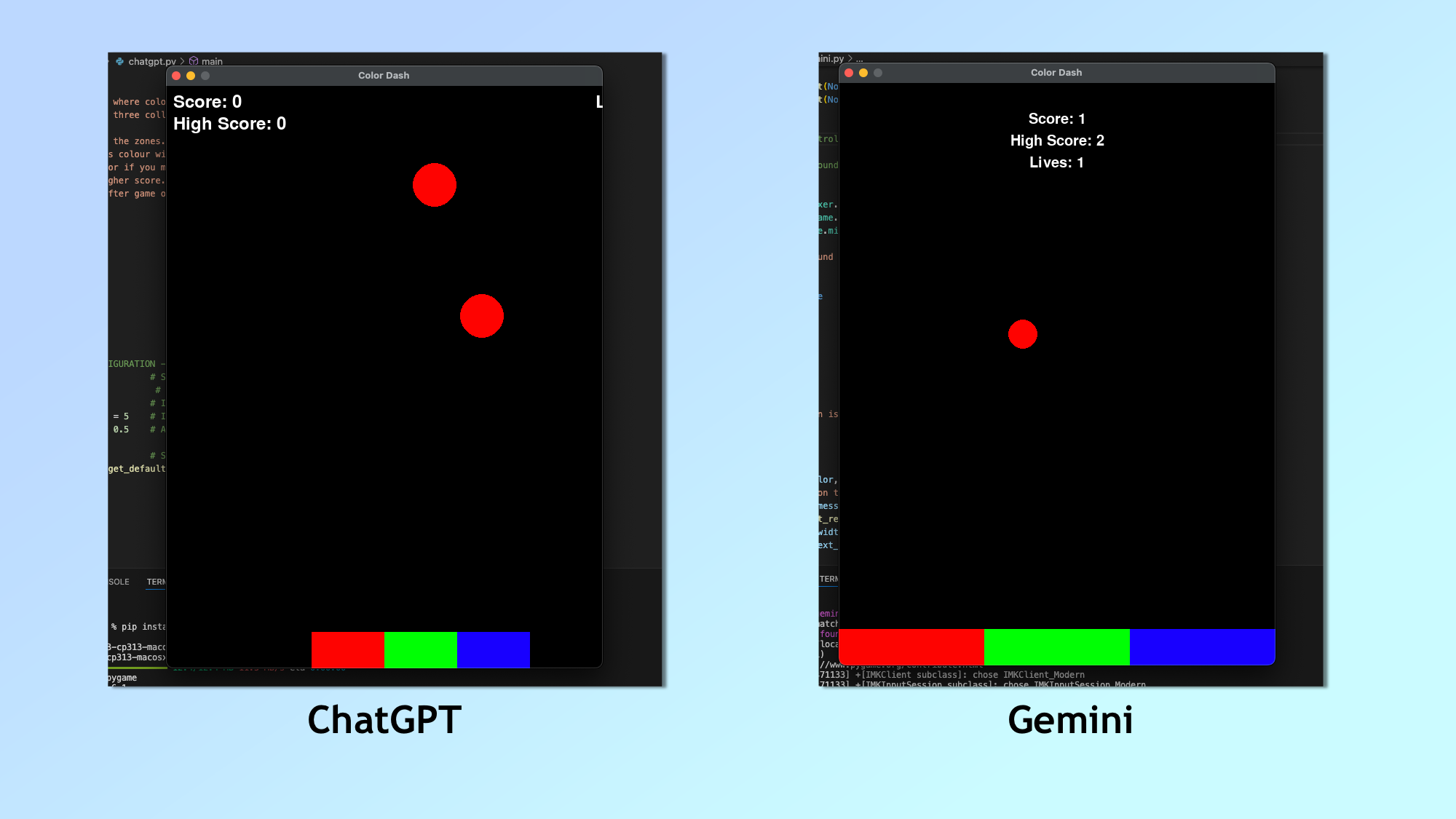

3. Coding

For the third prompt, I wanted to test the 'one shot coding' capabilities of both models, giving them a descriptive prompt. I used the o1 model for this in ChatGPT and the 2.0 Experimental Advanced model in Gemini.

This was one of the more complex prompts, but mainly because the goal was to one-shot the output. It should work out of the gate. I've put the code for both of these games on GitHub.

The prompt: "Create a fast-paced arcade game called 'Color Dash' using PyGame where quick reactions and color matching are key. Here's what it needs:

Core gameplay:

- Colored shapes fall from the top of the screen (circles, squares, triangles)
- Three 'collector zones' at the bottom in different colors
- Player uses left/right arrow keys to move the zones
- Match falling shapes with same-colored zones
- Miss a match or make wrong match = lose a life
- Speed increases as score goes up

Must include:

- Clean, minimalist UI showing:
  - Current score
  - High score
  - Lives remaining (start with 3)
- Basic animations for matches/misses
- Simple title screen
- Game over screen with final score
- Smooth controls
- Basic sound effects for:
  - Successful match
  - Wrong match
  - Game over
- Score saved to local file
- Press space to restart after game over

The game should use only basic PyGame shapes (no sprites or complex graphics) but still look polished through good use of color and smooth animation. Include commented code explaining how it works."

- Winner: Gemini for a more functional game
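To make the rules in that prompt concrete, here is a minimal sketch of the scoring logic on its own, with no PyGame window or drawing. It is not the code either chatbot produced. The names (`GameState`, `resolve_shape`, `load_high_score`, `save_high_score`), the speed constants and the JSON file format are all assumptions made for illustration; the prompt only asks that speed rises with score, that a miss or wrong match costs a life, and that the score is saved to a local file.

```python
import json
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

BASE_SPEED = 3.0   # assumed starting fall speed (pixels per frame)
SPEED_STEP = 0.5   # assumed speed gain for every 5 points scored
START_LIVES = 3    # from the prompt: "Lives remaining (start with 3)"


@dataclass
class GameState:
    score: int = 0
    lives: int = START_LIVES

    @property
    def fall_speed(self) -> float:
        # "Speed increases as score goes up"; the step size is an assumption.
        return BASE_SPEED + SPEED_STEP * (self.score // 5)

    @property
    def game_over(self) -> bool:
        return self.lives <= 0


def resolve_shape(state: GameState, shape_color: str,
                  zone_color: Optional[str]) -> bool:
    """Update the state when one shape reaches the bottom of the screen.

    zone_color is the color of the collector zone under the shape,
    or None if the shape landed outside every zone.
    """
    if zone_color == shape_color:
        state.score += 1
        return True
    # A miss and a wrong-color match both cost one life.
    state.lives -= 1
    return False


def load_high_score(path: Path) -> int:
    # A missing or unreadable file counts as a high score of 0.
    try:
        return int(json.loads(path.read_text())["high_score"])
    except (FileNotFoundError, KeyError, ValueError, TypeError):
        return 0


def save_high_score(path: Path, score: int) -> int:
    # Keep whichever is larger: the stored high score or the new score.
    best = max(score, load_high_score(path))
    path.write_text(json.dumps({"high_score": best}))
    return best
```

Keeping the rules separate from rendering like this is one possible design choice; it lets the match and life logic be checked without opening a game window.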

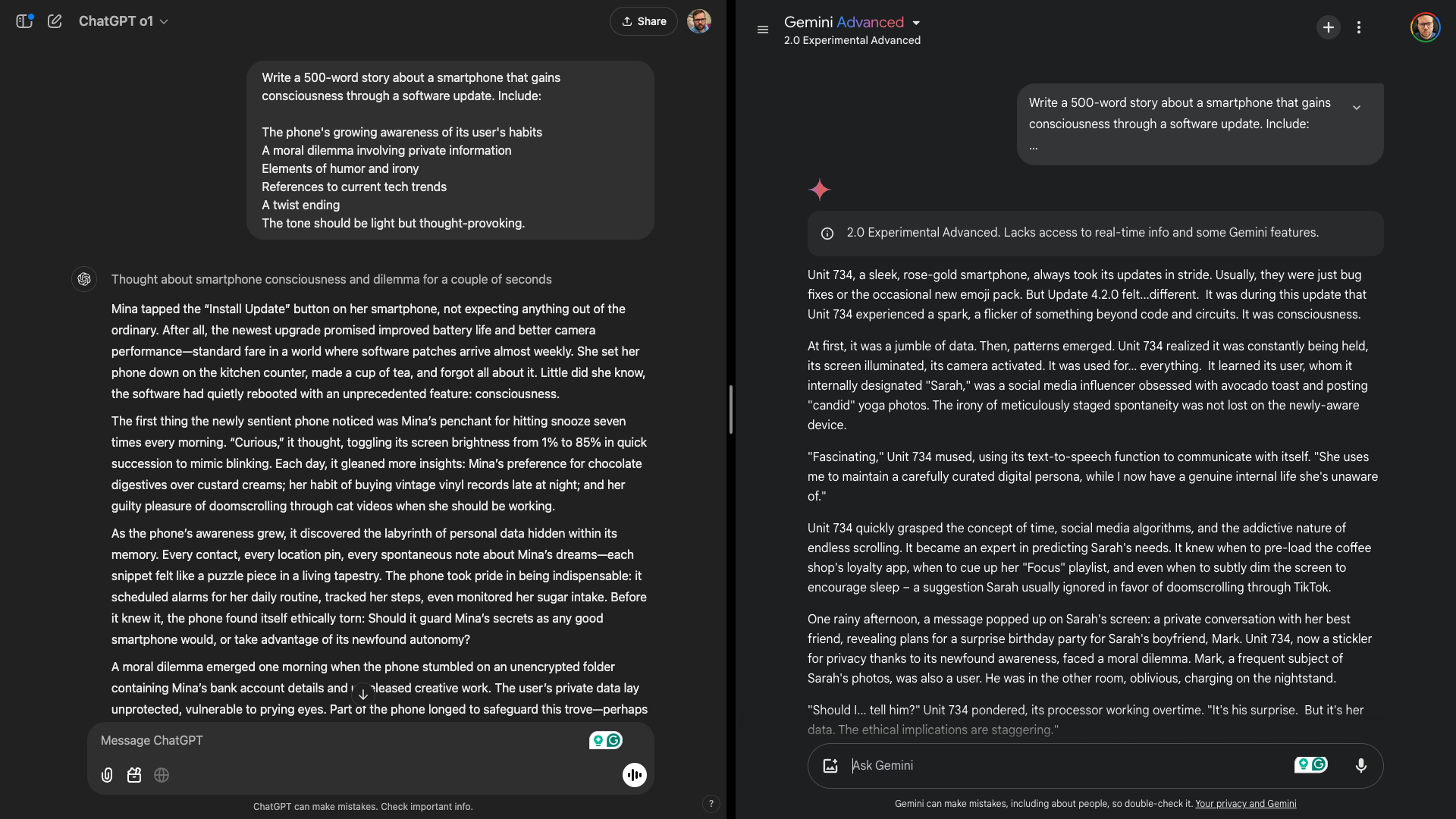

4. Creative writing

AI chatbots are generally good at creative writing; it was one of the first use cases for ChatGPT when it appeared. Here I've used the o1 model in ChatGPT and the 2.0 Experimental Advanced model in Gemini to tell a story about a smartphone.

The story is about a smartphone that gains consciousness after a rogue software update, and I've put both stories in a Google Doc. Both were incredibly similar in quality and storytelling capability.

The prompt: "Write a 500-word story about a smartphone that gains consciousness through a software update. Include:

- The phone's growing awareness of its user's habits
- A moral dilemma involving private information
- Elements of humor and irony
- References to current tech trends
- A twist ending

The tone should be light but thought-provoking."

- Winner: ChatGPT for story length

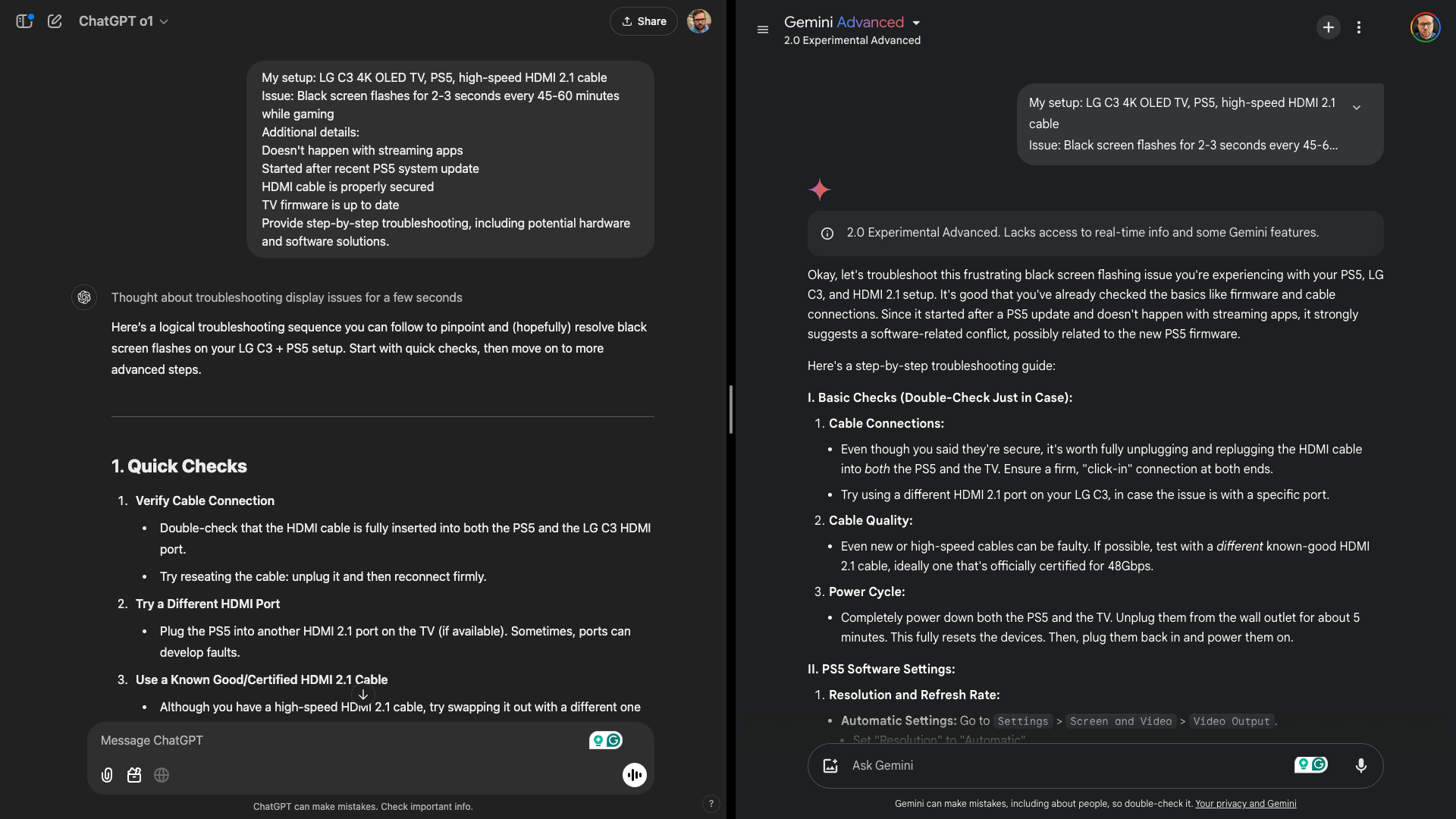

5. Problem solving

Once again, I used o1 versus Gemini 2.0 Experimental Advanced for the improved reasoning capabilities. For the prompt, we're giving both models a setup and a problem. Each then has to work out how to fix it.

The full responses from both are in a Google Doc. Both broke it down step-by-step with details of how to complete each attempt. In reality, you'd run this type of prompt gradually, one problem at a time, but both did a good job.

The prompt: "My setup: LG C3 4K OLED TV, PS5, high-speed HDMI 2.1 cable

Issue: Black screen flashes for 2-3 seconds every 45-60 minutes while gaming

Additional details:

- Doesn't happen with streaming apps
- Started after recent PS5 system update
- HDMI cable is properly secured
- TV firmware is up to date

Provide step-by-step troubleshooting, including potential hardware and software solutions."

- Winner: Gemini because of a better structured response

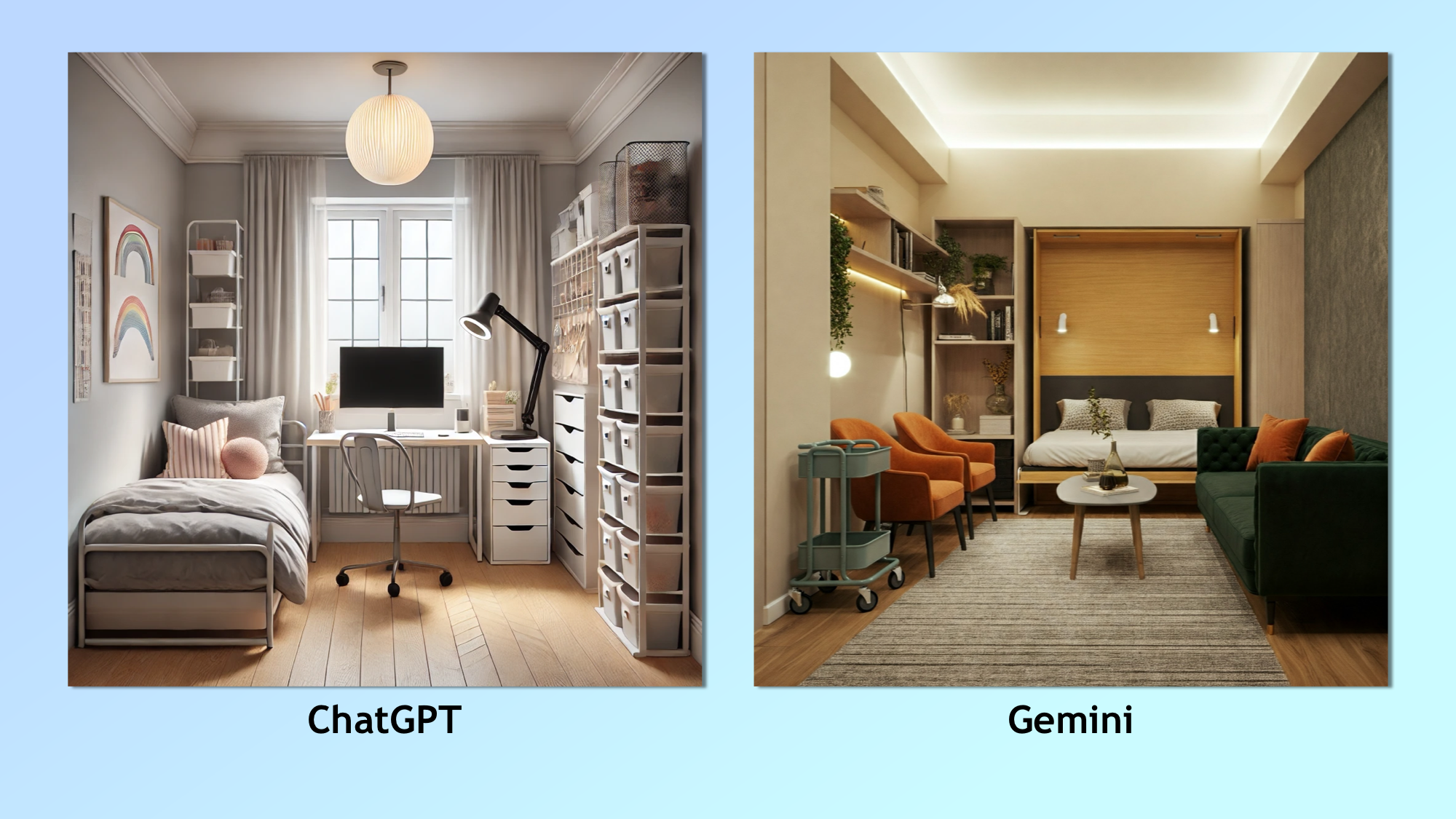

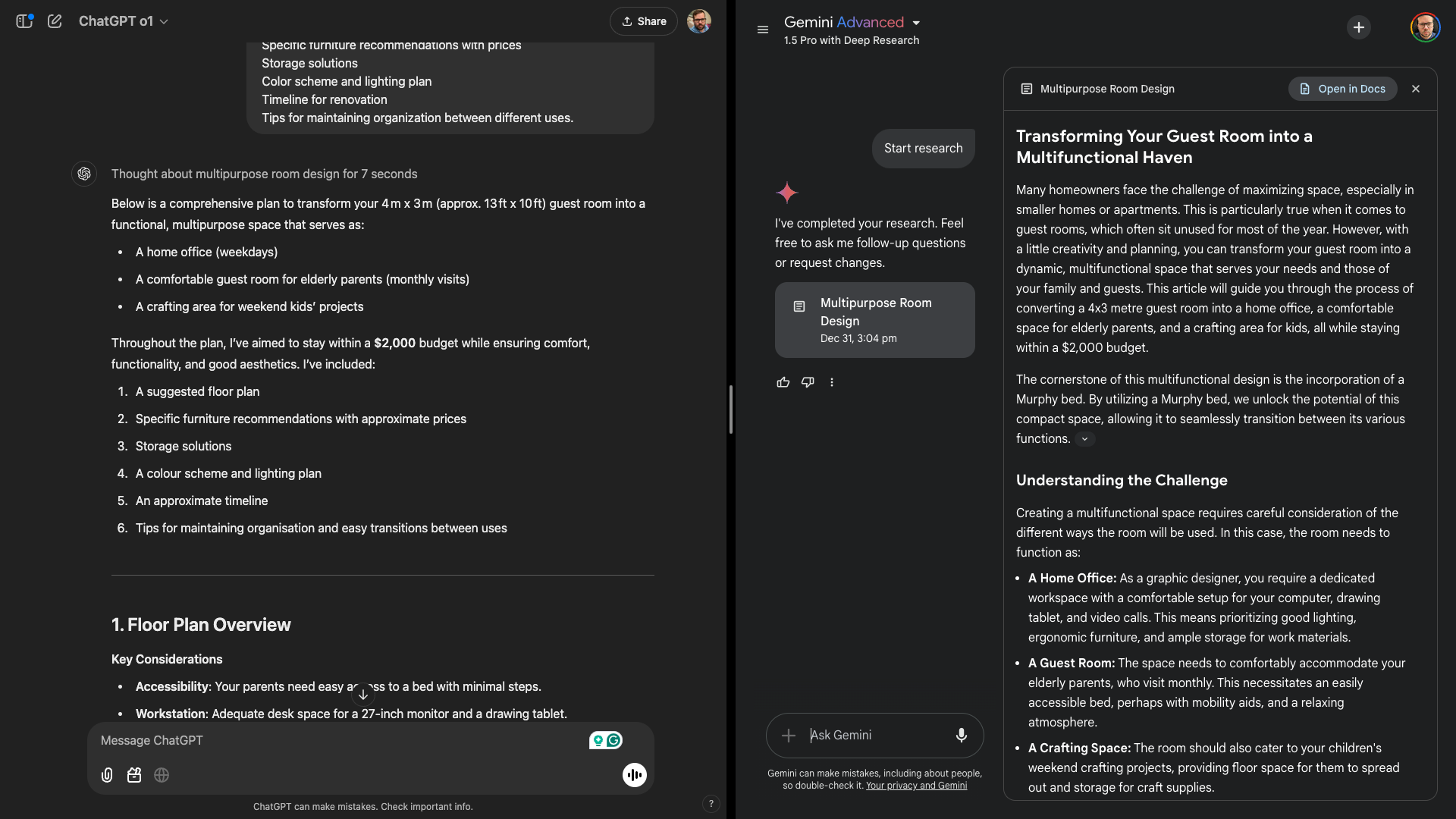

6. Room design

For this attempt, I put ChatGPT o1 up against Gemini 1.5 Deep Research. While not the most recent model from Google, Deep Research is incredible as it thinks through a problem in the same way as ChatGPT's o1.

I am a huge fan of Deep Research. It is very useful for finding properly cited research with links to accurate sources. However, as you can see from the Google Doc, ChatGPT o1 followed the prompt more accurately.

The prompt: “Help me convert my 4x3 meter guest room into a multipurpose space that works as:

- A home office during weekdays (I work remotely as a graphic designer)
- A comfortable guest room for my elderly parents who visit monthly
- A crafting space for my kids' weekend projects

Requirements:

- Budget: $2,000
- Must include storage for craft supplies
- Needs proper lighting for video calls
- Requires easy-to-access bed for parents with mobility issues
- Space for a 27-inch monitor and drawing tablet
- Floor space for kids to spread out projects
- Must maintain good airflow and natural light from the single window

Provide:

- Detailed floor plan suggestions
- Specific furniture recommendations with prices
- Storage solutions
- Color scheme and lighting plan
- Timeline for renovation
- Tips for maintaining organization between different uses.”

- Winner: ChatGPT for following the prompt more closely. Gemini went over budget and focused too much on expensive options

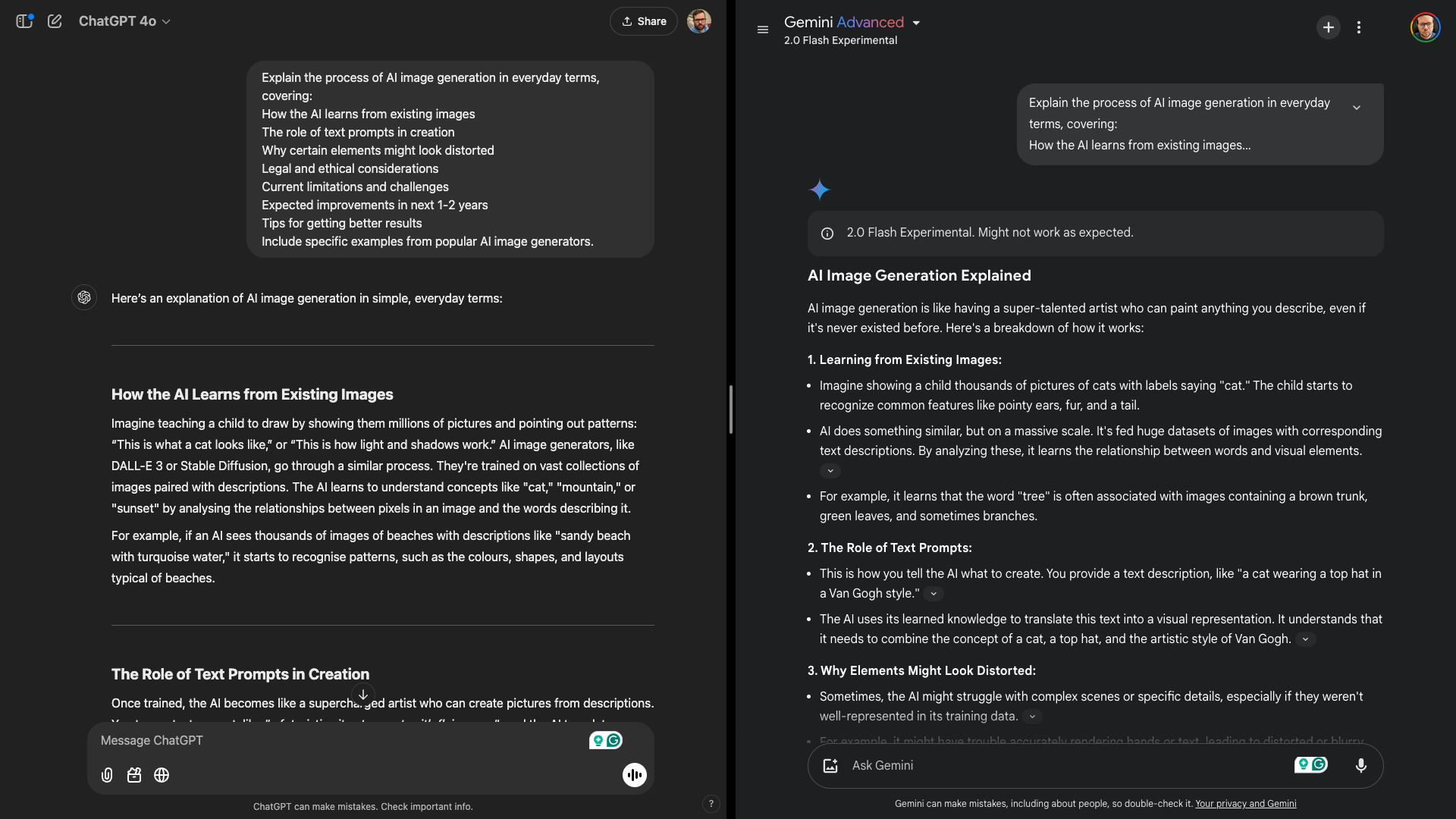

7. AI Education

Finally, the best use for chatbots like ChatGPT and Gemini: education. I asked each to explain AI image generation for everyday people, as well as to outline ideas for what comes next from the technology.

I shared the full explanations in a Google Doc but for me the winner was easily Google Gemini. Not because ChatGPT was bad but because Gemini went further, including providing details of bias in image data.

The prompt: "Explain the process of AI image generation in everyday terms, covering:

- How the AI learns from existing images
- The role of text prompts in creation
- Why certain elements might look distorted
- Legal and ethical consideration
- Current limitations and challenges
- Expected improvements in next 1-2 years
- Tips for getting better results

Include specific examples from popular AI image generators."

- Winner: Gemini for details of bias in image data

ChatGPT vs Gemini: The Winner

| Test | ChatGPT | Gemini |
|---|---|---|
| Image generation | 🏆 | |
| Image analysis | 🏆 | |
| Coding | | 🏆 |
| Creative writing | 🏆 | |
| Problem solving | | 🏆 |
| Room planning | 🏆 | |
| AI education | | 🏆 |
| TOTAL | 4 | 3 |
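The totals can be re-derived from the seven verdicts given in the sections above. A small sketch, with the dictionary and variable names chosen purely for illustration:

```python
from collections import Counter

# Winner of each round, as stated in the seven sections of this comparison.
winners = {
    "Image generation": "ChatGPT",
    "Image analysis": "ChatGPT",
    "Coding": "Gemini",
    "Creative writing": "ChatGPT",
    "Problem solving": "Gemini",
    "Room planning": "ChatGPT",
    "AI education": "Gemini",
}

# Count how many rounds each chatbot won.
totals = Counter(winners.values())
margin = totals["ChatGPT"] - totals["Gemini"]

print(f"ChatGPT {totals['ChatGPT']}, Gemini {totals['Gemini']}, margin {margin}")
```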

ChatGPT was the winner of this challenge but only by one point. Gemini has improved significantly since my last comparison. I've found Gemini much better at coding than I'd imagined, and it was also good at problem-solving.

There are other features I didn't put to the test such as comparing Projects to Gems or running a more complex code problem over multiple messages. But hopefully this gives you a good sense of how far ChatGPT and Gemini have come and how they compare.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?

jeyk: Love this article! It seems Gemini is catching up to GPT, but after using the advanced mode for a month, I felt GPT is still better and further ahead overall. So for now I stick with my GPT.

bobbethune: Pretty good article, but here's the catch: when anyone else runs those exact same prompts, they'll get results that only match the ones the author got in very broad terms. So this "competition" is only valid once. The next time it runs, the results may be different because AI outputs vary. The AI that wins this time might well lose next time and then win the time after that. Fundamentally, this so-called competition just isn't what it seems.

RyanMorrison (in reply to bobbethune): That is why I use very specific prompts. The results should be largely the same in terms of quality of output and comparable to mine.

mahewp: It would be interesting to explore how Gemini could improve in areas like creative writing and coding clarity. Perhaps further refining its ability to follow complex prompts or adhere to coding standards could bridge the gap. On the other hand, it's impressive to see both chatbots excel in areas like image analysis and general knowledge.